Robots with a Vision

By Vishnu Sivadevan

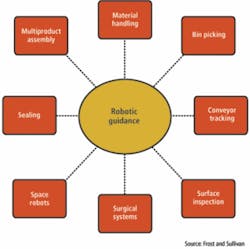

Robots are increasingly being deployed in industrial environments to achieve manufacturing economies, a trend driven by technological advances in robotics. Several important performance parameters of robots have changed dramatically over the past decade. Reliability has improved greatly, and so have the payloads robots can handle and the diversity of applications in which they can be deployed. Computer-vision-based robotic guidance is a significant technology that has facilitated this change (see Fig. 1). In many applications, machine-vision-based feature tracking has replaced encoder-based tracking.

Robotic-vision-guidance systems perform tasks at various levels. They can tell the robot how a part is oriented so that the robot can pick it up accordingly, or confirm that a part is in place for the robot to pick. Vision systems can operate robots in a “see and move” fashion or in real time. In other words, the robot's movements can be determined before it moves, or vision processing can run in parallel while the robot moves continuously. Both 2-D and 3-D vision systems are used in robotic guidance, depending on the complexity and nature of the manufacturing or material-handling operation. Real-time 3-D vision systems are being used to guide autonomous robots.

Significance of vision-based guidance

Robotic-vision-guidance systems are used extensively in material-handling operations. The most basic application is enabling robots to perform pick-and-place operations. The vision system acquires an image and processes it to determine the position of the part, which the robot then uses to pick the part up.
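One common way to get a part's position and orientation from an image is to compute image moments of the part's silhouette. The sketch below is illustrative only and assumes a binary mask (1 = part, 0 = background) produced by an earlier thresholding step that is not shown. The function name `part_pose` is hypothetical.

```python
import math

def part_pose(mask):
    """Return (cx, cy, angle_deg) for the foreground pixels of a 2-D 0/1 mask.

    cx, cy are pixel coordinates (x = column, y = row, y pointing down).
    angle_deg is the orientation of the part's major axis from the x axis.
    """
    # Zeroth- and first-order moments give the area and the centroid.
    m00 = m10 = m01 = 0
    for y, row in enumerate(mask):
        for x, v in enumerate(row):
            if v:
                m00 += 1
                m10 += x
                m01 += y
    if m00 == 0:
        raise ValueError("no part found in mask")
    cx, cy = m10 / m00, m01 / m00

    # Second-order central moments describe the spread around the centroid.
    mu20 = mu02 = mu11 = 0.0
    for y, row in enumerate(mask):
        for x, v in enumerate(row):
            if v:
                dx, dy = x - cx, y - cy
                mu20 += dx * dx
                mu02 += dy * dy
                mu11 += dx * dy
    # Major-axis angle of the equivalent ellipse.
    angle = 0.5 * math.atan2(2 * mu11, mu20 - mu02)
    return cx, cy, math.degrees(angle)
```

Because image y points down, a positive angle appears clockwise on screen. A real system would also convert the pixel result to robot coordinates through calibration.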

Vision systems are also used to locate parts that are presented to the robot in random positions and orientations. In some applications, vision systems guide robots to pick up parts moving on a conveyor. Two-dimensional vision systems remain the most widely used for robotic guidance, but compact 3-D vision cameras have recently begun to be used as well.
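For conveyor tracking, the robot must pick the part where it *will be*, not where the camera saw it. A minimal sketch follows. It assumes the conveyor moves at a constant, known speed along its x axis (for example, measured by an encoder) and that camera and robot share a calibrated coordinate frame. The names `Detection` and `predict_pick_point` are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Detection:
    x_mm: float  # part position along the conveyor at image capture
    y_mm: float  # part position across the conveyor
    t_s: float   # image-capture timestamp, in seconds

def predict_pick_point(det, belt_speed_mm_s, pick_time_s):
    """Return the (x, y) position of the part at pick_time_s.

    Assumes constant belt speed and no slip between part and belt.
    """
    dt = pick_time_s - det.t_s
    if dt < 0:
        raise ValueError("pick time precedes image capture")
    # Only the along-belt coordinate changes as the conveyor moves.
    return det.x_mm + belt_speed_mm_s * dt, det.y_mm
```

For example, a part seen at x = 100 mm moving at 250 mm/s will be at x = 225 mm when the robot arrives 0.5 s later.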

Vision guidance adds value to production lines that involve multiproduct assembly, conveyor tracking, loading and unloading, two-handed work, and assembly of alternate parts. Vision-based guidance is also well suited to 3-D flex assembly, bin picking, and small-lot assembly. Food and pharmaceutical packaging, flexible manufacturing, and multiproduct assembly usually involve complex systems that would benefit from the enhanced automation offered by machine-vision systems (see Fig. 2).

Automotive industry

The automotive industry was one of the first to use automated robotic arms to manufacture and assemble vehicle components. The sector is increasingly using machine vision for part inspection, assembly operations, and robotic guidance. Vision systems guide robots in welding, painting, and part handling. The industry has also benefited from machine vision eliminating the need for part fixturing in pick-and-place robotic operations.

Automotive manufacturers have also faced problems specific to customized vehicles, and vision-guided robots can accommodate these variations. With the aid of 3-D vision, robots can adjust their movements in real time during assembly operations based on what they see. In automobile manufacturing, 3-D vision sensors are enabling robots to perform panel assembly and inspection.

Several innovative applications of robotic guidance are being developed for this sector. Academic researchers and major automotive-reflector manufacturers in Europe have undertaken a project under the European Commission's 5th Framework Competitive and Sustainable Growth program. The project, FINDER (Fast Intelligent Defect Recognition System), has led to the development of technology for automated inspection of headlamp reflectors. It aims to develop a methodology for characterizing and classifying defects on reflectors.

The project also involves developing smart diagnosis software for classifying aesthetic defects. One of its most challenging aspects was handling the reflectors with suction grippers. Development of image-processing, robot-control, and material-handling software is an integral part of the project, and software that determines the best possible gripping points on the reflector is also under development. Further research would involve finalizing the system components, after which the final prototype would be built and approved for industrial use.
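The article does not describe how FINDER selects gripping points. To illustrate the kind of decision such software makes, here is a hypothetical sketch that is not the project's method. It scans a height map of the part for the flattest patch the size of a suction cup, on the assumption that a cup seals best where the surface height varies least. Background pixels are marked `None`, and the function name and inputs are illustrative.

```python
def best_suction_point(height_map, cup_px):
    """Return (row, col) near the centre of the flattest cup_px x cup_px patch.

    height_map: list of rows of heights (e.g. mm); None marks background.
    """
    rows, cols = len(height_map), len(height_map[0])
    best, best_score = None, float("inf")
    for r in range(rows - cup_px + 1):
        for c in range(cols - cup_px + 1):
            patch = [height_map[i][j]
                     for i in range(r, r + cup_px)
                     for j in range(c, c + cup_px)]
            if any(h is None for h in patch):
                continue  # cup would overhang the part edge and lose vacuum
            score = max(patch) - min(patch)  # height variation under the cup
            if score < best_score:
                best_score = score
                best = (r + cup_px // 2, c + cup_px // 2)
    if best is None:
        raise ValueError("no patch lies fully on the part")
    return best
```

A production system would probably also weigh surface curvature, distance from the part's centre of mass, and areas that must not be touched, such as the reflective surface being inspected.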

Bin picking

The main challenge of robotic bin picking is picking parts from a bin regardless of how each part is oriented. The machine-vision system senses the orientation of the object in the bin, and the robot's gripper is positioned accordingly to pick the part. 2-D machine vision is not capable enough to enable robots to perform bin picking. 3-D machine vision copes better with changes in ambient lighting, which make it difficult to identify parts in a bin (see Vision Systems Design, January 2008, p. 33).
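Once the vision system has found a part's pose in the camera's coordinate frame, that pose must be expressed in the robot's base frame before the gripper can be positioned. The minimal sketch below chains 4 × 4 homogeneous transforms. It assumes a fixed camera whose pose in the robot base frame (`T_base_cam`) is known from a prior camera-to-robot calibration, plus a grasp offset defined on the part (`T_part_grasp`). For brevity the helper builds only rotations about z, and all names and numbers are illustrative.

```python
import math

def matmul4(a, b):
    """Multiply two 4x4 homogeneous transforms given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def rot_z(theta_rad, tx=0.0, ty=0.0, tz=0.0):
    """Homogeneous transform: rotation about z plus translation (tx, ty, tz)."""
    c, s = math.cos(theta_rad), math.sin(theta_rad)
    return [[c, -s, 0.0, tx],
            [s, c, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]

def grasp_pose(T_base_cam, T_cam_part, T_part_grasp):
    """Gripper target in the robot base frame.

    T_base_cam:   camera pose in the robot base frame (from calibration)
    T_cam_part:   part pose measured by the 3-D vision system
    T_part_grasp: where on the part to grasp (from the part model)
    """
    T_base_part = matmul4(T_base_cam, T_cam_part)
    return matmul4(T_base_part, T_part_grasp)
```

For example, with the camera at (500, 0, 800) mm rotated 90° about z, a part seen 100 mm along the camera's x axis, and a grasp point 50 mm above the part origin, the target translation comes out near (500, 100, 850) mm in the base frame.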

The advent of 3-D image sensors and rapid advances in processing power have given impetus to bin-picking applications. The cost of processors and electronic devices has fallen considerably, making them economically viable for building machine-vision systems. At the same time, greater processing power allows multiple cameras to be used and complex 3-D algorithms to be executed with ease. Lower cost and higher performance make vision-guided robotics (VGR) an attractive option for industry.
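As an example of how multiple cameras yield 3-D data, here is a sketch of triangulation from a rectified stereo pair, one widely used technique (the article does not specify which 3-D methods VGR vendors use). A point seen at column u_left in the left image and u_right in the right image has disparity d = u_left − u_right and depth Z = f·B/d, where f is the focal length in pixels and B is the camera baseline. The calibration values are assumed for illustration.

```python
def triangulate(u_left, v, u_right, f_px, baseline_mm, cx, cy):
    """Return (X, Y, Z) in mm in the left-camera frame for a rectified pair.

    (cx, cy) is the principal point and f_px the focal length, both in pixels.
    """
    d = u_left - u_right  # disparity in pixels
    if d <= 0:
        raise ValueError("disparity must be positive")
    z = f_px * baseline_mm / d
    # Back-project the pixel through the pinhole model at depth z.
    x = (u_left - cx) * z / f_px
    y = (v - cy) * z / f_px
    return x, y, z
```

With f = 1000 px, B = 100 mm and d = 100 px, Z = 1000 mm. The depth error per pixel of disparity error is about Z²/(f·B), here 10 mm. That is one reason extra processing power for sub-pixel matching and additional cameras pays off in bin picking.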

Factories of the future are likely to have VGR as standard because of the multiple benefits it offers. VGR lowers the cost of fixtures and tooling, which can be eliminated altogether once bin picking becomes a standard option. This, in turn, increases reliability, safety, repeatability, and flexibility in the manufacturing process. Labor costs are also reduced, as robots replace manual bin picking.

In the automotive industry, parts that are well machined, heavy, and have sharp geometric features are currently the most suitable for bin picking. As the capabilities of 3-D VGR increase, bin picking can be extended to a more diverse range of parts and applications. Engine components, some sheet-metal components, and certain parts that must be placed on moving frames in assembly lines are good candidates for current 3-D VGR systems.

Random bin picking, although now well established for certain applications in the automotive industry, still has many technical challenges to overcome (see Vision Systems Design, June 2007, p. 51). Leaders in the technology are addressing part geometry and orientation, lighting conditions, and the speed at which random bin picking can be done. Braintech (North Vancouver, BC, Canada; www.braintech.com) has reached agreements with the robotics division of ABB (Auburn Hills, MI, USA; www.abb.com), US Toyota Motor Manufacturing (Buffalo, WV, USA; www.toyota.com), and the University of British Columbia (Vancouver, BC, Canada; www.ubc.ca) to research and develop vision-guided robotic systems that can perform bin picking accurately and efficiently.

Robotic manipulation

Robotic-manipulation technology involves the operation and control of robotic arms and grippers. In material handling, vision guidance systems provide inputs to the robot controller that operates the mechanical grippers. Robots in medical, surgical, and space applications also rely on computer vision for visual perception.

“Robonaut,” developed by NASA, uses a model-based approach to visual perception. The robot is equipped with a video camera to view its surroundings, and its memory is preloaded with 3-D models of the objects likely to appear in its field of view. Objects registered by the camera are compared with these 3-D models, and the robot then performs the appropriate task, whether picking, placing, or opening.
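The idea of comparing what the camera sees against stored models can be illustrated with least-squares rigid fitting. This is a simplified sketch, not Robonaut's actual algorithm. It works in the plane rather than 3-D, and it assumes the observed feature points are already matched one-to-one with each model's points. Every stored model is fitted to the observation, and the one with the smallest residual is taken as the recognized object, along with its pose. In 3-D, the analogous step is usually solved with the SVD-based Kabsch method.

```python
import math

def fit_rigid_2d(model, observed):
    """Least-squares rotation + translation mapping model points onto observed.

    Points are (x, y) tuples in corresponding order.
    Returns (theta_rad, (tx, ty), rms_error).
    """
    n = len(model)
    if n != len(observed) or n < 2:
        raise ValueError("need >= 2 corresponding points")
    mcx = sum(p[0] for p in model) / n
    mcy = sum(p[1] for p in model) / n
    ocx = sum(p[0] for p in observed) / n
    ocy = sum(p[1] for p in observed) / n
    # Closed-form optimal angle from centred dot and cross products.
    s_cos = s_sin = 0.0
    for (mx, my), (ox, oy) in zip(model, observed):
        mx, my, ox, oy = mx - mcx, my - mcy, ox - ocx, oy - ocy
        s_cos += mx * ox + my * oy
        s_sin += mx * oy - my * ox
    theta = math.atan2(s_sin, s_cos)
    c, s = math.cos(theta), math.sin(theta)
    tx = ocx - (c * mcx - s * mcy)
    ty = ocy - (s * mcx + c * mcy)
    err = 0.0
    for (mx, my), (ox, oy) in zip(model, observed):
        px, py = c * mx - s * my + tx, s * mx + c * my + ty
        err += (px - ox) ** 2 + (py - oy) ** 2
    return theta, (tx, ty), math.sqrt(err / n)

def recognise(models, observed):
    """Return (name, theta, translation) of the best-fitting stored model."""
    candidates = [k for k, v in models.items() if len(v) == len(observed)]
    best = min(candidates, key=lambda k: fit_rigid_2d(models[k], observed)[2])
    theta, t, _ = fit_rigid_2d(models[best], observed)
    return best, theta, t
```

The recovered pose (theta, translation) is exactly what the robot needs to plan the subsequent picking, placing, or opening motion.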

Intuitive Surgical (Sunnyvale, CA, USA; www.intuitivesurgical.com) has developed the “da Vinci” surgical system, which comprises a vision system, a patient-side cart, a console, and proprietary instruments. The surgeon, seated at the console, views 3-D images of the surgical field, and the surgeon's natural hand movements are transmitted to the instruments through the robotic arms.

Innovation is proceeding rapidly in vision-guidance-system integration as advanced cameras, image-processing algorithms, illumination systems, and interfacing standards emerge. Visual servoing of robots is expected to be the next technological advance after 3-D vision guidance systems.

In the future, this technology will enable robots to handle parts moving on production lines. Visual servoing has been demonstrated successfully, but it is currently complex and expensive. More robust integration and lower-cost processors and cameras would improve its viability and prospects for commercialization (see Fig. 3).
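In visual servoing, image measurements are fed back continuously to steer the robot, rather than computed once before a move. Below is a minimal sketch of image-based visual servoing (IBVS) for a single point feature. It is simplified to camera translation parallel to the image plane, and it assumes the point's depth Z is known and constant. Under these assumptions the standard point-feature model gives dx/dt = −v_x/Z and dy/dt = −v_y/Z in normalized image coordinates. The control v = λZ(s − s*) then makes the image error decay exponentially, de/dt = −λe. Parameter values are illustrative.

```python
def ibvs_step(s, s_star, depth_z, lam):
    """Camera velocity (vx, vy) driving feature s toward goal s_star."""
    ex, ey = s[0] - s_star[0], s[1] - s_star[1]
    return lam * depth_z * ex, lam * depth_z * ey

def simulate(s0, s_star, depth_z=0.5, lam=2.0, dt=0.01, steps=300):
    """Euler-integrate the closed loop; return the final feature position."""
    x, y = s0
    for _ in range(steps):
        vx, vy = ibvs_step((x, y), s_star, depth_z, lam)
        # Image motion of the point caused by the commanded camera motion.
        x += -vx / depth_z * dt
        y += -vy / depth_z * dt
    return x, y
```

With these values, each step shrinks the error by a factor of 1 − λ·dt = 0.98, so after 300 steps (3 s) a starting offset of 0.2 falls below 0.001. Full IBVS uses the complete interaction matrix, estimated depth, and all six camera velocity components. That is part of why it remains computationally demanding.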

Vishnu Sivadevan is a research analyst at Frost & Sullivan, New York, NY, USA; www.frost.com.