Intelligent IP camera system design considerations

By Michael L. Long, Analog Devices

This article discusses the challenges and considerations involved in implementing an intelligent IP surveillance camera, presents a functional block diagram, and maps these functions onto a Blackfin embedded processor.

Intelligent surveillance

IP surveillance networks are becoming increasingly "intelligent," and with every product generation more of that intelligence moves from the central control and monitoring center out to the camera nodes. This onsite intelligence has advantages over the centralized approach: each distributed camera node can detect situations and pan, zoom, and scale the surveillance image according to rules set up for that node, and can then share this information with the central monitor and with other nodes. Video analysis algorithms such as object detection and tracking and intrusion detection, along with audio analysis algorithms such as detection and localization of specific sounds, are beginning to take hold across the full range of video surveillance technologies. Distributing intelligence this way raises the performance demanded of each camera's local processor, which must provide excellent support for media processing, including audio/video compression and specialized analytics algorithms for event detection and tracking.

IP camera system architecture

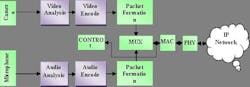

The IP camera system can be viewed as a group of functional blocks: audio/video acquisition, intelligent video and audio analysis, audio/video encoding, stream media packetizing, and system control, including control of the camera's direction, focus, zoom, and brightness (exposure) (see Fig. 1).

The first step in the signal path of an intelligent camera is acquiring the audio/video stream from the sensors. This is relatively straightforward: video frames are scanned sequentially into camera memory, and audio input requires only scaling and A/D conversion of the microphone signal.

Traditional implementations of intelligent camera applications generally operate on raw media streams, although certain algorithms can work on compressed media formats. Because the range of potentially applicable intelligent processing elements is so wide, and because the field is constantly evolving, it is conventional practice to implement these "analytic-type" functions on a programmable platform that leaves a reasonable amount of performance headroom to be consumed by future software upgrades. This approach requires a reprogrammable processing engine that can run multiple algorithms and can be upgraded as algorithms are added and improved. Based on the output of certain analytic modules, the system's control and encoding functions may carry out corresponding intelligent processing, for example outlining moving objects, increasing encoder resolution or frame rate, or turning the camera to follow the position of a sound source. These modules generally have fairly standardized input/output interfaces, which eases their adoption across products and their integration into specific end applications.
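As an illustration of an analytic module with a standardized interface feeding the control path, the following C sketch pairs a simple frame-differencing motion detector with a rule that raises the encoder frame rate while motion is present. Frame differencing is a deliberately basic stand-in for the commercial analytics mentioned here, and `frame_t`, `analytics_result_t`, `detect_motion()`, and `select_frame_rate()` are hypothetical names, not part of any ADI library.

```c
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical frame descriptor shared by all analytic modules. */
typedef struct {
    const uint8_t *luma;   /* 8-bit Y plane, row-major */
    size_t width;
    size_t height;
} frame_t;

/* Hypothetical standardized analytic output. */
typedef struct {
    size_t changed_pixels; /* pixels whose luma changed by more than the threshold */
    int motion;            /* 1 if changed_pixels exceeds min_pixels */
} analytics_result_t;

/* Frame-differencing motion detector: counts pixels whose absolute luma
 * difference between prev and cur exceeds pixel_threshold.
 * Both frames must have the same dimensions. */
analytics_result_t detect_motion(const frame_t *prev, const frame_t *cur,
                                 uint8_t pixel_threshold, size_t min_pixels)
{
    analytics_result_t r = {0, 0};
    size_t n = cur->width * cur->height;
    for (size_t i = 0; i < n; i++) {
        int diff = abs((int)cur->luma[i] - (int)prev->luma[i]);
        if (diff > pixel_threshold)
            r.changed_pixels++;
    }
    r.motion = r.changed_pixels > min_pixels;
    return r;
}

/* Control rule driven by the analytic output: encode at a higher frame
 * rate while motion is present (rates are illustrative assumptions). */
unsigned select_frame_rate(const analytics_result_t *r,
                           unsigned idle_fps, unsigned active_fps)
{
    return r->motion ? active_fps : idle_fps;
}
```

Because every detector would return the same `analytics_result_t`, the control code does not need to know which algorithm produced the result.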

The encoder block compresses the raw digital audio and video streams to reduce network bandwidth and the storage required for central archiving. The compression follows standards such as MPEG, MJPEG, or H.264, which are available as hardware IP or as software modules. The choice between hardware and software encoding depends on whether sufficient processing power is available to run the encoder in software, and on factors such as future upgradeability of the codec. Another consideration that may influence the decision is that some smart cameras zoom in on an area of interest and encode it at a higher resolution or frame rate for inspection by system operators.
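A quick calculation shows why compression is unavoidable. The sketch below assumes NTSC D1 (720 × 480) at 30 frames/s in 8-bit YUV 4:2:0 (12 bits per pixel) and an assumed 2-Mbit/s network budget; neither figure comes from the article, and the function names are illustrative.

```c
#include <stdint.h>

/* Raw bit rate of uncompressed video in bits per second.
 * bits_per_pixel: 12 for 8-bit YUV 4:2:0, 16 for 8-bit 4:2:2. */
uint64_t raw_video_bps(uint32_t width, uint32_t height,
                       uint32_t bits_per_pixel, uint32_t fps)
{
    /* Promote to 64 bits before multiplying to avoid overflow. */
    return (uint64_t)width * height * bits_per_pixel * fps;
}

/* Compression ratio needed to fit the raw stream into target_bps. */
double required_compression_ratio(uint64_t raw_bps, uint64_t target_bps)
{
    return (double)raw_bps / (double)target_bps;
}
```

With these assumptions, `raw_video_bps(720, 480, 12, 30)` gives 124,416,000 bit/s, so fitting the stream into 2 Mbit/s requires roughly 62:1 compression.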

Finally, the compressed streams are formed into IP packets and transmitted over the network to the central monitor and archive locations. The remaining block is the camera's control system, which manages the entire process from acquisition to the network interface and performs various tasks based on the results of the analytics modules and on directions from the central monitor.
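The article does not specify the network transport. One common arrangement, assumed here, carries 188-byte MPEG-2 transport stream packets over UDP, seven packets per datagram, so that the 1316-byte payload plus UDP and IPv4 headers stays under a 1500-byte Ethernet MTU. `packetize_ts()` and the `send_fn` callback are hypothetical; on a real camera the callback would wrap the network stack's UDP send call.

```c
#include <stddef.h>
#include <stdint.h>

#define TS_PACKET_SIZE 188  /* MPEG-2 transport stream packet size */
#define TS_PER_DATAGRAM 7   /* 1316-byte payload + 8-byte UDP + 20-byte IPv4 header = 1344 bytes < 1500 */

/* Hypothetical transmit hook; would wrap a UDP send on the real network stack. */
typedef void (*send_fn)(const uint8_t *payload, size_t len, void *ctx);

/* Splits a buffer of whole TS packets into datagram-sized payloads and hands
 * each to send(). Returns the number of datagrams emitted, or 0 if len is not
 * a multiple of 188 or any packet lacks the 0x47 sync byte. */
size_t packetize_ts(const uint8_t *ts, size_t len, send_fn send, void *ctx)
{
    if (len % TS_PACKET_SIZE != 0)
        return 0;
    for (size_t off = 0; off < len; off += TS_PACKET_SIZE)
        if (ts[off] != 0x47)
            return 0;

    size_t count = 0;
    const size_t chunk = (size_t)TS_PACKET_SIZE * TS_PER_DATAGRAM;
    for (size_t off = 0; off < len; off += chunk) {
        size_t n = (len - off < chunk) ? len - off : chunk;  /* last datagram may be short */
        send(ts + off, n, ctx);
        count++;
    }
    return count;
}

/* Example sink for testing: accumulates the total number of bytes "sent". */
static void tally_bytes(const uint8_t *payload, size_t len, void *ctx)
{
    (void)payload;
    *(size_t *)ctx += len;
}
```

Ten TS packets, for instance, become two datagrams of 1316 and 564 bytes.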

Partitioning of the functions in an intelligent camera

How the functional blocks are partitioned onto the camera hardware largely determines the camera's features, performance, cost, power, and development schedule. The greatest flexibility is achieved when the required blocks run on the processor without performance degradation and with interfaces that efficiently connect the hardware, from the sensors to the IP network. Processor selection is essential, because the processor (or processors) must have the performance to execute image acquisition, analysis, encoding, and network protocol stacks. Some blocks lend themselves better to software, others to hardware. For instance, as mentioned previously, the analytics function may be subject to constant improvement or repurposing and is therefore a poor candidate for fixed hardware. Encoding, by contrast, is performance-hungry yet can be fixed for a given camera design, making it a candidate for dedicated hardware if that choice also meets the power and cost goals.
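Partitioning decisions usually begin with a cycle budget. The sketch below sums per-block load estimates and reports the headroom left on one or more cores. The `block_cost_t` type, any load figures fed to it, and the OS reserve fraction are hypothetical, and the model ignores memory bandwidth and inter-core communication by assuming the load splits evenly across cores.

```c
#include <stddef.h>

/* Hypothetical description of one functional block mapped to software. */
typedef struct {
    const char *name;
    double mcps;  /* estimated load in millions of core cycles per second */
} block_cost_t;

/* Returns the fraction of usable core capacity left after running all
 * blocks; a negative value means the blocks do not fit.
 * core_mhz: core clock; cores: number of identical cores;
 * reserve: fraction held back for OS and interrupt overhead (e.g., 0.1). */
double cycle_headroom(const block_cost_t *blocks, size_t n,
                      double core_mhz, unsigned cores, double reserve)
{
    double capacity = core_mhz * cores * (1.0 - reserve);
    double load = 0.0;
    for (size_t i = 0; i < n; i++)
        load += blocks[i].mcps;
    return (capacity - load) / capacity;
}
```

For example, blocks estimated at 100, 200, and 300 MCPS on a hypothetical 600-MHz dual-core part with a 10% reserve leave about 44% headroom, while the same blocks overrun a single core of that speed.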

Even in a camera where all of the functional blocks are implemented in software on the embedded processor, processor selection remains critical, because the workload combines control and data processing with continuous media-stream processing. Historically, this required a pair of processors to handle the two loads efficiently: a microcontroller was woefully inadequate for media processing, and a dedicated DSP was not designed to be the primary system controller. Still, designers prefer to use a single chip for all device processing where possible, and the older approach of separate DSP and MCU devices has given way to several options:

An MCU and a DSP are combined on a single chip, or further integrated into a heterogeneous multicore architecture, sharing some or all of the peripherals. Despite this integration, developers still need to learn and use two tool chains and may face constraints when trying to shift or share functionality between the two cores.

Application-specific hardware, such as a video encoder/decoder added to an MCU, is integrated on a system-on-chip (SoC) to deliver the data- and signal-processing capability for an application with fixed functionality. Because they are designed for that fixed function, these chips typically lack the processor headroom needed for future upgrades or added performance.

High-end microcontrollers running at high clock rates are tasked with video signal processing. This approach is acceptable only if the lower video quality imposed by the MCU's performance limits is sufficient.

A convergent computing architecture is based on a signal-processing core that can execute DSP-grade signal-processing algorithms and also process data and control code efficiently. This approach replaces the fixed hardware codecs of the SoC approach with software codecs, trading dedicated hardware speed for software flexibility. A "converged" processor is one whose architecture is adept at both signal and control processing.

Convergent platform

The last approach puts more resources in the hands of embedded software developers. An architecture that allows application development in a high-level language reduces manual optimization effort and keeps the software IP in a developer-friendly language. Signal-processing developers still need to know the hardware platform intimately to assess how the processor's architecture will affect algorithm implementation and optimization. Software platform developers must consider architectural details of the processor to optimize the system, for example abstracting the system interface for parallel processing across multiple cores, or making efficient use of the DMA channels. Because the roles of hardware designer, algorithm designer, and system software designer increasingly blur and overlap, deeply cross-functional developers and teams are best placed to take advantage of this low-level hardware platform.
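Efficient DMA use typically means double (ping-pong) buffering: while the core processes one buffer in fast on-chip memory, DMA fills the other from external memory. In this host-runnable sketch, `dma_start()` and `dma_wait()` are hypothetical stand-ins that simply call `memcpy()`; on real hardware they would program and wait on a DMA channel. The per-block byte sum is a placeholder for real processing.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define BLOCK_BYTES 64

/* Hypothetical DMA stand-ins: on real hardware dma_start() would program a
 * descriptor and return immediately; here it copies synchronously so the
 * sketch runs on a host. */
static void dma_start(uint8_t *dst, const uint8_t *src, size_t len)
{
    memcpy(dst, src, len);
}

static void dma_wait(void)
{
    /* Real code would poll a status bit or block on the DMA-done interrupt. */
}

/* Processes nblocks blocks of external memory using two small buffers
 * (which real code would place in L1 data memory): while the core works
 * on buf[cur], the next block is fetched into buf[cur ^ 1]. */
uint32_t process_ping_pong(const uint8_t *ext, size_t nblocks)
{
    static uint8_t buf[2][BLOCK_BYTES];
    uint32_t acc = 0;
    int cur = 0;

    if (nblocks == 0)
        return 0;
    dma_start(buf[cur], ext, BLOCK_BYTES);              /* prime the first buffer */
    for (size_t b = 0; b < nblocks; b++) {
        dma_wait();                                      /* block b is now in buf[cur] */
        if (b + 1 < nblocks)                             /* start fetching block b + 1 */
            dma_start(buf[cur ^ 1], ext + (b + 1) * BLOCK_BYTES, BLOCK_BYTES);
        for (size_t i = 0; i < BLOCK_BYTES; i++)         /* core processes block b */
            acc += buf[cur][i];
        cur ^= 1;                                        /* swap buffer roles */
    }
    return acc;
}
```

With a real asynchronous DMA engine, the fetch of block b + 1 overlaps the processing of block b, hiding most of the external-memory latency.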

The Blackfin processor family from Analog Devices Inc. (ADI) is an example of a convergent processor product. It can handle all of the signal-processing and control functions in today's cameras. In addition, the architecture supports a number of specialized video-processing features and instructions.

The Blackfin processors are supported by a diverse software ecosystem that eases development. Supported OS/RTOS packages include ADI's VisualDSP++ Kernel (VDK), uClinux, and uC/OS-II. In addition, Analog Devices provides many license-free audio/video codecs, hardware abstraction libraries, and drivers for use with its VisualDSP++ integrated development and debug environment. Many intelligent processing modules are currently available for the Blackfin processor, developed both internally by Analog Devices and by its third-party partners. Examples include fish-eye correction, object detection, and object detection-based algorithms such as abandoned object detection, intrusion detection, and gunshot detection/location, among others.

Intelligent IP surveillance camera based on the Blackfin embedded processor

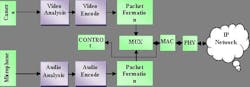

In the system diagrammed in Fig. 2, the Blackfin parallel peripheral interface (PPI) connects directly to the digital video-stream input to receive the video signal, while a serial port (SPORT) connects to the audio input. The A/V data is transferred to SDRAM through a dedicated DMA channel. If intelligent video analysis is needed, analytic blocks can be inserted as required. A software encoder compresses the A/V data in real time and packs it into a transport stream (TS) for transmission. The system is dataflow-driven: each block's input and output are standardized dataflows, so the user can select input, analysis, and coding blocks as needed and insert them flexibly at different points in the system dataflow. A single-core Blackfin device can run some of these blocks concurrently, whereas a dual-core processor such as ADI's Blackfin BF561, or a solution using two convergent processors, can run all of them simultaneously.
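The dataflow-driven design can be sketched as an ordered list of stages that all share one function signature, so analytic blocks can be inserted or removed without touching their neighbors. `dataflow_t`, `stage_t`, `run_pipeline()`, and the two toy blocks are hypothetical names chosen for illustration, not the structure of ADI's software.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical standardized dataflow buffer passed between blocks. */
typedef struct {
    uint8_t *data;
    size_t len;
} dataflow_t;

/* Every block has the same signature, so blocks can be inserted, removed,
 * or reordered freely. Returns 0 on success. */
typedef int (*block_fn)(dataflow_t *io, void *state);

typedef struct {
    block_fn fn;
    void *state;  /* per-block private state, may be NULL */
} stage_t;

/* Runs one buffer through the stages in order; stops at the first stage
 * that reports an error and returns its index + 1, or 0 if all succeed. */
int run_pipeline(const stage_t *stages, size_t n, dataflow_t *io)
{
    for (size_t i = 0; i < n; i++)
        if (stages[i].fn(io, stages[i].state) != 0)
            return (int)(i + 1);
    return 0;
}

/* Toy block: inverts every byte in place. */
static int invert_block(dataflow_t *io, void *state)
{
    (void)state;
    for (size_t i = 0; i < io->len; i++)
        io->data[i] = (uint8_t)~io->data[i];
    return 0;
}

/* Toy block: counts the bytes that pass through it. */
static int count_block(dataflow_t *io, void *state)
{
    *(size_t *)state += io->len;
    return 0;
}
```

Inserting another analytic block amounts to adding one entry to the stage array; on a dual-core part, the array could be split so each core runs a contiguous group of stages.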

An intelligent surveillance system based on ADI's Blackfin processor may use a real-time operating system (RTOS) such as uC/OS-II, together with a networking protocol stack, as its operating system platform. This maximizes overall device utilization and lets the user concentrate on achieving the best possible encoding and intelligent-algorithm performance. In a small RTOS there is no need to separate user mode from kernel mode, because the overhead of accessing system hardware resources is minimal, interrupt and context-switch times can be guaranteed in real time, and memory use and allocation are comparatively unrestricted. These execution features let the user exploit the processor's full DSP capability and directly use the license-free H.264 coding library offered by ADI.

Details of video acquisition and coding

All ADI Blackfin processors are equipped with PPIs for high-speed parallel data, especially video. The PPI can operate with external hardware synchronization signals for BT.601 video and can also automatically decode the embedded synchronization codes of a BT.656 stream, allowing a seamless connection to a wide range of video sources and image sensors. Used together with the on-chip DMA controller, the PPI can be configured to read in only the active video within a frame, or conversely only the blanking regions, which saves significant bandwidth when the whole frame is not needed. The PPI can also ignore the entire second field of an interlaced BT.656 stream, halving the data to be transferred and providing a quick way to extract the input signal. Finally, because the PPI decodes BT.656 natively, it can connect directly to popular hardware video decoders such as the ADV71xx.
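The PPI performs BT.656 decoding in hardware; the following software sketch only illustrates what that decoding involves. Each BT.656 line carries timing reference codes `FF 00 00 XY`, where the XY byte holds the field (F), vertical-blanking (V), and SAV/EAV (H) flags plus four protection bits. The function names are hypothetical and not part of any ADI driver, and the sketch detects, but does not correct, protection-bit errors.

```c
#include <stddef.h>
#include <stdint.h>

typedef struct {
    int field;   /* F: 0 = field 1, 1 = field 2 */
    int vblank;  /* V: 1 during vertical blanking */
    int eav;     /* H: 1 = EAV (end of active video), 0 = SAV */
} bt656_trs_t;

/* Decodes the XY byte of a timing reference code. Bit 7 is always 1;
 * bits 3..0 are P3 = V^H, P2 = F^H, P1 = F^V, P0 = F^V^H.
 * Returns 0 on success, -1 if bit 7 is clear or the protection bits mismatch. */
int bt656_decode_xy(uint8_t xy, bt656_trs_t *out)
{
    int f = (xy >> 6) & 1, v = (xy >> 5) & 1, h = (xy >> 4) & 1;
    uint8_t p = (uint8_t)(((v ^ h) << 3) | ((f ^ h) << 2) | ((f ^ v) << 1) | (f ^ v ^ h));
    if (!(xy & 0x80) || (xy & 0x0F) != p)
        return -1;
    out->field = f;
    out->vblank = v;
    out->eav = h;
    return 0;
}

/* Scans one line of BT.656 bytes for the SAV code that opens active video
 * and returns the offset of the first active sample. Returns -1 if the line
 * is vertical blanking, belongs to a skipped field 2, or has no valid SAV. */
long bt656_active_start(const uint8_t *line, size_t len, int skip_field2)
{
    for (size_t i = 0; i + 3 < len; i++) {
        if (line[i] == 0xFF && line[i + 1] == 0x00 && line[i + 2] == 0x00) {
            bt656_trs_t t;
            if (bt656_decode_xy(line[i + 3], &t) != 0 || t.eav)
                continue;  /* ignore EAV codes and corrupted codes */
            if (t.vblank || (skip_field2 && t.field))
                return -1;
            return (long)(i + 4);
        }
    }
    return -1;
}
```

`bt656_active_start()` mirrors the PPI options described above: it skips blanking lines and, optionally, every line of field 2. For reference, the XY values for active video are 0x80 (SAV) and 0x9D (EAV) in field 1, and 0xC7 and 0xDA in field 2.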

As previously mentioned, to provide optimal video compression performance for end applications such as IP cameras, ADI offers license-free encoder software, including MPEG-4 and H.264 encoders. The H.264 encoder, for example, achieves its performance by making full use of on-chip L1 memory, with data transferred by DMA in parallel with core processing. Its major features include support for YUV 4:2:0 and UYVY 4:2:2 (CCIR-656) input formats, output of the elementary video stream in NAL units, support for Baseline Profile, and support for some Main Profile features (interlaced encoding, CABAC). Single-core Blackfin products can compress video streams of up to half D1 resolution in real time, while the dual-core ADI Blackfin BF561 can process up to full D1 in real time, including I- and P-frames, adaptive constant-bit-rate (CBR) rate control, and other advanced features. For greater application flexibility, the encoder's bit rate is adjustable, allowing real-time transmission at low bit rates such as those of air-interface standards like CDMA2000 1xRTT.
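The article mentions adaptive CBR rate control without describing ADI's algorithm. As a generic illustration only, the sketch below uses a virtual-buffer model: each encoded frame adds its bits, the channel drains `target_bps / fps` bits per frame, and the quantization parameter (QP, 0 to 51 in H.264) is nudged up or down when the buffer runs too full or too empty. The 80%/20% thresholds, the one-step QP changes, and all names are assumptions.

```c
#include <stdint.h>

/* Hypothetical CBR rate-control state (not ADI's algorithm). */
typedef struct {
    double buffer_bits;     /* virtual encoder buffer occupancy */
    double buffer_size;     /* buffer capacity in bits */
    double bits_per_frame;  /* channel drain per frame = target_bps / fps */
    int qp;                 /* H.264 quantization parameter, 0..51 */
} cbr_state_t;

void cbr_init(cbr_state_t *s, double target_bps, double fps,
              double buffer_seconds, int initial_qp)
{
    s->bits_per_frame = target_bps / fps;
    s->buffer_size = target_bps * buffer_seconds;
    s->buffer_bits = s->buffer_size / 2.0;  /* start half full */
    s->qp = initial_qp;
}

/* Call after each encoded frame with its size in bits; returns the QP for
 * the next frame. A fuller buffer means the encoder is producing more bits
 * than the channel drains, so QP rises (coarser quantization, fewer bits).
 * Overflow handling such as frame skipping is omitted from this sketch. */
int cbr_update(cbr_state_t *s, uint32_t frame_bits)
{
    s->buffer_bits += (double)frame_bits - s->bits_per_frame;
    if (s->buffer_bits < 0.0)
        s->buffer_bits = 0.0;  /* underflow: the channel idles */

    double fullness = s->buffer_bits / s->buffer_size;
    if (fullness > 0.8 && s->qp < 51)
        s->qp++;
    else if (fullness < 0.2 && s->qp > 0)
        s->qp--;
    return s->qp;
}
```

Lowering `target_bps` in `cbr_init()` is how such a controller would be retargeted to a low-rate link; production encoders typically use finer-grained models that also allocate bits differently to I- and P-frames.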

Summary

Future embedded processors will offer higher computing performance, coprocessing with hardware IP blocks, multicore designs, and stronger application orientation. Software platforms and software developers need to adapt to these changes. This article has described such a software and hardware platform for surveillance, a domain moving toward increasing local intelligence. Representing a new generation of processors that converge signal-processing and control capabilities, the Blackfin processor makes full use of its strengths in this application, offering the performance and flexibility to meet market demand quickly and to help system builders deliver differentiation and innovation.

Michael L. Long is product line manager, industrial video & imaging solutions, Analog Devices (www.analog.com).