Multispectral imaging benefits color measurement

Andy Wilson, Editor

“Color perception,” says Romik Chatterjee, vice president of engineering at Graftek Imaging (Austin, TX, USA; www.graftek.com), “depends on the type of illumination used to light the object, the spectral reflectance of the object itself, and how this reflected light is perceived by the observer.”

Although many different color models are available to describe color (see “Colors Everywhere,” *Vision Systems Design*, July 2008), one of the most perceptually uniform representations is the CIE L\*a\*b\* color space. To measure how different two colors are, a Delta-E value is computed from their L\*a\*b\* coordinates. The smaller the value, the closer the two colors. Before this value can be computed, however, an image of the object must be captured.
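The article does not say which Delta-E formula Graftek's software uses. The following is a minimal sketch that assumes the original CIE76 definition, which is simply the Euclidean distance between two L\*a\*b\* points. Later formulas such as CIE94 and CIEDE2000 add weighting terms but follow the same idea. The patch values in the example are illustrative, not measured data.

```python
import math
from typing import Tuple

Lab = Tuple[float, float, float]  # (L*, a*, b*)

def delta_e_cie76(lab1: Lab, lab2: Lab) -> float:
    """CIE76 color difference: Euclidean distance between two L*a*b* triples."""
    dL = lab1[0] - lab2[0]
    da = lab1[1] - lab2[1]
    db = lab1[2] - lab2[2]
    return math.sqrt(dL * dL + da * da + db * db)

# Illustrative reference and sample coordinates (assumed values)
reference = (50.0, 10.0, 10.0)
sample = (52.0, 11.0, 8.0)
print(delta_e_cie76(reference, sample))  # 3.0
```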

“While spectrophotometers only capture the spectral reflectance at single points in an image, RGB color cameras can capture RGB data about every point within the field of view. From this RGB data, the L\*a\*b\* coordinates can be computed,” says Chatterjee. “However, light with different spectral content can have the same RGB coordinates and thus such systems cannot discern, for example, whether a yellow color is actually a pure yellow or made up of a mixture of red and green.”
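The RGB-to-L\*a\*b\* step can be sketched with the standard sRGB path (sRGB to CIE XYZ to L\*a\*b\*). This is a minimal sketch, assuming the camera delivers 8-bit sRGB-encoded values referenced to a D65 white point. A measurement camera would normally need its own characterization, for example against a color target, so the matrix below stands in for that step. Because the spectrum has already been reduced to three numbers before this conversion, two different spectra that yield identical RGB values also yield identical L\*a\*b\* values. That is the limitation Chatterjee describes.

```python
from typing import Tuple

# Assumed D65 reference white in XYZ, scaled so that Yn = 100
XN, YN, ZN = 95.047, 100.0, 108.883

def _srgb_to_linear(c8: int) -> float:
    """Undo the sRGB transfer curve for one 8-bit channel (0-255 -> 0.0-1.0 linear)."""
    c = c8 / 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

def _f(t: float) -> float:
    """CIE L*a*b* companding function (cube root with a linear segment near zero)."""
    delta = 6.0 / 29.0
    return t ** (1.0 / 3.0) if t > delta ** 3 else t / (3.0 * delta ** 2) + 4.0 / 29.0

def srgb_to_lab(rgb: Tuple[int, int, int]) -> Tuple[float, float, float]:
    """Convert an 8-bit sRGB triple to (L*, a*, b*) under D65."""
    r, g, b = (_srgb_to_linear(c) for c in rgb)
    # Linear sRGB -> CIE XYZ (D65 primaries), scaled to 0-100
    x = 100.0 * (0.4124 * r + 0.3576 * g + 0.1805 * b)
    y = 100.0 * (0.2126 * r + 0.7152 * g + 0.0722 * b)
    z = 100.0 * (0.0193 * r + 0.1192 * g + 0.9505 * b)
    fx, fy, fz = _f(x / XN), _f(y / YN), _f(z / ZN)
    return 116.0 * fy - 16.0, 500.0 * (fx - fy), 200.0 * (fy - fz)

print(srgb_to_lab((255, 255, 0)))  # a saturated yellow pixel
```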

To overcome this problem, the absolute light intensity at each point in the image must be measured across many wavelengths. Chatterjee and his colleagues at Graftek have developed a multispectral measurement system based on a VariSpec liquid-crystal tunable filter (LCTF) from CRi (Woburn, MA, USA; www.cri-inc.com). Like an interference filter, the VariSpec transmits a narrow band of wavelengths, but its passband is electronically tunable. This allows the spectral response of each pixel to be measured at multiple wavelengths in the visible and near-infrared spectrum.

To image color objects over a 12-in.² field of view (FOV), Graftek’s system uses a 60-mm f/4 Rodagon lens, mounted to the VariSpec filter, and a 4000 × 2672-pixel IPX-11M5 Camera Link camera from Imperx (Boca Raton, FL, USA; www.imperx.com). Images from this camera are acquired by a host PC using a PCIe-1429 frame grabber from National Instruments (Austin, TX, USA; www.ni.com). LabVIEW-based MultiView software running on the PC controls the LCTF using serial commands over a USB interface.

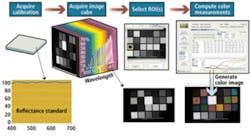

“By incrementally sliding a 7-nm bandpass filter over the 400–720-nm spectrum,” says Chatterjee, “multiple images can be taken at multiple wavelengths. This image data is then used to build an ‘image cube’ that represents the absolute light measurement at each point in the image.” Regions of interest within this image cube can then be selected at specific wavelengths and their L\*a\*b\* values computed (see Fig. 1).
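The acquisition loop Chatterjee describes can be sketched as follows. The article gives the 7-nm bandwidth and the 400–720-nm range but not the tuning step, so the 10-nm step here is an assumption. `tune_filter` and `grab_frame` are hypothetical placeholders for the LCTF serial command and the frame-grabber call. They are not part of MultiView, LabVIEW, or any vendor API. Frames are plain nested lists to keep the example self-contained, and a real system would use an array type.

```python
from typing import Callable, Dict, List, Tuple

Frame = List[List[float]]  # rows x columns of pixel intensities

def acquire_cube(tune_filter: Callable[[int], None],
                 grab_frame: Callable[[], Frame],
                 start_nm: int = 400, stop_nm: int = 720,
                 step_nm: int = 10) -> Dict[int, Frame]:
    """Step the tunable filter across the band and grab one frame per wavelength."""
    cube: Dict[int, Frame] = {}
    for nm in range(start_nm, stop_nm + 1, step_nm):
        tune_filter(nm)          # hypothetical: send the tuning command to the LCTF
        cube[nm] = grab_frame()  # hypothetical: capture one frame at this wavelength
    return cube

def roi_mean_spectrum(cube: Dict[int, Frame],
                      roi: Tuple[int, int, int, int]) -> Dict[int, float]:
    """Mean pixel value inside roi = (row0, col0, row1, col1), end-exclusive, per wavelength."""
    r0, c0, r1, c1 = roi
    spectrum: Dict[int, float] = {}
    for nm, frame in sorted(cube.items()):
        values = [frame[r][c] for r in range(r0, r1) for c in range(c0, c1)]
        spectrum[nm] = sum(values) / len(values)
    return spectrum

# Usage with stand-in hardware: each fake frame is filled with the current wavelength.
state = {"nm": 0}

def fake_tune(nm: int) -> None:
    state["nm"] = nm

def fake_grab() -> Frame:
    return [[float(state["nm"])] * 4 for _ in range(3)]

cube = acquire_cube(fake_tune, fake_grab)
spec = roi_mean_spectrum(cube, (0, 0, 2, 2))
print(len(cube), spec[400], spec[720])  # 33 400.0 720.0
```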

One of the most important aspects of the development was compensating for lighting variations across wavelengths. “Initially,” says Chatterjee, “objects to be measured were illuminated with white LED lighting. Because the power spectrum of such light is not linear and the filter has out-of-band response, approximately five times more red light than blue will enter the camera when the filter is tuned to a [blue] wavelength of 440 nm.”

To compensate, a reflectance standard was imaged and, together with the known response of the LCTF, used to compute the spectral transfer function of the system. This transfer function, which combines the spectral responses of the LED, LCTF, and camera, was then applied to the measured data to compute the sample’s spectral reflectance. Initial measurements compared well with readings taken by a commercially available spectrophotometer, yielding Delta-E values of 0.75, 2.72, 2.48, and 4.93 for white, blue, green, and red (WBGR) patches, respectively.
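The reflectance-standard correction can be sketched per wavelength band. This is a simplified model, not Graftek’s method. It assumes a dark-subtracted, per-band ratio against a standard of known reflectance, lumping illumination, filter, and camera into a single transfer function T(λ). It does not model the LCTF’s out-of-band leakage, which the article says was handled using the filter’s known response. Function names and signal values are hypothetical.

```python
from typing import Dict, Optional

Spectrum = Dict[int, float]  # wavelength (nm) -> value

def _dark(dark: Optional[Spectrum], nm: int) -> float:
    """Dark-frame offset for one band, or 0.0 if no dark data is supplied."""
    return dark.get(nm, 0.0) if dark else 0.0

def transfer_function(ref_signal: Spectrum, ref_reflectance: Spectrum,
                      dark: Optional[Spectrum] = None) -> Spectrum:
    """T(nm) = (S_ref - dark) / R_ref: combined response of lighting, filter, and camera."""
    return {nm: (s - _dark(dark, nm)) / ref_reflectance[nm]
            for nm, s in ref_signal.items()}

def sample_reflectance(sample_signal: Spectrum, transfer: Spectrum,
                       dark: Optional[Spectrum] = None) -> Spectrum:
    """R_sample(nm) = (S_sample - dark) / T(nm)."""
    return {nm: (s - _dark(dark, nm)) / transfer[nm]
            for nm, s in sample_signal.items()}

# Hypothetical readings: the lighting gives ~5x more signal at 650 nm than at 440 nm.
ref_standard = {440: 0.99, 550: 0.99, 650: 0.99}    # certified reflectance of the standard
ref_signal = {440: 198.0, 550: 594.0, 650: 990.0}   # camera signal imaging the standard
gray_signal = {440: 100.0, 550: 300.0, 650: 500.0}  # camera signal imaging a gray sample

T = transfer_function(ref_signal, ref_standard)
R = sample_reflectance(gray_signal, T)
print({nm: round(r, 3) for nm, r in R.items()})  # {440: 0.5, 550: 0.5, 650: 0.5}
```

The raw gray-sample signal rises fivefold from blue to red, but after dividing by T(λ) its reflectance comes out flat, as a neutral gray should.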

Graftek then replaced the original LED illumination with daylight-spectrum lighting. Because this type of light has a flatter spectral response than white LEDs and more power in the blue region, the measured Delta-E values fell for every patch, to 0.65, 1.8224, 1.5993, and 2.572 for WBGR, respectively. These are reductions of roughly 13% (white) to 48% (red).
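The size of the improvement can be checked directly from the published Delta-E values. The short calculation below expresses the change for each patch both as a percentage reduction and as a before/after ratio. It uses only the numbers reported in the article.

```python
from typing import Dict, Tuple

# Delta-E values reported for white, blue, green, and red patches
led = {"white": 0.75, "blue": 2.72, "green": 2.48, "red": 4.93}
daylight = {"white": 0.65, "blue": 1.8224, "green": 1.5993, "red": 2.572}

def improvement(before: Dict[str, float],
                after: Dict[str, float]) -> Dict[str, Tuple[float, float]]:
    """Per patch: (percent reduction in Delta-E, before/after ratio)."""
    return {k: (100.0 * (before[k] - after[k]) / before[k], before[k] / after[k])
            for k in before}

for patch, (pct, ratio) in improvement(led, daylight).items():
    print(f"{patch:<5} {led[patch]:.4g} -> {daylight[patch]:.4g}: "
          f"{pct:.1f}% lower, {ratio:.2f}x")
```

The reductions range from about 13% (white) to about 48% (red). Equivalently, the LED-lit error was roughly 1.15 to 1.92 times the daylight-lit error.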

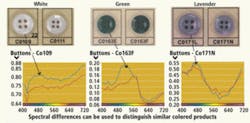

Chatterjee also showed how spectral differences measured by the system could be used to distinguish similarly colored buttons (see Fig. 2). Although the color differences were nearly imperceptible to the human eye, the spectra measured by the system clearly distinguished them.
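The article does not say how the spectral differences between the buttons were scored. One common choice, shown here only as an assumption, is to compare the mean ROI spectra of two samples using two measures. The RMS difference captures both brightness and shape. The spectral angle ignores overall brightness and responds only to spectral shape. The reflectance values in the example are hypothetical.

```python
import math
from typing import Sequence

def _check(s1: Sequence[float], s2: Sequence[float]) -> None:
    if len(s1) != len(s2) or len(s1) == 0:
        raise ValueError("spectra must be non-empty and equally sampled")

def rms_difference(s1: Sequence[float], s2: Sequence[float]) -> float:
    """Root-mean-square difference between two equally sampled spectra."""
    _check(s1, s2)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(s1, s2)) / len(s1))

def spectral_angle(s1: Sequence[float], s2: Sequence[float]) -> float:
    """Angle in degrees between two spectra treated as vectors; 0 means identical shape."""
    _check(s1, s2)
    dot = sum(a * b for a, b in zip(s1, s2))
    n1 = math.sqrt(sum(a * a for a in s1))
    n2 = math.sqrt(sum(b * b for b in s2))
    if n1 == 0.0 or n2 == 0.0:
        raise ValueError("spectral angle is undefined for an all-zero spectrum")
    cos = max(-1.0, min(1.0, dot / (n1 * n2)))  # clamp rounding error before acos
    return math.degrees(math.acos(cos))

# Hypothetical mean ROI reflectance of two similar buttons at five bands
button_a = [0.10, 0.12, 0.30, 0.55, 0.60]
button_b = [0.10, 0.12, 0.28, 0.58, 0.60]
print(f"RMS {rms_difference(button_a, button_b):.4f}, "
      f"angle {spectral_angle(button_a, button_b):.2f} deg")
```

Because the spectral angle is unchanged by uniform scaling, a sample that is merely brighter scores zero. A nonzero angle indicates a genuine difference in spectral shape.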