Time of flight provides cheaper technology option

Researchers led by MIT (Cambridge, MA, USA) electrical engineering professor Vivek Goyal have developed a new time-of-flight (TOF) sensor that can acquire 3-D depth maps of scenes with high spatial resolution using just a single photodetector and no scanning components.

Traditionally, to image a scene in 3-D, engineers have deployed either light detection and ranging (lidar) or TOF systems, both of which work by measuring the time between the transmission of a pulse of light and its return from the scene.
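The timing principle behind both approaches can be illustrated with a short calculation. The Python sketch below is illustrative only and is not taken from the researchers' work; the function names are hypothetical. It converts a round-trip time into a distance, halving the light path because the pulse travels out and back.

```python
# Speed of light in vacuum, in metres per second (exact by SI definition).
C = 299_792_458.0


def tof_distance(round_trip_seconds: float) -> float:
    """Distance to a surface, given the round-trip time of a light pulse."""
    # The pulse covers the distance twice (out and back), hence the factor 2.
    return C * round_trip_seconds / 2.0


def round_trip_time(distance_m: float) -> float:
    """Round-trip time of a light pulse reflected from a surface."""
    return 2.0 * distance_m / C


if __name__ == "__main__":
    t = round_trip_time(1.5)  # hypothetical object 1.5 m away
    print(f"round trip: {t * 1e9:.2f} ns")
    print(f"recovered distance: {tof_distance(t):.3f} m")
```

An object 1.5 m away returns the pulse after roughly 10 ns, which suggests why the timing electronics in such systems need to be fast.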

Lidar, for example, employs a scanning laser beam that fires a series of pulses into a scene and measures the return time of each one separately. But that makes data acquisition slower, and it requires a mechanical system to continually redirect the laser.

The alternative, employed by TOF cameras, is to illuminate the whole scene with laser pulses and use a bank of sensors to register the returned light. But sensors able to distinguish small groups of light particles are expensive; a typical time-of-flight camera can cost thousands of dollars.

The MIT researchers claim that their single-photodetector system is so small, inexpensive, and power-efficient that it could be incorporated into a cell phone at very little extra cost.

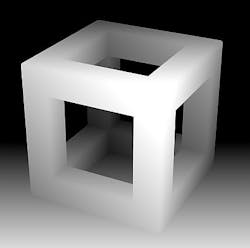

Using the system, the researchers were able to reconstruct 64 × 64-pixel depth maps of scenes by illuminating them with just over 200 randomly generated patterns created by a spatial light modulator. To reconstruct a scene, they first used a parametric signal modeling algorithm to determine the depths at which the objects in the scene lay. A convex optimization procedure was then used to determine the correspondences between the spatial positions and the depths of the objects in the scene.
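To make the measurement idea concrete, the following Python sketch simulates what a single detector might record under random illumination patterns. It is a toy forward model under stated assumptions, not the researchers' algorithm: the scene, the binary on/off patterns, the noise-free detector, and all function names are hypothetical, and the pattern count of 200 stands in for the article's "just over 200". It does not attempt the convex optimization step.

```python
import random
from collections import Counter

C = 299_792_458.0    # speed of light, m/s
SIZE = 64            # depth map is SIZE x SIZE, as in the article
NUM_PATTERNS = 200   # illustrative stand-in for "just over 200"


def make_scene():
    """Hypothetical scene: flat background at 1.0 m with a square at 0.6 m."""
    scene = [[1.0] * SIZE for _ in range(SIZE)]
    for r in range(16, 48):
        for c in range(16, 48):
            scene[r][c] = 0.6
    return scene


def random_pattern(rng):
    """Random binary on/off pattern, standing in for the light modulator."""
    return [[rng.randint(0, 1) for _ in range(SIZE)] for _ in range(SIZE)]


def measure(scene, pattern):
    """Idealized detector response: lit-pixel count per round-trip delay."""
    response = Counter()
    for r in range(SIZE):
        for c in range(SIZE):
            if pattern[r][c]:
                response[2.0 * scene[r][c] / C] += 1
    return response


rng = random.Random(0)  # fixed seed so the run is repeatable
scene = make_scene()
measurements = [measure(scene, random_pattern(rng)) for _ in range(NUM_PATTERNS)]

# The distinct delays reveal which depths are present, but not yet where.
delays = sorted({delay for m in measurements for delay in m})
depths = [round(C * delay / 2.0, 6) for delay in delays]
print(depths)
```

In this simplified model, each pattern yields only one count per depth, so 200 patterns give far fewer numbers than the 4,096 pixels in the map. Assigning a depth to every pixel from so few measurements is the job of the optimization step described above, which this sketch leaves out.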

The researchers claim that the algorithm is simple enough to run on the type of processor found in a smartphone. By contrast, to interpret the data provided by a Microsoft Kinect device, the Xbox requires the extra processing power of a graphics processing unit, or GPU.

More information is available at www.opticsinfobase.org.

-- By Dave Wilson, Senior Editor, Vision Systems Design