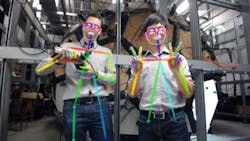

Researchers use computer vision technology to detect hand poses and track multiple people

A team of researchers from Carnegie Mellon University’s Robotics Institute has developed an algorithm that detects and reads the body poses and movements of multiple people in video, including the pose of each individual’s fingers, which the team says is a first.

The method was developed using images from the Panoptic Studio, a two-story dome embedded with 480 VGA cameras running at 25 fps and 31 HD cameras running at 30 fps. The VGA cameras are synchronized among themselves by a hardware clock, and the HD cameras are synchronized by a hardware clock whose timing is aligned with the VGA cameras. The dome also contains 10 Kinect II 3D depth sensors (1920 x 1080 RGB, 512 x 424 depth, running at 30 fps), whose timing is aligned among themselves and with the other sensors, as well as 5 digital light projectors synchronized with the HD cameras.

The 480 VGA cameras, which feature global shutter CMOS image sensors and a fixed focal length of 4.5 mm, are arranged modularly, with 24 cameras on each of 20 standard hexagonal panels on the dome. The HD cameras sit in the middle of each of these 20 panels, as well as in the middle of several panels that contain no VGA cameras. The 10 Kinect II sensors are strategically placed around the inside of the dome. Together, these components form a massively multiview system for "social interaction capture."
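The sensor figures above can be cross-checked with a little arithmetic, and the shared hardware clock is what makes it possible to pair frames from the 25 fps and 30 fps streams. The Python sketch below is illustrative only: the function names are hypothetical, and it assumes that frame 0 of both streams is captured at the same clock instant, which the article does not state.

```python
import math
from fractions import Fraction

# Figures quoted in the article.
VGA_PANELS = 20
VGA_PER_PANEL = 24
VGA_FPS = 25
HD_CAMERAS = 31
HD_FPS = 30


def camera_counts():
    """Return (number of VGA cameras, total VGA + HD cameras)."""
    vga = VGA_PANELS * VGA_PER_PANEL
    return vga, vga + HD_CAMERAS


def nearest_vga_frame(hd_frame: int) -> int:
    """Index of the VGA frame closest in time to a given HD frame.

    Hypothetical assumption: both streams start frame 0 at the same instant
    of the shared hardware clock, so HD frame j is captured at j/30 s and
    VGA frame i at i/25 s. Exact fractions avoid float rounding; ties round up.
    """
    t = Fraction(hd_frame, HD_FPS)      # capture time in seconds
    pos = t * VGA_FPS                   # position on the VGA frame grid
    return math.floor(pos + Fraction(1, 2))


print(camera_counts())         # (480, 511)
print(nearest_vga_frame(30))   # 25
```

With these figures, 24 cameras on each of 20 panels accounts for all 480 VGA cameras, and the 511 cameras in total are in line with the roughly 500 views per shot quoted later in the article. One second of HD video (30 frames) maps onto 25 VGA frames.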

Insights gained from the studio enabled the Carnegie Mellon researchers to develop OpenPose, a library written in C++ using OpenCV and Caffe for real-time, multi-person keypoint detection and multi-threading. OpenPose represents the first real-time system to jointly detect human body, hand, and facial keypoints (130 keypoints in total) on single images. In addition, according to the researchers, the system's computational performance on body keypoint estimation is invariant to the number of people detected in the image.
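The 130-keypoint total is consistent with 18 body keypoints, 21 keypoints per hand, and 70 face keypoints (18 + 2 × 21 + 70 = 130). That breakdown is an assumption based on OpenPose's original COCO-based body model rather than something stated in this article, and the single flat array layout in the Python sketch below is hypothetical, used only to show how the counts add up.

```python
# Assumed per-part keypoint counts (OpenPose's original COCO-based body model):
# 18 body keypoints, 21 per hand, and 70 face keypoints.
PARTS = [
    ("body", 18),
    ("left_hand", 21),
    ("right_hand", 21),
    ("face", 70),
]


def keypoint_slices(parts=PARTS):
    """Assign each part a half-open [start, end) index range in one flat array.

    The flat layout is hypothetical. Returns (ranges by part name, total count).
    """
    ranges, start = {}, 0
    for name, count in parts:
        ranges[name] = (start, start + count)
        start += count
    return ranges, start


ranges, total = keypoint_slices()
print(total)           # 130
print(ranges["face"])  # (60, 130)
```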

According to the researchers, tracking multiple people in real time and detecting hands both pose great challenges, the latter especially so. Because people use their hands to hold objects and make gestures, a camera is unlikely to see all parts of a hand at the same time. And unlike for the face and body, no large datasets exist of hand images annotated with labels of parts and positions, according to the press release.

But for every image that shows only part of a hand, there is often another image, taken from a different angle, that shows the full hand or a complementary view of it, said Hanbyul Joo, a Ph.D. student in robotics. This is where the Panoptic Studio came into play.

"A single shot gives you 500 views of a person's hand, plus it automatically annotates the hand position," Joo explained. "Hands are too small to be annotated by most of our cameras, however, so for this study we used just 31 high-definition cameras, but were still able to build a massive data set."

Joo and Tomas Simon, another Ph.D. student, used their hands to generate thousands of views.
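The principle Joo describes, recovering a hidden view of the hand from views that do show it, comes down to multiview geometry. The Python sketch below is a simplified illustration under stated assumptions, not the researchers' code: it assumes each camera is calibrated with a known 3 × 4 projection matrix, triangulates one keypoint by linear least squares from the views where it was detected, and projects the 3D result into a view where the hand is occluded to produce an automatic 2D label. The function names and toy cameras are hypothetical, and a practical system would also need outlier rejection (for example, RANSAC) to cope with wrong detections.

```python
from typing import List, Sequence, Tuple

Matrix34 = Sequence[Sequence[float]]  # 3x4 camera projection matrix
Point3 = Tuple[float, float, float]


def solve3(a, b):
    """Solve a 3x3 linear system by Gauss-Jordan elimination with partial pivoting."""
    m = [list(a[i]) + [b[i]] for i in range(3)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(3):
            if r != col:
                f = m[r][col] / m[col][col]
                for c in range(col, 4):
                    m[r][c] -= f * m[col][c]
    return [m[i][3] / m[i][i] for i in range(3)]


def triangulate(projections: List[Matrix34],
                points: List[Tuple[float, float]]) -> Point3:
    """Linear least-squares triangulation of one keypoint seen in >= 2 views."""
    rows, rhs = [], []
    for P, (u, v) in zip(projections, points):
        for coord, r in ((u, 0), (v, 1)):
            # coord * (P[2] . X) - (P[r] . X) = 0, with X = (x, y, z, 1)
            rows.append([coord * P[2][k] - P[r][k] for k in range(3)])
            rhs.append(-(coord * P[2][3] - P[r][3]))
    # Normal equations: (A^T A) X = A^T b
    ata = [[sum(row[i] * row[j] for row in rows) for j in range(3)] for i in range(3)]
    atb = [sum(row[i] * b for row, b in zip(rows, rhs)) for i in range(3)]
    x, y, z = solve3(ata, atb)
    return (x, y, z)


def project(P: Matrix34, X: Point3) -> Tuple[float, float]:
    """Project a 3D point into image coordinates with a 3x4 matrix."""
    x, y, z = X
    h = [P[i][0] * x + P[i][1] * y + P[i][2] * z + P[i][3] for i in range(3)]
    return h[0] / h[2], h[1] / h[2]


if __name__ == "__main__":
    cam_a = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0]]   # camera at the origin
    cam_b = [[1, 0, 0, -1], [0, 1, 0, 0], [0, 0, 1, 0]]  # shifted 1 unit along x
    cam_c = [[1, 0, 0, 0], [0, 1, 0, -1], [0, 0, 1, 0]]  # shifted along y; "occluded" view
    truth = (0.5, 0.2, 4.0)
    X = triangulate([cam_a, cam_b], [project(cam_a, truth), project(cam_b, truth)])
    print(X)                  # approximately (0.5, 0.2, 4.0)
    print(project(cam_c, X))  # approximately (0.125, -0.2): the auto-generated label
```

In this toy setup, the third camera's detection is never used, yet projecting the triangulated point still yields its 2D label, which is the sense in which many synchronized views can annotate each other.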

"The Panoptic Studio supercharges our research," said Yaser Sheikh, associate professor of robotics. "It now is being used to improve body, face and hand detectors by jointly training them. Also, as work progresses to move from the 2-D models of humans to 3-D models, the facility's ability to automatically generate annotated images will be crucial."

When the Panoptic Studio was built 10 years ago with support from the National Science Foundation, it was not clear what impact it would have, Sheikh said.

"Now, we're able to break through a number of technical barriers primarily as a result of that NSF grant 10 years ago," he added. "We're sharing the code, but we're also sharing all the data captured in the Panoptic Studio."


Share your vision-related news by contacting James Carroll, Senior Web Editor, Vision Systems Design


About the Author

James Carroll

Former VSD Editor James Carroll joined the team in 2013. Carroll covered machine vision and imaging from numerous angles, including application stories, industry news, market updates, and new products. In addition to writing and editing articles, Carroll managed the Innovators Awards program and webcasts.