Enabling autonomous vision through machine learning

With a mission of enabling autonomous vision by empowering cameras to see more clearly and to perceive what today's imaging systems cannot sense, machine learning startup Algolux has its eye on the next wave of vision.

This past November, I had the opportunity to visit the Algolux office and hear more about the company and its technology from CEO Allan Benchetrit, who told me that many of today's vision systems are sub-optimal for several of the applications his company sees in the market.

"Many [vision systems] are based on a legacy architecture based on human vision," he said. "Typical architecture may include a lens, camera, and ISP. The ISP gets hand tuned to provide pleasing images for humans to be able to see. The problem is that the ISP takes the raw data off the sensor and strips away much of the data, to create a visually pleasing image."

He continued, "Another problem exists because in a vision architecture, it is the same process being applied. If you are taking these visually pleasing images and feeding them into a neural network, some of the critical data may be missing from a computer vision standpoint."

Algolux aims to address this problem in two ways. The first is its CRISP-ML desktop tool, which uses machine learning to automatically optimize a full imaging and vision system.
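To make the point about the ISP discarding sensor data concrete, here is a toy Python sketch. It is not Algolux's code or any real ISP. It assumes a 12-bit linear sensor and models only one ISP step: a gamma curve followed by quantization to 8 bits. The function names and the 2.2 gamma value are illustrative assumptions; real ISPs also demosaic, denoise, white-balance, and tone-map.

```python
# Toy model of one ISP step (an illustrative assumption, not a real ISP):
# linear 12-bit raw codes are gamma-encoded and quantized to 8-bit codes.

def toy_isp(raw_codes, bit_depth=12, gamma=2.2):
    """Map linear raw sensor codes to 8-bit gamma-encoded display codes."""
    max_raw = (1 << bit_depth) - 1
    display = []
    for v in raw_codes:
        normalized = min(max(v / max_raw, 0.0), 1.0)  # clip to [0, 1]
        encoded = normalized ** (1.0 / gamma)          # perceptual gamma curve
        display.append(round(encoded * 255))           # quantize to 8 bits
    return display


def distinct_levels(raw_codes):
    """Count how many distinct display codes a range of raw codes maps to."""
    return len(set(toy_isp(raw_codes)))


all_raw = range(4096)           # every possible 12-bit raw code
highlights = range(3840, 4096)  # the 256 brightest raw codes

print("raw levels:", len(all_raw))
print("display levels:", distinct_levels(all_raw))
print("display levels in highlights:", distinct_levels(highlights))
```

Because 4,096 raw codes must fit into at most 256 display codes, many distinct sensor readings become indistinguishable after this step; in the toy model, the 256 brightest raw codes collapse into only a handful of display codes. A neural network fed the 8-bit output cannot recover that difference, which is the kind of loss Benchetrit describes.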

According to Benchetrit, CRISP-ML offers an optimal way to tune existing architectures. The tool "effectively combines large real-world datasets with standards-based metrics and chart-driven key performance indicators to holistically improve the performance of camera and vision systems." Algolux says this can be done across combinations of components and operating conditions previously deemed unfeasible.
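The article does not describe how CRISP-ML works internally. As a generic illustration of automated tuning against a metric, the following sketch runs a random search over two hypothetical ISP parameters (gain and gamma) and scores each setting with a hypothetical KPI: the number of distinct output levels on a synthetic low-light input. All names, parameter ranges, and the KPI itself are assumptions; a real tool would use real-world datasets and far richer metrics.

```python
import random


def toy_isp(raw_codes, gain, gamma, max_raw=4095):
    """Hypothetical two-parameter ISP: digital gain, clipping, gamma, 8-bit output."""
    out = []
    for v in raw_codes:
        normalized = min(gain * v / max_raw, 1.0)  # apply gain, clip highlights
        out.append(round(normalized ** (1.0 / gamma) * 255))
    return out


def kpi(display):
    """Hypothetical KPI: distinct output levels, a crude proxy for retained detail."""
    return len(set(display))


def tune(raw_codes, baseline=(1.0, 2.2), trials=300, seed=0):
    """Random search over (gain, gamma), seeded with a baseline setting."""
    rng = random.Random(seed)
    best_params = baseline
    best_score = kpi(toy_isp(raw_codes, *baseline))
    for _ in range(trials):
        params = (rng.uniform(0.5, 8.0), rng.uniform(1.0, 3.0))
        score = kpi(toy_isp(raw_codes, *params))
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Synthetic low-light "scene": raw codes span only the darkest ~10% of the range.
dark_scene = list(range(0, 410))
baseline_score = kpi(toy_isp(dark_scene, 1.0, 2.2))
(gain, gamma), tuned_score = tune(dark_scene)
print(f"baseline KPI {baseline_score}, tuned KPI {tuned_score} "
      f"(gain={gain:.2f}, gamma={gamma:.2f})")
```

Seeding the search with the baseline setting guarantees that the returned configuration scores at least as well as the hand-tuned starting point. Random search is used here only because it is short; it is not a claim about the optimizer CRISP-ML uses.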

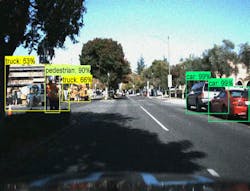

While CRISP-ML is used for camera preparation, Algolux's CANA product is far more disruptive because it is embedded in the camera itself, according to the company. Benchetrit explained that automotive companies are currently racing to reach level 3 autonomy by 2020. The problem, he said, is that the baseline for level 3 is fairly low: it assumes favorable conditions such as sunshine, dry roads, daytime driving, and no weather distortions. When these systems encounter difficult conditions, they cannot produce results with the desired level of confidence and consistency, he said.

Editor's note: The autonomy levels referenced here are defined by SAE International in its J3016 standard.

"What we are doing is focusing on the hard cases by supporting low light, darkness, distortion caused by weather like rain or fog, and so on, which is the reason that we are breaking away from the legacy architectures of vision systems," said Benchetrit. "We are doing this by replacing the most problematic component (the image processor) with a deep learning framework. We are able to discard the image processor and use the data off of the image sensor to train our model to be able to perform in sub-optimal conditions."

He added, "We are doing something that everyone in the automotive industry needs and cannot necessarily work on right now because they are focused on achieving baseline standards of level 3. We are addressing the evolution of vision systems using machine learning."

Together, CRISP-ML and CANA enable the design and implementation of a full, component-agnostic software stack for image processing, which, according to the company, provides better results while reducing cost and speeding time-to-market. CRISP-ML already has several customers, while CANA is in pilots as the company focuses on customers' short-term needs.

"We are telling our customers to take an existing example of something challenging, show us the baseline, and let us prove to you that we can do better in the exact same conditions. Once we have proven this, let’s take on some more use cases, and from there, run it on your platform and go from there. Eventually, we will take on more and more cases, until we have proven that it can work on the clear majority of difficult cases," said Benchetrit.

Ultimately, the company aims to deliver on the promise of multi-sensor fusion: assimilating data from multiple sensors in real time.

"We are not encumbered by legacy architecture or components. We believe we will be the first to be able to support multi-sensor fusion in level 3 autonomous driving and beyond," he said.

Looking toward possible applications outside of autonomous vehicles, Benchetrit indicated the software packages could be used in security and surveillance, border patrol, or in "any embedded camera that is going to be put through a variety of unpredictable use cases in changing conditions," including mobile devices, drones, and augmented reality/virtual reality.

Share your vision-related news by contacting James Carroll, Senior Web Editor, Vision Systems Design.

About the Author

James Carroll

Former VSD Editor James Carroll joined the team in 2013. Carroll covered machine vision and imaging from numerous angles, including application stories, industry news, market updates, and new products. In addition to writing and editing articles, Carroll managed the Innovators Awards program and webcasts.