Vision system monitors fish populations

Off-the-shelf components combine with an image-processing library and custom code to count migrating fish.

By R. Winn Hardin, Contributing Editor

Counting migrating fish by type and number is essential to protecting endangered species and managing fish harvests. Areas such as the Columbia River Basin in the US Pacific Northwest spend considerable money on this task. There are 12 mainstem dams on the Columbia and Snake rivers that report daily fish counts, plus two dams on the Yakima River. Additional fish-counting facilities operate on other river tributaries as well.

Currently, fish populations are monitored manually by reviewing 24-hour, time-lapse videotapes made during the migration season as the fish climb ladders over manmade dams--a labor-intensive task. In some cases, videotaping is not considered reliable; therefore, a trained biologist may identify and count the fish.

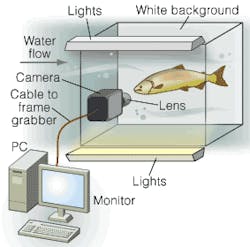

Responding to requests from commercial entities and environmentally concerned groups such as the Yakama Nation Fisheries Resource Management, the US Department of Agriculture (USDA) awarded an SBIR research grant to vision-system designer Robert Schoenberger, president of Agris-Schoen Vision Systems, and D. J. Lee of Brigham Young University (BYU) to automate fish counting and classification, starting at the Prosser Dam on the Yakima River in Prosser, WA. Schoenberger and Lee built a fish recognition and monitoring (FIRM) system from a Hitachi KP-F2A near-infrared (NIR) CCD camera, a Matrox Imaging Meteor-II frame grabber, and a PC running custom image-analysis routines that count passing fish and collect data on type, length, and other physical characteristics (see Fig. 1).


Natural retrofit

Counting fish in their native underwater surroundings poses several challenges: electronics do not work well in wet environments; visual contrast is reduced in underwater settings by bubbles, turbidity, sediment, and debris; and fish come in all shapes and sizes. Complicating the counting and classifying process is the fact that fish rarely stop in perfect profile, offering twisted, rotated, and moving targets for image-processing algorithms.

Schoenberger had to work around the existing videotaping systems, which consisted of standard CCD cameras and low-frequency fluorescent lighting. Because adding light could interfere with the ongoing videotaping of passing fish, Schoenberger chose the monochrome Hitachi KP-F2A progressive-scan camera, which is sensitive in the NIR. By using an existing white board on the far side of the fish window as a backdrop, together with the NIR-sensitive camera, Schoenberger maximized the amount of collected light and improved the signal-to-noise ratio of the underwater images. Additional boards on top of the ladder and the vault housing the camera limited lighting changes caused by shifting weather and the sun’s movement (see Fig. 2). “We chose a progressive-scan camera because, while the fish do not move very fast--letting us operate at 30 frames/s--they do move fast enough to cause problems with an interlaced video image,” Schoenberger explains.
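
A back-of-the-envelope calculation shows why interlacing hurts even with slow targets. The sketch below assumes NTSC-style timing (two fields per 1/30-s frame, exposed roughly 1/60 s apart) and a hypothetical fish speed in pixels per second; the function name is illustrative. On an interlaced frame, the odd and even lines of a moving fish are offset by speed divided by field rate, while a progressive-scan sensor exposes every line at once.

```python
# Back-of-the-envelope sketch. Assumption: NTSC-style interlace, i.e. two
# fields per 1/30-s frame, captured roughly 1/60 s apart.
FIELD_RATE_HZ = 60.0


def interlace_shift_px(speed_px_per_s: float, field_rate_hz: float = FIELD_RATE_HZ) -> float:
    """Offset in pixels between the odd and even lines of one interlaced frame.

    The two fields are exposed 1/field_rate_hz seconds apart, so an object
    moving at speed_px_per_s appears shifted by speed / field_rate_hz on
    alternate lines ("combing"). A progressive-scan camera exposes all lines
    together, so the equivalent offset is zero.
    """
    return speed_px_per_s / field_rate_hz


if __name__ == "__main__":
    # Hypothetical fish crossing the image at 300 pixels per second.
    print(interlace_shift_px(300.0))
```

At the hypothetical 300 pixels/s, alternate lines are displaced by 5 pixels, enough to jag the outline that the contour-extraction steps depend on.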

The analog camera connects to a standard Pentium-based PC with 1 Gbyte of RAM through a Matrox Imaging Meteor-II frame grabber. Schoenberger and Lee chose the Meteor-II because they were familiar with the MIL image-processing library. Because the application (moving fish) is slow compared with many industrial applications, the PC could handle all image processing without additional processors on the frame grabber. After calibration, the system acquires images continuously, because visual cues in the images are the only way to trigger it. “It’s easier than putting in photoelectric sensors to detect the fish,” notes Schoenberger.

Minus the water

Schoenberger calibrates the FIRM system by storing a reference image of empty water, which is subtracted from subsequent images to determine whether an object such as a stick or fish is present. The system was designed to take a new reference image every hour to adapt to changing lighting conditions. If the differential image indicates that an object is present, the system uses MIL’s edge-detection algorithm to find the object’s outline en route to creating a definitive contour, which eventually allows the system to separate fish from debris and then identify the fish species (see Fig. 3).
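
The reference-subtraction step can be sketched in a few lines. This is a minimal illustration, not MIL code: images are plain Python lists of 0-255 gray values, and the thresholds `pixel_thresh` and `min_pixels` are hypothetical. Refusing to adopt a frame that already contains an object as the new hourly reference is also an assumption of this sketch, so that a passing fish is not baked into the background.

```python
from typing import List

# Grayscale image as rows of 0-255 values (a stand-in for a frame-grabber buffer).
Image = List[List[int]]

REFRESH_SECONDS = 3600  # per the article: a new reference image every hour


def difference_image(frame: Image, reference: Image) -> Image:
    """Per-pixel absolute difference between the current frame and the empty-water reference."""
    return [[abs(f - r) for f, r in zip(frow, rrow)] for frow, rrow in zip(frame, reference)]


def object_present(frame: Image, reference: Image,
                   pixel_thresh: int = 30, min_pixels: int = 50) -> bool:
    """True if enough pixels differ from the reference (both thresholds are hypothetical)."""
    diff = difference_image(frame, reference)
    changed = sum(1 for row in diff for v in row if v > pixel_thresh)
    return changed >= min_pixels


class ReferenceModel:
    """Holds the empty-water reference and refreshes it on a fixed schedule."""

    def __init__(self, reference: Image, taken_at: float) -> None:
        self.reference = reference
        self.taken_at = taken_at

    def maybe_refresh(self, frame: Image, now: float) -> bool:
        """Adopt `frame` as the new reference once the old one is an hour old,
        but only if no object is detected in it. Returns True if refreshed."""
        if now - self.taken_at >= REFRESH_SECONDS and not object_present(frame, self.reference):
            self.reference = frame
            self.taken_at = now
            return True
        return False
```

If lighting drifts far within the hour, the "no object" check could keep rejecting empty frames; a production system would need a more tolerant test than this sketch uses.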

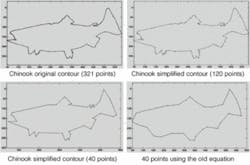

Because of low contrast and the varying profiles of the fish or other objects under study, the edge-detection algorithm alone could not always close the gaps and produce a full contour. The system therefore uses two methods to enhance edge detection: one based on the differential image (acquired image minus background image) and MIL’s edge-detection algorithm, and the other based on binarization of the original image and subsequent blob analysis. The two data sets are combined to find common edge pixels, yielding as complete a fish contour as possible. After the final edge is created and the fish’s length is normalized by excluding its tail (the part of a fish whose length varies most), a quick blob analysis determines whether the object is likely to be a fish or something else. If it is a fish, image processing continues.
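
The binarization path and the merge can be sketched as follows. Assumptions, all labeled: the fish appears darker than the white backdrop, blob edges are foreground pixels with a background 4-neighbour, and "common edge pixels" is read as a set intersection of the two edge maps. The edge set from the differential-image path would come from MIL’s edge detector, which is not reproduced here; all function names are hypothetical.

```python
from typing import List, Set, Tuple

Image = List[List[int]]
Pixel = Tuple[int, int]  # (row, column)


def binarize(image: Image, thresh: int) -> List[List[bool]]:
    """Foreground = pixels darker than `thresh` (assumes a dark fish on a white backdrop)."""
    return [[v < thresh for v in row] for row in image]


def boundary_pixels(mask: List[List[bool]]) -> Set[Pixel]:
    """Blob outline: foreground pixels with at least one 4-neighbour that is background or off-image."""
    h, w = len(mask), len(mask[0])
    edges: Set[Pixel] = set()
    for y in range(h):
        for x in range(w):
            if not mask[y][x]:
                continue
            for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ny, nx = y + dy, x + dx
                if not (0 <= ny < h and 0 <= nx < w) or not mask[ny][nx]:
                    edges.add((y, x))
                    break
    return edges


def common_edges(diff_edges: Set[Pixel], blob_edges: Set[Pixel]) -> Set[Pixel]:
    """Edge pixels reported by both the differential-image path and the blob path."""
    return diff_edges & blob_edges
```

For a dark 3 x 4 rectangle on a bright background, `boundary_pixels` returns the 10 outline pixels and skips the 2 interior ones.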

The system then passes the edge data to C++ code developed by Agris-Schoen and D. J. Lee, in which a Canny edge operator reduces the width of the edge to a single pixel without extending the gaps. Next, an iterative vector-based algorithm reduces the thousands of points that form the contour to only 40. Much like a compression algorithm, it iteratively sheds redundant data points while preserving those that mark critical features, generally defined as significant changes in contour direction (see Fig. 4).
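
The article does not give the details of the 40-point reduction, so the sketch below swaps in a simple greedy scheme in the spirit of Visvalingam-Whyatt simplification: repeatedly delete the point where the closed outline changes direction least, until the target count remains. It is not the Agris-Schoen/BYU algorithm; it only illustrates shedding redundant points while keeping sharp direction changes.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def turning_angle(prev: Point, cur: Point, nxt: Point) -> float:
    """Absolute change in heading (radians, 0..pi) when walking prev -> cur -> nxt."""
    a1 = math.atan2(cur[1] - prev[1], cur[0] - prev[0])
    a2 = math.atan2(nxt[1] - cur[1], nxt[0] - cur[0])
    d = abs(a2 - a1) % (2 * math.pi)
    return min(d, 2 * math.pi - d)


def reduce_contour(contour: List[Point], target: int = 40) -> List[Point]:
    """Greedily drop the least significant point of a closed contour until `target` remain."""
    pts = list(contour)
    while len(pts) > target:
        n = len(pts)
        # pts[i - 1] wraps to the last point when i == 0 (closed contour).
        idx = min(range(n), key=lambda i: turning_angle(pts[i - 1], pts[i], pts[(i + 1) % n]))
        del pts[idx]
    return pts
```

Each removal rescans the whole contour, which is slow for thousands of points; keeping the angles in a priority queue and updating only the two neighbours of each removed point would be the usual speed-up.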

Processing continues for each image until the fish passes out of the camera’s field of view. If the fish enters from the right and exits from the left, the count for its species increases by one. If it enters from the right and exits from the right, the count is unchanged. If it travels in the opposite direction, the count decreases by one. After the system identifies the fish by type, it measures the fish’s length and other physical attributes and stores an image of the fish for later review.
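
The counting rule maps directly onto a small function. In this sketch, "the opposite direction" is assumed to mean entering from the left and exiting from the right, and the entry and exit sides are assumed to come from which half of the frame the blob first and last appears in. `side_of`, `count_delta`, and `record_passage` are hypothetical names.

```python
from collections import Counter
from enum import Enum


class Side(Enum):
    LEFT = "left"
    RIGHT = "right"


def side_of(x: float, frame_width: int) -> Side:
    """Which half of the frame an x coordinate (e.g. a blob centroid) falls in."""
    return Side.LEFT if x < frame_width / 2 else Side.RIGHT


def count_delta(entered: Side, exited: Side) -> int:
    """+1 for a right-to-left passage, -1 for left-to-right (assumed reverse),
    0 when the fish leaves on the same side it entered."""
    if entered is Side.RIGHT and exited is Side.LEFT:
        return 1
    if entered is Side.LEFT and exited is Side.RIGHT:
        return -1
    return 0


def record_passage(counts: Counter, species: str, entered: Side, exited: Side) -> None:
    """Apply one completed passage to the per-species tally."""
    counts[species] += count_delta(entered, exited)
```

A fish that turns back and leaves on its entry side adds nothing, so it cannot be double-counted when it later passes through for real.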

Based on changes in contour direction, the system locates the beginning and end of five critical features: the mouth and the dorsal, adipose, anal, and pelvic fins. Working with biologists at Yakama Nation Fisheries Resource Management and Dennis Shiozawa at BYU, Schoenberger and Lee designed the system to differentiate fish species: vectors are constructed between feature points, and the relationships among their values categorize the fish. Initial testing has shown that the system correctly identifies fish species between 70% and 100% of the time (see Fig. 5).
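
One way to turn feature points into a species decision is sketched below. Everything here is an assumption for illustration: the features are the mouth plus the start point of each of the four fins, the vector holds mouth-to-fin distances divided by the longest one (so fish size drops out), and classification picks the nearest stored template. The templates are toy values, not measured species data, and `feature_vector` and `classify` are hypothetical names.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]


def feature_vector(mouth: Point, fin_starts: Sequence[Point]) -> List[float]:
    """Mouth-to-fin distances divided by the longest one, so the vector ignores fish size."""
    dists = [math.dist(mouth, p) for p in fin_starts]
    longest = max(dists)
    if longest == 0:
        raise ValueError("all landmarks coincide with the mouth")
    return [d / longest for d in dists]


def classify(vec: Sequence[float], templates: Dict[str, Sequence[float]]) -> str:
    """Nearest-template classification (Euclidean distance in feature space)."""
    return min(templates, key=lambda name: math.dist(vec, templates[name]))


if __name__ == "__main__":
    # Toy templates for illustration only; not measured species data.
    templates = {"species_A": [0.6, 1.0, 0.75, 0.5], "species_B": [0.3, 1.0, 0.9, 0.2]}
    # Hypothetical landmarks: dorsal, adipose, anal, pelvic fin starts.
    vec = feature_vector((0, 0), [(5, 0), (8, 0), (6, 0), (4, 0)])
    print(vec, classify(vec, templates))
```

Normalizing by the longest distance makes the same fish at twice the image size produce the same vector, which matters because fish pass at varying distances from the camera.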

Schoenberger will soon begin testing other methods to improve the categorization process. “Because of the speed of the application, we can do a lot of processing simultaneously,” Schoenberger explains. “There are also cases where the fish is larger than the window, so we need to be able to identify it based on partial shapes through point-distribution models, power cepstrum, or B-spline curve matching.” By moving the system to a “voting accumulator” that polls each of the techniques and makes a final determination based on the results of several image-processing approaches, Schoenberger feels he can improve the system’s performance and open it up to new applications.
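
A voting accumulator of the kind Schoenberger describes could look like the sketch below. The article does not say how votes would be weighted, so this assumes a simple majority; the individual techniques (point-distribution models, power cepstrum, B-spline matching) are represented by hypothetical callables that return a species label, or None when they cannot decide, for example on a partial shape.

```python
from collections import Counter
from typing import Callable, Iterable, Optional, Sequence, TypeVar

T = TypeVar("T")


def vote(labels: Iterable[Optional[str]], min_votes: int = 1) -> Optional[str]:
    """Majority vote over classifier outputs; None means 'no decision'.

    Classifiers that cannot decide return None and are ignored. A tie for
    first place, or a winner with fewer than `min_votes` votes, also yields
    None so the caller can treat the sample as unclassified.
    """
    tally = Counter(label for label in labels if label is not None)
    if not tally:
        return None
    ranked = tally.most_common()
    best, n = ranked[0]
    if len(ranked) > 1 and ranked[1][1] == n:
        return None  # tie for first place
    return best if n >= min_votes else None


def accumulate(classifiers: Sequence[Callable[[T], Optional[str]]], sample: T,
               min_votes: int = 1) -> Optional[str]:
    """Poll every classifier on the same sample and combine their answers."""
    return vote((c(sample) for c in classifiers), min_votes)
```

Letting a technique abstain, rather than forcing a guess, is what allows shape-based methods that need a full outline to coexist with methods that work on partial views.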

Joel Hubble, fisheries biologist at the US Bureau of Reclamation (formerly with the Yakama Nation), worked with the Bureau, the USDA, and Yakama Nation, as well as Agris-Schoen, on the Prosser Dam installation. “My initial goal was to automate the process to a point where it was reducing the time spent reviewing the tapes--basically fast forward to a fish and get rid of dead water that passes with no fish,” Hubble explains.

“We looked at how many minutes in a 24-hour period actually had fish, and it wasn’t a lot. If we could reduce the dead time, the efficiency would go way up. I was not optimistic that we could ever get to the point to classify fish species with high accuracy. This system looks promising. It isolates the fish on tape nicely, and it fulfilled our main objective.”

Company Info

Agris-Schoen Vision Systems

Merrifield, VA, USA

www.machinevision1.com

Brigham Young University

Provo, UT, USA

www.byu.edu

Hitachi Denshi America

Wadsworth, OH, USA

www.hdal.com

Matrox Imaging

Dorval, QC, Canada

www.matrox.com/imaging