Vision system expedites fish sorting

Neural-net computer built on an FPGA automates fish inspection on-board ship.

By C. G. Masi, Contributing Editor

To avoid spoilage, modern commercial fishing vessels carry on-board facilities for processing their catch. Human labor is at a premium, so commercial fishing concerns install automation whenever possible. One task that has resisted automation, however, is ensuring that only high-quality fish go into the filleting machines and that they go into the machines head first; otherwise, they would be ruined in the machine. “Someone has to look at every fish as they come below deck to the filleting machine,” says Steven Hoffman, director of research at Pisces VMK. “They have to check for correct orientation and correct species and pull out any damaged fish.”

Pisces VMK manufactures and installs processing equipment for commercial fishing fleets, so it is knowledgeable about both the advantages automation provides to commercial fishing fleets and the challenges it presents in designing the equipment. One of the biggest challenges is ensuring that the equipment is not only fool-proof, but easy to operate and maintain at sea, where there are no technical experts available to sort out problems with the equipment.

Fish are not parts manufactured on an assembly line but are natural objects that come in all sorts of sizes and shapes, and they enter the processing line in all possible orientations. Even when fishermen are targeting a particular species, the variation between acceptable and unacceptable fish is more than conventional vision systems can accurately process.

Automating the process

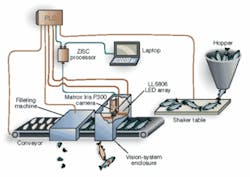

Pisces asked General Vision (GV) to develop a system to help automate the inspection process (see Fig. 1). GV develops image-recognition systems based on artificial intelligence (AI) and zero instruction set computing (ZISC). A ZISC processor is a neural network made up of a large number of simple processors. Each processor, called a neuron, compares a number of input values to a stored pattern, then passes the result on to other neurons. Those neurons process it along with other inputs; working together, the neurons collectively produce a decision.
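The neuron behavior described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the article does not say how a neuron measures similarity, so the sum-of-absolute-differences distance, the `radius` threshold, and the names `Neuron` and `decide` are all hypothetical, not GV's implementation.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class Neuron:
    """One simple processor holding a stored pattern (hypothetical model)."""
    pattern: Sequence[int]  # stored pattern, e.g. a few pixel intensities
    category: str           # label this neuron votes for
    radius: int             # assumed threshold: how close an input must be

    def distance(self, inputs: Sequence[int]) -> int:
        # Assumed similarity measure: sum of absolute differences.
        return sum(abs(a - b) for a, b in zip(inputs, self.pattern))

    def fires(self, inputs: Sequence[int]) -> bool:
        return self.distance(inputs) <= self.radius


def decide(neurons: List[Neuron], inputs: Sequence[int]) -> Optional[str]:
    """Collective decision: the closest firing neuron supplies the category."""
    firing = [n for n in neurons if n.fires(inputs)]
    if not firing:
        return None  # no stored pattern is close enough
    return min(firing, key=lambda n: n.distance(inputs)).category


neurons = [
    Neuron(pattern=[10, 10, 10], category="accept", radius=6),
    Neuron(pattern=[200, 200, 200], category="reject", radius=6),
]
print(decide(neurons, [11, 9, 12]))
print(decide(neurons, [100, 100, 100]))
```

In hardware, every neuron would make its comparison at the same time; the list comprehension here only imitates that step sequentially.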

AI solutions “learn” by experience. The learning process involves a teacher presenting the AI application with examples of sensory input patterns (that is, images) along with the correct classification for each. The application then stores the example/category pairs in memory for future reference. When presented with a new target, the system can compare the target with its stored examples, pick out the closest match, and read out its classification. As the application gains more examples, it becomes better able to find a correct match, and its ability to correctly categorize new targets asymptotically approaches 100%.
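A minimal sketch of this learn-by-example scheme follows. The class name `ExampleMemory`, the two-number feature vectors, and the distance measure are assumptions made for illustration; the article does not describe the actual features or matching rule.

```python
from typing import List, Sequence, Tuple


class ExampleMemory:
    """Stores example/category pairs and classifies by closest match."""

    def __init__(self) -> None:
        self.examples: List[Tuple[Tuple[float, ...], str]] = []

    def learn(self, features: Sequence[float], category: str) -> None:
        # The teacher supplies an input pattern with its correct category.
        self.examples.append((tuple(features), category))

    def classify(self, features: Sequence[float]) -> str:
        if not self.examples:
            raise ValueError("nothing has been taught yet")

        def dist(example: Tuple[Tuple[float, ...], str]) -> float:
            # Assumed measure: sum of absolute differences.
            return sum(abs(a - b) for a, b in zip(features, example[0]))

        # Pick the closest stored example and read out its category.
        return min(self.examples, key=dist)[1]


memory = ExampleMemory()
memory.learn([30.0, 8.0], "accept")   # hypothetical (length, width) values
before = memory.classify([55.0, 9.0])  # only one example known so far
memory.learn([60.0, 9.0], "reject")   # teacher adds a second example
after = memory.classify([55.0, 9.0])
print(before, after)
```

With a single stored example, every target receives that example's category; adding a second example changes the answer for targets that lie closer to it, which is the sense in which more examples improve the match.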

In the Pisces case, the inputs derive from a digital camera, and the output is a classification of the subject fish into one of four categories: accept, recycle, reject, or empty. An “accept” result says that the processor thinks the fish is the right species, within the acceptable size range, undamaged, and in the right orientation. A “recycle” result says the fish is perfectly fine, but moving down the line tail first, so it should be turned around and sent back. A “reject” result says that there is something (anything) wrong with the fish itself, and it should be dumped into the fish-meal grinder. An “empty” result means that there was no fish on the line.
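The four categories can be written out as a small decision rule. The sketch below captures the labeling logic a human trainer might apply, not how the neural net computes its answer; the function name `expected_result` and its Boolean parameters are hypothetical.

```python
from enum import Enum


class Result(Enum):
    ACCEPT = "accept"    # good fish, head first: on to the filleting machine
    RECYCLE = "recycle"  # good fish, tail first: turn around and send back
    REJECT = "reject"    # something wrong with the fish: fish-meal grinder
    EMPTY = "empty"      # no fish on the line


def expected_result(fish_present: bool, right_species: bool, size_ok: bool,
                    undamaged: bool, head_first: bool) -> Result:
    """Category a trainer would assign, following the definitions above."""
    if not fish_present:
        return Result.EMPTY
    if not (right_species and size_ok and undamaged):
        # Any fault with the fish itself outranks its orientation.
        return Result.REJECT
    if not head_first:
        return Result.RECYCLE
    return Result.ACCEPT


print(expected_result(True, True, True, True, False).value)
```

Checking for faults before orientation reflects the text: a recycle result is reserved for fish that are otherwise perfectly fine.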

GV created CogniSight IKB (Image Knowledge Builder) software, a simulation of a ZISC processor that runs on a laptop computer. After the simulation has been tested, the software designs a hardware implementation that is programmed into the gates of a field-programmable gate array (FPGA).

During training, CogniSight IKB automatically develops a neural net simulation specifically tuned to classify the fish likely to be found in a boat’s catch at the time and place it was caught. Part of the teaching process is to iteratively tune the simulation using additional images to achieve very high (better than 95%) accuracy under the use conditions.

This simulated neural net can be, and sometimes is, used directly to process fish. Programming it into an Actel ProAsic3 FPGA, however, makes it run much faster, exceeding 60 frames/s. This speed advantage accrues from the neural net’s parallelism. Image information appearing at the neurons’ inputs propagates to the outputs at very high speed, appearing as a low-voltage digital signal at the output.

Into the hopper

Fish come below decks through a hopper that deposits them onto a shaker table that orients them properly to drop into trays on the conveyor line (see Fig. 2). The conveyor carries them through the vision-system enclosure, where incorrect species and damaged fish are identified. From the vision enclosure they go to the actual filleting machine, which removes heads, tails, and other unusable bits. Clean fillets ready for packaging leave the machine.

An S7-224 CPU programmable logic controller manufactured by Siemens supervises operation of the machine. In addition to input from the vision system, it receives sensory input from and sends control signals to the shaker, conveyor, filleting station, and all the machine’s other components.

The vision system mounts in an enclosure that shields it from shipboard lighting. The sensor head is a Matrox Iris P300 remote-head smart camera, which has a 640 × 480-pixel, monochrome, 1/4-in.-format CCD sensor capable of running at 30 frames/s.

The lighting system consists of two LL5806 line lights manufactured by Advanced Illumination. Each light consists of six high-current LEDs mounted along a line 6 in. (300 mm) long by one LED wide. Hoffman mounts them on either side of the camera and adjusts their location to bring out the most important features to aid classification.

A training screen shows images of fish moving past the inspection screen one at a time (see Fig. 3). The trainer looks at the image displayed, makes a judgment about the fish, and pushes the appropriate button to classify it. The software identifies the differences between fish images that result in various classifications and works out a gate-interconnection scheme for the FPGA. The more fish images the system trains on, the better it will become at classifying fish.

While the images have to come from the particular machine that is being trained, they do not have to be live images. Crew members archive images to be used for later training, allowing the training to be done off-line using images stored on the laptop. When they have a “better” neural network to download to the FPGA, they can do it rapidly at any time.

“On a commercial fishing vessel, you have two shifts, making two crew members per machine,” says Hoffman. “With the vision system in place, one crew member can tend all four machines, reducing the crew roster. That is a significant savings.”

The system easily keeps up with a processing line running at 360 fish per minute, but can go faster. During a test, the system achieved 98% accuracy while classifying 16 tons of fish with a network of 80 neurons. This performance allows the line to run faster than was possible with human operators, so the boat fills its cargo hold in five days instead of seven, resulting in even more savings.
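A quick back-of-the-envelope check puts these figures in perspective. The sketch below uses only numbers quoted in the article (360 fish per minute, 60 frames/s for the FPGA, 30 frames/s for the camera, five days versus seven); the function name `line_budget` is hypothetical, and the fill-rate ratio assumes the hold capacity is the same in both cases.

```python
def line_budget(fish_per_minute: float, frames_per_second: float) -> float:
    """Frames available per fish at a given line speed and frame rate."""
    fish_per_second = fish_per_minute / 60.0
    return frames_per_second / fish_per_second


fish_per_second = 360 / 60.0                 # line speed from the article
frames_per_fish_fpga = line_budget(360, 60)  # FPGA classification rate
frames_per_fish_camera = line_budget(360, 30)  # camera frame rate

# Filling the same hold in five days instead of seven implies this
# ratio of average fill rates (assumes equal hold capacity).
fill_rate_ratio = 7 / 5

print(f"{fish_per_second:.0f} fish/s")
print(f"{frames_per_fish_fpga:.0f} frames per fish at the FPGA rate")
print(f"{frames_per_fish_camera:.0f} frames per fish at the camera rate")
print(f"fill rate ratio: {fill_rate_ratio:.1f}x")
```

Under these assumptions, the classifier has several frames of margin per fish at the quoted line speed, which is consistent with the statement that the system can go faster.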
