Andrew Wilson, Editor

“Reducing the bulk and weight of imaging systems is especially important for miniaturized, unmanned aerial vehicles and portable infrared telescopes,” says Eric Tremblay of the University of California, San Diego (UCSD; La Jolla, CA, USA; www.ucsd.edu). “Although miniature cameras such as those found in cell phones are commonplace, their light collection and resolution compare poorly with their full-size counterparts.” To reduce the thickness of the lens/detector combinations, Tremblay and his colleagues have developed a thin optical system based on the principles of the Cassegrain reflecting telescope, first developed in 1672 by Laurent Cassegrain (see http://en.wikipedia.org/wiki/Cassegrain_telescope).

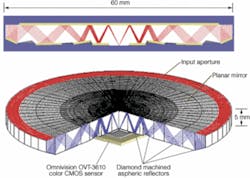

To reduce camera thickness while retaining good light collection and high resolution, Tremblay and colleagues replaced the lens of a traditional camera with a “folded” optical system. Instead of bending and focusing light as it passes through a series of separate mirrors and lenses, the folded system bends and focuses light as it is reflected back and forth inside a single 5-mm-thick optical crystal. The light is focused as if it were moving through a traditional lens system at least seven times thicker (see figure).

“Traditional camera lenses are typically made up of many different lens elements that work together to provide a sharp, high-quality image. Here we did much the same thing, but the elements are folded on top of one another to reduce the thickness of the optic,” says Tremblay. Folding the optic enables a longer effective focal length without increasing the distance from the front of the optic to the image sensor.

“The larger the number of folds in the imager, the more powerful it is,” says Joseph Ford, also of UCSD. On a disk of calcium fluoride, Fresnel Technologies (Fort Worth, TX, USA; www.fresneltech.com) cut a series of concentric, reflective surfaces that bend and focus the light as it is bounced to a facing flat reflector. The two round, 60-mm-diameter surfaces are separated by 5 mm of transparent calcium fluoride. This design forces light entering the ring-shaped aperture to bounce back and forth between the two reflective surfaces. The light follows a predetermined zigzag path as it moves from the largest of the four concentric optic surfaces to the smallest and then to a 3620 CMOS color image sensor from OmniVision (Sunnyvale, CA, USA; www.ovt.com).

To create functional prototype cameras, Tremblay and his colleagues partnered with Distant Focus (Champaign, IL, USA; www.distantfocus.com), an optical-system company that designed the camera’s electronic circuit boards and developed the camera’s interface and control software. In the laboratory, the engineers compared a 5-mm-thick, eight-fold imager optimized to focus on objects 2.5 m away with a conventional high-resolution, compact camera lens with 38-mm focal length. “At best focus,” says Tremblay, “the resolution, color, and image quality are similar between the two.” However, one drawback with the folded camera was its limited depth of focus.
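The figures quoted for the prototype allow a rough consistency check on the folding idea. The short Python sketch below is not the designers' calculation. It assumes, purely for illustration, that each of the eight folds corresponds to one straight traversal of the 5-mm substrate, and it ignores the refractive index of calcium fluoride, oblique ray angles, and the curvature of the reflective surfaces. The function and constant names are hypothetical.

```python
# Back-of-the-envelope estimate of the unfolded optical path.
# Assumption (not from the article): one substrate traversal per fold,
# with rays treated as travelling straight across the 5-mm thickness.

def unfolded_path_mm(thickness_mm: float, traversals: int) -> float:
    """Return the straight-line length of a path that crosses a
    substrate of the given thickness `traversals` times."""
    return thickness_mm * traversals


THICKNESS_MM = 5.0           # substrate thickness quoted in the article
FOLDS = 8                    # "eight-fold imager" quoted in the article
REFERENCE_FOCAL_MM = 38.0    # focal length of the conventional comparison lens

path_mm = unfolded_path_mm(THICKNESS_MM, FOLDS)
thickness_ratio = path_mm / THICKNESS_MM

print(f"Estimated unfolded path: {path_mm:.0f} mm")
print(f"Ratio of unfolded path to optic thickness: {thickness_ratio:.0f}x")
print(f"Comparison lens focal length: {REFERENCE_FOCAL_MM:.0f} mm")
```

Under these simplifying assumptions, the unfolded path comes to about 40 mm, which is of the same order as the 38-mm focal length of the comparison lens and in line with the claim that an equivalent unfolded system would be at least seven times thicker than the 5-mm optic.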

“With a numerical aperture of 0.7 and approximately 90% obscured aperture, the depth of focus and depth of field become extremely narrow,” says Tremblay. “The depth of focus for the design was approximately 10 µm, corresponding to a depth of field of only 24 mm at a 2.5-m conjugate point,” he says.

In the latest generation of the camera, the engineers used digital postprocessing and design changes to extend the depth of field. For example, they applied wavefront coding, a technique originally developed by CDM Optics (Boulder, CO, USA; www.cdm-optics.com) to increase the depth of field of imaging systems (see Vision Systems Design, March 2005, p. 7). They also increased depth of field in later generations of the optic by reducing the aperture area, which sacrifices light collection; similar approaches are sometimes used to increase depth of field in reflective telescopes.