The proper use of optical filters solves many color imaging problems, sometimes without a color camera.

By Jason Dougherty

When an inspection application requires recognition and separation of color, the designer must choose between a system built around a color camera and one built around a monochrome camera. Many times this decision is made without truly understanding what tools are available and which system may work best. System integrators and designers will often instinctively choose a color camera because, based on personal experience and human vision, this is what seems to make the most sense. While such a system may work, basing the decision on appearance rather than looking more closely at actual performance can lead to a less-than-optimal solution and a disservice to the customer.

Currently, more machine-vision applications are demanding higher frame rates at maximum resolution, as provided by state-of-the-art monochrome and linescan cameras. Monochrome cameras have a single sensor that determines gray-scale values. Each pixel on the sensor is given a numeric value based on the quantity of available light. Usually the smallest number (zero) represents black, and the largest number represents white, and in-between are varying shades of gray. Thus, these pixels are only providing information about light intensity, not color.

Ultimately, sensors used in color cameras and the way in which their pixels function are no different. Most color camera sensors require an integrated mosaic filter to determine the “color” of the subject matter. This reduces the overall resolution and light sensitivity, while increasing cost. When comparing the two, monochrome cameras, by and large, have higher resolution, better signal-to-noise ratio, increased light sensitivity, and better contrast.
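The resolution cost of a mosaic filter can be illustrated with a short sketch. It assumes the common Bayer RGGB layout, which the text above does not name specifically; the function name `bayer_masks` and the 640 x 480 sensor size are hypothetical choices for illustration only.

```python
import numpy as np

def bayer_masks(height, width):
    """Boolean masks marking which pixels sit under each color of an
    assumed RGGB Bayer mosaic (red top-left, blue bottom-right of each 2x2 cell)."""
    rows, cols = np.indices((height, width))
    red = (rows % 2 == 0) & (cols % 2 == 0)
    blue = (rows % 2 == 1) & (cols % 2 == 1)
    green = ~(red | blue)
    return {"red": red, "green": green, "blue": blue}

# Hypothetical 640 x 480 sensor
masks = bayer_masks(480, 640)

# Fraction of all pixels that directly sample each color
fractions = {name: float(mask.mean()) for name, mask in masks.items()}

for name, fraction in fractions.items():
    print(f"{name}: {fraction:.0%} of pixels")
```

Under this assumption only a quarter of the pixels measure red, a quarter measure blue, and half measure green; the missing values at each location are interpolated. A monochrome sensor of the same size uses every pixel for the same measurement.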

As a result, the question must be asked: Is it necessary to sacrifice frames per second and at the same time filter out pixels for the benefit of capturing color images? Often the answer is “No,” but decision makers may not be aware of all of the tools that are available. Specifically, many users are not familiar with the various ways in which filters can be used to separate colors or that, compared to using a color camera, they can often be more effective, inexpensive, and also more readily available.

When many people think of filters, photography comes to mind. Photographers use filters such as polarizers to reduce reflections and deepen a blue sky. Light-balancing filters are used to warm or cool the color balance of a scene. In black-and-white photography, color filters will lighten their own and similar colors and darken tones of complementary colors. Neutral-density (ND) filters cut down overall brightness, allowing longer exposure times or wider apertures and therefore a shallower depth of field.

These filters can all be useful in machine-vision applications as well, and will produce similar results. However, off-the-shelf photographic filters were not designed with machine vision in mind. The spectral response of a CCD or CMOS sensor is very different from that of photographic film or the human eye. It can also be difficult to find filters in the smaller sizes that will easily thread or slip onto the typically smaller CCD/CMOS camera lenses used in electronic imaging. Finally, photographic filters often do not offer the high transmission or the complete blocking of unwanted ambient light that may be necessary to achieve the superior results a vision system is capable of.

Fortunately, there are filters available that have been designed expressly for machine-vision applications, cameras, and lenses: filters that match the camera’s spectral response and most of the currently used lighting types. Specific filters are available for use with monochromatic (color) or white LED lighting, fiberoptic illumination, structured laser-diode-generated light patterns, and other lighting commonly used in electronic imaging.

When matched together with the correct lighting and camera system, filtering is one of the most important factors affecting the ability of an imaging system to produce acceptable results, enabling it to rely less on the use of algorithms and eliminating the need for an elaborate shroud to block stray light. Selecting the right filter for the job is therefore critical to get the most out of any system while reducing costs.

COLOR SEPARATION

When an application calls for color recognition and separation of subject matter, many system designers will automatically consider it necessary to use a color camera. However, similar or more accurate results can often be achieved when using a monochrome camera together with a color filter. Consider, for example, an application that requires bell peppers to be sorted based on color (see Fig. 1). Aside from simply wanting the bell peppers to be uniform in appearance, each color commands a different price, with yellow peppers selling for more than orange peppers, which in turn sell for more than red peppers.

Since each color is fairly close to the others in the visible spectrum, many system integrators faced with this task might believe that using a monochrome camera would not result in enough contrast to determine these differences. In the first image the bell peppers are shot using a monochrome camera without a filter. Here the assumption would seem to be correct. The image does not offer enough contrast to accurately determine which bell pepper is the more expensive yellow type and which is the less expensive red variety. However, a broad, green bandpass filter that blocks the red and infrared portions of the spectrum also cuts down on the amount of orange light while fully passing yellow light.

As a result, there is now more than enough contrast to determine which pepper is which. The red pepper has a gray-scale value close to zero, the orange close to 125, and the yellow around 255. In fact, when compared with a color camera, this method actually brings maximum contrast to the application. This method may not work when the color variations are more subtle or when several more colors may be encountered in the application. Even so, the example shows that optimal results with a filter are not necessarily limited to two-color inspection operations.
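The effect can be approximated numerically. The following is a minimal sketch, not a model of the actual peppers or filter: it assumes each pepper reflects light above a hypothetical "edge" wavelength (510, 560, and 610 nm), a flat sensor response and flat illumination from 400 to 1000 nm, and an idealized 480 to 600 nm bandpass. All of these numbers and function names are illustrative assumptions.

```python
import numpy as np

wavelengths = np.arange(400.0, 1001.0, 1.0)  # nm, assumed sensor range

def reflectance(edge_nm, width_nm=15.0):
    """Idealized reflectance: low below the edge wavelength, high above it."""
    return 1.0 / (1.0 + np.exp(-(wavelengths - edge_nm) / width_nm))

# Hypothetical reflectance edges for each pepper color (assumed values)
PEPPERS = {"yellow": 510.0, "orange": 560.0, "red": 610.0}

def bandpass(lo_nm, hi_nm):
    """Ideal top-hat filter: full transmission inside the band, none outside."""
    return ((wavelengths >= lo_nm) & (wavelengths <= hi_nm)).astype(float)

def gray_levels(filter_curve):
    """Integrate reflected light through the filter and scale so the
    brightest pepper maps to gray level 255."""
    signal = {name: float(np.sum(reflectance(edge) * filter_curve))
              for name, edge in PEPPERS.items()}
    brightest = max(signal.values())
    return {name: int(round(255 * s / brightest)) for name, s in signal.items()}

no_filter = gray_levels(np.ones_like(wavelengths))
green = gray_levels(bandpass(480.0, 600.0))

print("no filter:   ", no_filter)
print("green filter:", green)
```

Without the filter, all three peppers reflect strongly in the red and near-IR, so their gray levels bunch together near the top of the scale. With the bandpass in place, the red pepper falls toward black, the orange lands in the mid-grays, and the yellow stays bright.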

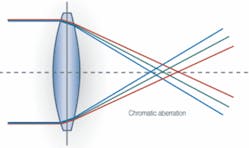

Filters that narrow the spectral range, particularly when used together with appropriately matched monochromatic LED or structured laser-diode lighting, can greatly increase contrast. In addition, they can improve resolution by reducing the effect of chromatic aberration (see Fig. 2). Chromatic aberration occurs because a lens does not focus different wavelengths in exactly the same place: the focal length depends on refraction, and the index of refraction for blue light (shorter wavelengths) is larger than that for red light (longer wavelengths). These differences create a slight blurring or aberration of the image.

Since the best focus for a lens is a function of wavelength, it is often beneficial to limit the wavelength range of the lighting illuminating the subject to be imaged. It can be particularly significant if there is a substantial ultraviolet (UV) and/or near-infrared (near-IR) component to the light in the surrounding area. Generally, the faster the lens (the lower the ‘f’ number) and the wider the field of view, the greater the benefits. Improvements in off-axis resolution by as much as 20%-50% are not unusual. Bandpass filters, in particular, are recommended to achieve this improvement (see Vision Systems Design, January 2006, p. 29).
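A back-of-the-envelope sketch shows how focal length drifts with wavelength and why narrowing the band helps. It assumes a single thin, symmetric biconvex lens (thin-lens lensmaker's equation) and approximate Cauchy dispersion coefficients for a common crown glass; the coefficients, the 51.7 mm surface radius, and the two wavelength bands are assumed values chosen for illustration. Real machine-vision lenses are multi-element and partly color-corrected, so this simple model overstates the size of the shift.

```python
# Approximate Cauchy coefficients for a BK7-like crown glass (assumed values)
CAUCHY_A = 1.5046
CAUCHY_B_UM2 = 0.00420  # in square micrometers

def refractive_index(wavelength_nm):
    """Cauchy approximation n = A + B / lambda^2, with lambda in micrometers."""
    wavelength_um = wavelength_nm / 1000.0
    return CAUCHY_A + CAUCHY_B_UM2 / wavelength_um ** 2

def focal_length_mm(wavelength_nm, radius_mm=51.7):
    """Thin symmetric biconvex lens: 1/f = 2 (n - 1) / R."""
    return radius_mm / (2.0 * (refractive_index(wavelength_nm) - 1.0))

def focal_shift_mm(lo_nm, hi_nm):
    """Difference in focal length between the two ends of a wavelength band."""
    return focal_length_mm(hi_nm) - focal_length_mm(lo_nm)

broadband = focal_shift_mm(400.0, 1000.0)  # unfiltered light, incl. near-IR
narrowband = focal_shift_mm(510.0, 550.0)  # hypothetical green bandpass

print(f"focal shift, 400-1000 nm: {broadband:.2f} mm")
print(f"focal shift, 510-550 nm:  {narrowband:.2f} mm")
```

In this model blue light focuses closer to the lens than red light, and restricting the light to a 40 nm band shrinks the focal spread by roughly an order of magnitude compared with the full 400 to 1000 nm range.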

When imaging with colored LED lighting, filters that will block ambient UV light, near-IR light, and all unwanted visible wavelengths, while transmitting greater than 90% of the desired LED wavelength range, can provide significant improvement in the image. This can be especially beneficial in high-resolution or telecentric gauging applications, where the filter also doubles as a protective dust cover for these more expensive lenses that often tend to be more susceptible to damage because of their larger size.

WHITE-LIGHT APPLICATIONS

In cases where a color camera is necessary, usually a “white” (full spectrum) light source of some type will be chosen for illumination, although at times ambient lighting may be relied upon. Some common white-light sources include halogen, fluorescent, xenon, metal halide, and, increasingly, LED lighting. LED lighting can utilize any of several varieties of white LEDs, or a combination of red, green, and blue LEDs, usually integrated into the same light head. Often the apparent color, or color rendering, of the subject under test will change significantly based on the type of white light that is chosen, and it may also change as the lighting degrades over a period of time. At times this can lead to unacceptable results.

Usually the apparent color of white light is more desirable when it approaches that of average daylight. Some light sources, such as some incandescent, sodium, and halogen lamps, can appear more reddish. Others, such as some metal halide, xenon, and white LED lighting, can appear a more bluish white. Some fluorescent lighting can cause objects to appear distinctly greenish. Many so-called “white” light sources can also emit a significant amount of IR or UV light. Although the human eye is not sensitive in these ranges, camera sensors used in vision applications are often very sensitive.

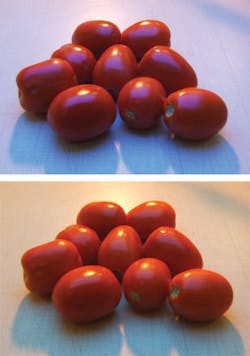

To correct for these apparent differences, different varieties of lighting have been developed with better color rendering. However, these alternatives may have shorter lifetimes, higher costs, and reduced or variable luminous intensity. An inexpensive “color-correction filter” threaded onto the camera lens may provide a simpler, much lower cost, and/or more-effective solution. For light sources with a higher red component (a low color temperature), a light-balancing filter that is typically bluish in color can be placed over the camera lens or sensor to correct the color temperature. For “cool” white LED and other light sources that are dominated by bluish wavelengths (a high color temperature), a so-called “color-warming” filter that is usually amber can be used over the lens to produce color that appears more normal or natural (see Fig. 3). Not only does this allow the use of generally lower-cost, higher-power, and longer-lived lighting, it can also correct ambient light while serving as a protective cover for the lens.
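Light-balancing filters are conventionally rated by their mired shift, where the mired value of a source is one million divided by its color temperature in kelvins. The article does not use this unit, so the sketch below is offered only as one common way to estimate which kind of filter a source calls for. The function names and the example color temperatures (3200 K halogen, 7000 K cool-white LED, 5500 K daylight target) are assumptions for illustration.

```python
def mired(kelvin):
    """Micro reciprocal degrees: 1,000,000 / color temperature in kelvins."""
    return 1_000_000.0 / kelvin

def required_shift(source_k, target_k):
    """Mired shift a filter must provide to move the source to the target.
    Negative values call for a cooling filter, positive for a warming one."""
    return mired(target_k) - mired(source_k)

def filter_family(shift_mired):
    """Map the sign of the shift to the filter color described in the text."""
    if shift_mired < 0:
        return "bluish (cooling)"
    if shift_mired > 0:
        return "amber (warming)"
    return "none"

DAYLIGHT_K = 5500.0  # assumed target

for label, source_k in [("halogen", 3200.0), ("cool-white LED", 7000.0)]:
    shift = required_shift(source_k, DAYLIGHT_K)
    print(f"{label}: {shift:+.0f} mired -> {filter_family(shift)}")
```

Under these assumed temperatures, the reddish halogen source needs a shift of about -131 mired (a bluish filter), while the cool-white LED needs about +39 mired (an amber filter).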

OTHER FILTERS

Many white light sources used in color applications will produce a significant amount of “nonwhite” light, particularly UV and NIR light. While often the camera will contain a filter or filters that block these additional wavelengths, it may be necessary to include UV- or IR-blocking filters in the application. These filters can usually be used in addition to the other filters by simply screwing them together.

Light that is reflected off of plastic, glass, liquids, lacquer, oil, grease, and other nonmetallic, specular surfaces is polarized light. To selectively reduce or remove this “glare,” a polarizer must be used. Linear or circular polarizing filters have the same effect, but for a lens with a manual focus and manual iris, a linear polarizer will usually be the better choice. If there is an autofocus or autoiris feature associated with the camera lens, this can involve the use of a beamsplitter (partial mirror) somewhere in the optical path that splits light to evaluate focusing distance or available light. A linear polarizer can sometimes interact with these features to give unpredictable focusing or exposure, and, in these cases, a circular polarizer is recommended.

Sometimes additional benefit can be gained by also polarizing the light source used, and in this case a plastic-laminated linear polarizer will usually be the most appropriate choice. These can be purchased in sheet form and can be easily cut to a desired size and configuration.

While a polarizer can selectively remove most or all of an interfering glare under certain conditions, each polarizer used will usually reduce overall transmission by about 2/3. In “light-starved” applications, this may be a significant factor. In the opposite case, when there may be too much light and stopping down the lens iris alone does not provide acceptable results, an ND filter may be necessary. Neutral-density filters appear gray, reduce the light reaching the sensor, and have no effect on color balance. A typical application is one in which the object under test emits a very bright light. In addition, ND filters can decrease depth of field by allowing wider lens apertures to be used, which helps separate subjects under inspection from their foreground or background.
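The light budget for these filters is simple arithmetic. The sketch below converts a filter's transmission into photographic stops and into the exposure-time increase needed to compensate. It treats the polarizer as passing roughly one third of the light, per the figure above, and uses the standard optical-density relation T = 10^(-OD) for ND filters; the function names and the example densities are illustrative assumptions.

```python
import math

def transmission_from_od(optical_density):
    """Fraction of light passed by an ND filter of the given optical density."""
    return 10.0 ** (-optical_density)

def stops_lost(transmission):
    """Light loss in stops; each stop is a halving of the light."""
    return math.log2(1.0 / transmission)

def exposure_multiplier(transmission):
    """Factor by which exposure time must grow to keep the same signal level."""
    return 1.0 / transmission

# Polarizer passing about one third of the light
polarizer_t = 1.0 / 3.0
print(f"polarizer: {stops_lost(polarizer_t):.2f} stops, "
      f"x{exposure_multiplier(polarizer_t):.1f} exposure")

# Example ND optical densities (assumed values)
for od in (0.3, 0.6, 0.9):
    t = transmission_from_od(od)
    print(f"ND {od}: passes {t:.1%}, {stops_lost(t):.2f} stops")
```

A single polarizer therefore costs a little over one and a half stops, which in a light-starved application means roughly tripling the exposure time or the illumination.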

JASON DOUGHERTY is sales engineer at Midwest Optical Systems, Palatine, IL, USA; www.midwestopticalsystems.com.