IMAGE SENSORS: Neuromorphic vision sensors emulate the human retina

Although digital image sensor technology has advanced significantly over the past thirty years, most of today's CMOS and CCD designs do not approach the biological sophistication of the human retina or the human visual system. Doing so requires circuits that mimic neuro-biological architectures, a concept known as neuromorphic engineering that was pioneered by Carver Mead in the late 1980s.

This biological inspiration is the basis of the Dynamic Vision Sensor (DVS), a 128 x 128 pixel vision sensor developed by Professor Tobi Delbruck and his colleagues at the Institute of Neuroinformatics (Zurich, Switzerland; www.ini.uzh.ch).

Unlike conventional image sensors, from which image data is clocked synchronously, the DVS is an asynchronous device that outputs events only when the brightness changes at a pixel. This reduces both the power the device requires and the data bandwidth. Cameras that incorporate the device, such as the DVS 128 camera from iniLabs (Zurich, Switzerland; www.inilabs.com), can therefore be designed with little on-board memory and low-cost interfaces (such as USB 2.0).
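As a rough illustration of the bandwidth argument, the sketch below compares the raw data rate of a frame-based readout with that of an event-based readout. Only the 128 x 128 resolution comes from the article; the frame rate, bytes per pixel, event rate, and bytes per event are hypothetical figures chosen for illustration, not measurements or specifications of the DVS.

```python
def frame_data_rate(width, height, bytes_per_pixel, frames_per_second):
    """Bytes per second when every pixel is read out in every frame."""
    return width * height * bytes_per_pixel * frames_per_second


def event_data_rate(events_per_second, bytes_per_event):
    """Bytes per second when only pixels that changed report an event."""
    return events_per_second * bytes_per_event


# Hypothetical operating point (assumed values, not DVS specifications).
frame_rate = frame_data_rate(128, 128, bytes_per_pixel=1, frames_per_second=1000)
event_rate = event_data_rate(events_per_second=50_000, bytes_per_event=4)

print(f"frame-based: {frame_rate} bytes/s")
print(f"event-based: {event_rate} bytes/s")
```

The event rate depends on how much of the scene is changing, so a busy scene narrows the gap while a mostly static one widens it.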

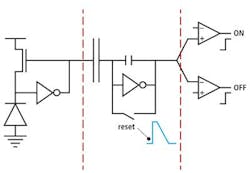

To detect brightness changes as they occur, the pixel circuit consists of an active logarithmic front end followed by a switched-capacitor differentiator and a pair of comparators. As light falls on the photodiode (far left), it generates a photocurrent, which is supplied by the source of a MOS transistor whose gate is controlled by an inverting high-gain voltage amplifier. The amplifier input is also connected to the photodiode. As more light falls on the photodiode, the voltage at the output of the logarithmic front end increases logarithmically. The feedback holds the photodiode at a fixed "virtual ground" voltage, which increases the speed of the circuit and buffers its output.
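One consequence of a logarithmic response can be shown with a short numerical sketch: equal brightness ratios produce equal voltage steps, whatever the absolute light level. The model below is an idealization, and the function name `log_front_end` and the constants `v_offset` and `volts_per_e_fold` are hypothetical values chosen for illustration, not figures from the DVS design.

```python
import math


def log_front_end(photocurrent, v_offset=0.5, volts_per_e_fold=0.04):
    """Idealized output voltage of a logarithmic photoreceptor.

    v_offset and volts_per_e_fold are assumed constants, not DVS values.
    """
    if photocurrent <= 0:
        raise ValueError("photocurrent must be positive")
    return v_offset + volts_per_e_fold * math.log(photocurrent)


# Doubling the photocurrent gives the same voltage step in a dim scene
# (picoamps) as in a bright one (nanoamps).
dim_step = log_front_end(2e-12) - log_front_end(1e-12)
bright_step = log_front_end(2e-9) - log_front_end(1e-9)
print(dim_step, bright_step)
```

Because the step depends only on the ratio of the two photocurrents, a later stage that looks at changes in this voltage responds to relative rather than absolute changes in brightness.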

Although a brightness level is calculated at every pixel, achieving a sparse asynchronous output like that of the biological retina requires determining the brightness changes of each pixel as they occur. To accomplish this, the log(I) value is first DC filtered using a capacitor and fed to a self-timed switched-capacitor differentiator. Here, any change in the log(I) value is amplified and finally compared using two separate quantizers (far right).
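The change detection described above can be sketched in software for a single pixel. This is a behavioral model only, not the circuit: the function name `dvs_pixel_events` and the `threshold` value are hypothetical, the input is a list of sampled intensities rather than a continuous signal, and the model emits at most one event per sample and then takes the current value as its new reference.

```python
import math


def dvs_pixel_events(intensities, threshold=0.15):
    """Return (sample_index, polarity) events for one simulated pixel.

    polarity is +1 when log intensity rose by at least `threshold` since the
    last event and -1 when it fell by at least `threshold`.
    """
    events = []
    reference = math.log(intensities[0])  # log brightness at the last event
    for i, intensity in enumerate(intensities[1:], start=1):
        delta = math.log(intensity) - reference
        if delta >= threshold:
            events.append((i, +1))
            reference = math.log(intensity)  # new reference after the event
        elif delta <= -threshold:
            events.append((i, -1))
            reference = math.log(intensity)
    return events


# A pixel that brightens once and then darkens once; unchanged samples
# produce no output at all.
print(dvs_pixel_events([100, 100, 120, 120, 90, 90]))
```

The two branches play the role of the two quantizers: one fires on increases, the other on decreases, and a constant input produces an empty event list.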

If the brightness at a pixel has changed from dark to light, or vice versa, since that pixel last sent an event, the comparators trigger on-chip communication logic that sends the row and column address of the pixel and the polarity of the intensity change off-chip. Sending the address also resets the pixel so that it memorizes the new brightness value. In this way, only the intensity change of each pixel and its location need to be transferred from the camera to the host computer.
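To make the idea of sending an address and a polarity concrete, the sketch below packs one event into a single integer word and unpacks it again. The bit layout is a hypothetical one chosen for illustration (7 bits of row, 7 bits of column, 1 polarity bit, enough for a 128 x 128 array); it is not the actual output format of the DVS, and `encode_event` and `decode_event` are illustrative names.

```python
ARRAY_SIZE = 128  # rows and columns, from the 128 x 128 sensor


def encode_event(row, col, brightening):
    """Pack (row, col, polarity) into a 15-bit word (assumed layout)."""
    if not (0 <= row < ARRAY_SIZE and 0 <= col < ARRAY_SIZE):
        raise ValueError("pixel address out of range")
    # bits 14..8: row, bits 7..1: column, bit 0: polarity
    return (row << 8) | (col << 1) | int(bool(brightening))


def decode_event(word):
    """Unpack a word produced by encode_event."""
    row = (word >> 8) & 0x7F
    col = (word >> 1) & 0x7F
    brightening = bool(word & 1)
    return row, col, brightening


word = encode_event(5, 100, True)
print(word, decode_event(word))
```

Each event carries only a pixel location and a sign, so the host never receives the unchanged pixels.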

To analyze this data, Delbruck and his colleagues have developed a software framework that processes the asynchronous data from cameras such as the iniLabs DVS 128 and extracts information from it. Currently, both this camera and the software are being used by the Institute of Fluid Dynamics in Zurich, Switzerland for particle tracking and at the University of Oslo (Oslo, Norway) for the analysis of hydrodynamic events. Delbruck's team has also demonstrated a number of other applications, including particle tracking velocimetry (PTV), mobile robotics, and sleep disorder research (see http://bit.ly/ualvFt).
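As a minimal illustration of how a host program might extract information from such an event stream, the sketch below estimates the position of a moving object as the centroid of the events that fall within a time window. It does not use or reproduce the framework mentioned above: the function `event_centroid` and the `(timestamp_us, row, col, polarity)` tuple format are assumptions, including the assumption that each event carries a microsecond timestamp.

```python
def event_centroid(events, t_start_us, t_end_us):
    """Mean (row, col) of events with t_start_us <= timestamp < t_end_us.

    Each event is a (timestamp_us, row, col, polarity) tuple (assumed format).
    Returns None if the window contains no events.
    """
    window = [(row, col) for t, row, col, _ in events
              if t_start_us <= t < t_end_us]
    if not window:
        return None
    n = len(window)
    mean_row = sum(row for row, _ in window) / n
    mean_col = sum(col for _, col in window) / n
    return mean_row, mean_col


# Two events near (11, 21) inside the window, one later event outside it.
events = [(0, 10, 20, 1), (100, 12, 22, -1), (5000, 100, 100, 1)]
print(event_centroid(events, 0, 1000))
```

Sliding the window forward in time yields a sequence of centroids, which is one simple way to follow a single moving object when the background is static and therefore silent.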

While such developments can only approximate the workings of the human retina, Delbruck is fully aware of the spatio-temporal nature of human vision. To aid in such research, the next generation of the DVS will incorporate both an Active Pixel Sensor (APS) and a Dynamic Vision Sensor in its design. In this way, dynamic, low-latency asynchronous data and static image data can be processed simultaneously.


About the Author

Andy Wilson

Founding Editor

Founding editor of Vision Systems Design. Industry authority and author of thousands of technical articles on image processing, machine vision, and computer science.

B.Sc., Warwick University
