Developers of the Cadillac (Warren, MI, USA; www.cadillac.com) Elmiraj, a concept car that modernizes the classic two-door grand coupe, used 3D scanning technology to bridge the design process between traditional hand-sculpting teams and digital modeling teams.

3D scanning influenced the creation of the Elmiraj, which was showcased at the Los Angeles International Auto Show from November 22 through December 1 last year. A video from Cadillac shows Mike Nolan of GM Design Fabrications Operations using a blue light scanner to capture a 3D image of the car. Although GM (Detroit, MI, USA; www.gm.com) does not specifically mention the make or model of the scanner, the one used was an ATOS Compact Scan 5M from GOM (Braunschweig, Germany; www.gom.com).

The ATOS Compact Scan 5M scanner features a 1,200 x 900 mm measuring area, point spacing of 0.017 to 0.481 mm, and a working distance of 450 to 1,200 mm. The scanner uses projected light patterns and an industrial camera to capture 3D shapes and translate them into data that can be manipulated in digital modeling programs. GM has used 3D scanning since 2001, but more on clay interior and exterior properties than on drivable concept cars. With the Elmiraj, GM designers used 3D scanners to validate nearly every pattern, mold, and part during the development of the vehicle.
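As a rough consistency check on the figures quoted above, the following sketch estimates how many measurement points would cover the full measuring area at the coarsest quoted point spacing. It assumes the points lie on a uniform rectangular grid, which the source does not state, and the function name `grid_points` is purely illustrative.

```python
def grid_points(width_mm: float, height_mm: float, spacing_mm: float) -> int:
    """Estimate the number of points on a uniform grid.

    The uniform grid is an assumption made for illustration; it is not
    a published specification of the scanner.
    """
    cols = int(width_mm / spacing_mm)   # whole points across the width
    rows = int(height_mm / spacing_mm)  # whole points across the height
    return cols * rows


# Measuring area and coarsest point spacing as quoted in the article.
points = grid_points(1200.0, 900.0, 0.481)
print(f"{points / 1e6:.2f} million points")
```

Under these assumptions the estimate comes to a little under five million points per scan, which would be consistent with the "5M" in the model name if that refers to a camera of about five megapixels.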

The scanners were also used to highlight distortions that represent curves or contours. The individual scans are digitally stitched together, and the resulting data is used to create a full-scale model. Data for portions of the vehicle can also be used for rapid prototyping of parts, according to GM.

Software recognizes objects without human calibration

A smart recognition algorithm developed by Brigham Young University (BYU) researchers is claimed to accurately identify objects in images or video sequences without human calibration. Dah-Jye Lee, a BYU engineer, developed the algorithm with his students with the intention of allowing the computer to decide which features are important. The algorithm sets its own parameters and does not require a reset each time a new object is to be recognized. Instead, it learns them autonomously.

Lee suggests that the idea is similar to teaching a child the difference between dogs and cats: instead of trying to explain the difference, one shows the child images of the animals, and the child learns to distinguish the two on their own.

In a study published in the December issue of Pattern Recognition, Lee and his students describe how they fed the algorithm four image datasets from Caltech (motorbikes, faces, airplanes, and cars) and report 100% recognition accuracy on each dataset.

When tested on a dataset of images of four fish species from BYU's biology department, the object recognition program could distinguish between the species with 99.4% accuracy.

Algorithm classifies a person by social group

Computer scientists at the University of California San Diego (UCSD, La Jolla, CA, USA; www.ucsd.edu) are developing a computer vision algorithm that uses group pictures to determine which social group, or urban tribe, a person may belong to. The algorithm, which is currently 48% accurate on average, classifies people into groups such as formal, hipster, surfer, or biker.

In the future, the team hopes that the algorithm may generate more relevant search results. The algorithm could also be used in surveillance cameras in public places to identify groups rather than individuals. In addition, an algorithm that could identify and separate people according to which social group they belong to would likely be useful in a machine vision setting such as pattern matching.

UCSD computer science professor Serge Belongie considers the algorithm's 48% accuracy a first step. Still, the rate can be regarded as a good result, given that the algorithm evaluates group pictures rather than pictures of individuals, he says.

Gaia telescope maps Milky Way

The European Space Agency's (ESA, Paris, France; www.esa.int) Gaia, which will capture images of more than one billion stars to produce the most detailed 3D map of the Milky Way galaxy ever created, blasted off on the morning of December 18 last year from Kourou, French Guiana.

Gaia's mission to create a 3D map of the Milky Way galaxy will provide detailed physical properties of each star observed, characterizing its luminosity, effective temperature, gravity, and elemental composition. The stellar census will provide observational data to address a variety of important questions related to the origin, structure, and evolutionary history of the galaxy, according to the ESA.

At the heart of the system is the largest focal plane array ever to be flown in space. This focal plane array was designed and built by Astrium (Paris, France; www.astrium.eads.net) and contains a mosaic of 106 large-area CCD image sensors manufactured by e2v (Chelmsford, Essex, England; www.e2v.com).

There are three variants of the CCD91-72 sensor, each optimized for different wavelengths in the range 250 to 1,000 nm. The CCD package is three-side buttable to minimize the dead space between CCDs when they are tiled together in the mosaic.

"Gaia will outperform its predecessor in terms of accuracy, limit magnitude and number of objects. It will pinpoint the position of stars with accuracy in the order of 10-300 microarcseconds (10 microarcseconds is the size of a ten-cent coin on the Moon, when viewed from Earth)," says Giuseppe Sarri, project manager of Gaia at the European Space Agency.
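The coin comparison in the quote can be checked with the small-angle approximation, in which the size of an object is its distance multiplied by the angle it subtends in radians. The sketch below is only an illustration: the mean Earth-Moon distance of about 384,400 km is an assumed value that does not appear in the article, and the function and constant names are hypothetical.

```python
import math

# One microarcsecond in radians: pi / (180 degrees * 3600 arcsec * 1e6).
MICROARCSEC_TO_RAD = math.pi / (180 * 3600 * 1e6)

# Mean Earth-Moon distance in meters (assumed value, not from the article).
EARTH_MOON_DISTANCE_M = 3.844e8


def subtended_size_m(angle_microarcsec: float, distance_m: float) -> float:
    """Small-angle approximation: size = distance * angle (in radians)."""
    return distance_m * angle_microarcsec * MICROARCSEC_TO_RAD


size_m = subtended_size_m(10, EARTH_MOON_DISTANCE_M)
print(f"10 microarcseconds at the Moon spans about {size_m * 100:.1f} cm")
```

With these assumptions the result is a little under 2 cm, which is roughly the diameter of a small coin.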

Gaia is also expected to discover tens of thousands of supernovas by comparing repeated scans of the sky. It is also expected, as a result of slight periodic wobbles in the positions of some stars, to reveal the presence of planets in orbit around them. Lastly, it will also likely uncover new asteroids in the solar system and refine the orbits of those already known.

Camera and image processor device aid visually impaired

Developed to assist the visually impaired, the OrCam (Jerusalem, Israel; www.orcam.com) device, which consists of a camera module and a digital image processor, clips onto a user's glasses and analyzes and interprets a given scene before aurally describing it to the user.

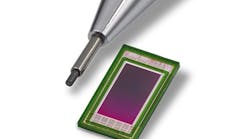

The OrCam consists of a VX6953CB camera module and the STV0987 image processor from ST (Geneva, Switzerland; www.st.com). The VX6953CB camera module features a 5 MPixel, 1/4-in. CMOS image sensor with a 1.4 x 1.4 µm pixel size. The STV0987 image processor supports a frame rate of 15 fps while providing image correction and enhancement capabilities, including a face detection and tracking algorithm, video stabilization, and digital zoom. In addition, the STV0987 features automatic contrast stretching, sharpness enhancement, programmable gamma correction, and image rotation, mirroring, and flip for the OrCam's display.
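The quoted pixel count, pixel size, and optical format can be cross-checked with a few lines of arithmetic. The sketch below assumes a 2592 x 1944 pixel array, a common 4:3 layout for 5 MPixel sensors; the article does not give the array dimensions, so that figure and the function name are illustrative assumptions.

```python
import math


def sensor_size_mm(cols: int, rows: int, pixel_um: float):
    """Return (width, height, diagonal) of the active area in millimeters."""
    width = cols * pixel_um / 1000.0   # micrometers to millimeters
    height = rows * pixel_um / 1000.0
    return width, height, math.hypot(width, height)


# Assumed 5 MPixel array (2592 x 1944) with the 1.4 um pixels quoted above.
width_mm, height_mm, diagonal_mm = sensor_size_mm(2592, 1944, 1.4)
print(f"{width_mm:.2f} x {height_mm:.2f} mm, diagonal {diagonal_mm:.2f} mm")
```

Under this assumption the active area measures roughly 3.6 x 2.7 mm with a diagonal of about 4.5 mm, which is in line with the image diagonal usually associated with the 1/4-in. optical format.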

The STV0987 and VX6953CB capture and process images in different lighting conditions and on a variety of surfaces, including newspapers and signs. The device then analyzes and interprets a given scene and aurally describes it to the user. In addition, the system is supplied with a pre-stored library of objects which a user can add to over time.

Researchers trap and transfer single cells

When developing new medications, biologists and pharmacologists test different active ingredients and chemical compounds. The main purpose is to discover how biological cells react to these substances. To do so, researchers often use a fluorescence microscope that produces digital holographic images, that is, computer-generated holograms in which the cells being studied can be viewed in 3D. The hologram is first created as an optical image, which is then digitized for recording and analysis.

To obtain precise and reliable answers to the question of how the cells react to chemical substances, each cell must be placed in an individual, hollow well on a microfluidic chip. To ensure the cells are of the same size, so that their reactions to the active agent can be compared, single cells must be transferred to the wells.

To do so, researchers at the Fraunhofer Institute for Production Technology IPT in Aachen have combined digital holographic microscopy with optical tweezers, an instrument that uses the force of a focused laser beam to trap and move microscopic objects. This tool enables the researchers to pick up selected cells, transfer them to individual wells of a microarray, and keep them trapped.

Vision-guided robot discovers sea anemones

A camera-equipped underwater robot deployed by the Antarctic Geological Drilling (ANDRILL; www.andrill.org) program has found a new species of small sea anemone living in the underside of the Ross Ice Shelf off Antarctica. The 4.5-ft cylindrical subsea remotely operated vehicle (ROV) comprises a front-maneuvering thruster module, an electronics housing unit, a rear-maneuvering thruster module, a main thruster module, and a tether attachment point. The ROV, called the Submersible Capable of under-Ice Navigation and Imaging (SCINI), has a vision system consisting of a camera module with a tilting forward camera and fish-eye lens, forward lights, a fixed downward camera, downward lights, scaling lasers, and a projection cage.

SCINI's two cameras were supplied by Elphel (West Valley City, UT, USA; www.elphel.com). The downward-looking camera was a 5 MPixel Elphel 353 with an internal FPGA that handles pipelined image processing and an embedded system running Linux. The camera features an Aptina (San Jose, CA, USA; www.aptina.com) CMOS image sensor, a region-of-interest mode, and on-chip binning. A 1.2 MPixel Elphel camera served as the forward-looking camera. To study the underside of the ice, SCINI was operated inverted, so that the larger-format camera was responsible for observing the anemones.

"ANDRILL Coulman High Project site surveys were the first time that SCINI or any other ROV was deployed through an ice shelf," Frank Rack, executive director of the ANDRILL Science Management Office at the University of Nebraska-Lincoln (UNL, Lincoln, NE, USA; www.unl.edu), told Vision Systems Design. "The ice shelf was 250-270 m thick in the areas that the dives were performed. There were a total of 14 dives at two sites over several days, with the longest dive being slightly over 9 hours long."

The white anemones, named Edwardsiella andrillae, were found burrowing into the underside of the ice. They were discovered by SCINI in 2010 but were publicly identified for the first time only in a recent article in PLOS ONE. They are the first species of sea anemone known to live in ice, and they live upside down, hanging from the ice, whereas other sea anemones live on or in the sea floor.

ANDRILL's Coulman High Project was financed by the National Science Foundation in the U.S. and the New Zealand Foundation for Research. SCINI was deployed to learn more about the ocean currents beneath the ice shelf and to provide environmental data for modeling the behavior of the ANDRILL drill string, so the discovery of a new species came as a surprise to the team.

Plans are underway for a project called Deep-SCINI, which involves further study of this environment using a robot that can explore deeper into the ocean (up to 1,000 m, compared with SCINI's 300 m limit). NASA, which is interested in microbiology and the possibility of identifying potential habitats using remote sensing capabilities, is helping to finance the project.
