March 2014 snapshots: Computer vision and Google's Project Tango, Vision system identifies tumors, 3D movie of living sperm, marsupial robot

Computer vision aids Google's Project Tango

Over the past year, Google (Mountain View, CA, USA; www.google.com) has been working with universities, research institutions, and industrial partners to develop Project Tango, which aims to give mobile devices a human-scale understanding of space and motion.

Specifically, Google wants to concentrate all of the technology gathered from its various partners into a single mobile phone containing customized hardware and software designed to track the full 3D motion of the device while simultaneously creating a map of the environment. Sensors in the phone will allow it to make 3D measurements, update its position and orientation in real time, and combine that data into a single 3D model of the space around the user.

For the device's image sensors, OmniVision Technologies, Inc. (Santa Clara, CA, USA; www.ovt.com) is supplying its OV4682 and OV7251 CMOS image sensors. OV4682 records both RGB and IR information, the latter of which is used for depth analysis. OmniVision's OV7251 global shutter sensor is used for the device's motion tracking and orientation. In addition, the Myriad 1 computer vision processor from Movidius (San Mateo, CA, USA; www.movidius.com) helps to process computer vision data.

Google is distributing prototype devices to professional developers with the idea that the Tango might be used for indoor navigation/mapping, gaming, and the development of algorithms for processing sensor data.

Vision system identifies tumors

Researchers at the Cedars-Sinai Maxine Dunitz Neurosurgical Institute and Department of Neurosurgery (Los Angeles, CA, USA; http://cedars-sinai.edu/) have developed a vision system that uses a CCD camera, a synthetic version of a protein found in the venom of a deathstalker scorpion, and a near-infrared (NIR) laser to illuminate malignant brain tumors and other cancers.

Dr. Keith Black, chair and professor of the Department of Neurosurgery, says that malignant brain tumors called gliomas are among the most lethal forms of tumors, with patients typically surviving only about 15 months after diagnosis. Removing the tumor improves survival statistics, but it is impossible to see with the naked eye where a tumor stops and brain tissue starts, he explains.

To do so, a vision system developed at Cedars-Sinai acquires both white light and NIR images and combines them by superimposing them on an HD video monitor. The system employs a JAI (San Jose, CA, USA; www.jai.com) AD-130GE prism-based 2-CCD progressive area scan camera. The 1.3 MPixel camera, which is GigE Vision and GenICam compliant, features 1/3-in. CCD sensors to capture visible and NIR images.

A 16mm VIS-NIR compact fixed focal length lens from Edmund Optics (Barrington, NJ, USA; www.edmundoptics.com) was attached to the camera using a C-Mount. An NT56-353 C-Mount thin lens mount holder from Edmund Optics, attached in front of the lens, housed a 785-nm notch filter that removes excitation light from the return image. An LD785-SH300 785-nm laser diode from Thorlabs (Newton, NJ, USA; www.thorlabs.com) was used for NIR excitation, while white light was provided by a commercially available xenon light source from Storz (Tuttlingen, Germany; www.karlstorz.com). White light and NIR fluorescence images were captured via the camera's GigE interface.
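The superimposition of white light and NIR images described above can be illustrated with a short sketch. This is not the Cedars-Sinai software, whose details are not given here; it simply assumes the two images are already pixel-aligned and blends a tint into the white light image wherever the NIR fluorescence signal is strong. The function name, the threshold, the blend weight, and the green tint are all hypothetical choices made for illustration.

```python
def overlay_nir(white_rgb, nir, threshold=0.2, alpha=0.6, tint=(0, 255, 0)):
    """Blend a tint into white light pixels where the NIR signal is strong.

    white_rgb: rows of (r, g, b) tuples with values 0-255.
    nir:       rows of floats in [0, 1] with the same shape (assumed aligned).
    threshold, alpha, tint: illustrative values, not taken from the article.
    """
    if len(white_rgb) != len(nir):
        raise ValueError("images must have the same number of rows")
    out = []
    for rgb_row, nir_row in zip(white_rgb, nir):
        if len(rgb_row) != len(nir_row):
            raise ValueError("images must have the same number of columns")
        out_row = []
        for pixel, signal in zip(rgb_row, nir_row):
            if signal < threshold:
                # Weak fluorescence: show the white light pixel unchanged.
                out_row.append(tuple(pixel))
            else:
                # Strong fluorescence: mix in the tint in proportion to the signal.
                weight = alpha * signal
                out_row.append(tuple(
                    round((1 - weight) * c + weight * t)
                    for c, t in zip(pixel, tint)
                ))
        out.append(out_row)
    return out
```

Thresholding before blending keeps background noise in the NIR channel from discoloring normal tissue in the combined view; a real system would also need to register the two sensor images and calibrate the fluorescence signal.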

"Gliomas have tentacles that invade normal tissue and present big challenges for neurosurgeons: Taking out too much normal brain tissue can have catastrophic consequences, but stopping short of total removal gives remaining cancer cells a head start on growing back. That's why we have worked to develop imaging systems that will provide a clear distinction - during surgery - between diseased tissue and normal brain tissue," he says.

The imaging agent, which is based on the protein found in the Israeli deathstalker scorpion, is called Tumor Paint BLZ-100, a product of Blaze Bioscience (Seattle, WA, USA; www.blazebioscience.com). The synthetic protein, called chlorotoxin, was bonded to an NIR dye, indocyanine green (ICG), a version of which is already approved by the FDA. The resulting contrast agent attaches to brain tumors and, when stimulated by the laser, emits a glow that is invisible to the eye but imaged by the vision system.

In studies performed on laboratory mice with implanted human brain tumors, the system delineated tumor tissue from normal brain tissue. Because NIR light penetrates deep into tissue, the system also identified tumors that had migrated away from the main tumor and would otherwise have evaded detection.

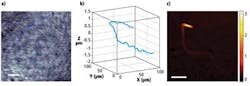

Scientists capture 3D movie of living sperm

A team of scientists from four European institutions has developed a vision-based tracking system that may provide physicians with a technique to help assess the viability of sperm used in in vitro fertilization (IVF).

Sperm cell mobility is a key predictor of IVF success. This vision system acquires 3D images of the real-time movement and behavior of living sperm using a JAI (San Jose, CA, USA; www.jai.com) CV-M4 monochrome progressive scan camera. In addition to showing the movement and behavior of the sperm, the camera provides 3D images of the sperm's form and structure to detect potential infertility-causing anomalies, such as a "bent tail." Currently, sperm concentration and mobility are assessed either by subjective visual evaluation or by a process known as computer-assisted sperm analysis, which only allows tracking and imaging in 2D.

With the newly developed method, researchers can visualize live sperm in space and time, says Giuseppe Di Caprio of the Institute for Microelectronics and Microsystems of the National Research Council (CNR, Naples, Italy; www.na.imm.cnr.it) and Harvard University (Cambridge, MA, USA; www.harvard.edu).

In operation, laser light is separated into two beams. One beam is transmitted through a dish containing live sperm cells, and this is then recombined with the second beam.
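The recombination of the two beams can be illustrated numerically. The sketch below is not the researchers' code; it assumes an idealized one-dimensional line of camera pixels, a reference beam tilted by a fixed phase step per pixel, and an object beam whose phase is delayed by the sample. The function name, the tilt parameter, and the unit amplitudes are hypothetical. The camera records only intensity, the squared magnitude of the summed fields, so the phase delay introduced by a cell shows up as a shift in the bright and dark fringes.

```python
import cmath
import math


def hologram_intensity(object_phases, tilt_per_pixel=math.pi / 2,
                       ref_amp=1.0, obj_amp=1.0):
    """Intensity recorded where an object beam and a reference beam overlap.

    object_phases:  phase delay in radians added by the sample at each pixel.
    tilt_per_pixel: phase step of the tilted reference beam between pixels
                    (an assumed geometry, chosen for illustration).
    """
    intensities = []
    for x, phase in enumerate(object_phases):
        obj = obj_amp * cmath.exp(1j * phase)               # beam through the sample
        ref = ref_amp * cmath.exp(1j * tilt_per_pixel * x)  # second, tilted beam
        # A sensor measures intensity: the squared magnitude of the summed field.
        intensities.append(abs(obj + ref) ** 2)
    return intensities


# An empty dish (no phase delay) compared with a sample that delays pixel 1 by pi.
empty = hologram_intensity([0.0, 0.0], tilt_per_pixel=math.pi)
with_cell = hologram_intensity([0.0, math.pi], tilt_per_pixel=math.pi)
```

With these inputs, the second pixel is dark for the empty dish and bright once the sample delays the object beam by half a wavelength, which is the kind of fringe change from which shape and position information can later be reconstructed.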

"The superimposed beams generate an interference pattern recorded on camera," Di Caprio says. "This image is a hologram containing information relative to the morphologies of the sperm and their positioning in 3D space. Viewing a progressive series of these holograms in a real-time video, we can observe how the sperm move and determine if that movement is affected by any abnormalities in their shape and structure."

Next, the researchers plan to use the system to define the best quality sperm for IVF.

The four institutions involved in the project were the Institute for Microelectronics and Microsystems of the National Research Council (CNR) (Naples, Italy; www.na.imm.cnr.it), the National Institute of Optics of the CNR (Naples, Italy; www.ino.it/en), the Center for Assisted Fertilization (Naples, Italy; www.centrofecondazioneassistita.com), and the Free University of Brussels (Brussels, Belgium; www.vub.ac.be/en). The Rowland Institute at Harvard, Harvard University (Cambridge, MA, USA; www.rowland.harvard.edu) was also involved in the project.


Marsupial robotic system monitors rivers

RIVERWATCH is a "marsupial" robotic system composed of an autonomous surface vehicle (ASV) and an unmanned aerial vehicle (UAV), which together provide autonomous environmental monitoring of rivers. The project, which began as part of the European Clearing House of Open Robotics Development (ECHORD, Garching, Germany; www.echord.info), pairs the ASV with a piggy-backed multi-rotor UAV for the automatic monitoring of riverine environments from aerial, surface, and underwater views. This coordinated aerial, underwater, and surface-level perception will enable the autonomous gathering of environmental data. Both of the cooperative robots feature an integrated vision system.

The UAV is based on the VBrain from Virtualrobotix (Bergamo, Italy; www.virtualrobotix.it). It features open-source control software and hardware and a six-rotor configuration for additional lifting capability. Its vision system features a FLIR (Wilsonville, OR, USA; www.flir.com) Quark 336 thermal imaging camera, which is equipped with a 336 x 256 uncooled VOx microbolometer thermal imager with a 17 x 17 µm pixel size. In addition, the UAV is equipped with a GoPro (San Mateo, CA, USA; www.gopro.com) Hero 3 WiFi camera and a webcam.

RIVERWATCH's ASV is based on a 4.5m Nacra (Scheveningen, Netherlands; www.nacrasailing.com) catamaran with special carbon fiber reinforcements for the roll bars and motor supports. Its hulls have been filled with closed-cell PVC foam board, making it unsinkable. Its vision system comprises a number of cameras and sensors, including multiple Ladybug3 cameras from Point Grey (Richmond, BC, Canada; www.ptgrey.com). The ASV also features an LD-LRS2100 long range laser scanner from SICK (Waldkirch, Germany; http://www.sick.com). The scanner features infrared (905 nm) light sources with a 360° field of view and a scanning frequency of 5 Hz to 10 Hz. The scanner has an operating range of 2.5m to 250m and an IP67 enclosure. In addition, the ASV is equipped with a DeltaT 837B fixed underwater sonar from Imagenex (Port Coquitlam, BC, Canada; www.imagenex.com) and a Proflex 800 GPS-RTK positioning system from Ashtech (Sunnyvale, CA, USA; www.ashtech.com).
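To show how the data from a 360° scanner of this kind might be used, the sketch below converts one revolution of range readings into 2D points around the vehicle and discards readings outside the 2.5m to 250m operating range quoted above. It is an illustration only: it assumes the readings are evenly spaced over the full circle, and it does not use the actual SICK data format or any RIVERWATCH software. The function and constant names are hypothetical.

```python
import math

# Operating range quoted for the scanner, in meters.
MIN_RANGE_M = 2.5
MAX_RANGE_M = 250.0


def scan_to_points(ranges_m, start_angle_deg=0.0):
    """Convert one 360-degree scan into (x, y) points in the scanner frame.

    ranges_m: distances in meters, assumed evenly spaced over a full revolution.
    Readings outside the operating range are treated as invalid and dropped.
    """
    points = []
    if not ranges_m:
        return points
    step_deg = 360.0 / len(ranges_m)  # angular spacing between readings
    for i, distance in enumerate(ranges_m):
        if not (MIN_RANGE_M <= distance <= MAX_RANGE_M):
            continue  # too close or too far to trust
        angle = math.radians(start_angle_deg + i * step_deg)
        points.append((distance * math.cos(angle), distance * math.sin(angle)))
    return points


# Four readings at 0, 90, 180, and 270 degrees; two fall outside the range.
points = scan_to_points([10.0, 1.0, 20.0, 300.0])
```

At the quoted scanning frequency of 5 Hz to 10 Hz, a conversion like this would run on a fresh set of readings 5 to 10 times per second, giving the ASV a regularly updated outline of riverbanks and obstacles.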

Field tests for the RIVERWATCH system were carried out on a private lake in the Sesimbra region of Portugal. This application was the first in which an aquatic-aerial robotic team was deployed for environmental monitoring. While the initial work focused on the hardware and technology of the project, future work will be centered on making RIVERWATCH a completely autonomous system, with additional development needed to address the energy consumption of the ASV, the battery charge of the UAV, and propulsion system characterization.
