January 2015 Snapshots: 3D robot vision, UAVs, medical imaging, imaging Jupiter

ATLAS, a humanoid robot with functioning limbs and hands, recently received programming updates from the Florida Institute for Human and Machine Cognition (IHMC; Pensacola, FL, USA; www.ihmc.us) that enable it to walk more like a human, with greater agility than before.

Built by the Google-owned Boston Dynamics (Waltham, MA, USA; www.bostondynamics.com), with funding and oversight from the United States Defense Advanced Research Projects Agency (DARPA; Arlington, VA, USA; www.darpa.mil), the robot features a head-mounted MultiSense SL 3D data sensor from Carnegie Robotics (Pittsburgh, PA, USA; www.carnegierobotics.com) that comprises a UTM-30LX-EW laser from Hokuyo (Osaka, Japan; www.hokuyo-aut.jp) and a MultiSense S7 stereo camera, also from Carnegie Robotics. The camera is fitted with either a 30 fps 2MPixel CMV2000 or 4MPixel CMV4000 CMOS image sensor from CMOSIS (Antwerp, Belgium; www.cmosis.com).

The Hokuyo laser, which outputs 43,000 points per second, is axially rotated on a spindle at a user-specified speed. The MultiSense SL features on-board processing for image rectification, stereo data processing, time synchronization of laser data with a spindle encoder, and spindle motor control. The MultiSense SL then uses the returned image data to produce 3D point clouds.
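As a rough illustration of how a user-specified spindle speed trades off against point density, the sketch below divides the laser's quoted 43,000 points per second by a spindle speed. The function name and the example speeds are hypothetical and chosen only for illustration; the point rate is the only figure taken from the description above.

```python
LASER_POINTS_PER_SECOND = 43_000  # point rate quoted for the Hokuyo laser


def points_per_spindle_revolution(spindle_rev_per_second: float) -> float:
    """Return how many laser points are collected during one spindle revolution."""
    if spindle_rev_per_second <= 0:
        raise ValueError("spindle speed must be positive")
    return LASER_POINTS_PER_SECOND / spindle_rev_per_second


if __name__ == "__main__":
    # Example spindle speeds are illustrative assumptions, not device specifications.
    for speed in (0.25, 0.5, 1.0):
        points = points_per_spindle_revolution(speed)
        print(f"{speed:.2f} rev/s -> {points:,.0f} points per revolution")
```

A slower spindle collects more points per revolution, and so a denser sweep, at the cost of a longer time to cover the scene.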

The ATLAS robot has four hydraulically actuated limbs and is made of aircraft-grade aluminum and titanium. Standing 6 ft tall and weighing 330 lb, the robot was designed for a variety of search and rescue missions. During DARPA's Robotics Challenge, ATLAS robots were provided to six teams to test the robot's ability to perform various tasks, including mounting and dismounting a vehicle, driving, and using a power tool.

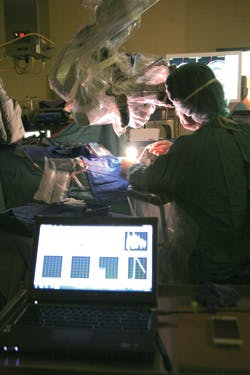

Low light camera assists in cancer treatment

Yoann Gosselin, a graduate in Biomedical Engineering at l'École Polytechnique de Montreal (Montreal, QC, Canada; www.polymtl.ca), has developed a vision system that features a low light camera, a microscope, and a spectrometer to detect a particular molecule that accumulates in malignant brain tissue, accurately revealing cancer cells.

The imaging system is used during surgery to detect cancerous cells in the brain and, when combined with radiotherapy and/or chemotherapy, will provide a better prognosis for brain cancer patients. In the system, Gosselin combined a neurosurgical microscope from Carl Zeiss (Jena, Germany; www.zeiss.com) with a spectrometer, and coupled the latter to an HNü 512 EMCCD camera from Nüvü Cameras (Montreal, QC, Canada; www.nuvucameras.com). The HNü 512 features a 512 x 512 EMCCD image sensor and achieves a frame rate of 67 fps.

With the addition of the HNü camera, the device detects the concentration of protoporphyrin IX (PpIX), a molecule that specifically accumulates in malignant brain tissue, with increased sensitivity. The camera helps by accurately defining the boundaries between healthy and cancerous cells so that cancer cells can be extracted during surgery, while providing a full image of the exposed brain area. In addition, the camera increases the number of diseased cells that can be detected, enhancing the procedure's efficiency.

UAVs use hyperspectral sensors for ocean research

Researchers from Columbia University (New York, NY, USA; www.columbia.edu) will use an unmanned aerial vehicle (UAV) to investigate climatological changes in the Arctic Ocean around Svalbard, Norway. In the remote sensing project, the UAV will assess ocean and sea ice variability in this Arctic zone. The UAV will be fitted with two Micro-Hyperspec hyperspectral cameras from Headwall Photonics (Fitchburg, MA, USA; www.headwallphotonics.com). The first of these, the A-Series, covers the visible and near-infrared (VNIR) spectrum (400-1000 nm). The camera is fitted with a silicon CCD focal plane array with a 7.4 x 7.4 μm pixel size and features a 1.9 nm spectral resolution, a dynamic range of 60 dB, and a frame rate of 90 fps.

Headwall's Micro-Hyperspec T-Series, the UAV's second camera, covers the near-infrared (NIR) spectrum (900-1700 nm). The TE-cooled camera features an InGaAs infrared focal plane array with a 25 x 25 μm pixel size, a dynamic range of 76 dB, and a frame rate of 100 fps. Together, the two sensors, along with Headwall's Hyperspec III airborne software, will be used to measure sea ice and solar warming.
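To put the two quoted dynamic range figures on a linear scale, the sketch below converts decibels to a signal ratio. It assumes the 20·log10 convention that is common for image sensors; the article does not say which convention the 60 dB and 76 dB figures use, and the function name is hypothetical.

```python
def db_to_ratio(dynamic_range_db: float) -> float:
    """Convert a dynamic range in dB to a linear ratio, assuming dB = 20 * log10(ratio)."""
    return 10 ** (dynamic_range_db / 20)


if __name__ == "__main__":
    # dB values are the figures quoted for the two cameras.
    for name, db in (("A-Series (VNIR)", 60.0), ("T-Series (NIR)", 76.0)):
        print(f"{name}: {db:.0f} dB -> about {db_to_ratio(db):,.0f}:1")
```

Under that assumption, 60 dB corresponds to a ratio of 1,000:1 between the largest and smallest distinguishable signals, and 76 dB to a ratio several times larger.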

"The UAV allows scientists to measure in places that typically are impossible to reach using ships or manned aircraft," said Christopher Zappa, a Lamont Research Professor at Columbia's Lamont-Doherty Earth Observatory. "The combination of Micro-Hyperspec and Headwall's Hyperspec III airborne software will allow for the successful collection, classification, and interpretation of the spectral data collected during each flight," Zappa added.

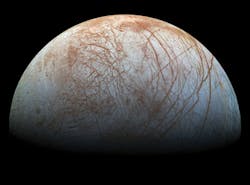

Reprocessed image shows color view of Jupiter's moon

An image captured in the 1990s by NASA's Galileo Solid-State Imaging (SSI) camera has been reprocessed to show a colorful, stunning view of Jupiter's icy moon Europa. Images of the moon were previously released as a mosaic in lower resolution and strongly enhanced color, but they have now been assembled into a realistic color view that approximates how Europa would appear to the human eye.

The optical system used in the SSI camera is a modified flight spare of the 1500 mm focal length, narrow-angle telescope flown on NASA's Voyager mission. It features an 800 x 800 pixel CCD image sensor measuring 12.19 x 12.19 mm and a field of view of 0.46°. SSI's broad-band filters allowed for the reconstruction of visible color photographs. Four of the filters were chosen to optimize performance of the SSI in the near-infrared (NIR): two for methane absorption bands (727 nm and 889 nm), one for continuum measurements (756 nm), and one to provide spectral overlap with the spacecraft's NIR mapping spectrometer (986 nm). The final filter was a clear filter centered at 611 nm with a broad (440 nm) passband.
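The quoted field of view can be checked against the focal length and sensor size with the standard thin-lens relation FOV = 2·atan(d / 2f). The sketch below is an illustrative calculation only; the function names are hypothetical, and the pixel pitch is derived here by dividing the sensor side by the pixel count rather than taken from the article.

```python
import math


def field_of_view_deg(sensor_side_mm: float, focal_length_mm: float) -> float:
    """Full angular field of view across one sensor side, in degrees."""
    return math.degrees(2 * math.atan(sensor_side_mm / (2 * focal_length_mm)))


def pixel_pitch_um(sensor_side_mm: float, pixels_per_side: int) -> float:
    """Pixel pitch in micrometers, assuming pixels tile the sensor side evenly."""
    return sensor_side_mm / pixels_per_side * 1000


if __name__ == "__main__":
    # Figures quoted for the SSI camera: 12.19 mm sensor side, 800 pixels, 1500 mm focal length.
    fov = field_of_view_deg(12.19, 1500.0)
    pitch = pixel_pitch_um(12.19, 800)
    print(f"field of view: {fov:.3f} degrees")
    print(f"pixel pitch:   {pitch:.2f} micrometers")
```

The computed field of view comes out at roughly 0.466°, consistent with the 0.46° figure given for the camera.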

Images taken with this camera through NIR, green, and violet filters were combined to produce the image. Gaps in the images were filled with simulated color based on the color of nearby surface areas with similar terrain types. The scene shows long cracks and ridges across the surface of the moon, interrupted by regions of disrupted terrain where the surface ice crust was broken up and refrozen into new patterns.

The original images were taken on Galileo's first and fourteenth orbits through the Jupiter system, in 1995 and 1998, respectively. Image scale is one mile per pixel. Galileo's mission, which launched on October 18, 1989, ended on September 21, 2003.