September snapshots: 3D microscopy, autonomous vehicles, robotics

Microscope measures 3D printed parts

For engineers using 3D printers for rapid prototyping, printed parts must be verified as accurate and within specification. To do so, Swept Image (Toronto, ON, Canada; www.sweptimage.com) has developed the SweptVue system, an adapter that replaces the observation tube of an Olympus SZX7 stereo microscope, transforming it into a digital line scanning confocal measurement system. The system uses one of the microscope's two independent and parallel optical pathways to illuminate a part with light from a digital light projector from Texas Instruments (Dallas, TX, USA; www.ti.com). The other optical pathway channels scattered light onto a Flea3 industrial camera from Point Grey (Richmond, BC, Canada; www.ptgrey.com).

Light is captured from the sample at different depths by synchronizing the time at which the light is projected across the sample with the position of the rolling shutter in the camera. The SweptVue system then collates the stack of images captured at varying depths to produce a depth map. From this, the system can automatically measure the micrometer-scale features of 3D objects.
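How the SweptVue system collates its image stack is not described here, but the general idea of turning a stack of depth slices into a depth map can be sketched in a few lines. The sketch below assumes that, for each pixel, the slice with the brightest return marks the surface height, which is a common simplification for confocal data. The function names and the sample values are hypothetical.

```python
def depth_map(stack, depths_um):
    """Build a depth map from a stack of 2D images.

    stack:     list of images, one per depth; each image is a list of rows.
    depths_um: depth (in micrometers) at which each image was captured.
    Assumption: the slice with the highest intensity at a pixel is the
    one in which the surface is in focus at that pixel.
    """
    if not stack or len(stack) != len(depths_um):
        raise ValueError("need one depth value per image in the stack")
    rows, cols = len(stack[0]), len(stack[0][0])
    result = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            # Index of the slice with the brightest return at this pixel.
            best = max(range(len(stack)), key=lambda k: stack[k][r][c])
            out_row.append(depths_um[best])
        result.append(out_row)
    return result


def height_range(dmap):
    """Difference between the deepest and shallowest points in a depth map."""
    values = [v for row in dmap for v in row]
    return max(values) - min(values)


# Three hypothetical 2 x 2 slices captured at 0, 10 and 20 micrometers.
slices = [
    [[9, 1], [1, 1]],
    [[2, 8], [1, 7]],
    [[1, 2], [9, 3]],
]
dmap = depth_map(slices, [0, 10, 20])
print(dmap)
print(height_range(dmap))
```

A production system would more likely interpolate between slices to resolve heights finer than the slice spacing; the per-pixel maximum is used here only to keep the example short.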

"While cameras with rolling shutters have been criticized for their performance when imaging moving targets, these characteristics were used to our advantage in the SweptVue system," says Mr. Matt Muller, co-founder of Swept Image.

To demonstrate the effectiveness of the system as a quality assurance tool, a set of 3D printed sample parts was analyzed to determine the system's accuracy and precision. In testing, the SweptVue system showed that the dimensions of the parts varied considerably from the original design specification.

University wins autonomous underwater vehicle competition

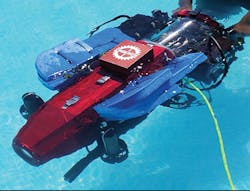

Defiance, an autonomous underwater vehicle developed by San Diego State University (San Diego, CA, USA; www.sdsu.edu), is a vision-guided submarine equipped with robotic grippers and torpedo launchers. The vehicle, which won the 18th annual RoboSub competition promoted by the Association for Unmanned Vehicle Systems International (AUVSI) Foundation (Arlington, VA, USA; www.auvsifoundation.org), uses two cameras for object detection and navigation.

For the vehicle's vision system, the team chose two DFK 23U274 color cameras from The Imaging Source (Bremen, Germany; www.theimagingsource.com). The DFK 23U274 is a USB 3.0 camera with a 1.9Mpixel Sony (Tokyo, Japan; www.sony.com) ICX274AQ CCD image sensor that has a 4.4 μm pixel size and runs at 20 fps.

Both cameras are located in the frontal hull section of the vehicle, with one used for forward vision and the other for downward vision. The forward-facing camera uses a wide-angle lens and is used for object detection and navigation. The downward-facing camera is responsible for tracking depth and the course path and for detecting obstacles. Using OpenCV, the team developed its own set of algorithms for detecting objects.
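The team's detection algorithms are not described, but a common first step in this kind of task is to threshold an image by color and take the centroid of the matching pixels. The sketch below shows that idea in plain Python so that it runs without any libraries; it is a stand-in, not the team's method. With OpenCV, the same two steps would typically be done with `cv2.inRange` and `cv2.moments`. The function name, color bounds and test image are hypothetical.

```python
def find_target(image, lower, upper):
    """Locate a colored target by thresholding.

    image: list of rows, each row a list of (R, G, B) tuples.
    lower, upper: inclusive per-channel bounds for the target color.
    Returns the (row, col) centroid of matching pixels, or None if
    no pixel falls inside the bounds.
    """
    count = 0
    row_sum = 0
    col_sum = 0
    for r, row in enumerate(image):
        for c, pixel in enumerate(row):
            in_range = all(lo <= v <= hi
                           for v, lo, hi in zip(pixel, lower, upper))
            if in_range:
                count += 1
                row_sum += r
                col_sum += c
    if count == 0:
        return None
    return (row_sum / count, col_sum / count)


# Hypothetical 3 x 4 frame: dark water with two orange pixels.
DARK = (0, 20, 40)
ORANGE = (250, 120, 10)
frame = [
    [DARK, DARK, DARK, DARK],
    [DARK, ORANGE, ORANGE, DARK],
    [DARK, DARK, DARK, DARK],
]
centroid = find_target(frame, lower=(200, 80, 0), upper=(255, 160, 60))
print(centroid)
```

The centroid's offset from the image center could then be used as a steering error, although how Defiance uses its detections is not stated in the article.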

Defiance is equipped with a mini-ITX computer from Asus (Taipei, Taiwan; www.asus.com) with 8GBytes of RAM and a 256GByte SSD, a USB to RS-232 communications hub, a sensor interface board and a backplane with eight daughter cards and terminal block connections. It also features power monitoring and under-voltage detection, two thruster control boards, hydrophones and a direction rendering analysis system (HYDRAS).

In addition to the cameras, the vehicle's sensor payload includes four hydrophones from Sparton (DeLeon Springs, FL, USA; www.spartonnavex.com) and three pressure transducers from Measurement Specialties (Hampton, VA, USA; www.meas-spec.com).

Autonomous truck granted license to operate

The Freightliner Inspiration, a vision-guided autonomous commercial truck from Daimler Trucks North America (DTNA; Portland, OR, USA; www.daimler-trucksnorthamerica.com), is the first licensed truck of its kind to drive on U.S. public highways. The truck is designed to reduce accidents, fuel consumption and highway congestion. Still only a concept vehicle, the truck was recently unveiled to hundreds of international news media representatives, industry analysts and officials at a ceremony at the Hoover Dam in Nevada.

On board the Freightliner Inspiration is the Highway Pilot, a system that combines camera and radar technology to provide lane stability, collision avoidance, speed control, braking, steering, and a dashboard display to allow for safe autonomous operation on public highways. The system consists of a stereo camera in the center of the windshield, an HMI display and a radar sensor.

The radar unit in the center of the truck scans the road at long and short ranges. The area ahead of the truck is scanned by the stereo camera, which has a range of 328 ft (100 m), detects lane markings and interfaces with the Highway Pilot steering.

Before the Nevada Department of Motor Vehicles granted the company a license to operate the truck on public roads in the state, DTNA's Freightliner underwent extensive testing. After the license was granted, Nevada Governor Brian Sandoval took part in the ceremonial first drive of the truck in autonomous mode. The prototype is a Level 3 autonomous vehicle: it can perform autonomous tasks including maintaining legal speed, staying in the selected lane, keeping a safe braking distance from other vehicles, and slowing or stopping based on traffic and road conditions, but the driver can take control at any time.

"The Freightliner is not a driverless truck; the driver is a key part of a collaborative vehicle system," said Richard Howard, Senior Vice President, Sales & Marketing, DTNA. "With the Freightliner, drivers can optimize their time on the road while handling logistical tasks, from scheduling to routing."

Amazon holds robotics picking challenge

Held at the IEEE's (New York, NY, USA; www.ieee.org) 2015 International Conference on Robotics and Automation in Seattle, WA, USA, Amazon's first robotics picking challenge tasked entrants with building a robot that performs the same task as a stock person.

Amazon's automated warehouses eliminate much of the walking a stock person does to retrieve items, but commercially viable automated picking in unstructured environments remains a challenge. To advance this technology, 31 entrants were challenged to build a system to pick items from shelves. The robots were presented with a stationary, lightly populated inventory shelf and were asked to pick a subset of the products and place them on a table. The robots needed to perform object recognition, pose recognition, grasp planning, compliant manipulation, motion planning, task planning, task execution, and error detection and recovery.

Entrants were allowed to use humanoid robots or other robots of their choice and, for contestants who were unable to bring a robot, Amazon provided PR2 robots from Clearpath Robotics (Kitchener, ON, Canada; www.clearpathrobotics.com) to use as a base platform. The robots were scored by how many items they picked within a fixed amount of time, with $26,000 in prizes awarded ($20,000 for 1st, $5,000 for 2nd, and $1,000 for 3rd).

Taking home the top prize was Team RBO from the Technical University of Berlin (Berlin, Germany; www.tu-berlin.de), while Team MIT (Cambridge, MA, USA; www.web.mit.edu) took second place. Team Grizzly, from Dataspeed (Troy, MI, USA; www.dataspeedinc.com) and Oakland University (Rochester, MI, USA; www.oakland.edu), took third prize.

"By synchronizing the illumination pattern on the part with the rolling shutter, light returned from different heights on the part is shifted laterally at the sensor, enabling features to be captured at specific depths"