3D vision and industrial robots combine to label fruit products

Ross Horrigan

For many fresh produce wholesalers and supermarkets, applying price and promotional labels demands significant manpower and substantial expense. Hence, many of the high-volume lines that apply labels to fruit such as apples and oranges have been automated for many years.

For lower-volume products such as pomegranates or melons, however, it is a different story. Because of the sheer physical diversity of such products and the complexity of handling them, labeling has, until now, remained a manual process in which labels are applied by hand before the produce is repackaged.

Recently, Loop Technology (Dorchester, England; www.looptechnology.com) has developed an adaptable, vision-based robotic system that can affix labels to such produce. Using a 3D camera, the system identifies the produce moving through the cell, prints labels of different shapes and sizes, and uses a pair of industrial robots to apply them to a specific location on the surface of the produce (Figure 1).

Boxes of produce loaded onto a conveyor first pass through a vision enclosure where target fruit are identified. Labels are printed as required to minimize wastage, and the robots optimize the picking and placing of labels for efficient operation. The two robots cooperate to share the workload to apply single or multiple labels to the fruit.
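The article does not say how the two robots divide the work. A minimal sketch of one plausible policy, greedy list scheduling, gives each labeling job to whichever robot becomes free first; the `Robot` class, `assign_jobs` function, and job durations are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Robot:
    name: str
    busy_until: float = 0.0  # time (s) at which this robot finishes its queued jobs
    jobs: list = field(default_factory=list)


def assign_jobs(job_durations, robots):
    """Greedy list scheduling: each job goes to the robot that becomes free first.

    Ties are broken in list order because min() returns the first minimum.
    """
    for job_id, duration in enumerate(job_durations):
        robot = min(robots, key=lambda r: r.busy_until)
        robot.jobs.append(job_id)
        robot.busy_until += duration
    return robots


robots = assign_jobs([2.0, 1.0, 1.0, 2.0], [Robot("A"), Robot("B")])
for r in robots:
    print(r.name, r.jobs, r.busy_until)
```

A real cell would also have to weigh each robot's reach and the position of the box on the conveyor, which this sketch ignores.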

System architecture

The automated labeling system is controlled by a Dell (Round Rock, TX, USA; www.dell.com) PC with an Intel (Santa Clara, CA, USA; www.intel.com) Core i7 processor, which runs the human-machine interface (HMI), machine vision, and machine sequencing software. The PC is interfaced to a CX series embedded PC from Beckhoff (Verl, Germany; www.beckhoff.com) that provides real-time control of the system, while a PLC from Pilz (Ostfildern, Germany; www.pilz.com) monitors the status of key elements around the robotic cell, such as the emergency stop buttons and the door-locking mechanism on the cell entrance.

Once containers of fruit have been loaded into the automated labeling system, the Beckhoff CX series embedded PC moves them from a roller conveyor onto a driven conveyor belt. The boxes of produce then enter the vision enclosure, where a Senz3D time-of-flight (ToF) camera from Creative (Singapore, Singapore; www.creative.com) illuminates the produce with a modulated infrared light source and captures the reflected light. Data from the camera are transferred over a USB 2.0 interface to the multi-core PC, where the time-of-flight data are analyzed to produce a 3D point cloud of the boxes of produce and the conveyor beneath.
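Assuming continuous-wave phase measurement, a time-of-flight camera with a modulated light source infers distance from the phase shift between the emitted and reflected light, d = c·Δφ / (4π·f), where f is the modulation frequency. The sketch below illustrates that relationship only; the 30 MHz modulation frequency is an assumed value, not a published Senz3D figure.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def phase_to_distance(phase_rad, mod_freq_hz):
    """Distance (m) for a continuous-wave ToF pixel, from the measured phase shift.

    The light travels out and back, hence 4*pi rather than 2*pi in the denominator.
    """
    phase = phase_rad % (2 * math.pi)  # phase is only known modulo 2*pi
    return SPEED_OF_LIGHT * phase / (4 * math.pi * mod_freq_hz)


def ambiguity_range(mod_freq_hz):
    """Largest distance (m) measurable before the phase wraps around."""
    return SPEED_OF_LIGHT / (2 * mod_freq_hz)


f_mod = 30e6  # assumed modulation frequency, Hz
print(round(ambiguity_range(f_mod), 3))  # 4.997
print(round(phase_to_distance(math.pi / 2, f_mod), 3))
```

At the assumed 30 MHz, measured distances wrap around every c/(2f), roughly 5 m.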

The 3D data are vital for determining the point on the surface of the produce where the robot should apply a label, but they are also used to identify the produce: high-pass, low-pass, and scaling filters are applied to the 3D data captured by the camera to produce a 2D grayscale image. VisionPro software from Cognex (Natick, MA, USA; www.cognex.com) running on the PC then segments this image to differentiate between, and identify, the various types of fresh produce passing through the camera's field of view.
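The article does not define its high-pass and low-pass filters; they may well be spatial-frequency filters. The sketch below makes a simpler assumption, reading them as near and far depth cut-offs followed by linear scaling, to show how a depth map can become an 8-bit image that 2D vision tools can segment. The function name and thresholds are hypothetical.

```python
def depth_to_grayscale(depth_mm, near_mm, far_mm):
    """Map a depth image (list of rows, in mm) to 8-bit grayscale.

    Depths outside [near_mm, far_mm] (or missing, None) are cut to 0; inside
    the band, nearer surfaces are brighter, scaled linearly to 0..255.
    """
    span = far_mm - near_mm
    image = []
    for row in depth_mm:
        out_row = []
        for z in row:
            if z is None or z < near_mm or z > far_mm:
                out_row.append(0)  # outside the depth band: treated as background
            else:
                out_row.append(round(255 * (far_mm - z) / span))
        image.append(out_row)
    return image


print(depth_to_grayscale([[500, 700, 900, 1200, None]], near_mm=500, far_mm=1000))
# [[255, 153, 51, 0, 0]]
```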

Image data are also used to produce targeting information for objects as they move through the system. To do so, the point cloud data for each target are extracted and processed using a 3D calibration algorithm developed by Loop Technology, which yields 3D surface data for each object in real-world coordinates. The surface data are then analyzed, and user-defined targeting parameters are applied to calculate the point on the surface of the produce where a robot should apply the label, together with the surface normal vector at that point.
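The article does not describe how the normal vector is computed. One common approach for an organized point cloud (one 3D point per camera pixel) is to take central differences along the rows and columns and form their cross product. The sketch below assumes a world frame with z pointing up toward the camera; the function names are hypothetical.

```python
import math


def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def surface_normal(points, i, j):
    """Unit surface normal at interior grid cell (i, j) of an organized point cloud.

    points[i][j] is an (x, y, z) tuple in world coordinates with z pointing up
    toward the camera; the normal is flipped, if needed, so that it faces up.
    """
    du = _sub(points[i][j + 1], points[i][j - 1])  # tangent along the row
    dv = _sub(points[i + 1][j], points[i - 1][j])  # tangent along the column
    n = _cross(du, dv)
    length = math.sqrt(sum(c * c for c in n))
    if length == 0:
        raise ValueError("degenerate neighbourhood: cannot estimate a normal")
    n = tuple(c / length for c in n)
    return n if n[2] >= 0 else tuple(-c for c in n)


# A 3x3 patch of a surface tilted 45 degrees about the y axis (z = x)
patch = [[(10.0 * j, 10.0 * i, 10.0 * j) for j in range(3)] for i in range(3)]
print(surface_normal(patch, 1, 1))  # approximately (-0.707, 0.0, 0.707)
```

A robot would then approach along the reversed normal so that the label meets the skin squarely.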

Loop Technology's custom robot communication software allows the targeting data to be transferred between the PC and a pair of robot controllers. These control two KR6 R900 Agilus robots from Kuka (Augsburg, Germany; www.kuka.com) that apply the labels at predefined locations anywhere on the surface of the fruit. The robot controllers track the movement of the conveyor using two encoders, so the labels can be applied without slowing the labeling process.
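In this system the tracking is performed by the robot controllers themselves. The sketch below only illustrates the underlying arithmetic: a target measured at image-capture time is carried forward by the belt travel reported by an encoder. The conveyor direction (+x), the `mm_per_count` resolution, and the function name are assumptions.

```python
def tracked_position(target_xyz, counts_at_capture, counts_now, mm_per_count):
    """Shift a target measured at image capture by the distance the belt has moved since.

    Assumes the conveyor runs along +x in the robot frame and that the encoder
    count increases monotonically (no wrap-around handling).
    """
    travel_mm = (counts_now - counts_at_capture) * mm_per_count
    x, y, z = target_xyz
    return (x + travel_mm, y, z)


# Target seen at x = 100 mm; the belt has since advanced 500 counts at 0.25 mm/count
print(tracked_position((100.0, 50.0, 80.0), 1000, 1500, 0.25))  # (225.0, 50.0, 80.0)
```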

3D analysis

Promoted by Intel as the future of perceptual computing, the Senz3D is primarily targeted at gesture recognition applications. As a result, systems based on the camera respond well to organic surfaces such as hands, faces, and, in this case, melon skin. However, perceptual computing relies on relative changes in the scene, whereas robot positioning requires accurate 3D data in real-world coordinates.

Consequently, the depth data obtained by the camera are not immediately useful: they are not in real-world coordinates and so cannot be used to position the robots. Compounding the problem, lens distortion and time-of-flight distortion (in which objects at the periphery of the image appear farther away) come into play, giving the scene a warped curvature when rendered directly.
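Part of the peripheral effect is geometric. A ToF pixel measures distance along its own viewing ray, so a flat surface at constant depth returns longer ranges toward the edges of the image, and rendering those ranges directly as depth bends the plane. The sketch below converts a range into Cartesian camera coordinates with an ideal pinhole model; the intrinsics are hypothetical, lens distortion is ignored, and this is not Loop Technology's calibration.

```python
import math


def range_to_point(u, v, r, fx, fy, cx, cy):
    """Convert a ToF range r (mm) at pixel (u, v) into camera-frame (X, Y, Z) in mm.

    Ideal pinhole model with focal lengths fx, fy and principal point (cx, cy),
    all in pixels; lens distortion is ignored.
    """
    xn = (u - cx) / fx  # normalized image coordinates of the pixel's ray
    yn = (v - cy) / fy
    z = r / math.sqrt(xn * xn + yn * yn + 1.0)  # r lies along the ray, not the optical axis
    return (xn * z, yn * z, z)


# Hypothetical intrinsics. A flat surface 1000 mm from the camera returns a larger
# range at the image edge than at the centre, yet both map back to Z = 1000 mm.
FX = FY = 200.0
CX, CY = 160.0, 120.0
print(range_to_point(160, 120, 1000.0, FX, FY, CX, CY))
print(range_to_point(360, 120, 1000.0 * math.sqrt(2), FX, FY, CX, CY))
```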

To overcome this, Loop Technology developed a 3D calibration routine using a 3D checkerboard built from precision-machined blocks. Using the known width, height, and depth of the blocks, it is possible to calculate correction transforms for the various distortions present in the raw data and produce a new point cloud in Cartesian space.
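Loop Technology's routine is not published. As a much-simplified illustration of the idea, the sketch below fits a per-axis scale and offset by least squares, mapping measured block dimensions onto their known machined values. A real correction for lens and time-of-flight distortion would need a richer, spatially varying model; the function name and readings are hypothetical.

```python
def fit_linear_correction(measured, known):
    """Least-squares fit of known = a * measured + b (approximately); returns (a, b).

    measured: values read from the raw point cloud (e.g. block heights, mm)
    known:    the corresponding machined dimensions (mm)
    """
    n = len(measured)
    if n != len(known) or n < 2:
        raise ValueError("need at least two matched measurements")
    mean_m = sum(measured) / n
    mean_k = sum(known) / n
    sxx = sum((m - mean_m) ** 2 for m in measured)
    if sxx == 0:
        raise ValueError("measurements must not all be equal")
    sxy = sum((m - mean_m) * (k - mean_k) for m, k in zip(measured, known))
    a = sxy / sxx
    b = mean_k - a * mean_m
    return a, b


# Hypothetical readings: blocks 20, 40 and 60 mm high measured as 21.0, 41.5 and 62.0 mm
a, b = fit_linear_correction([21.0, 41.5, 62.0], [20.0, 40.0, 60.0])
corrected = [a * m + b for m in [21.0, 41.5, 62.0]]
print(a, b, corrected)
```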

Surface imperfections in the fruit also cause errors, producing chasms and peaks in the data where none exist. To counteract this, a surface mapping algorithm smooths these imperfections before the robot positions are calculated, creating reliable target positions for the robots.
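The article does not detail its surface mapping algorithm. A median filter is a common, swapped-in technique for this kind of problem: it removes isolated spikes and holes while largely preserving the broader shape of the surface. A minimal sketch on a small depth grid:

```python
import statistics


def median_smooth(depth, k=1):
    """Replace each pixel with the median of its (2k+1) x (2k+1) neighbourhood.

    The window is clipped at the image border. Isolated spikes ("peaks") and
    holes ("chasms") narrower than the window are removed.
    """
    rows, cols = len(depth), len(depth[0])
    smoothed = []
    for i in range(rows):
        row = []
        for j in range(cols):
            window = [depth[a][b]
                      for a in range(max(0, i - k), min(rows, i + k + 1))
                      for b in range(max(0, j - k), min(cols, j + k + 1))]
            row.append(statistics.median(window))
        smoothed.append(row)
    return smoothed


# A flat 100 mm patch with a one-pixel spike and a one-pixel hole
patch = [[100, 100, 100, 100],
         [100, 400, 100, 100],
         [100, 100, 100, 0],
         [100, 100, 100, 100]]
print(median_smooth(patch))
```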

The 3D coordinates that represent the locations on the produce where one or more labels are to be placed are transferred over an Ethernet interface to the robot controllers. When a box of produce is in range of the robots, two pneumatically driven end effectors mounted on the robot arms pick labels from two label printers and apply them to the surface of the produce (Figure 2). The fully labeled produce then leaves the cell via a roller conveyor.
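The message format used by Loop Technology's communication software is not described. As a hypothetical illustration, the sketch below packs one target (an identifier, a label slot, the surface point, and its normal) into a fixed-length binary record that could be sent over a TCP socket; the layout and function names are assumptions, and the controller side would need matching receive code.

```python
import struct

# Hypothetical message layout (little-endian, no padding):
#   uint32 target id, uint16 label slot, float32 x, y, z (mm), float32 nx, ny, nz
TARGET_FORMAT = "<IH6f"
TARGET_SIZE = struct.calcsize(TARGET_FORMAT)  # 30 bytes


def pack_target(target_id, label_slot, point, normal):
    """Serialize one label target for sending to a robot controller."""
    return struct.pack(TARGET_FORMAT, target_id, label_slot, *point, *normal)


def unpack_target(payload):
    """Inverse of pack_target, as the receiving side might implement it."""
    target_id, label_slot, x, y, z, nx, ny, nz = struct.unpack(TARGET_FORMAT, payload)
    return target_id, label_slot, (x, y, z), (nx, ny, nz)


msg = pack_target(7, 1, (100.5, -20.25, 80.0), (0.0, 0.0, 1.0))
print(len(msg), unpack_target(msg))
```

The example values are exactly representable in 32-bit floats; arbitrary coordinates lose some precision in the round trip.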

Training routine

The machine can label a diverse product range. Although many industrial labeling machines already achieve higher throughput, they are restricted to specific product types. Loop Technology's machine, however, can switch from avocados to watermelons at the touch of a button, making it ideal for low-volume produce and seasonal items.

The complexities of the system are hidden from the user behind a simple software interface that can be used to train the system to identify new products. Using a browser-based setup interface, a new product can be added to the list of products to be identified even while the machine is in operation. Training requires that a single specimen be placed on the conveyor and imaged by the camera.

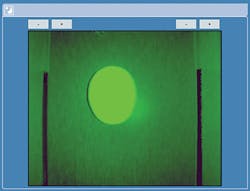

Once an image has been captured, the user can set the depth thresholds so that the specimen stands out clearly from the conveyor and any packaging, after which the system is automatically trained to recognize the produce (Figure 3). To do so, a blob analysis algorithm identifies the point cloud region containing the specimen. This data is then analyzed and quantified to produce a dataset unique to the product.
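The steps above can be sketched as a depth threshold, a blob search, and a small feature vector. The sketch assumes heights above the conveyor (conveyor depth minus measured depth) on a regular grid, uses a breadth-first flood fill for the blob analysis, and invents a simple descriptor (footprint area, maximum and mean height); the actual dataset the system builds is not described.

```python
from collections import deque


def largest_blob(height, min_height):
    """Pixels of the largest 4-connected region standing more than min_height above the conveyor."""
    rows, cols = len(height), len(height[0])
    seen = [[False] * cols for _ in range(rows)]
    best = []
    for i in range(rows):
        for j in range(cols):
            if seen[i][j] or height[i][j] <= min_height:
                continue
            # Breadth-first flood fill from this seed pixel
            blob, queue = [], deque([(i, j)])
            seen[i][j] = True
            while queue:
                a, b = queue.popleft()
                blob.append((a, b))
                for na, nb in ((a + 1, b), (a - 1, b), (a, b + 1), (a, b - 1)):
                    if (0 <= na < rows and 0 <= nb < cols and not seen[na][nb]
                            and height[na][nb] > min_height):
                        seen[na][nb] = True
                        queue.append((na, nb))
            if len(blob) > len(best):
                best = blob
    return best


def blob_features(height, blob, mm_per_pixel):
    """Hypothetical product descriptor: footprint area, maximum and mean height."""
    if not blob:
        raise ValueError("no specimen found above the threshold")
    values = [height[a][b] for a, b in blob]
    return {
        "area_mm2": len(blob) * mm_per_pixel ** 2,
        "max_height_mm": max(values),
        "mean_height_mm": sum(values) / len(values),
    }


# Height above the conveyor (mm): a specimen plus a small piece of packaging
heights = [[0, 0, 0, 0, 0],
           [0, 50, 60, 0, 0],
           [0, 55, 70, 0, 30],
           [0, 0, 0, 0, 0]]
specimen = largest_blob(heights, min_height=10)
print(blob_features(heights, specimen, mm_per_pixel=2.0))
```

Keeping only the largest blob is one simple way to ignore packaging fragments that also clear the threshold.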

The setup interface also allows a user to create a library of label types and sizes that the robot end effectors can affix to the produce. In addition, an image of each label can be imported so that an operator can visualize it on the system's monitor.

The number and position of labels to be affixed to the produce are also controlled via the setup interface. Labels are typically applied to the center of the produce, but when several labels are used, they can be positioned relative to one another. A drag-and-drop interface lets the user select a label, drag it onto a model of the produce on the screen, and drop it at the required position.

Labeling details are then stored on the system as a recipe for that particular item of produce. Each recipe determines which type of produce is to be identified, which printers supply the labels, and which specific labels are to be applied. The position and number of labels to be affixed to the produce can also be defined (Figure 4).
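A recipe of this kind maps naturally onto a small data structure. The class and field names below are hypothetical, and the article does not describe Loop Technology's storage format; label positions are stored as offsets from the center of the produce, matching the default placement described above.

```python
from dataclasses import dataclass, field


@dataclass
class LabelPlacement:
    label: str                     # entry in the label library, e.g. a price label
    printer: str                   # printer that supplies this label
    offset_mm: tuple = (0.0, 0.0)  # position relative to the centre of the produce (top view)


@dataclass
class Recipe:
    product: str                   # produce type the vision system should identify
    placements: list = field(default_factory=list)

    def label_count(self):
        return len(self.placements)

    def printers_used(self):
        return sorted({p.printer for p in self.placements})


melon = Recipe("melon", [
    LabelPlacement("price", "printer_1"),
    LabelPlacement("promotion", "printer_2", offset_mm=(40.0, 0.0)),
])
print(melon.label_count(), melon.printers_used())
```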

The approach to training and target identification makes no assumptions about how products are positioned or presented. Consequently, the system is unaffected by changes in packaging such as box size, the number of products in a box, or damaged boxes.

Ross Horrigan, Software Manager, Loop Technology, Dorchester, UK

Companies mentioned

Beckhoff

Verl, Germany

www.beckhoff.com

Cognex

Natick, MA, USA

www.cognex.com

Creative

Singapore, Singapore

www.creative.com

Dell

Round Rock, TX, USA

www.dell.com

Kuka

Augsburg, Germany

www.kuka.com

Loop Technology

Dorchester, UK

www.looptechnology.com

Pilz

Ostfildern, Germany

www.pilz.com