Airborne imager tracks targets

By R. Winn Hardin, Contributing Editor

Unmanned aerial vehicle acquires images of battleground movements that are compared and matched to a database for translation into 3-D views.

To obtain and deliver immediate battlefield reconnaissance information, the US military unmanned aerial vehicle (UAV), Predator, flies unobtrusively and acquires video images of troop, vehicle, and tank ground movements. In 2001, the Predator performed this mission over Afghanistan during the US Enduring Freedom operation.

Predator is one of several US target-tracking vehicles. For example, the X-45 is a tactical UAV under development, while the US Marine Corps' Pointer is a portable UAV that enables ground forces to "see" ahead a few kilometers. Video reconnaissance on its own, however, is of limited use because it tends to give field commanders only a narrow "soda-straw" view of the battlefield.

In the late 1990s, the success and proliferation of UAV platforms prompted the US Navy to develop a video reconnaissance system that accepts video and telemetry data from nearly all UAVs and combines them with archived elevation maps and reference photogrammetry. By combining these data streams seamlessly, the video system presents large amounts of command-and-control data in an intuitive form that most field commanders can easily understand.

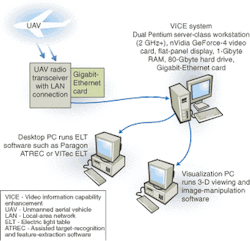

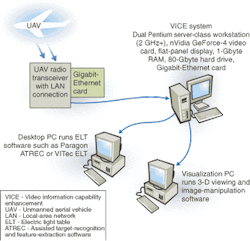

Under the US Navy's Raptor program, Sarnoff Corp. (Princeton, NJ) has field-tested a deployable command-and-control information system called the Video Information Capability Enhancement (VICE) system. Essentially structured as a server-class workstation based on dual high-clock-speed Pentium processors from Intel Corp. (Santa Clara, CA), with software and a special image-processing board, VICE accepts NTSC or PAL digital or analog video-signal transmissions from a UAV platform (see Fig. 1).

A Sarnoff-developed video card containing an 80-GFLOPS image-processing ASIC separates the video into background mosaics and foreground targets. This video information is then passed to the dual processors, which register the video data with archived orthographic images using telemetry data from the UAV and archived elevation maps (see Fig. 2). When VICE is used in combination with a Sarnoff three-dimensional (3-D) visualization module or an electronic light-table program from Paragon Imaging (Woburn, MA) or VITec Imaging (Reston, VA), a field commander can visually "fly" through the 3-D reference landscape, maneuver around and through live video overlays, and direct troops, aircraft, and ordnance.

UAV platform

Most UAV platforms contain a few basic building blocks: the aircraft; a pair of cameras (typically visible and infrared); a telemetry/dead-reckoning/global-positioning system (GPS) to determine the aircraft's attitude and location; and a radio link to transmit data and receive control commands. The use of cameras in two spectral bands makes it easier for a target-tracking system to separate close objects, such as a tree from a parked vehicle. To a mobile video camera, all targets appear to move, which rules out target identification by mainstream machine-vision methods such as frame-to-frame pixel differencing. Additionally, objects seen from a relatively short distance exhibit parallax; they move with the camera until the UAV passes overhead and then appear to retreat at rates different from that of the background scene (usually the ground in an airborne target-tracking scenario). Parallax is less of an issue from high altitudes, where the ratio of the camera-to-object distance to the object-to-background distance is higher; a higher ratio reduces the apparent parallax of Earth-bound objects.
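The altitude dependence of parallax can be checked with simple pinhole-camera arithmetic. The sketch below assumes a downward-looking camera, a focal length expressed in pixels, and a purely sideways camera move between two frames; the function name and all numbers are illustrative and are not taken from the VICE system.

```python
def residual_parallax_px(focal_px, baseline_m, altitude_m, object_height_m):
    """Image shift of an elevated point minus the shift of the ground below it.

    Assumes a nadir-looking pinhole camera that translates sideways by
    baseline_m between two frames (hypothetical setup for illustration).
    """
    ground_shift = focal_px * baseline_m / altitude_m
    object_shift = focal_px * baseline_m / (altitude_m - object_height_m)
    return object_shift - ground_shift


# A 10-m-tall object seen from three altitudes, with a 10-m camera move.
for altitude_m in (100.0, 1000.0, 10000.0):
    # camera-to-object distance divided by object-to-background distance
    distance_ratio = (altitude_m - 10.0) / 10.0
    parallax = residual_parallax_px(1000.0, 10.0, altitude_m, 10.0)
    print(f"ratio {distance_ratio:7.1f}  residual parallax {parallax:8.4f} px")
```

Under these assumptions the residual parallax, measured relative to the ground shift, is the inverse of the distance ratio, so it shrinks steadily as the UAV climbs.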

Telemetry data are crucial to identifying the location of the UAV and the camera's attitude (direction and angle) relative to the ground. The Raptor program seeks to put this "soda-straw" type of analog or digital video in a wider context of mountains, roads, and other distinct ground features so that field commanders can develop a complete battle plan and not just identify a target as located "somewhere in an area within a certain square mile."

Telemetry and camera-attitude data are crucial to weaving video reconnaissance into larger databases of archived pictures. Satellites and high-flying planes typically collect overhead images that are then processed into orthographic image projections, where each position on the ground appears as if it were being viewed from directly above.

Because the ground looks much different from directly above a position than it does from a 45° observation angle at 30,000 feet, the VICE system must bring the UAV images and the reference images into a common coordinate frame before it can automatically compare them. Using the orthographic reconnaissance database in conjunction with digital elevation measurements for various points on the archived image, the VICE system can warp the reference image so that features appear to be seen from the same direction in which the camera is pointing on the UAV and from the same altitude.
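The role of the elevation data in this warp can be illustrated with a basic perspective projection. The following is a minimal sketch, not the VICE rendering step: it assumes an ideal pinhole camera heading due north with a given depression angle, and the function names, coordinates, and focal length are hypothetical.

```python
import math


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def project_to_camera(point, camera_pos, depression_deg, focal_px):
    """Project a map point (east, north, elevation) into image coordinates.

    Hypothetical camera model: heading due north, tilted depression_deg
    below the horizon, no lens distortion. Returns (u, v) in pixels from
    the image center, with u to the right and v downward.
    """
    c = math.cos(math.radians(depression_deg))
    s = math.sin(math.radians(depression_deg))
    forward = (0.0, c, -s)   # optical axis
    right = (1.0, 0.0, 0.0)  # image u axis
    down = (0.0, -s, -c)     # image v axis (forward cross right)

    rel = tuple(p - q for p, q in zip(point, camera_pos))
    depth = _dot(rel, forward)
    if depth <= 0:
        raise ValueError("point is behind the camera")
    return (focal_px * _dot(rel, right) / depth,
            focal_px * _dot(rel, down) / depth)


# Same map position, with and without 300 m of terrain elevation,
# seen from 3000 m at a 45-degree depression angle (illustrative values).
camera = (0.0, 0.0, 3000.0)
print(project_to_camera((500.0, 3000.0, 0.0), camera, 45.0, 1000.0))
print(project_to_camera((500.0, 3000.0, 300.0), camera, 45.0, 1000.0))
```

The two printed positions differ, which is why an oblique view cannot be matched against a flat orthographic image without elevation measurements for the terrain.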

The VICE workstation uses a multiresolution search method to quickly compare the UAV images to the archived images and determine a high-probability match, thereby registering the UAV images to the real world. Then, advanced 3-D viewing software can provide a virtual tour for commanders searching for the most up-to-date information about the battlefield.
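A multiresolution search of this kind can be sketched in a few lines. The example below is an illustration only: it uses 2 x 2 block averaging instead of a true Gaussian pyramid, a sum-of-squared-differences score, and a one-pixel refinement window at each level, all of which are assumptions rather than details of the Sarnoff software.

```python
import random


def downsample(img):
    """Halve the resolution by averaging each 2x2 block."""
    h, w = len(img) // 2, len(img[0]) // 2
    return [[(img[2 * r][2 * c] + img[2 * r][2 * c + 1]
              + img[2 * r + 1][2 * c] + img[2 * r + 1][2 * c + 1]) / 4.0
             for c in range(w)] for r in range(h)]


def ssd(ref, patch, top, left):
    """Sum of squared differences between patch and ref at (top, left)."""
    return sum((ref[top + r][left + c] - patch[r][c]) ** 2
               for r in range(len(patch)) for c in range(len(patch[0])))


def best_offset(ref, patch, candidates):
    """Return the in-bounds candidate offset with the lowest SSD."""
    max_top = len(ref) - len(patch)
    max_left = len(ref[0]) - len(patch[0])
    valid = [(t, l) for t, l in candidates
             if 0 <= t <= max_top and 0 <= l <= max_left]
    return min(valid, key=lambda tl: ssd(ref, patch, tl[0], tl[1]))


def coarse_to_fine(ref, patch, levels=2):
    """Locate patch inside ref: exhaustive search at the coarsest level,
    then a +/-1 pixel refinement at each finer level."""
    refs, patches = [ref], [patch]
    for _ in range(levels):
        refs.append(downsample(refs[-1]))
        patches.append(downsample(patches[-1]))

    r, p = refs[-1], patches[-1]
    everywhere = [(t, l) for t in range(len(r) - len(p) + 1)
                  for l in range(len(r[0]) - len(p[0]) + 1)]
    top, left = best_offset(r, p, everywhere)

    for level in range(levels - 1, -1, -1):
        top, left = 2 * top, 2 * left  # carry the estimate to the finer grid
        nearby = [(top + dt, left + dl)
                  for dt in (-1, 0, 1) for dl in (-1, 0, 1)]
        top, left = best_offset(refs[level], patches[level], nearby)
    return top, left


# Synthetic reference image and a patch cut from it (illustrative data).
rng = random.Random(0)
reference = [[rng.random() for _ in range(48)] for _ in range(48)]
patch = [row[12:28] for row in reference[8:24]]
print(coarse_to_fine(reference, patch, levels=2))
```

The exhaustive comparison happens only on the smallest images, so most of the cost of a full-resolution search is avoided; a wider refinement window trades some of that saving for more tolerance to misalignment between levels.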

Data processing

According to Mike Hansen, head of advanced image-processing research at Sarnoff, VICE has been tested with a number of UAV platforms, including Predator and Pointer. Although the specific cameras and optics used on these UAV platforms vary in features, he says that VICE first sees the data as an analog video stream, typically with 720 × 480-pixel resolution at 30 frames/s. From the ground receiver (most UAV data are first beamed to a repeater transmitter on high-flying support aircraft and then beamed to ground stations for review), the pair of uncompressed video image streams is digitized by an Acadia-I PCI vision processor from Pyramid Vision Technologies (Princeton, NJ). The Acadia-I can perform encoding and decoding at rates up to 27 Mbytes/s.

Digital image data are then sent to the Acadia-I processor for a series of image-filtering steps and other transform functions. During operation, this processor takes a frame and produces a pyramid of resolutions for that image. It does this by passing the image through a low-pass Gaussian filter, which essentially averages neighboring pixels so that each pixel at the next, coarser level of the pyramid represents a small neighborhood of the level below. This engine also generates a band-pass Laplacian pyramid, which is a sequence of images that represent the differences between the successive levels of the Gaussian pyramid. These pyramids are used to eliminate the noise and vibration caused by UAV flight movements, separate the background imagery (Earth) from the foreground imagery (targets), and register the UAV video against reconnaissance image databases.
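The relationship between the two pyramids can be shown on a single scan line. This is a simplified sketch, not the Acadia-I implementation: it works in one dimension, uses a common five-tap binomial kernel as the Gaussian filter, and expands coarse levels by linear interpolation; all function names are hypothetical.

```python
KERNEL = (1 / 16, 4 / 16, 6 / 16, 4 / 16, 1 / 16)  # 5-tap binomial low-pass


def blur(signal):
    """Low-pass filter a scan line, clamping indices at the edges."""
    n = len(signal)
    return [sum(w * signal[min(max(i + k - 2, 0), n - 1)]
                for k, w in enumerate(KERNEL))
            for i in range(n)]


def reduce_level(signal):
    """Blur, then keep every second sample (one Gaussian pyramid step)."""
    return blur(signal)[::2]


def expand(signal, length):
    """Upsample back to `length` samples by linear interpolation."""
    out = []
    for i in range(length):
        pos = i / 2.0
        lo = min(int(pos), len(signal) - 1)
        hi = min(lo + 1, len(signal) - 1)
        frac = pos - int(pos)
        out.append((1 - frac) * signal[lo] + frac * signal[hi])
    return out


def gaussian_pyramid(signal, levels):
    pyramid = [list(signal)]
    for _ in range(levels):
        pyramid.append(reduce_level(pyramid[-1]))
    return pyramid


def laplacian_pyramid(gauss):
    """Band-pass levels: each Gaussian level minus the expanded next level.
    The coarsest Gaussian level is kept as the final, low-pass residual."""
    bands = [[a - b for a, b in zip(fine, expand(coarse, len(fine)))]
             for fine, coarse in zip(gauss, gauss[1:])]
    return bands + [gauss[-1]]


def reconstruct(laplacian):
    """Invert the Laplacian pyramid by expanding and adding band by band."""
    current = laplacian[-1]
    for band in reversed(laplacian[:-1]):
        current = [b + e for b, e in zip(band, expand(current, len(band)))]
    return current


scan_line = [float((i * 7) % 11) for i in range(16)]
gauss = gaussian_pyramid(scan_line, levels=3)
bands = laplacian_pyramid(gauss)
print([len(level) for level in gauss])
```

Because each band stores only what the next coarser level cannot represent, a uniform brightness offset ends up in the low-pass residual rather than in the band-pass levels, which is one reason band-pass data are less sensitive to illumination changes.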

According to Hansen's group, using a least-squares-based comparison between consecutive fields for motion detection induces errors from outliers. However, using consecutive steps of the Gaussian image pyramids with the least-squares-based approach reduces the process's sensitivity to outliers and provides a robust way to identify image regions that exhibit the most motion between frames, which is an initial step in several processes including target tracking, frame alignment, and camera motion correction. Doing the same process with the Laplacian pyramid levels reduces the appearance of motion due to changes in illumination.
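As a rough illustration of the least-squares step by itself, without the pyramid or any outlier handling, the sketch below estimates a sub-pixel shift between two scan lines. It uses a standard gradient-based linearization and is an assumption about the general approach, not the method used by Hansen's group; the function name and test signal are hypothetical.

```python
import math


def least_squares_shift(first, second):
    """Estimate the sub-pixel shift d such that second[x] ~ first[x - d].

    Linearizes brightness constancy and solves the one-parameter
    least-squares problem d = -sum(Ix * It) / sum(Ix * Ix).
    """
    num = 0.0
    den = 0.0
    for x in range(1, len(first) - 1):
        grad = (first[x + 1] - first[x - 1]) / 2.0  # spatial derivative Ix
        diff = second[x] - first[x]                 # temporal derivative It
        num += grad * diff
        den += grad * grad
    if den == 0.0:
        raise ValueError("signal has no gradient; shift is unobservable")
    return -num / den


# Synthetic scan line shifted right by half a pixel (illustrative data).
line_a = [math.sin(0.1 * x) for x in range(200)]
line_b = [math.sin(0.1 * (x - 0.5)) for x in range(200)]
print(least_squares_shift(line_a, line_b))
```

Every sample contributes to the two sums, so a single grossly wrong sample in either line pulls the estimate away from the true shift; that is the outlier sensitivity the pyramid-based approach is meant to reduce.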

After the motion structure between frames is identified, epipolar or global changes are corrected between frames to eliminate "shaky" video received from an airborne UAV. Through this registration process between frames, VICE can combine the video streams from two or more cameras and add consecutive frames to reduce noise and enhance image clarity. Key frames (essentially a sample of frames from the video stream) can be warped to normalize camera perspective and optical distortion and then stitched into larger mosaics through the use of features common to independent frames. Using the Laplacian values as the basis for a common mosaic coordinate system allows large image features to be merged over a distance comparable to their size without creating seams between images. (Seams through small image features are blended over small areas, and seams through larger image features are blended over correspondingly larger areas.)

After platform movement has been corrected between frames using the Laplacian coordinates, VICE can automatically identify moving targets using three or more frames and evaluating changes in the bandpass values across multiple frames and at different levels of the Laplacian pyramid (see Fig. 3).

Fig. 3. When static background features are filtered out, the moving objects, which include the two runners and a light pole exhibiting parallax from the camera's movement, are highlighted by the target-tracking system (d). An algorithm that filters out parallax movements in a 2-D scene using only two frames eliminates the pole and also the runners since they are all moving in the same direction and relative speed as the moving camera (e). The Sarnoff plane-plus-parallax algorithm filters out the pole but tracks the two running figures (f).
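The benefit of using three frames rather than two can be seen in a simple differencing sketch. It assumes the frames are already aligned and compares raw intensities with a fixed threshold; VICE evaluates band-pass values at several pyramid levels instead, so this is a stand-in technique with hypothetical names and data.

```python
def three_frame_motion_mask(prev, curr, nxt, threshold):
    """Flag pixels of curr that differ from BOTH the previous and next frame.

    Frames are equal-size lists of rows, assumed already aligned. Requiring
    a change against both neighbors suppresses the "ghost" that plain
    two-frame differencing leaves where the object used to be.
    """
    mask = []
    for r in range(len(curr)):
        row = []
        for c in range(len(curr[0])):
            d_prev = abs(curr[r][c] - prev[r][c])
            d_next = abs(curr[r][c] - nxt[r][c])
            row.append(min(d_prev, d_next) > threshold)
        mask.append(row)
    return mask


# A bright object (100) crossing a dark background (10), one row per frame.
frame_1 = [[100, 10, 10, 10, 10]]
frame_2 = [[10, 10, 100, 10, 10]]
frame_3 = [[10, 10, 10, 10, 100]]
print(three_frame_motion_mask(frame_1, frame_2, frame_3, threshold=30))
```

Only the object's position in the middle frame is flagged; the positions it occupied in the first and third frames are not.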

Target identification can be further enhanced by adopting a 3-D algorithm called "plane plus parallax," which establishes ground features as a base plane for analysis and then eliminates the parallax motion caused by the movement of the camera across the sky or in a pan or zoom motion, whichever is taking place.

Regions of the images from multiple frames that do not fall into either epipolar motion or parallax motion are identified as independent motion—in other words, a potential target. To ensure that targets moving in the same direction as the camera are not discounted by the plane-plus-parallax algorithm, each potential target is tagged with three features that make up an object state: motion, appearance, and shape in the image.

Establishing the object state of a potential target allows the VICE system to track the target as it moves behind other objects, stops moving, or is suddenly introduced to the scene, such as a vehicle emerging from under cover. The object state is updated for each frame using an expectation-maximization algorithm for maximum a posteriori estimation.

Where's the target?

Images are stored in 32 Mbytes of SDRAM memory on the Acadia-I processor board while the Gaussian and Laplacian pyramids are built during motion estimation and correction, mosaic stitching, and target identification. As these processes are completed, the mosaic images along with object state and pyramid data are fed across the PCI bus to 1 Gbyte of RAM in the Pentium workstation for processing by the Sarnoff video-GPS software program.

This program overlays the UAV video data on a reconnaissance imagery database. It requires a large amount of memory, hard-drive space, and processor power because the large databases of orthographic imagery must be transformed from the 2-D map seen from directly above to a 3-D map as seen from a particular angle (see Fig. 4). "We needed an industrial PC because correlating the UAV imagery with the reference imagery requires a 3-D rendering step using the digital elevation maps. If we didn't have the Acadia processor and the large PC, we couldn't do this all in [nearly] real time," explains Hansen.

The software takes in telemetry data, encoded in the vertical blanking interval or closed-captioning channels of the video stream, that represent the UAV's general position over land, along with gimbal information related to the camera's position and attitude. It renders the stored reference archive data in 3-D space to mirror the UAV's point of view. To further cut down on processing, the VICE system registers on a few key frames instead of the full 30 frames/s provided by the UAV. Again, by using the Laplacian pyramid, differences between the UAV and reference images based on illumination, weather, and ground cover (such as snow) can be reduced. The Pentium processors then compare the low-resolution UAV imagery with the 3-D-rendered reference imagery using a coarse-to-fine resolution search. Once the UAV video is registered against the archive images, the reference database is updated with the UAV video, and the new image data are available to a local-area network through a commercial gigabit-Ethernet card.

In addition to the gigabit-network interface card and the Acadia-I processor, the PC supports an nVidia (Santa Clara, CA) GeForce-4 video card, a commercial 19-in. flat-panel display, and an 80-Gbyte or larger SCSI hard drive. "We need the SCSI hard-drive capacity for our large video reference imagery database," Hansen says. "A high-end video card like the GeForce-3 or 4 card just makes things easier." According to Hansen, the VICE system uses commercial hardware and software, with all of the image processing conducted by the Acadia-I processor and firmware and the video-GPS system.