Unterschleißheim, Germany—Today’s industrial robots maneuver heavy and dangerous workpieces at high speeds, and cannot safely operate near humans, which is why most process steps are either fully automated or fully manual. Increasingly, however, it is being recognized that the best choice is to combine the strength, precision, and speed of industrial robots with the ingenuity, judgment, and dexterity of human workers.

This approach, dubbed human-robot collaboration (HRC), was the recent topic of a study at the University of Tokyo. Scientists there sought to develop and evaluate a real-time HRC system that could achieve concrete tasks, such as a collaborative "peg-in-hole" exercise, using a visual-sensing algorithm to control a robot hand interacting with a human subject. They analyzed the stability of the collaborative system in terms of speed and accuracy, as well as the conditions required for performing tasks successfully from the viewpoints of geometry, force, and posture.

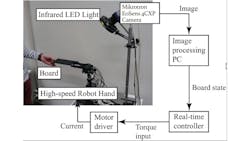

As a start, the scientists devised a vision system consisting of a camera and an image-processing PC. For the camera, they selected a Mikrotron EoSens 4CXP CoaXPress monochrome camera (MC4086) featuring a 4/3’’ CMOS sensor. The EoSens 4CXP offers 2,336 × 1,728 pixel resolution at speeds up to 563 frames per second (fps), but this experiment required a higher frame rate of 1,000 fps, so the camera was configured for a reduced resolution of 1,024 × 768 pixels. The image-processing PC was equipped with an Intel® Xeon® E5-1603 v3 2.8 GHz processor, 16 GB of RAM, and a Microsoft Windows 7 Professional (64-bit) operating system, with the image-processing software developed in Microsoft Visual Studio 2017. A CXP frame grabber board acquired the 1,024 × 768 pixel, 8-bit gray-scale raw image data from the Mikrotron camera. After acquiring image data every 1 ms, the PC measured the position and orientation of objects and sent the measurement results to a real-time controller over an Ethernet connection using the User Datagram Protocol (UDP).
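To give a feel for the numbers in this setup, the following Python sketch computes the raw image data rate for the configuration described above and shows one way a pose measurement could be packed into a single UDP datagram. The wire format (`POSE_FORMAT`) and the helper names are assumptions made for illustration; the article does not describe the actual message layout used in the study.

```python
import socket
import struct


def raw_data_rate_mb_per_s(width, height, bits_per_pixel, fps):
    """Raw image payload in megabytes per second (1 MB = 10**6 bytes)."""
    return width * height * bits_per_pixel / 8 * fps / 1e6


# Hypothetical wire format (not from the study): a little-endian frame
# counter followed by x, y, z and roll, pitch, yaw as 64-bit floats.
POSE_FORMAT = "<I6d"


def pack_pose(frame_id, position, orientation):
    """Serialize one pose measurement into a fixed-size byte string."""
    return struct.pack(POSE_FORMAT, frame_id, *position, *orientation)


def unpack_pose(payload):
    """Inverse of pack_pose: returns (frame_id, position, orientation)."""
    values = struct.unpack(POSE_FORMAT, payload)
    return values[0], values[1:4], values[4:7]


def send_pose(sock, address, frame_id, position, orientation):
    """Send one measurement as a single UDP datagram (no retransmission)."""
    sock.sendto(pack_pose(frame_id, position, orientation), address)


def open_udp_socket():
    """Create a UDP (datagram) socket for use with send_pose."""
    return socket.socket(socket.AF_INET, socket.SOCK_DGRAM)


# Configuration used in the experiment: 1,024 x 768 pixels, 8 bit, 1,000 fps.
EXPERIMENT_RATE = raw_data_rate_mb_per_s(1024, 768, 8, 1000)
# Full sensor resolution at the camera's maximum stated frame rate.
FULL_RES_RATE = raw_data_rate_mb_per_s(2336, 1728, 8, 563)
```

Under these assumptions the experiment's image stream amounts to roughly 786 MB/s of raw pixel data, while each pose message is only 52 bytes. UDP suits a message this small and this frequent because a lost datagram is superseded by the next measurement 1 ms later.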

The robot hand featured three fingers: a left thumb, an index finger, and a right thumb, with a closing speed of 180° in 0.1 second, a level of performance beyond that of a human being. Each finger had a top link and a root link, and the left and right thumbs could additionally rotate around the palm. The index finger therefore had two degrees of freedom, while each thumb had three.

Manufacturing processes can be faster, more efficient, and more cost-effective when humans and robots work together. In a study conducted by MIT, for example, idle time was reduced by 85% when people worked collaboratively with a human-aware robot compared with working in all-human teams. To test their HRC system's speed, efficiency, and accuracy, the University of Tokyo scientists conducted a peg-in-hole exercise at different angles and hand-off speeds. The holed board had retroreflective markers attached at its four corners to simplify corner detection by the Mikrotron camera, while the peg itself was made of stainless steel and fixed to a frame by a magnet. The motion flow was as follows:

- The human subject moved the board, changing its position and orientation, an action that was captured by the Mikrotron camera.

- Image processing tracked the markers attached to the four corners of the board, and the board's position and posture were calculated from the marker positions.

- The reference joint angle of the robot hand was obtained by solving inverse kinematics of the robot hand based on the position and posture of the board.

- The torque to be input to the servo motor of the robot hand was generated by proportional-derivative (PD) control toward the reference joint angle.

- The robot hand moved according to the torque input and assisted in placing the board onto the peg.
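The steps above can be sketched as a simple control loop. The Python example below is a heavily simplified, planar illustration and not the researchers' implementation: it estimates only the in-plane angle of the board from four marker positions, uses a placeholder in place of the hand's inverse kinematics, and drives a single joint modeled as a pure inertia. The function names, gains, and inertia value are all assumptions chosen for illustration.

```python
import math


def board_pose_2d(markers):
    """Centroid and in-plane angle of the board from its four corner markers.

    markers: [(x, y), ...] ordered top-left, top-right, bottom-right,
    bottom-left. The angle is measured in the coordinate frame of the input.
    """
    cx = sum(x for x, _ in markers) / 4.0
    cy = sum(y for _, y in markers) / 4.0
    (x_tl, y_tl), (x_tr, y_tr), (x_br, y_br), (x_bl, y_bl) = markers
    # Sum the directions of the top and bottom edges to average out noise.
    dx = (x_tr - x_tl) + (x_br - x_bl)
    dy = (y_tr - y_tl) + (y_br - y_bl)
    return cx, cy, math.atan2(dy, dx)


def reference_joint_angle(board_angle):
    """Placeholder for inverse kinematics (hypothetical).

    A real hand would solve for several joint angles; here one joint
    simply mirrors the board angle.
    """
    return board_angle


def pd_torque(q_ref, q, q_dot, kp, kd):
    """PD law: proportional term on angle error, damping on joint velocity."""
    return kp * (q_ref - q) - kd * q_dot


def simulate_tracking(markers, steps=200, dt=0.001,
                      inertia=1e-3, kp=40.0, kd=0.4):
    """Run the loop at 1 kHz (dt = 1 ms) and return the final joint angle."""
    q, q_dot = 0.0, 0.0
    for _ in range(steps):
        _, _, angle = board_pose_2d(markers)      # image processing
        q_ref = reference_joint_angle(angle)      # inverse kinematics
        tau = pd_torque(q_ref, q, q_dot, kp, kd)  # PD control
        q_dot += dt * tau / inertia               # joint dynamics
        q += dt * q_dot                           # (semi-implicit Euler)
    return q
```

With the illustrative gains chosen here the joint is critically damped, so it settles on the board angle within a fraction of a second of simulated time. In the real system the markers would change every frame as the human moves the board, and the loop would recompute the reference on each 1 ms cycle.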

In the experiment, the collaborative motion was performed over time spans varying from 2 to 15 seconds. Because of the high frame rate of the Mikrotron camera combined with the low latency of the CoaXPress interface, the collaborative error could be suppressed to within 0.03 rad (about 1.7°), even when the human subject moved the board at high speed or in a random fashion. Furthermore, the torque input could be kept small. As a result, collaborative motion between the human and the robot hand using the proposed method was successfully confirmed.
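A rough back-of-the-envelope calculation helps show why the frame rate matters for this error bound. The Python sketch below estimates how far the board can rotate between two consecutive frames at a given angular speed. It is an illustration only, not the analysis from the study: it ignores processing and actuation delays, and the 5 rad/s board speed and the 30 fps comparison camera are hypothetical values.

```python
import math

ERROR_BOUND_RAD = 0.03  # collaborative error bound reported in the article


def per_frame_rotation(angular_speed_rad_s, fps):
    """Angle the board turns between two consecutive frames, in radians."""
    return angular_speed_rad_s / fps


def max_speed_within_bound(bound_rad, fps):
    """Angular speed at which one frame of rotation equals the bound."""
    return bound_rad * fps


BOARD_SPEED = 5.0  # rad/s, hypothetical hand motion

bound_deg = math.degrees(ERROR_BOUND_RAD)          # about 1.72 degrees
at_1000_fps = per_frame_rotation(BOARD_SPEED, 1000)  # 0.005 rad per frame
at_30_fps = per_frame_rotation(BOARD_SPEED, 30)      # about 0.167 rad per frame
```

Under these assumptions, a board turning at 5 rad/s moves only 0.005 rad between frames at 1,000 fps, well inside the 0.03 rad bound, whereas at 30 fps it would move about 0.17 rad before the next image arrived.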

Applying its new system, the University of Tokyo team plans to demonstrate other tasks that cannot currently be achieved with conventional HRC methods and to continue adding flexibility and intelligence to the system.

- Yamakawa, Y.; Matsui, Y.; Ishikawa, M. Development of a Real-Time Human-Robot Collaborative System Based on 1 kHz Visual Feedback Control and Its Application to a Peg-in-Hole Task. Sensors 2021, 21, 663. https://doi.org/10.3390/s21020663