Researchers from the Robotics and Perception Group at the University of Zurich (Zurich, Switzerland; www.uzh.ch/index_en.html) have used a Dynamic Vision Sensor (DVS) from the Institute of Neuroinformatics (Zurich, Switzerland; www.ini.uzh.ch) to transmit event-based, per-pixel data from an unmanned aerial vehicle (UAV).

The team fitted a modified AR.Drone UAV from Parrot (Paris, France; www.ardrone2.parrot.com) with the DVS, a 128 x 128-pixel vision sensor that outputs events only when the brightness at a pixel changes. This reduces both the power the sensor requires and the data bandwidth.
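The change-driven output described above can be illustrated with a simplified, frame-driven simulation. This is a minimal sketch, not the sensor's circuitry or the Zurich team's code: it assumes each pixel fires when its log intensity changes by at least a fixed contrast threshold (0.15 here, an illustrative value), and the function name `dvs_events` is hypothetical. A real DVS reports such events asynchronously with fine-grained timestamps rather than by comparing whole frames.

```python
import math
from typing import List, Tuple

# One event: (x, y, timestamp in seconds, polarity); +1 = brighter, -1 = darker.
Event = Tuple[int, int, float, int]


def dvs_events(ref_log: List[List[float]], frame: List[List[float]],
               t: float, threshold: float = 0.15) -> List[Event]:
    """Compare a new intensity frame against each pixel's stored log-intensity
    reference and emit an event wherever the change reaches `threshold`.
    The reference is updated in place only for pixels that fired."""
    events: List[Event] = []
    for y, row in enumerate(frame):
        for x, intensity in enumerate(row):
            log_i = math.log(intensity + 1e-6)  # offset avoids log(0) on black pixels
            delta = log_i - ref_log[y][x]
            if abs(delta) >= threshold:
                events.append((x, y, t, 1 if delta > 0 else -1))
                ref_log[y][x] = log_i
    return events


# A static 4 x 4 scene: unchanged pixels produce no output at all.
first = [[100.0] * 4 for _ in range(4)]
ref = [[math.log(v + 1e-6) for v in row] for row in first]
second = [row[:] for row in first]
second[1][2] = 150.0  # one pixel brightens
second[3][0] = 60.0   # one pixel darkens
print(dvs_events(ref, second, t=0.01))  # [(2, 1, 0.01, 1), (0, 3, 0.01, -1)]
```

Only 2 of the 16 pixels produce output, which is the source of the bandwidth and power savings the article describes.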

The team conducted experiments to see whether the DVS would be a viable vision system for high-speed maneuvering of the UAV. An event-based pose estimation algorithm integrates events until a pattern is detected; it then tracks line segments and updates the pose of the aircraft as soon as each new event arrives.
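The article does not detail the pose estimation algorithm. One step a line-based event tracker plausibly needs is deciding which tracked line segment a new event belongs to; the sketch below does this with a point-to-segment distance and a gating radius. The function names and the 3-pixel gate are hypothetical, and the subsequent pose update is not shown.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float]
Segment = Tuple[Point, Point]


def point_segment_distance(p: Point, seg: Segment) -> float:
    """Euclidean distance from point p to the closest point on segment seg."""
    (x1, y1), (x2, y2) = seg
    dx, dy = x2 - x1, y2 - y1
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:  # degenerate segment: treat it as a single point
        return math.hypot(p[0] - x1, p[1] - y1)
    # Project p onto the infinite line, then clamp to the segment's endpoints.
    s = ((p[0] - x1) * dx + (p[1] - y1) * dy) / length_sq
    s = max(0.0, min(1.0, s))
    return math.hypot(p[0] - (x1 + s * dx), p[1] - (y1 + s * dy))


def associate_event(p: Point, segments: List[Segment],
                    max_dist: float = 3.0) -> Optional[int]:
    """Index of the nearest segment within max_dist pixels, or None (hypothetical gate)."""
    best_idx: Optional[int] = None
    best_d = max_dist
    for i, seg in enumerate(segments):
        d = point_segment_distance(p, seg)
        if d <= best_d:
            best_idx, best_d = i, d
    return best_idx


# Square pattern of four segments in a 128 x 128 image.
square = [((40.0, 40.0), (88.0, 40.0)), ((88.0, 40.0), (88.0, 88.0)),
          ((88.0, 88.0), (40.0, 88.0)), ((40.0, 88.0), (40.0, 40.0))]
print(associate_event((60.0, 41.5), square))  # 0: 1.5 px from the top edge
print(associate_event((64.0, 64.0), square))  # None: 24 px from every edge
```

Events that fall outside every gate are simply ignored here, which keeps clutter from pulling the tracked lines off course.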

In the tests, the team found that the rotation of the UAV could be estimated with surprising accuracy: during the experimental flights, the algorithm tracked the DVS trajectory through 24 of 25 flips (96%). Position and orientation were harder to estimate, however, when the apparent motion was very small, because such motion produces few events. The team expects results to improve significantly with a higher-resolution DVS.

NASA engineer develops printed camera

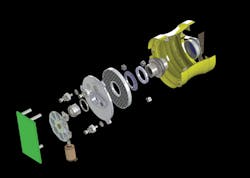

NASA aerospace engineer Jason Budinoff has developed a set of imaging telescopes assembled almost entirely from 3D printed components. Funded by NASA's Goddard Space Flight Center (Greenbelt, Maryland; www.nasa.gov/centers/goddard/home), Budinoff's project involves the construction of a 2in. camera whose outer tube, baffles, and optical mounts are all printed as a single structure. The 3D printed camera is sized for a miniature CubeSat satellite. The instrument will be equipped with conventionally fabricated mirrors and glass lenses and will undergo vibration and thermal vacuum testing in 2015.

Building a telescope the traditional way involves hundreds of pieces, and 3D printing simplifies the process. "With 3D printing," says Budinoff, "we can reduce the overall number of parts and make them with nearly arbitrary geometries. We're not limited by traditional mill- and lathe-fabrication operations."

Specifically, the 3D printed 2in. camera design involves fabricating four different pieces from powdered aluminum and titanium, whereas a traditionally manufactured camera would require between five and 10 times as many parts. According to NASA, 3D printing these cameras will also significantly reduce the overall cost.

Furthermore, Budinoff has his sights set on using 3D printing and powdered aluminum for developing telescope mirrors and experimenting with printing instrument components made of Invar alloy, a material being prepared for 3D printing by Goddard technologist Tim Stephenson.

"Anyone who builds optical instruments will benefit from what we're learning here," Budinoff said. "I think we can demonstrate an order-of-magnitude reduction in cost and time with 3D printing."

Vision system enables autonomous forklift tasks

Researchers from the Department for Conveyor Technology, Material Flow and Logistics at the Technical University of Munich (TUM; Munich, Germany; www.tum.de/en) have developed a vision system that enables forklift trucks to be used in semi-automated warehousing applications.

The project, called "Forklift Truck Eye," involves mounting a camera on a forklift truck to distinguish the forks from the image background.

The camera also monitors the lift height of the forks and collects 2D data to determine the orientation and motion of the truck.

To enable automatic tracking of material flow, image processing software from Smartek Vision (Cakovec, Croatia; www.smartekvision.com) is used to identify 1D and 2D codes captured by the camera.

TUM researchers chose a GC2441M camera, also from Smartek Vision, for the Forklift Truck Eye project.

The GC2441M is a 5 MPixel GigE Vision camera that features a Sony (Park Ridge, NJ, USA; www.sony.com) ICX625 global shutter CCD image sensor with 3.45 x 3.45 µm pixels.

The monochrome camera achieves a frame rate of 15 fps and is GenICam and GigE Vision compliant.
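A quick calculation puts these camera figures in context. The sketch assumes an ICX625 active array of 2448 x 2050 pixels and 8-bit monochrome output, neither of which is stated in the article; under those assumptions, full-resolution video at 15 fps needs roughly 75 MB/s of image payload, within Gigabit Ethernet's nominal 125 MB/s line rate.

```python
# Assumptions (not from the article): 2448 x 2050 active pixels, 8-bit mono output.
WIDTH_PX, HEIGHT_PX = 2448, 2050
PIXEL_PITCH_UM = 3.45            # from the article
FPS = 15                         # from the article
BITS_PER_PIXEL = 8               # assumed 8-bit monochrome output
GIGE_LINE_RATE_MB_S = 1000 / 8   # 1 Gbit/s in MB/s, ignoring protocol overhead


def payload_mb_per_s(width: int, height: int, fps: float, bits_per_pixel: int) -> float:
    """Raw image payload in megabytes (10^6 bytes) per second."""
    return width * height * fps * bits_per_pixel / 8 / 1e6


def active_area_mm(width: int, height: int, pitch_um: float) -> tuple:
    """Sensor active-area dimensions in millimeters."""
    return width * pitch_um / 1000, height * pitch_um / 1000


rate = payload_mb_per_s(WIDTH_PX, HEIGHT_PX, FPS, BITS_PER_PIXEL)
w_mm, h_mm = active_area_mm(WIDTH_PX, HEIGHT_PX, PIXEL_PITCH_UM)
print(f"{rate:.1f} MB/s of {GIGE_LINE_RATE_MB_S:.0f} MB/s")  # 75.3 MB/s of 125 MB/s
print(f"active area {w_mm:.2f} x {h_mm:.2f} mm")              # active area 8.45 x 7.07 mm
```

Real GigE Vision throughput is somewhat below the nominal line rate because of packet headers, so the remaining headroom is smaller than the raw numbers suggest.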

To make the system largely insensitive to ambient light, the researchers employed two LEDs operated by a Smartek Vision IPSC4-r2 4-channel lighting control system.

The forklift truck is currently available to interested parties for assessment purposes.

Imaging system evaluates root systems of plants

Researchers from the Georgia Institute of Technology (Atlanta, GA, USA; www.gatech.edu) and Penn State University (State College, PA, USA; www.psu.edu) have developed a technique that uses digital cameras and image analysis software to measure and analyze the root systems of plants.

Scientists are working to improve food crops to meet the needs of a growing population, but boosting crop output requires improving more than the parts of the plants that can be seen above the ground; scientists also need to understand more about what is happening in the plants' root systems. To this end, the researchers developed an imaging system that uses off-the-shelf digital cameras and analysis software to evaluate plant root systems in field conditions.

The cameras used in the research included a D70 from Nikon (Tokyo, Japan; www.nikon.com), a Powershot A1200 from Canon (Tokyo, Japan; www.canon.com), and an Apple (Cupertino, CA, USA; www.apple.com) iPhone. Individual plants were dug up in the field and their root systems were washed clean of soil. The scientists then photographed the root systems with these cameras against a black background. The images were uploaded to a server running software that analyzes each root system for more than 30 different parameters, including the diameter of tap roots, root density, the angles of brace roots, and detailed measures of lateral roots. Scientists working in the field can upload their images at the end of a day and have spreadsheets of results ready for study the next day.
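Photographing roots against a black background makes a first segmentation step straightforward. The sketch below is a hypothetical illustration, not the researchers' analysis software: it thresholds an 8-bit grayscale image (the threshold of 60 is an assumed value) to separate bright roots from the background, then computes two crude measurements, the fraction of root pixels and the horizontal spread of roots in each image row.

```python
from typing import List

Image = List[List[int]]   # 8-bit grayscale, row-major
Mask = List[List[bool]]


def segment_roots(img: Image, threshold: int = 60) -> Mask:
    """Mark pixels brighter than `threshold` as root (assumes a black background)."""
    return [[px > threshold for px in row] for row in img]


def root_fraction(mask: Mask) -> float:
    """Fraction of image pixels classified as root, a crude density proxy."""
    total = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / total


def row_spread(mask: Mask) -> List[int]:
    """Horizontal extent (in pixels) of root pixels in each row; 0 for empty rows."""
    spreads = []
    for row in mask:
        cols = [x for x, is_root in enumerate(row) if is_root]
        spreads.append(cols[-1] - cols[0] + 1 if cols else 0)
    return spreads


# Toy image: a vertical tap root (column 2) with one lateral root in row 2.
img = [
    [0, 0, 200, 0, 0, 0],
    [0, 0, 210, 0, 0, 0],
    [0, 0, 205, 180, 170, 0],
    [0, 0, 190, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
]
mask = segment_roots(img)
print(root_fraction(mask))  # 0.2
print(row_spread(mask))     # [1, 1, 3, 1, 0]
```

Traits such as brace-root angles or tap-root diameter would need further steps (skeletonization, distance transforms) that this sketch does not attempt.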

"We've produced an imaging system to evaluate the root systems of plants in field conditions," said Alexander Bucksch, a postdoctoral fellow in the Georgia Tech School of Biology and School of Interactive Computing. "We can measure entire root systems for thousands of plants to give geneticists the information they need to search for genes with the best characteristics."

"In the lab, you are just seeing part of the process of root growth," said Bucksch, who works in the group of Associate Professor Joshua Weitz in the School of Biology and School of Physics at Georgia Tech. "We went out to the field to see the plants under realistic growing conditions."

Data and information generated by this research will be used in subsequent analysis to help understand how changes in genetics affect plant growth, with the overall goal being to develop improved plants that can feed increasing numbers of people and provide sustainable sources of energy and materials. The research, which was published in the October issue of Plant Physiology, is supported by the National Science Foundation's Plant Genome Research Program (PGRP) and Basic Research to Enable Agriculture Development (BREAD), the Howard Buffett Foundation, the Burroughs Wellcome Fund and the Center for Data Analytics at Georgia Tech.

Vision-guided robot learns to fly aircraft

Researchers from the Korea Advanced Institute of Science and Technology (KAIST; Daejeon, South Korea; www.kaist.edu) have modified a small humanoid robot to monitor and control a simulated aircraft cockpit. Dubbed PIBOT, the vision-guided robot can identify and use all of the buttons and controls in the cockpit of a light aircraft.

While most of the inputs, such as roll, pitch, yaw, airspeed and GPS location, are supplied by the simulator, the robot uses a vision system to identify the runway by edge detection. To do this, the robot employs a FireFly MV USB 2.0 camera from Point Grey (Richmond, BC, Canada; www.ptgrey.com) that features a 0.3 MPixel MT9V022 CMOS image sensor from ON Semiconductor (Phoenix, AZ, USA; www.onsemi.com) and runs at 60 fps.
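The article says only that PIBOT finds the runway by edge detection; it does not name the operator. As an illustration, the sketch below applies the widely used Sobel operator to a grayscale image and thresholds the gradient magnitude. The function name and the threshold of 100 are assumptions, and a practical runway detector would typically follow this with a line-fitting step, such as a Hough transform, to recover the runway borders as lines.

```python
import math
from typing import List

# Standard 3 x 3 Sobel kernels for horizontal (GX) and vertical (GY) gradients.
GX = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
GY = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_edges(img: List[List[int]], threshold: float = 100.0) -> List[List[bool]]:
    """Boolean edge map: True where the Sobel gradient magnitude >= threshold.
    Border pixels are left False because the 3 x 3 kernel does not fit there."""
    h, w = len(img), len(img[0])
    edges = [[False] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            for j in range(3):
                for i in range(3):
                    v = img[y + j - 1][x + i - 1]
                    gx += GX[j][i] * v
                    gy += GY[j][i] * v
            edges[y][x] = math.hypot(gx, gy) >= threshold
    return edges


# Toy frame: dark grass on the left, bright runway surface on the right.
frame = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
edges = sobel_edges(frame)
print([x for x in range(6) if edges[2][x]])  # [2, 3]
```

The boundary shows up as a two-pixel-wide vertical band of edge pixels, which is the kind of feature a subsequent line fit would consume.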

A demonstration of PIBOT performing a simulated takeoff and landing was presented at the International Conference on Intelligent Robots and Systems in Chicago. The researchers have also used PIBOT to autonomously fly a small-scale model biplane. A video of the system in operation can be found at http://bit.ly/YjnbZs.

UAV uses vision to provide water for lost dummy

During the UAV Outback Challenge, sixteen teams flew their unmanned aerial vehicles (UAVs) in a search and rescue mission whose primary objective was to locate a mannequin named Outback Joe, who was strategically placed in the outback of Queensland, Australia, and deliver a bottle of life-saving water.

For the first time in the event's eight-year history, four teams successfully delivered the package to save Outback Joe. Each team designed and developed its own UAV and software to navigate the course, with the winning team taking home $50,000. This year's winner was CanberraUAV, a non-profit, open-source UAV development organization dedicated to developing and promoting civilian UAVs.

For its winning UAV, CanberraUAV chose a Chameleon USB 2.0 camera from Point Grey (Richmond, BC, Canada; www.ptgrey.com). The Model CMLN-13S2C-CS color camera features a 1.3 MPixel 1/3in Sony ICX445 global shutter CCD image sensor that achieves 18 fps at full resolution.

While four teams successfully delivered the water to the mannequin, CanberraUAV (Belconnen, Australia; www.canberrauav.org.au) was awarded the top prize based on points for how closely it dropped the package to the dummy and how well it answered pre-mission questions from the judges. Professor Jonathan Roberts from Queensland University of Technology (QUT; Brisbane, Australia; www.qut.edu.au) noted that completing the mission was a first of its kind.

"We're not aware of anyone else using UAVs for search and rescue purposes such as this," he says. "Having so many teams complete such a difficult task in one year is amazing; the technology has matured and the teams have had time to practice and innovate over the years."

The event is a joint initiative between the Queensland Government and the Australian Research Centre for Aerospace Automation (Queensland, Australia; www.arcaa.net), which is a partnership between the Queensland University of Technology and the Commonwealth Scientific and Industrial Research Organization (Melbourne, Australia; www.csiro.au).