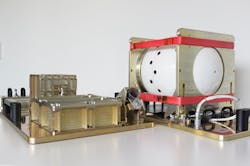

In mid-August, the European Space Agency's (ESA, Paris, Île-de-France, France; www.esa.int) Automated Transfer Vehicle 5 (ATV-5) docked with the International Space Station (ISS) to deliver supplies. In the winter of 2015, however, the ATV-5 will disintegrate as it plunges through Earth's atmosphere. To capture images of the break-up, the ESA has developed an infrared camera called the Break-Up Camera that will join Japan's iBall optical camera and NASA's Re-entry Break-up Recorder to provide a full view of the conditions inside the ATV-5 as it disintegrates.

The Break-Up Camera is bolted onto an ATV panel and will capture the last 20s of the vehicle's life as it re-enters the atmosphere. Images will then be transmitted to a spherical capsule called the Reentry SatCom that is coated in a ceramic heat shield. This capsule can withstand the 1500°C temperatures of re-entry and is equipped with an antenna to send images to Earth via satellite link.

"Once the ATV breaks up it will begin transmitting data to any Iridium communication satellites in line of sight," says project leader Neil Murray. "The break-up will occur at about an 80-70 km altitude, leaving the SatCom falling at 6-7 km/s. The fall will generate a high-temperature plasma around it, but signals from its omnidirectional antenna should make it through any gap in the plasma."

"Additionally, signaling will continue after the atmospheric drag has decelerated the SatCom to levels where a plasma is no longer formed, somewhere below 40 km, at a point where Iridium satellites should become visible to it regardless," he says.

The project is the first to realize a capsule that will survive the 1500°C re-entry and transmit data to Earth regardless of altitude or orientation.

Underwater vehicle wins RoboSub competition

Cornell University's (Ithaca, NY, USA; www.cornell.edu) autonomous underwater vehicle (CUAUV), the Gemini, is a vision-enabled submarine that has won the Association for Unmanned Vehicle Systems International (AUVSI) Foundation's (Arlington, VA, USA; www.auvsifoundation.org) RoboSub Competition in five of the last six years. Along the way, the Cornell team has made a number of improvements to Gemini, including stronger frame structures and pressure vessels, and improved sensor systems and vision algorithms.

Co-sponsored by the U.S. Office of Naval Research (Arlington, VA, USA; www.onr.navy.mil), the competition sets out to advance the development of autonomous underwater vehicles (AUV) by challenging engineers to perform realistic missions in an underwater environment. Gemini's vision system uses three cameras: two forward facing and one downward facing.

For the two forward-facing cameras, F-080C color CCD cameras from Allied Vision Technologies (AVT; Stadtroda, Germany; www.alliedvisiontec.com) were used. These FireWire cameras each feature a 1/3in 1032 x 778 ICX204 CCD image sensor from Sony (Park Ridge, NJ, USA; www.sony.com) with a 4.65 x 4.65 μm pixel size and a frame rate of 30 fps. These forward-facing cameras are used in a stereo vision configuration to determine distances to objects. The downward-facing camera is an IDS-UI-6230SE GigE camera from Imaging Development Systems (IDS; Obersulm, Germany; www.ids-imaging.com). The UI 6230 features a 1/3in Sony XGA CCD image sensor with a 4.65 x 4.65 μm pixel size and a frame rate of 40 fps.
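As a rough illustration of how a stereo pair yields distance, the sketch below applies the standard pinhole relation Z = f·B/d for two parallel, rectified cameras. Only the 4.65 μm pixel size comes from the article; the lens focal length, the camera separation, and the measured disparity are assumed values chosen for the example, and the effect of refraction at an underwater housing window is ignored. It is not the Cornell team's code.

```python
PIXEL_SIZE_M = 4.65e-6  # pixel pitch quoted for the forward-facing cameras


def depth_from_disparity(disparity_px, focal_length_m, baseline_m,
                         pixel_size_m=PIXEL_SIZE_M):
    """Distance (m) to a point seen by two parallel, rectified cameras.

    disparity_px is the horizontal shift, in pixels, of the same point
    between the left and right images.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    focal_length_px = focal_length_m / pixel_size_m  # focal length in pixels
    return focal_length_px * baseline_m / disparity_px


# Assumed for illustration only: 6 mm lenses mounted 20 cm apart,
# and a feature that shifts by 50 pixels between the two views.
z = depth_from_disparity(disparity_px=50, focal_length_m=6e-3, baseline_m=0.20)
print(f"estimated distance: {z:.2f} m")
```

Because distance is inversely proportional to disparity, nearby objects are resolved far more finely than distant ones, which is one reason the separation between the two cameras matters.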

The Gemini submarine is powered by an Express-HL COM Express computer-on-module from ADLINK Technology (San Jose, CA, USA; www.adlinktech.com) that features a 4th generation quad-core Intel Core i7 processor with Mobile Intel QM86 Express chipset. The Express-HL acts as Gemini's on-board computer and is tasked with all vision processing and I/O control required by the AUV. The COM Express module, which features GigE, SATA, and USB 2.0 and 3.0 ports, supports up to 16GBytes dual channel DDR3L SDRAM at 1600 MHz and three DDI channels supporting three independent displays.

In addition, the Express-HL COM Express carrier board runs its own controller and communicates with micro-controllers on several custom-built peripheral circuit boards.

Gemini's CUAUV Automated Vision Evaluator (CAVE) helps analyze vision performance by keeping a database of logged video and providing a graphical framework for annotation and automated testing. CAVE also organizes captured logs and allows searching metadata such as weather conditions and image location.

The RoboSub competition took place July 28-August 3 at the Transducer Evaluation Center (TRANSDEC) pool of the Space and Naval Warfare Systems Center Pacific (SSC Pacific; San Diego, CA, USA; http://1.usa.gov/1vITJu7).

Stereo 3D cameras assist in robotic bin picking

Bin picking, the task in vision-guided robotics of picking randomly placed objects from a container or bin, presents a number of challenges for developers. For a robot to accurately detect the shape, size, position, and alignment of an object, a suitable vision system must be selected. One particularly effective option is a 3D machine vision camera.

bsAutomatisierung GmbH (Rosenfeld, Germany; www.bsautomatisierung.de/home-en.html), a company that specializes in the development and construction of systems for fast and precise loading and unloading of production machinery and parts handling, recently installed a system that uses Ensenso N10 stereo 3D cameras from IDS Imaging Development Systems (Obersulm, Germany; www.ids-imaging.com) to identify and pick parts. Ensenso N10 cameras feature two 752 x 480 pixel global shutter CMOS image sensors and a USB interface. bsAutomatisierung used the cameras for its bin picking robot cells, which automatically pick individual, randomly aligned parts from a container and pass them on to downstream production processes.

One of the features of the N10 cameras is an infrared pattern projector that projects a random pattern of dots onto the object to be captured, allowing structures that are not visible or only faintly visible on the surface to be enhanced or highlighted. This is necessary because stereo matching requires the identification of interest points in a given image.

Once the interest points are identified, the object is then captured by the two CMOS sensors and 3D coordinates are reconstructed for each pixel using triangulation. Even if parts with a relatively monotone surface are placed in the bin, a 3D image of the surface can be generated.
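To see why a projected random pattern helps stereo matching, consider the simplified one-dimensional sketch below. It compares a small window from the left image against shifted windows in the right image using a sum of absolute differences (SAD), a common textbook matching cost. This is not the Ensenso software's algorithm, and the image rows, window size, and disparity are invented for the example.

```python
import random


def sad(a, b):
    """Sum of absolute differences between two equal-length windows."""
    return sum(abs(x - y) for x, y in zip(a, b))


def best_disparities(left, right, x, window, max_disp):
    """Return every disparity whose matching cost ties for the minimum."""
    patch = left[x:x + window]
    costs = {d: sad(patch, right[x - d:x - d + window])
             for d in range(max_disp + 1)}
    lowest = min(costs.values())
    return [d for d, c in costs.items() if c == lowest]


TRUE_DISPARITY = 7  # hypothetical shift between the two views
rng = random.Random(0)

# One image row of a surface lit by a random dot pattern.
dotted_right = [rng.randint(0, 255) for _ in range(200)]
dotted_left = [0] * TRUE_DISPARITY + dotted_right[:-TRUE_DISPARITY]

# The same geometry, but a featureless (monotone) surface.
plain_right = [128] * 200
plain_left = [0] * TRUE_DISPARITY + plain_right[:-TRUE_DISPARITY]

dotted_matches = best_disparities(dotted_left, dotted_right, 100, 9, 20)
plain_matches = best_disparities(plain_left, plain_right, 100, 9, 20)
print("random pattern:", dotted_matches)          # a single, unambiguous match
print("plain surface: ", len(plain_matches), "equally good candidates")
```

On the textured row only the true shift gives a perfect match, while on the plain row every candidate shift matches equally well, so no depth can be triangulated without added texture.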

Two stationary Ensenso cameras are mounted in each cell, which allows both object detection and part placement to occur at the same time. When working with multiple cameras, the N10 software enables the generation of a 3D point cloud. The software also controls the CMOS sensors and random pattern projector and handles the capture and pre-processing of the 3D data. In addition, a calibration plate mounted on the robot gripper enables the calibration of the camera and robot.

Images captured by the cameras are analyzed using HALCON 11 software from MVTec (Munich, Germany; www.mvtec.com). Using these images, along with CAD data from the cell, a collision-free robot path is generated. This path is then transferred to the robot controller. If the robot detects a failure in the bin picking process, it navigates independently from the container and tries to pick another part.

A PLC monitors the process and is responsible for informing the vision system which type of parts are to be picked, when, and from which bin. With this vision system, the robot cells achieve cycle times of less than 10s.

Automated vision system finds burred parts

When a major manufacturing company in the Midwest found itself producing burred components, it sought a vision system that could automatically inspect the parts as they traveled along a conveyor. In response, Acquire Automation (Fishers, IN, USA; www.acquire-automation.com) developed a system that could identify defects larger than 1/32in. For the system, Acquire Automation chose a GT 5000 monochrome smart camera with a GigE interface from Matrox Imaging (Dorval, QC, Canada; www.matroximaging.com). The GT 5000 camera features a 2/3in ICX625AL CCD image sensor from Sony (Park Ridge, NJ, USA; www.sony.com) with a 3.45 x 3.45 μm pixel size and 15fps frame rate.
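A quick way to judge whether a camera can resolve a 1/32in defect is to work out how many pixels the defect spans in the image. The sketch below does that arithmetic. The 200 mm field of view and the 2448-pixel image width are assumptions made for illustration; neither figure is given in the article.

```python
INCH_MM = 25.4  # millimeters per inch


def pixels_across_defect(defect_in, field_of_view_mm, pixels_across):
    """Number of image pixels spanned by a defect of the given size."""
    mm_per_pixel = field_of_view_mm / pixels_across
    return (defect_in * INCH_MM) / mm_per_pixel


# Assumed for illustration: a 200 mm wide field of view imaged
# onto 2448 pixel columns.
n = pixels_across_defect(defect_in=1 / 32,
                         field_of_view_mm=200.0,
                         pixels_across=2448)
print(f"a 1/32in defect covers about {n:.1f} pixels")
```

Under these assumptions the smallest defect of interest covers several pixels, which leaves margin for blur and edge-detection uncertainty. A wider field of view or a lower-resolution sensor would shrink that margin proportionally.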

A ring light from Advanced illumination (Rochester, VT, USA; www.advancedillumination.com) was used to illuminate the inspection area. For image processing and I/O control, Acquire Automation used Matrox Design Assistant flowchart-based software. Design Assistant is an integrated development environment (IDE) that enables image capture, analysis, location, measurement, reading, verification, communication, and I/O operations to be performed within the IDE without conventional programming.

Features within Design Assistant enabled the team to develop the system, and the software's human-machine interface (HMI) feature provided the company with a way to edit system properties without needing to connect a laptop, according to Ross McDonald of Acquire Automation.

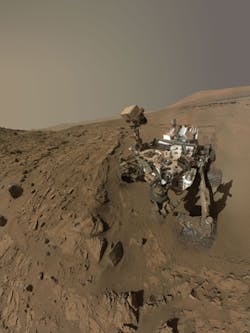

Mars Curiosity rover celebrates with a self-portrait

With a primary objective of determining whether Mars offered environmental conditions suitable for life, NASA's Mars Curiosity rover has now completed a full Martian year, or 687 Earth days, on the red planet. To celebrate, the rover captured a self-portrait to mark a full year exploring the planet.

Of the four cameras on the Curiosity rover, the Mars Hand Lens Imaging (MAHLI) camera was used to capture the image. The MAHLI camera is a focusable color camera located on the turret at the end of a robot arm. It features a 2MPixel KAI-2020 CCD image sensor from ON Semiconductor (Phoenix, AZ, USA; www.onsemi.com) with a 7.4 x 7.4 μm pixel size and a 30 fps frame rate. MAHLI can capture close-up color images of Martian rocks and surface material. The camera system also uses two white LEDs for nighttime imaging and two 365 nm UV LEDs to illuminate materials that fluoresce under UV illumination.

The image captured by the Curiosity isn't quite a self-portrait, but a mosaic of many separate images that are composited, explains Michael Ravine, advanced projects manager at Malin Space Science Systems (San Diego, CA, USA; www.msss.com), the company that developed the rover's camera systems.

NASA's Opportunity rover also recently celebrated an anniversary, having spent 10 years on the red planet. Just like its counterpart, the Opportunity used its panoramic camera to capture a self-portrait.

UV camera system to monitor volcanic eruptions

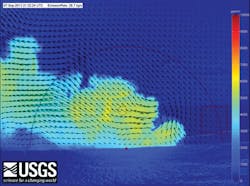

The U.S. Geological Survey's Cascades Volcano Observatory (Vancouver, WA, USA; http://volcanoes.usgs.gov/observatories/cvo) has teamed with JAI (San Jose, CA, USA; www.jai.com) to develop a rapidly deployable UV camera-based vision system capable of continuous, real-time monitoring and evaluation of sulfur dioxide emission rates in volcanic eruptions.

The project, which is being funded by the U.S. Geological Survey's Innovation Center for Earth Sciences (ICES; Menlo Park, CA, USA; http://geography.wr.usgs.gov/ICES), is being developed to allow for the imaging of volcanic emissions in the event of a volcanic crisis. These emissions can be a precursor to a potentially hazardous volcanic eruption, and monitoring the gases could provide a tool for forecasting such eruptions and improving safety measures for the surrounding population.

JAI's CM-140GE-UV GigE Vision cameras will be used for the imaging system. These cameras feature a 1/2in progressive scan Sony CCD image sensor that is sensitive to wavelengths below 200 nm. The sensor has a 4.65 x 4.65 μm pixel size and a maximum frame rate of 16fps over a GigE Vision interface.

By recording a series of images of the sulfur dioxide gas clouds emitting from volcanic vents at multiple UV wavelengths and tracking their movement with computer vision algorithms, the system will measure the characteristic absorption of sulfur dioxide in scattered solar UV radiation in the images of the volcanic plumes.
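The absorption measurement can be illustrated with the Beer-Lambert law. A common approach in UV gas imaging, assumed here and not confirmed as the USGS processing chain, compares a band where sulfur dioxide absorbs strongly ("on") with a nearby band where it barely absorbs ("off"), each normalized by the clear-sky background. The pixel intensities below are invented, and all function and variable names are hypothetical.

```python
import math


def apparent_absorbance(i_on, i0_on, i_off, i0_off):
    """Optical depth in the absorbing band minus that in the reference band.

    i_on,  i_off : plume-pixel intensity in the absorbing / reference band
    i0_on, i0_off: clear-sky background intensity in the same bands
    """
    tau_on = -math.log(i_on / i0_on)     # SO2 plus aerosol attenuation
    tau_off = -math.log(i_off / i0_off)  # mostly aerosol attenuation
    return tau_on - tau_off              # what remains is attributed to SO2


# Invented example pixel: the plume dims the absorbing band to 60% of the
# background and the reference band to 90%.
aa = apparent_absorbance(i_on=600.0, i0_on=1000.0, i_off=900.0, i0_off=1000.0)
print(f"apparent absorbance: {aa:.3f}")
```

Subtracting the reference band removes attenuation that affects both bands alike, such as scattering by ash or aerosol. Turning the result into a gas column density still requires a separate calibration, and an emission rate additionally needs the plume speed obtained from tracking its motion between frames.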

Project leader Christoph Kern, of the U.S. Geological Survey's Cascades Volcano Observatory, had previously used a JAI camera to build a permanent sulfur dioxide imaging system for monitoring emissions from the Kilauea volcano in Hawaii. In this project, however, Kern is developing a rapidly deployable version that aims to aid scientists in risk assessment and help advance the understanding of shallow subsurface volcanic processes by correlating degassing with other observable geophysical parameters.
