In the October issue of Vision Systems Design, I wrote an article about how researchers all over the world are working to develop methods such as retinal implants and augmented vision systems to increase the visual acuity of blind people.

One such example is the Retinal Prosthetic Simulator, developed by Dr. Stephen Hicks at the Nuffield Department of Clinical Neurosciences at the University of Oxford. This system captures an image of a scene, preprocesses it to extract salient features, and then down-samples those features and presents them visually to the user through a pair of glasses. Truly fascinating and novel work, but Dr. Hicks is not the only person working on such capabilities.

Others are working on similar ideas. First, Eelke Folmer and Vinitha Khambadkar, researchers at the University of Nevada, are developing a system that lets blind people navigate using a camera worn around the neck that gives spoken guidance in response to hand gestures. The system, called Gestural Interface for Remote Spatial Perception (GIST), uses a Microsoft Kinect sensor to analyze and identify objects in its field of view.

This augmented reality system collects data in response to hand gestures made by the wearer as a way to augment a blind person’s reduced spatial perception. For example, a GIST wearer can make a sign with the index and middle finger, and the device will identify the dominant color in the area ahead. Or, if the user holds out a closed fist, the system will report whether a person is in that direction and how far away they are. Check out a video of the system here.

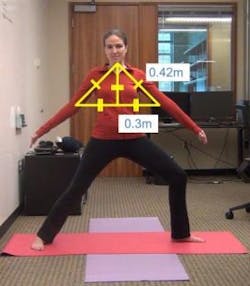

A second system is being developed by University of Washington computer scientists to help blind users do yoga. The software program watches a blind user’s movements and gives spoken feedback on what to change to accurately complete a given yoga pose. The program, called Eyes-Free Yoga, also uses a Microsoft Kinect sensor to track body movements and offer spoken feedback in real time for six yoga poses. It uses geometry and the law of cosines to calculate angles created during yoga to offer proper feedback.
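To make the law-of-cosines step concrete, here is a minimal sketch of how a joint angle could be computed from three tracked skeleton points. It is not the Eyes-Free Yoga code: the function names `joint_angle` and `pose_feedback`, the 15-degree tolerance, and the sample coordinates are all assumptions made for illustration.

```python
import math


def joint_angle(a, b, c):
    """Return the angle in degrees at point b, formed by the segments b-a and b-c.

    a, b, c are (x, y, z) coordinates, e.g. shoulder, elbow, wrist.
    Uses the law of cosines: ac^2 = ab^2 + bc^2 - 2*ab*bc*cos(B).
    """
    ab = math.dist(a, b)
    bc = math.dist(b, c)
    ac = math.dist(a, c)
    if ab == 0 or bc == 0:
        raise ValueError("joint points must be distinct")
    cos_b = (ab ** 2 + bc ** 2 - ac ** 2) / (2 * ab * bc)
    # Clamp to [-1, 1] to guard against floating-point rounding.
    cos_b = max(-1.0, min(1.0, cos_b))
    return math.degrees(math.acos(cos_b))


def pose_feedback(angle, target, tolerance=15.0):
    """Hypothetical feedback rule: compare a measured angle with a target angle."""
    if angle < target - tolerance:
        return "straighten"
    if angle > target + tolerance:
        return "bend"
    return "hold"


# Made-up coordinates: an arm bent at a right angle at the elbow.
shoulder, elbow, wrist = (0.0, 1.0, 0.0), (1.0, 1.0, 0.0), (1.0, 0.0, 0.0)
elbow_angle = joint_angle(shoulder, elbow, wrist)  # approximately 90 degrees
print(pose_feedback(elbow_angle, target=180.0))    # arm should be straight
```

A real system would presumably read the three points from the sensor's skeleton-tracking output on every frame and turn the result into a spoken instruction; the sketch only covers the geometry.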

Interestingly enough, the project lead, Kyle Rector, a UW doctoral student in computer science and engineering, tested the program with 16 blind and low-vision people. Thirteen of the 16 said they would recommend it, and nearly all of them said they would use it again.

So are we on the brink of providing vision for the blind? Well, not quite. But what happens if multi-sensor systems like the Kinect (or cameras, LIDAR, and ultrasound) can be built into a small vision system that the sight-impaired can use to further augment their perception?