January 22, 4:30 PM ET

2015 North American Vision Market Update | Alex Shikany, Director of Market Analysis, AIA

The AIA’s Director of Market Analysis, Alex Shikany, gave a presentation that provided the latest vision statistics and future projections.

In 2014, the North American vision market experienced the best year since the AIA began recording statistics in 2009. Even since the presentation Shikany gave in Stuttgart (November), numbers have improved, leading to an overall growth of 17% in Q3 of 2014. The statistics from Q4 are not yet finalized, but barring any significant downturn, a 2.4% growth is projected.

On the whole, overall growth of 15.3% is projected, which represents a total of $2.2 billion. Average growth rates for individual products and components since 2010 have been particularly impressive for cameras, smart cameras, and lighting. Imaging boards, optics, software, and application-specific machine vision systems have also grown, albeit at lower rates.

Shikany identifies vision systems as the lion’s share of the market and suggests that smart cameras represent a big part of this category, and a big business opportunity moving forward.

Speaking of moving forward, Shikany also projects a 9.1% growth rate for the industry in 2015. A survey sent out by the AIA indicates that 15% of respondents think the industry will grow more than 10%, while 80% think it will grow at a slightly lower rate. Applications that are expected to drive this growth include factory automation, food and consumer goods, embedded vision, transportation, life sciences, semiconductors and electronics, security, aerospace, energy, and oil and gas.
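
As a rough check on what these percentages imply, the arithmetic can be sketched as below. This is only an illustration: it assumes the $2.2 billion figure is the full-year 2014 total and that the 9.1% projection applies to that total. The function names are hypothetical.

```python
def implied_prior_total(current_total, growth_rate):
    """Back out the previous period's total from the current total and its growth rate."""
    return current_total / (1.0 + growth_rate)


def projected_total(current_total, growth_rate):
    """Apply a growth rate to the current total to get the next period's total."""
    return current_total * (1.0 + growth_rate)


# Figures quoted in the talk; treating $2.2 billion as the 2014 total is an assumption.
total_2014 = 2.2      # billions of USD
growth_2014 = 0.153   # projected overall growth for 2014
growth_2015 = 0.091   # projected growth for 2015

total_2013 = implied_prior_total(total_2014, growth_2014)
total_2015 = projected_total(total_2014, growth_2015)

print(f"Implied 2013 total: ${total_2013:.2f} billion")
print(f"Projected 2015 total: ${total_2015:.2f} billion")
```

Under these assumptions, the figures work out to roughly $1.91 billion for 2013 and $2.40 billion for 2015.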

Concerning expectations for 2015, survey respondents indicated that software is the vision technology likely to have the largest impact on the market (24.32%). This is followed by sensors and smart cameras (both at 16.22%), application-specific machine vision systems (13.51%), and components/cameras (10.81%).

Shikany went on to cite healthy manufacturing trends (including automotive manufacturing, robotics, and semiconductors) as a positive sign that 2015 could be yet another record year. He also cited a report from The Boston Consulting Group indicating that many executives are now looking to automate in order to reduce costs, increase competitiveness, and benefit from being closer to suppliers and customers.

January 22, 4:00 PM ET

Seeing Beyond the Image and Through the Glare | Andrew Berlin & Rajeev Surati, BARS Imaging

Andrew Berlin began the presentation by offering an example of how to obtain a usable image from a poorly lit scene. He showed two versions of a photo of himself in his car: the normal version and an enhanced version. In the normal photo, his face could not be seen, but in the enhanced version it was clearly visible.

Berlin explained that he produced this enhanced image with a technology quite similar to a hearing aid, in that a subject is amplified and put into focus, and the background is minimized. He considered each region of an image as a “set of sounds,” some of which are background noise, which is minimized in a hearing aid or an image. (A tune algorithm was used to treat reflections as background noise.)

He noted that there is some similarity to “background subtraction” in image/video processing, and provided examples of using multiple layers and learning across multiple frames in order to produce an image. This particular technique can extend into such applications as scanning electron microscopy, biomedical imaging, and surveillance, among others. Making invisible features visible will improve object recognition and inspection and enable users to see through packaging, suggested Berlin.
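
For readers unfamiliar with background subtraction, a minimal sketch of the general idea follows. It is not the BARS Imaging method, which was not detailed in the talk; it simply estimates a static background as the per-pixel median across several frames and flags pixels that differ from it. The function names and the synthetic frames are assumptions made for illustration.

```python
from statistics import median


def median_background(frames):
    """Estimate the static background as the per-pixel median over all frames."""
    rows, cols = len(frames[0]), len(frames[0][0])
    return [
        [median(frame[r][c] for frame in frames) for c in range(cols)]
        for r in range(rows)
    ]


def foreground_mask(frame, background, threshold):
    """Mark pixels whose difference from the background exceeds the threshold."""
    return [
        [abs(pixel - bg) > threshold for pixel, bg in zip(frame_row, bg_row)]
        for frame_row, bg_row in zip(frame, background)
    ]


# Synthetic data: five 3x3 frames of constant brightness,
# with a transient bright pixel in the third frame only.
frames = [[[100] * 3 for _ in range(3)] for _ in range(5)]
frames[2][1][1] = 200

background = median_background(frames)
mask = foreground_mask(frames[2], background, threshold=25)
```

Because the bright pixel appears in only one of the five frames, the median ignores it, and the mask for that frame marks only that pixel as foreground.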

Surati then took over and provided some examples of the hardware that can be used to accomplish these imaging applications. He also provided an example of how he used machine vision technology to create a 24 MPixel array of HD projectors in the MIT Lincoln Lab Decision Room. He then explained that their main interest in attending the show was soliciting feedback from the machine vision community about their technology and research.

January 22, 3:30 PM ET

Modern Watchmaking Today in Switzerland: Reshoring and Automating Manufacturing | Tony Arquisch, CPA Group SA

Tony Arquisch provided an overview of the role of vision and automation when it comes to watchmaking.

Swiss watchmaking requires a number of components, many of which are developed in other countries. However, laws are changing when it comes to a watch being “Swiss made,” and 60% of a Swiss watch must now be made in Switzerland. This puts a strain on watch manufacturers.

Manual processes need to be performed by robots and other automation tools. As a result, high-end automated systems and technologies have been implemented in order to meet these demands. Arquisch provided some examples of these automation solutions, including a system that sorts components, then picks and places them into a tube, where they are brought to another location for the manufacturing process.

Another example was the pick and place of the time (number) indicators for the watch face. This system identifies the numbers and places them in the correct location for the creation of the watch face. Other applications Arquisch covered were the automated aesthetic inspection of components, laser engraving of the brand name in watch housing, and more.

All of the examples he provided came about because much of the work is coming back to Switzerland from places like China, where it was cheaper. By turning to automation and machine vision, these Swiss watchmakers were able to keep up with their manufacturing needs.

“The manual processes are being and will continue to be automated,” he noted.

Today’s watchmakers are able to concentrate on workmanship and on processes that should not be automated due to costs. Machine vision, robotics, and inspection software are able to assist the craftsman watchmaker, he concluded.

January 22, 2:30 PM ET

Opportunities and Challenges for Vision in Automotive Applications: GM and Ford’s Vision Wish List for the Future | Steve Jones, General Motors, & Frank Maslar, Ford Motor Company

Automotive powertrain manufacturing environments require a great deal of engineering discipline. This includes the role of machine vision in automotive applications. Technologies such as vision processing, 3D data visualization, intelligent image acquisition, vision sensor fusion, and robot guidance are all imperative when it comes to keeping these manufacturing lines running 24/7 (which they do!).

Steve Jones of GM explained that “teaching vision application development as an iterative process does not work for everyone” and that many things need to be factored in.

"We must have better preparation to understand and handle variation and differences in components," he said.

Factors that must be considered in vision in automotive manufacturing include:

- Lighting and illumination. This includes wavelength, intensity of light, intensity uniformity across the field of projection, and the impact of uniformity at standard working distances.

- Powertrain reflection characteristics vary with surface finish, which can cause issues with reflectivity when it comes to lighting and image acquisition.

- The need for intelligent image acquisition. Most smart cameras utilize “one and done” techniques. Dynamic exposure can improve the contrast in an image of one gear (the perfect image), but what about a tray of several parts? (No perfect image exists.)*

An ideal environment, explained Jones, would be a system that includes 150 GigE cameras, multiple GigE networks, multiple PLC trigger requests, distributed computing power in multi-core processors, and a single software development environment with all the tools available at every station.

*Jones notes that a smart camera that could take several images with different exposures, combine the high-contrast portions of those images, and analyze a collage of the best possible contrast portions would be the ideal solution.
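
The collage idea Jones describes can be illustrated with a toy sketch. It is not an existing smart camera feature or a GM implementation. It assumes grayscale images stored as nested lists, square tiles, and a deliberately crude contrast measure (the brightest value minus the darkest value in a tile). All names are hypothetical.

```python
def tile_contrast(image, row0, col0, size):
    """Crude contrast measure: brightest minus darkest pixel within the tile."""
    rows, cols = len(image), len(image[0])
    values = [
        image[r][c]
        for r in range(row0, min(row0 + size, rows))
        for c in range(col0, min(col0 + size, cols))
    ]
    return max(values) - min(values)


def best_contrast_collage(exposures, size):
    """Build a collage by taking each tile from the exposure with the most contrast."""
    rows, cols = len(exposures[0]), len(exposures[0][0])
    collage = [[0] * cols for _ in range(rows)]
    for row0 in range(0, rows, size):
        for col0 in range(0, cols, size):
            # Pick the exposure whose tile at this position has the highest contrast.
            best = max(exposures, key=lambda img: tile_contrast(img, row0, col0, size))
            for r in range(row0, min(row0 + size, rows)):
                for c in range(col0, min(col0 + size, cols)):
                    collage[r][c] = best[r][c]
    return collage


# Two synthetic 2x4 exposures of the same scene.
short_exposure = [[10, 90, 250, 255],
                  [20, 80, 255, 255]]   # right half is washed out
long_exposure = [[200, 255, 40, 160],
                 [255, 255, 60, 150]]   # left half is washed out

collage = best_contrast_collage([short_exposure, long_exposure], size=2)
```

Here the left tile is taken from the short exposure and the right tile from the long exposure, so each part of the tray would be analyzed at the exposure that shows it best. A production system would likely blend tiles rather than hard-select them, to avoid visible seams.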

Jones then turned it over to Maslar, who discussed the limitations and potential improvement areas for a few key technologies:

- 3D data visualization. Issues that exist include the fact that some 3D details are lost in the conversion, and that traditional 2D image processing tools do not take advantage of true 3D data.

- Vision sensor fusion. The need is to combine these data types into a unified workspace. New image analysis tools are required to analyze the data in this workspace, and these tools need to be intuitive and work seamlessly with all data types.

- 3D robot guidance. 3D robot guidance is difficult to implement and is prone to errors. Improvements are required in eye-hand calibration, and there is a need for a standard communication protocol between vision systems and robot controllers. Off-line programming would also be something that the industry would benefit from, according to Maslar.
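
To make the eye-hand calibration point concrete: the product of such a calibration is a rigid transform that maps points seen by the camera into the robot's coordinate frame. The sketch below only applies a transform that is assumed to be already known. It does not perform the calibration itself, and the rotation, offsets, and function names are hypothetical values chosen for illustration.

```python
import math


def make_transform(yaw_rad, tx, ty, tz):
    """Build a 4x4 homogeneous transform: rotation about Z, then translation."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def transform_point(transform, point):
    """Map a 3D point from the camera frame into the robot base frame."""
    x, y, z = point
    homogeneous = (x, y, z, 1.0)
    return tuple(
        sum(transform[i][j] * homogeneous[j] for j in range(4)) for i in range(3)
    )


# Hypothetical calibration result: camera rotated 90 degrees about Z,
# mounted 0.5 m along X and 0.2 m up from the robot base.
base_from_camera = make_transform(math.pi / 2, 0.5, 0.0, 0.2)

# A part detected 0.1 m along the camera's X axis and 0.3 m away.
part_in_camera = (0.1, 0.0, 0.3)
part_in_base = transform_point(base_from_camera, part_in_camera)
```

Any error in the calibrated transform carries straight through to the robot's target position, which is one reason calibration quality matters so much for 3D guidance.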

January 22, 2:00 PM ET

Machines That See: New Opportunities in Embedded Vision | Jeff Bier, Embedded Vision Alliance

Machines are useful, mainly, to the extent that they interact with the physical world. With visual information being the richest source of information about the real world, vision is the highest-bandwidth way for machines to obtain information from the real world. Thus, embedded vision can boost efficiency and quality, enhance safety, simplify usability, and enable innovation, explained Bier.

The evolution of vision technology, as Bier broke it down, started with computer vision (research and fundamental technology for extracting meaning from images) and moved to machine vision in factory automation applications. Beyond that lies a new world of opportunities in embedded vision, which includes areas like consumer goods, automotive, medical, defense, retail, gaming, security, education, transportation, and more.

Embedded vision is realized via hardware (processors, sensors, etc.) and software (tools, algorithms, libraries, and APIs).

Bier provided real-life, modern examples, such as a commercial embedded vision system for a severely vision-impaired person who, with this device, is able to do such day-to-day tasks as identify street signs, read from a menu, tell the difference between a $1 bill and a $5 bill, and so on. Another clever commercial product that uses embedded vision is the Dyson 360 Robot Vacuum, which utilizes a 360° vision system to navigate a home and clean in an efficient and systematic manner.

Bier also outlined some of the challenges of implementing embedded vision. These include such factors as:

- For widespread deployment, vision must be implemented in small, low-cost, low-power designs

- These constraints, combined with the high-performance demands of vision, create challenging design problems

- Algorithms are diverse and dynamic

- Modern embedded CPUs may have the muscle, but are often too expensive and consume too much power

- Many vision applications require parallel or specialized hardware (GPU, FPGA, etc.)

- Many product developers lack experience in embedded vision

To tackle these issues, the Embedded Vision Alliance was formed in order to “inspire and empower product creators to incorporate visual intelligence into their products.”

View more information on the Embedded Vision Alliance.

January 22, 1:30 PM ET

Impact of Vision in Drone Applications | Dave Litwiller, Aeryon Labs Inc.

Dave Litwiller of UAV developer and manufacturer Aeryon Labs, Inc. provided an overview of the role of machine vision and computer vision in the unmanned aerial vehicle (UAV) space.

To begin, he discussed the significant growth and evolution of UAVs since 2005. Today, UAVs are not only much smaller and easier to operate, they are also significantly cheaper. In addition, a huge shift from fixed-wing UAVs to vertical takeoff and landing models has taken place. This sector is greatly outperforming the fixed-wing sector and will continue to do so, according to Litwiller.

A quick encapsulation of how much the industry has grown in recent years is the fact that Aeryon Labs achieved 100% year-over-year growth in 2014.

Litwiller then outlined a number of factors that he identified as commercial business catalysts, which included the ability to put airborne imaging tools safely and effectively into the air. Such applications include oil and gas, power generation, transportation infrastructure, building and industrial inspection, photography, cinematography, and agricultural imaging.

When it comes to opportunities for imaging and machine vision, Litwiller noted that roughly 1/5 of the merchant value of a UAV is in its imaging system.

"The role for computer vision and imaging is already significant, and is about to get much larger," he said.

The automation of imaging and computer vision in UAVs depends on its capability in:

- Natural and varying lighting

- Object occlusions and perspective changes

- Shadows from objects and surroundings

- Twilight and night imaging

- Aggregating contiguous discrete images

- User designated object tracking

Future opportunities for vision and imaging, according to Litwiller, include the integration of more sensory data than just visible and infrared imaging, with corresponding expansion of pattern analysis and decision making. This includes:

- Depth sensing

- Visual odometry

- Digital image stabilization

- Hyperspectral

- Ultraviolet

- LIDAR

- Chemical sensors and spectrometers

- Microphones

- RFID readers

In the long term, the ultimate goal of UAVs is the ability to perform far-field sense-and-avoid techniques. Without these, UAVs will never be fully autonomous. In that sense, computer vision is essentially the main component for the biggest UAV opportunity going forward.

One interesting item of note that Litwiller identified is the fact that the United States lags behind other industrialized countries when it comes to UAV opportunities, due to the heavy regulations imposed by the FAA. (Something to keep an eye on moving forward.)

January 22, 11:00 AM ET

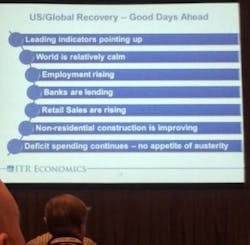

2015 Global Economic Forecast | Alan Beaulieu, Institute for Trend Research

Alan Beaulieu of the Institute for Trend Research provided a general overview of the 2015 global economic forecast, which, on the whole, was thoroughly positive.

“The economy is going to be good in 2015, 2016, and 2017. You do not want to miss the next few years.”

The US economy, which is the largest in the world, has a lot going for it right now, Beaulieu suggested. Seventy-five percent of people in the US think the economy is still in a recession, which is just not the case. Factors for this healthy economy include indicators pointing up, "relative calm" in the world, rising employment numbers, bank lending, healthy retail sales, improvements in non-residential construction, and deficit spending.

The year-over-year growth rate for industrial production in the US is forecast at 2.4%. Japan’s economy is also trending upward, similar to the United States. Brazil, however, does not have a favorable economic outlook in the coming years. And as mentioned in last year’s presentation, Mexico has become increasingly important to the United States economy and will continue to be going forward.

There is a recession coming in 2019, Beaulieu cautioned, so business owners and the like should prepare for this. Rising interest rates will contribute to this, leading to lower car sales, lower home sales, and an overall negative impact on the economy. Ongoing concerns for the global economy include:

- S&P 500 gives way to steeper-than-median decline

- Fear of instability from low oil prices

- Europe slowing down

- South America

- Higher Affordable Care Act costs sap the consumer

- China's debt problems

To help validate these forecasts, here are the results of the Institute for Trend Research’s 2014 projections:

- 98.5% accuracy for US GDP

- 99% accuracy for US employment

- 97.3% accuracy for US industrial production

- 99% accuracy for European industrial production

View more information on Beaulieu and ITR.