MACHINE VISION SOFTWARE: Vision algorithms added to graphical programming software

In his presentation at this year's NI Week, held August 5-8, Dr. Dinesh Nair, Chief Architect and R&D Manager with the Vision Development Group at National Instruments (Austin, TX, USA; www.ni.com), highlighted developments in pattern matching and object tracking algorithms.

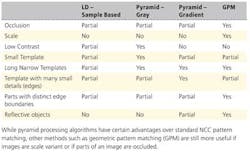

The company already offers normalized grayscale cross-correlation (NCC) and geometric pattern matching techniques in its NI Vision software packages. It has now extended these to include two NCC-based pattern matching techniques that use pyramid processing.

"In standard NCC algorithms," says Nair, "a template image of the pattern to be matched is correlated across the image and the correlation value used to determine the best match." Instead of using all the pixels in the pattern, the existing pattern matching offering uses a small sample of grey-scale values and/or edge features to describe the template. While such templates can be learned quickly and the pattern matching process that results is relatively fast, such algorithms may fail when very small templates are used; the template pixel intensities are pretty homogenous or when the search image is of low contrast.

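As a rough illustration of the standard NCC search Nair describes, the sketch below slides a template over an image and keeps the best correlation score. It is a minimal pure-Python version that uses every template pixel, not NI's sampled implementation; the zero-mean form of NCC and the function names `ncc` and `match_template` are assumptions made for this example.

```python
import math

def ncc(patch, template):
    """Zero-mean normalized cross-correlation of two equal-sized 2D lists."""
    p = [v for row in patch for v in row]
    t = [v for row in template for v in row]
    mp, mt = sum(p) / len(p), sum(t) / len(t)
    num = sum((a - mp) * (b - mt) for a, b in zip(p, t))
    den = math.sqrt(sum((a - mp) ** 2 for a in p) * sum((b - mt) ** 2 for b in t))
    # A perfectly flat patch or template has zero variance, so no score can be formed.
    return num / den if den > 0 else 0.0

def match_template(image, template):
    """Return (best_score, (row, col)) over every placement of the template."""
    th, tw = len(template), len(template[0])
    best = (-2.0, (0, 0))
    for r in range(len(image) - th + 1):
        for c in range(len(image[0]) - tw + 1):
            patch = [row[c:c + tw] for row in image[r:r + th]]
            score = ncc(patch, template)
            if score > best[0]:
                best = (score, (r, c))
    return best
```

The zero-variance guard in `ncc` hints at why nearly homogeneous templates are troublesome: when the denominator approaches zero, the score is dominated by noise.
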
To overcome these limitations, NCC-based techniques that employ pyramid processing can be used. In this technique, a multi-scale representation of the target image is computed by successively applying a low-pass filter and downsampling the image pixels, resulting in a number of different images in a pyramid. In the same manner, the template image is also smoothed and downsampled.
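
The pyramid construction can be sketched in a few lines. This example assumes a 2x2 box average as the low-pass filter (a Gaussian filter is a common alternative) and a downsampling factor of two per level; the article does not say which filter or factor NI uses, and the function names are illustrative only.

```python
def downsample(image):
    """Average each 2x2 block (box low-pass filter) and keep one value per block."""
    rows, cols = len(image) // 2, len(image[0]) // 2
    return [[(image[2 * r][2 * c] + image[2 * r][2 * c + 1] +
              image[2 * r + 1][2 * c] + image[2 * r + 1][2 * c + 1]) / 4.0
             for c in range(cols)]
            for r in range(rows)]

def build_pyramid(image, levels):
    """Return [full resolution, half resolution, quarter resolution, ...]."""
    pyramid = [image]
    for _ in range(levels - 1):
        pyramid.append(downsample(pyramid[-1]))
    return pyramid
```

The same `build_pyramid` call would be applied to both the search image and the template, so that corresponding levels share the same scale.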

To perform pattern matching, the top level of the search image pyramid is correlated with the top level of the template pyramid using the NCC technique. This results in a number of likely matches. For each of these likely matches, the same search procedure is then repeated at each successively lower level of the pyramid, with the highest correlation at the bottom of the pyramid representing the best possible match.
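
The coarse-to-fine search described above might look something like the following sketch. The number of candidates kept (`keep`), the refinement window (`radius`), the 2x2 box filter, and all function names are assumptions for illustration, not details of NI's implementation.

```python
import math

def ncc(patch, template):
    """Zero-mean NCC of two equal-sized 2D lists; returns 0.0 if either is flat."""
    p = [v for row in patch for v in row]
    t = [v for row in template for v in row]
    mp, mt = sum(p) / len(p), sum(t) / len(t)
    num = sum((a - mp) * (b - mt) for a, b in zip(p, t))
    den = math.sqrt(sum((a - mp) ** 2 for a in p) * sum((b - mt) ** 2 for b in t))
    return num / den if den > 0 else 0.0

def downsample(image):
    """Average 2x2 blocks (a crude low-pass filter) and keep one value per block."""
    return [[(image[2 * r][2 * c] + image[2 * r][2 * c + 1] +
              image[2 * r + 1][2 * c] + image[2 * r + 1][2 * c + 1]) / 4.0
             for c in range(len(image[0]) // 2)]
            for r in range(len(image) // 2)]

def score_at(image, template, r, c):
    th, tw = len(template), len(template[0])
    return ncc([row[c:c + tw] for row in image[r:r + th]], template)

def pyramid_match(image, template, levels=2, keep=3, radius=1):
    """Return (score, row, col) of the best match at full resolution."""
    imgs, tmps = [image], [template]
    for _ in range(levels - 1):
        imgs.append(downsample(imgs[-1]))
        tmps.append(downsample(tmps[-1]))
    # Exhaustive NCC search at the coarsest level gives the likely matches.
    top_img, top_tmp = imgs[-1], tmps[-1]
    max_r = len(top_img) - len(top_tmp)
    max_c = len(top_img[0]) - len(top_tmp[0])
    scored = [(score_at(top_img, top_tmp, r, c), r, c)
              for r in range(max_r + 1) for c in range(max_c + 1)]
    candidates = sorted(scored, reverse=True)[:keep]
    # Refine each candidate in a small window at every finer level.
    for level in range(levels - 2, -1, -1):
        img, tmp = imgs[level], tmps[level]
        max_r = len(img) - len(tmp)
        max_c = len(img[0]) - len(tmp[0])
        refined = []
        for _, r, c in candidates:
            local = [(score_at(img, tmp, rr, cc), rr, cc)
                     for rr in range(max(0, 2 * r - radius), min(max_r, 2 * r + radius) + 1)
                     for cc in range(max(0, 2 * c - radius), min(max_c, 2 * c + radius) + 1)]
            if local:
                refined.append(max(local))
        candidates = refined
    return max(candidates)
```

Because only a handful of candidates are re-examined at full resolution, most of the correlation work happens on the small top-level images.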

Two implementations of this algorithm have been developed by NI: grayscale and gradient pyramid matching. In grayscale matching, the grayscale values of all the pixels in the template are used for matching. "This," says Nair, "is useful when the template does not contain structured information, but has intricate textures with dense edges." In gradient pyramid matching, by contrast, a normalized Sobel filter is applied to the template and source image pixels, and only the prominent edge pixels it detects are used for matching. "This algorithm is more resistant to occlusion and intensity changes compared to the grayscale value pyramid processing approach," says Nair.
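
To make the edge-selection step concrete, here is a small sketch that computes a Sobel gradient magnitude and keeps only strong edge pixels. How NI normalizes the filter and decides which edges are "prominent" is not stated; this example assumes the magnitude is divided by its maximum and compared with a hypothetical `fraction` threshold.

```python
import math

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]

def sobel_magnitude(image):
    """Gradient magnitude at interior pixels; border pixels are left at 0."""
    h, w = len(image), len(image[0])
    mag = [[0.0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            gx = sum(SOBEL_X[i][j] * image[r - 1 + i][c - 1 + j]
                     for i in range(3) for j in range(3))
            gy = sum(SOBEL_Y[i][j] * image[r - 1 + i][c - 1 + j]
                     for i in range(3) for j in range(3))
            mag[r][c] = math.hypot(gx, gy)
    return mag

def prominent_edges(image, fraction=0.5):
    """Return (row, col) of pixels whose normalized magnitude is >= fraction."""
    mag = sobel_magnitude(image)
    peak = max(max(row) for row in mag)
    if peak == 0:
        return []  # flat image: no edges to select
    return [(r, c) for r, row in enumerate(mag)
            for c, m in enumerate(row) if m / peak >= fraction]
```

Matching would then be restricted to the returned pixel coordinates, which is one plausible reason such an approach tolerates uniform intensity changes better than raw grayscale values do.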

While such pyramid processing algorithms have certain advantages over sampling-based NCC pattern matching, other methods such as geometric pattern matching (GPM) are still more useful if objects vary in scale between images or if parts of an image are occluded.

While such pattern matching techniques are used to locate specific features in an image, they are not deployed in NI's latest object tracking algorithms. Rather, the company's object tracking algorithms employ a mean shift algorithm that requires the location of an object to be previously known. The starting location of the object can be found using pattern matching techniques.

To accomplish object tracking, information from an object reference model (the feature to be tracked) must first be computed. To do this, the grayscale or color histogram of the object is determined and the influence of any background pixels attenuated. The histogram can then be represented as a kernel that describes the probability density function of the object. This kernel is then used to search for regions in the image that may belong to the object, by assigning to each pixel in the image the probability that it belongs to the object.
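
A simplified grayscale version of this reference model is sketched below. It assumes an Epanechnikov-style weighting, which down-weights pixels far from the window center, as the way background influence is attenuated. The bin count, the weighting profile, and the function names are illustrative choices rather than NI's documented method.

```python
def weighted_histogram(image, center, half_size, bins=8, max_value=256):
    """Normalized histogram of a window; pixels near the center weigh more."""
    cr, cc = center
    hr, hc = half_size
    hist = [0.0] * bins
    for r in range(cr - hr, cr + hr + 1):
        for c in range(cc - hc, cc + hc + 1):
            if 0 <= r < len(image) and 0 <= c < len(image[0]):
                d2 = ((r - cr) / (hr + 1)) ** 2 + ((c - cc) / (hc + 1)) ** 2
                weight = max(0.0, 1.0 - d2)  # falls off toward the window edge
                hist[image[r][c] * bins // max_value] += weight
    total = sum(hist)
    return [h / total for h in hist] if total > 0 else hist

def back_project(image, hist, bins=8, max_value=256):
    """Probability image: each pixel gets the model weight of its own bin."""
    return [[hist[v * bins // max_value] for v in row] for row in image]
```

Pixel values are assumed to be integers in the range 0 to 255; a color model would use a multi-dimensional histogram in the same way.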

When an object moves from its current location to a new location, the mean shift algorithm computes the new location by running the kernel over the image and continuously computing the centroid of the probability image until convergence.
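
The iteration can be sketched as follows, assuming a rectangular window with uniform weights and a probability image supplied as a 2D list. The window shape, the rounding to whole pixels, and the `max_iter` cap are simplifications for this example.

```python
def mean_shift(prob, center, half_size, max_iter=20):
    """Move a window to the centroid of the probability image until it stops moving."""
    r0, c0 = center
    hr, hc = half_size
    for _ in range(max_iter):
        total = sum_r = sum_c = 0.0
        for r in range(max(0, r0 - hr), min(len(prob), r0 + hr + 1)):
            for c in range(max(0, c0 - hc), min(len(prob[0]), c0 + hc + 1)):
                w = prob[r][c]
                total += w
                sum_r += w * r
                sum_c += w * c
        if total == 0:
            break  # no object probability inside the window: track lost
        nr, nc = round(sum_r / total), round(sum_c / total)
        if (nr, nc) == (r0, c0):
            break  # converged
        r0, c0 = nr, nc
    return r0, c0
```

Note that the window must overlap the object at the start of each frame, which is why the object's previous location has to be known.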

However, because the mean shift algorithm is not invariant to changes in scale or geometry, it cannot be used to track objects that change in size or shape. To do so, the shape-adapted mean shift algorithm is required. In this approach, an asymmetric kernel, such as an ellipse, is used to describe the shape of the object within the image more accurately. As with the mean shift algorithm, this kernel is then run over the image and the new location of the object computed. After this is accomplished, the target model is updated with new scale and shape information.
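
The article does not describe how the scale and shape update is computed. One common way to estimate an elliptical region from a probability image, used in CAMShift-style trackers, is through second-order image moments. The sketch below shows that moment-based estimate as a stand-in, not as NI's shape-adapted algorithm.

```python
import math

def ellipse_from_moments(prob):
    """Return ((row, col), (semi_major, semi_minor), angle) or None if prob is empty."""
    m00 = sum(sum(row) for row in prob)
    if m00 == 0:
        return None
    cr = sum(r * w for r, row in enumerate(prob) for w in row) / m00
    cc = sum(c * w for row in prob for c, w in enumerate(row)) / m00
    mu_rr = sum((r - cr) ** 2 * w for r, row in enumerate(prob) for w in row) / m00
    mu_cc = sum((c - cc) ** 2 * w for row in prob for c, w in enumerate(row)) / m00
    mu_rc = sum((r - cr) * (c - cc) * w
                for r, row in enumerate(prob) for c, w in enumerate(row)) / m00
    # Eigenvalues of the 2x2 covariance matrix give the spread along each axis.
    mean = (mu_rr + mu_cc) / 2.0
    spread = math.sqrt(((mu_rr - mu_cc) / 2.0) ** 2 + mu_rc ** 2)
    # For a uniformly filled ellipse, the semi-axis equals 2 * sqrt(variance).
    semi_major = 2.0 * math.sqrt(mean + spread)
    semi_minor = 2.0 * math.sqrt(max(0.0, mean - spread))
    # Orientation of the major axis, measured from the column (x) axis.
    angle = 0.5 * math.atan2(2.0 * mu_rc, mu_cc - mu_rr)
    return (cr, cc), (semi_major, semi_minor), angle
```

Recomputing these parameters after each position update would give the tracker a new kernel size and orientation for the next frame.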
