Headlines were made earlier this year when a National Transportation Safety Board (NTSB) administrative law judge dismissed the Federal Aviation Administration's (FAA) case against Raphael Pirker, the first person the FAA tried to fine for flying a UAV commercially.

Now, BP (London, UK; www.bp.com) and UAV manufacturer AeroVironment (Monrovia, CA, USA; www.avinc.com) have received FAA permission to fly a commercial UAV over land to perform aerial surveys of Alaska's North Slope.

AeroVironment flew its Puma AE UAV for the first time on June 8 to survey BP pipelines, roads and equipment at Prudhoe Bay, the largest oil field in the United States. According to the FAA, the test flights demonstrated that the Puma could fly safely over land.

Until now, the FAA had approved UAV use only for public safety or academic research, on a case-by-case basis. In May, however, Bloomberg reported that the FAA was considering a streamlined approval process for small UAVs used in filmmaking, utilities inspection, agriculture and other low-risk operations.

The vision-enabled Puma AE is a small, hand-launched UAV about 4.5 ft long with a 9 ft wingspan. It has a battery life of about 3.5 hours and typically flies at less than 45 mph, 200 to 400 ft above the ground. The UAV will perform tasks such as road mapping, pipeline inspection, estimating the volume of gravel pits, monitoring wildlife and ice floes, and assisting in search-and-rescue operations.
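As a rough illustration of what those figures imply for survey coverage, the Python sketch below multiplies the quoted endurance by the quoted upper cruise speed. It assumes constant speed, no wind and the full 3.5 hours spent in transit; real flights keep a battery reserve and are also limited by datalink range, so treat the results as upper bounds rather than mission-planning numbers.

```python
# Upper-bound range estimate from the quoted Puma AE figures.
# Assumptions: constant cruise speed, no wind, and the full quoted endurance
# spent in transit (real flights keep a battery reserve).

ENDURANCE_H = 3.5      # quoted battery life, hours
SPEED_MPH = 45.0       # quoted typical upper speed, mph


def max_distance_miles(endurance_h: float, speed_mph: float) -> float:
    """Distance covered in one battery cycle at constant speed."""
    return endurance_h * speed_mph


def out_and_back_radius_miles(endurance_h: float, speed_mph: float) -> float:
    """Farthest point reachable when the aircraft must return to its launch site."""
    return max_distance_miles(endurance_h, speed_mph) / 2.0


if __name__ == "__main__":
    print(f"One-way upper bound: {max_distance_miles(ENDURANCE_H, SPEED_MPH):.1f} miles")
    print(f"Out-and-back radius: {out_and_back_radius_miles(ENDURANCE_H, SPEED_MPH):.1f} miles")
```

Run as a script, it prints an upper bound of 157.5 miles one way, or a radius of about 78.8 miles for a flight that must return to its launch point.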

Curiosity Rover captures images of Martian mountain

NASA has released a mosaic of images captured by the Curiosity Rover's Mast Camera (Mastcam) instrument showing Mars' three-mile-high Mount Sharp.

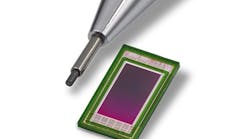

Curiosity's Mastcam consists of two cameras with different focal lengths and color filters, allowing detailed images to be captured of objects both near to and far from the Rover. The Mastcam-34 has a 34 mm focal length, f/8 lens that captures a 15° square field of view (FOV), and the Mastcam-100 has a 100 mm focal length, f/10 lens that captures a 5.1° square FOV. Both cameras use a KAI-2020 1600 x 1200 pixel interline transfer CCD image sensor from ON Semiconductor (Phoenix, AZ, USA; www.onsemi.com).
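The quoted focal lengths and fields of view also determine how much detail each camera resolves. The Python sketch below estimates the angle subtended by one pixel (the instantaneous field of view, or IFOV) and the entrance-pupil diameter for each camera. It assumes that each square FOV spans 1200 pixels, the short side of the 1600 x 1200 sensor, and uses a hypothetical 1 km range to convert angles into distances.

```python
import math

# Per-pixel angular resolution (IFOV) for the two Mastcam cameras.
# Assumption: each square FOV spans 1200 pixels, the short side of the
# 1600 x 1200 sensor. The 1 km range used below is hypothetical.

SQUARE_PIXELS = 1200

CAMERAS = {
    # name: (focal length in mm, f-number, square FOV in degrees)
    "Mastcam-34": (34.0, 8.0, 15.0),
    "Mastcam-100": (100.0, 10.0, 5.1),
}


def ifov_mrad(fov_deg: float, pixels: int = SQUARE_PIXELS) -> float:
    """Angle subtended by one pixel, in milliradians."""
    return math.radians(fov_deg) / pixels * 1000.0


def aperture_mm(focal_mm: float, f_number: float) -> float:
    """Entrance-pupil diameter from the definition N = f / D."""
    return focal_mm / f_number


def pixel_footprint_m(ifov: float, range_m: float) -> float:
    """Approximate distance spanned by one pixel at a given range (small-angle)."""
    return ifov / 1000.0 * range_m


if __name__ == "__main__":
    for name, (focal, n, fov) in CAMERAS.items():
        ifov = ifov_mrad(fov)
        print(f"{name}: IFOV {ifov:.3f} mrad, aperture {aperture_mm(focal, n):.2f} mm, "
              f"{pixel_footprint_m(ifov, 1000.0):.2f} m per pixel at 1 km")
```

Under these assumptions the Mastcam-34 resolves about 0.22 mrad per pixel (roughly 22 cm at 1 km) and the Mastcam-100 about 0.074 mrad (roughly 7 cm at 1 km). The ratio of the two IFOVs, about 2.9, matches the ratio of the focal lengths, as expected for two cameras with the same pixel pitch.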

The view of Mount Sharp was mosaicked from dozens of Mastcam-100 images taken on September 20, 2012.

Mount Sharp, also known as Aeolis Mons, is a layered mound in the center of Mars' Gale Crater. The "mountain" rises more than three miles above the crater floor, where the Curiosity Rover has been operating since landing in August 2012.

The lower slopes of Mount Sharp are a major focus of the mission, but the Rover will first spend more weeks at a Martian location known as Yellowknife Bay, where evidence has been found of a past environment that may have supported microbial life.

The Jet Propulsion Laboratory (Pasadena, CA, USA; www.jpl.nasa.gov) built the rover and manages the project for NASA's Science Mission Directorate in Washington. Curiosity's Mastcam was built and is operated by Malin Space Science Systems (San Diego, CA, USA; www.msss.com).

Vision visualizes high-speed jet engine burn

The Bloodhound Project (Bristol, UK; www.bloodhoundssc.com) ultimately aims to set a 1,000 mph world land speed record with its Bloodhound SSC supersonic car. The car, which is currently in development, recently underwent its first phase of rocket plume imaging tests, conducted to determine the best wavelength range for visualizing the plume from the car's rocket.

The tests were carried out by Dr. Adam Baker at Kingston University using three cameras, each imaging a different wavelength band, supplied by Stemmer Imaging (Puchheim, Germany; www.stemmer-imaging.com).

Visible wavelength images were captured using a Genie M640 camera from Teledyne DALSA (Waterloo, ON, Canada; www.teledynedalsa.com), while a CM140GE camera from JAI (San Jose, CA, USA; www.jai.com) was used to capture images in the ultraviolet spectrum. Finally, a Goldeye P032 camera from Allied Vision Technologies (Stadtroda, Germany; www.alliedvisiontec.com) captured infrared images.

All of the cameras were interfaced to an EOS-1200 embedded vision system from ADLINK (San Jose, CA, USA; www.adlinktech.com) equipped with an Intel Core i7 processor. The EOS-1200 features four independent GigE ports for connecting multiple GigE Vision devices, with aggregate transfer rates of up to 4 Gbit/s.
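To see why bandwidth matters in a multi-camera setup like this one, the Python sketch below adds up raw (uncompressed) data rates for three cameras and checks each against a single 1 Gbit/s GigE port. The resolutions, bit depths and frame rates are placeholders, not the actual settings used in the Kingston tests, and the 90% usable-payload figure is an assumed round number.

```python
from dataclasses import dataclass

# Raw-bandwidth budget for several GigE Vision cameras on one host.
# The camera settings below are placeholders, not the settings used in the
# Bloodhound tests; the 90% usable-payload figure is also an assumption.

GIGE_LINK_BPS = 1_000_000_000   # nominal 1 Gbit/s per GigE port
USABLE_FRACTION = 0.9           # assumed share of the link left for image data


@dataclass
class CameraStream:
    name: str
    width: int
    height: int
    bits_per_pixel: int
    fps: float

    def bits_per_second(self) -> float:
        """Uncompressed image data rate for this stream."""
        return self.width * self.height * self.bits_per_pixel * self.fps


def fits_on_own_port(stream: CameraStream) -> bool:
    """True if the stream fits on a dedicated GigE port under the assumptions above."""
    return stream.bits_per_second() <= GIGE_LINK_BPS * USABLE_FRACTION


def total_megabytes_per_second(streams: list[CameraStream]) -> float:
    """Aggregate uncompressed data rate across all streams, in MB/s."""
    return sum(s.bits_per_second() for s in streams) / 8 / 1e6


# Hypothetical settings, one camera per spectral band.
STREAMS = [
    CameraStream("visible", 640, 480, 8, 60.0),
    CameraStream("ultraviolet", 1392, 1040, 8, 30.0),
    CameraStream("infrared", 320, 256, 16, 100.0),
]

if __name__ == "__main__":
    for s in STREAMS:
        print(f"{s.name}: {s.bits_per_second() / 1e6:.1f} Mbit/s, "
              f"fits on own port: {fits_on_own_port(s)}")
    rate = total_megabytes_per_second(STREAMS)
    print(f"total: {rate:.1f} MB/s, about {rate * 60 / 1000:.1f} GB per minute")
```

With these placeholder settings each stream fits on its own port, but together they produce roughly 78 MB/s of uncompressed video, several gigabytes per minute, which is the kind of volume that makes real-time compression attractive.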

To handle the high volume of data generated by the system, a custom version of the Gecko recording software from Vision Experts (Surrey, UK; www.visionexperts.co.uk) was used for real-time video compression.

Gecko records images in standard formats such as AVI and MPEG and lets users view, control and record up to four cameras.

According to a video posted by Stemmer Imaging at http://bit.ly/1v4es8D, the ultraviolet camera produced the best-quality image data of the rocket plume.

Robotic arm catches fast moving objects

Researchers from the Learning Algorithms and Systems Laboratory (LASA) at École polytechnique fédérale de Lausanne (Lausanne, Switzerland; www.epfl.ch) have developed a robotic system that uses infrared cameras, motion analysis software and non-linear regression methods to enable a robotic arm to catch flying objects of various shapes in less than 50 ms.

The 1.5 m arm from KUKA Robotics (Shelby Township, MI, USA; www.kuka-robotics.com) used in the research has three joints, seven degrees of freedom and a four-fingered hand, and rests in an upright position. The system was designed to test robotic solutions for catching moving objects of irregular shape.

As objects are thrown toward the robot, an array of S250e global shutter infrared cameras from OptiTrack (Corvallis, OR, USA; www.optitrack.com) tracks each object at 250 Hz.

OptiTrack's ARENA motion analysis software, which is based on 3D marker tracking, then measures the object's position and orientation.

The real-time motion tracking method was tested with a ball, a full bottle, a half-full bottle, a hammer and a ping-pong racket.

When an object is thrown, the cameras capture the object's trajectory, speed and rotational movement.

Regression analysis of this data is then used to predict the object's grasping point.
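The article does not detail LASA's regression models, so the Python sketch below is only a simplified stand-in for the idea of predicting where a thrown object will be. It assumes point-mass ballistic flight with known gravity and no drag or rotation, fits the initial position and velocity to noisy 250 Hz samples by linear least squares, and extrapolates to a hypothetical catch plane at x = 0. The throw parameters and noise level are made up for illustration. Real objects such as a half-full bottle or a hammer tumble and wobble, which this simple model does not capture.

```python
import numpy as np

# Simplified ballistic stand-in for trajectory prediction (not the LASA method).
# Assumptions: point mass, known gravity, no drag or rotation, z-up frame.
G = np.array([0.0, 0.0, -9.81])  # gravity, m/s^2


def fit_ballistic(t, pos):
    """Least-squares fit of p(t) = p0 + v0*t + 0.5*G*t**2 with G known.

    Subtracting the known gravity term leaves a model that is linear in the
    unknowns p0 and v0, so one least-squares solve covers all three axes.
    """
    residual = pos - 0.5 * np.outer(t**2, G)     # remove the gravity term
    A = np.column_stack([np.ones_like(t), t])     # design matrix [1, t]
    coef, *_ = np.linalg.lstsq(A, residual, rcond=None)
    return coef[0], coef[1]                        # p0, v0 (each shape (3,))


def predict_crossing(p0, v0, plane_x):
    """Time and position at which the fitted path reaches x = plane_x, or None."""
    if abs(v0[0]) < 1e-9:
        return None                                # no motion along x
    t_hit = (plane_x - p0[0]) / v0[0]
    if t_hit < 0:
        return None                                # crossing would lie in the past
    return t_hit, p0 + v0 * t_hit + 0.5 * G * t_hit**2


def simulate_throw(rate_hz=250.0, duration_s=0.1, noise_m=0.001, seed=0):
    """Hypothetical noisy marker positions for a made-up throw toward x = 0."""
    rng = np.random.default_rng(seed)
    n = int(round(duration_s * rate_hz))
    t = np.arange(n) / rate_hz
    p0_true = np.array([3.0, 0.2, 1.0])            # start 3 m from the plane
    v0_true = np.array([-6.0, 0.1, 2.0])           # moving toward the robot
    clean = p0_true + np.outer(t, v0_true) + 0.5 * np.outer(t**2, G)
    return t, clean + rng.normal(0.0, noise_m, clean.shape)


if __name__ == "__main__":
    t, observed = simulate_throw()
    p0, v0 = fit_ballistic(t, observed)
    result = predict_crossing(p0, v0, plane_x=0.0)
    if result is not None:
        t_hit, p_hit = result
        print(f"predicted crossing at t = {t_hit:.3f} s, position = {np.round(p_hit, 3)} m")
```

With 100 ms of simulated tracking data (25 samples), the predicted crossing time comes out close to the true value of 0.5 s. Fixing gravity rather than fitting a free quadratic per axis keeps the model linear in its unknowns, which makes the extrapolation far less sensitive to measurement noise over such a short observation window.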
