Google makes 3D sensing Project Tango tablet available to public

In early 2014, Google announced Project Tango, which involved a partnership with universities, research institutions, and industrial partners to give mobile devices an understanding of space and motion. More than one year later, the device is now available to the public.

The project specifically concentrated all of the technology gathered from its various partners into a single mobile phone containing customized hardware and software designed to track the full 3D motion of the device while simultaneously creating a map of the environment. Sensors in the device update its position and orientation and combine the data into a single 3D model of the space around the user.

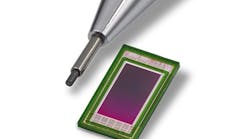

OmniVision Technologies (Santa Clara, CA, USA; www.ovt.com) supplies its OV4682 and OV7251 CMOS image sensors for the device. The OV4682 records both RGB and IR information, the latter of which is used for depth analysis. The 1/3in, 4MPixel color sensor features a 2µm pixel size, a frame rate of 90 fps at full resolution and 330 fps at 672 x 380 pixels.

The OV7251 global shutter image sensor is used for the device’s motion tracking and orientation. The 1/7.5in VGA OV7251 sensor features a 2µm pixel size and a frame rate of 100fps. Both sensors feature programmable controls for frame rate, mirror and flip, cropping, and windowing.
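To put the quoted frame rates in perspective, the short Python sketch below estimates the raw pixel throughput of each sensor mode. It is illustrative arithmetic only. The function name `pixel_rate` is hypothetical, the 4MPixel figure is treated as exactly 4,000,000 pixels, and VGA is assumed to mean 640 x 480 pixels.

```python
def pixel_rate(pixels_per_frame: int, fps: float) -> float:
    """Return raw pixel throughput in megapixels per second."""
    return pixels_per_frame * fps / 1e6


# Resolutions marked "assumed" are not stated exactly in the article.
modes = {
    "OV4682 full resolution (assumed 4,000,000 pixels)": (4_000_000, 90),
    "OV4682 at 672 x 380": (672 * 380, 330),
    "OV7251 VGA (assumed 640 x 480)": (640 * 480, 100),
}

for name, (pixels, fps) in modes.items():
    print(f"{name}: {pixel_rate(pixels, fps):.1f} MPixel/s")
```

Under these assumptions, the OV4682 moves roughly 360 MPixel/s at full resolution, about 84 MPixel/s in the 672 x 380 mode, and the OV7251 about 31 MPixel/s.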

“Project Tango aims to change the way mobile devices interact with the world by tracking and mapping the full 3D motion of the device in relation to its environment. As such, computer vision is the crux of this device,” says Johnny Lee, Technical Program Lead, Advanced Technology and Projects at Google.

In addition, the Myriad 1 computer vision processor from Movidius (San Mateo, CA, USA; www.movidius.com) helps to process data for the Tango device.

The tablet was offered for $1,024 solely to developers, with the understanding that the device was little more than a proof of concept and an opportunity for developers to use 3D technology to create applications such as indoor navigation/mapping, gaming, and the development of algorithms for processing sensor data. Now, the device is available to the public for $512. With a purchase, however, comes a warning from Google:

“The Project Tango Tablet Development Kit is intended for developers, not consumers. Please be aware that the tablet is not a consumer-oriented device. The tablet has known issues and will receive regular updates which may modify the device’s functionality as the platform evolves. By purchasing this device, you acknowledge you understand this risk.”

Supersonic car vision system tested in South Africa

With the ultimate goal of setting a world land speed record, the Bloodhound Project (Bristol, England; www.bloodhoundssc.com) has tested and updated the vision system planned for its Bloodhound SSC supersonic car in recent months, in an effort to provide live video transmission from the car while it travels at up to 1,000 mph.

Recent tests in the desert at Hakskeen Pan, South Africa, in which video from the vision system installed on a Jaguar (Whitley, England; www.jaguar.com) F-type vehicle was transmitted to a jet aircraft, demonstrated successful video and audio transmission. The tests prepare for the next phase of trials, which will integrate the system into the Bloodhound car, according to Stemmer Imaging (Puchheim, Germany; www.stemmer-imaging.com).

In the design of the system, three compact EOS embedded multi-core vision systems from ADLINK Technology (San Jose, CA, USA; www.adlinktech.com) each accept up to four independent HD feeds from GigE cameras at any of the 25 camera locations around the car, so that data from 12 cameras can be acquired on any one run. Each recorder also provides a single H.264 video stream from any of its four inputs for live transmission to the control center.

The video data stream output will be connected to the Bloodhound’s cockpit instrument panel computer and the vehicle’s radio modems via a router. The independent channels from each recorder can be simultaneously transmitted in real time and the cockpit instrument panel computer can also display one of these channels on one of the cockpit instrument displays.

Five of the car’s 25 camera locations are safety-critical locations, and camera feeds from these will be used on all runs. These cameras monitor the instruments and controls, forward facing and rear facing fin tops, the rocket fuel connection hose and the rocket plume.
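The camera budget described above (three recorders with four inputs each, five feeds reserved on every run) can be sketched in a few lines of Python. This is a hypothetical planning helper, not the Bloodhound team's software. The camera labels, the `plan_run` function, and the round-robin assignment are all assumptions made for illustration.

```python
RECORDERS = 3              # EOS recorders in the car
INPUTS_PER_RECORDER = 4    # HD camera feeds each recorder accepts

# Hypothetical labels for the five safety-critical views named in the article.
SAFETY_CRITICAL = [
    "instruments_and_controls",
    "fin_top_forward",
    "fin_top_rear",
    "rocket_fuel_hose",
    "rocket_plume",
]


def plan_run(optional_cameras):
    """Assign cameras to recorder inputs for one run.

    Safety-critical cameras are always included; optional cameras fill
    the remaining inputs. Returns one list of camera names per recorder.
    """
    capacity = RECORDERS * INPUTS_PER_RECORDER
    selected = SAFETY_CRITICAL + list(optional_cameras)
    if len(set(selected)) != len(selected):
        raise ValueError("each camera may be selected only once")
    if len(selected) > capacity:
        raise ValueError(
            f"{len(selected)} cameras selected, but only {capacity} inputs exist"
        )
    # Deal cameras out round-robin so no recorder exceeds its four inputs.
    plan = [[] for _ in range(RECORDERS)]
    for index, camera in enumerate(selected):
        plan[index % RECORDERS].append(camera)
    return plan


# Seven hypothetical optional cameras fill the inputs left after the five
# safety-critical feeds (12 - 5 = 7).
optional = [f"optional_camera_{n}" for n in range(1, 8)]
for recorder, cameras in enumerate(plan_run(optional), start=1):
    print(f"recorder {recorder}: {cameras}")
```

With five inputs always taken, seven remain on each run for cameras at the other 20 locations. Requesting an eighth optional camera raises an error.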

Further developments in the wing camera mounting and optics are also underway to minimize distortion. The viewing angles and mountings for the cameras that will monitor the wheel/ground interface are being redesigned as well.

Last year, small-scale rocket plume imaging tests were carried out, which indicated that the UV range of the spectrum provides useful information about the rocket output. A CM-140 GE-UV camera and a CB-140 GE-RA color camera from JAI (San Jose, CA, USA; www.jai.com) have been selected to monitor the rocket on the Bloodhound car.

The CM-140 GE-UV camera features a UV-sensitive 1/2in monochrome ICX407BLA 1.4MPixel CCD image sensor from Sony (Tokyo, Japan; www.sony.com) with a 4.65µm pixel size and operates at 16fps at full resolution.

The JAI CB-140 GE-RA camera features a 1.4 MPixel Bayer mosaic color progressive scan Sony ICX267 CCD with a 4.65µm pixel size and operates at 31fps at full resolution. The GigE Vision camera features external trigger modes, and higher frame rates are attainable by using partial scan or vertical binning modes.

The next stage of testing will be on the rocket used on the Bloodhound. The rocket plume will be recorded in Finland using a high-speed camera from Optronis (Kehl, Germany; www.optronis.com).

iPhone microscope automates parasite detection

Researchers from the University of California, Berkeley (Berkeley, CA, USA; www.berkeley.edu) have developed an iPhone-based microscope that uses video and a motion analysis algorithm to automatically detect and quantify infection by parasitic worms in a drop of blood.

Teaming up with Dr. Thomas Nutman from the National Institute of Allergy and Infectious Diseases (Rockville, MD, USA; www.niaid.nih.gov) and collaborators from Cameroon and France, the UC Berkeley engineers conducted a pilot study for the device, called the CellScope, in Cameroon, where health officials have been battling the parasitic worm diseases onchocerciasis (river blindness) and lymphatic filariasis.

River blindness is transmitted through the bite of blackflies and is the second-leading cause of infectious blindness worldwide. Lymphatic filariasis, spread by mosquitoes, leads to elephantiasis, a condition marked by painful, disfiguring swelling. It is the second-leading cause of disability worldwide and, like river blindness, is highly endemic in certain regions in Africa.

An antiparasitic drug called ivermectin, or IVM, can treat these diseases, but mass public health campaigns to administer the medication have been stalled because of potentially fatal side effects for patients co-infected with Loa loa, which causes loiasis, or African eye worm. When there are high levels of microscopic Loa loa worms in a patient, treatment with IVM can potentially lead to severe or fatal brain or other neurological damage. These side effects, together with the difficulty of rapidly quantifying Loa levels in patients before treatment, make it too risky to broadly administer IVM, representing a major setback in the efforts to eradicate river blindness and elephantiasis.

As a result, the team is testing its latest generation of the smartphone microscope, called CellScope Loa. The device pairs an iPhone with a 3D-printed plastic base where a sample of blood is positioned. This base includes an LED array, an open-source microcontroller board from Arduino (www.arduino.cc), gears, circuitry and a USB port.

The CellScope Loa is controlled through a smartphone application developed by the researchers specifically for this purpose. Healthcare workers tap the screen, and the phone communicates via Bluetooth with controllers in the base to process and analyze the sample of blood.

Gears move the blood sample in front of the iPhone camera, and an algorithm automatically analyzes the "wriggling" motion of the worms in video captured by the phone. The worm count is then shown on the iPhone's display.
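The article does not describe the researchers' motion analysis algorithm. As a rough illustration of the general idea of counting objects by their movement rather than their shape, the Python sketch below uses simple frame differencing on a tiny synthetic video. It is not the CellScope Loa method. The function names, the brightness threshold, and the test frames are all assumptions.

```python
from collections import deque


def motion_mask(frames, threshold):
    """Mark pixels whose brightness changes by more than `threshold`
    between any two consecutive frames."""
    rows, cols = len(frames[0]), len(frames[0][0])
    mask = [[False] * cols for _ in range(rows)]
    for prev, curr in zip(frames, frames[1:]):
        for r in range(rows):
            for c in range(cols):
                if abs(curr[r][c] - prev[r][c]) > threshold:
                    mask[r][c] = True
    return mask


def count_regions(mask):
    """Count 4-connected regions of True pixels with a breadth-first search."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    regions = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and not seen[r][c]:
                regions += 1
                seen[r][c] = True
                queue = deque([(r, c)])
                while queue:
                    y, x = queue.popleft()
                    for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
    return regions


def count_moving_objects(frames, threshold=50):
    """Estimate how many separate objects moved during the clip."""
    return count_regions(motion_mask(frames, threshold))


def make_frame(bright_pixels, size=8, background=10, bright=200):
    """Build a synthetic grayscale frame with a few bright pixels."""
    frame = [[background] * size for _ in range(size)]
    for r, c in bright_pixels:
        frame[r][c] = bright
    return frame


# One static bright spot (ignored) and two spots that shift back and forth.
static = (1, 1)
frames = [
    make_frame([static, (3, 2), (6, 5)]),
    make_frame([static, (3, 3), (6, 6)]),
    make_frame([static, (3, 2), (6, 5)]),
]
print(count_moving_objects(frames))  # prints 2
```

The static spot never changes between frames, so it contributes nothing to the motion mask. Only the two shifting spots are counted, which is why a motion-based approach can ignore stationary blood cells and debris.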

Daniel Fletcher, associate chair and professor of bioengineering at UC Berkeley, says previous field tests have revealed that the unit helps reduce the rate of human error. In total, the procedure takes two minutes, allowing health workers to determine on site whether it is safe to administer IVM.

"We previously showed that mobile phones can be used for microscopy, but this is the first device that combines the imaging technology with hardware and software to create a complete diagnostic system," said Fletcher. "The video CellScope provides results that enable health workers to make potentially life-saving treatment decisions in the field."

Vision-guided robot tandem provides automation for logistics industry

Fetch Robotics (San Jose, CA, USA; www.fetchrobotics.com) has developed a vision-guided robot tandem called "Fetch and Freight," which is designed to autonomously complete various tasks in logistics and warehouse operations. The system consists of a mobile base (Freight) and an advanced mobile manipulator (Fetch), and is intended to assist workers in warehouse environments.

Fetch and Freight are built upon the open-source robot operating system (ROS) and include software to support the robots and integrate them within a warehouse environment. The robots are designed to work alongside humans, performing such tasks as warehouse delivery and pick and pack operations.

Each of the two robots features different sensors for autonomous navigation. Fetch has a modular gripper and includes an Ethernet connection so that developers can integrate a vision camera. It also has a PrimeSense RGB 3D depth sensor from Intel (Santa Clara, CA, USA; www.intel.com) that is capable of pan and tilt, and a number of mounting points for additional sensors, should they be required. Fetch's base houses a charging dock, a 25m range laser scanner for navigation, and an obstacle avoidance laser from SICK (Waldkirch, Germany; www.sick.com). Freight also features a 25m range 2D laser scanner, which it uses to detect workers and follow them at speeds of up to 2m/s.

Both robots can autonomously recharge via a common dock, which is designed with a distinctive shape to help the duo locate it with lasers. The docks can charge the robots in 20min bursts to maximize uptime, but during less busy times, the docks can perform three-hour deep cycle charges.

Fetch Robotics has yet to release pricing for the robots but plans to ship both this year. Fetch and Freight may initially be deployed in warehouses within the next few months.