People with severe disabilities often find it difficult to steer motorized wheelchairs in confined environments. In a house with limited maneuvering space, someone with impaired vision or unsteady hand coordination can struggle. Maneuvers such as approaching furniture without colliding with it or crossing a doorway threshold require a level of hand-eye coordination that some disabled individuals lack. For some of these patients, learning to use a motorized wheelchair can take months or years.

To help such individuals, Steve B. Skaar and Guillermo Del Castillo of the Dexterity, Vision and Control group in the Department of Aerospace and Mechanical Engineering at the University of Notre Dame (Notre Dame, IN, USA; www.nd.edu), together with Linda Fehr of the Rehabilitation Research & Development and Spinal Cord Injury Service at the Hines Veterans Administration Hospital (Hines, IL, USA; www.research.hines.med.va.gov/), have borrowed a page from industrial robotics to develop a trainable, voice-activated wheelchair. The system is trained by teaching it paths that connect wheelchair positions and orientations; data collected from ultrasound and visual sensors during teaching are then used to develop trajectories that the rider can activate on command. According to Del Castillo, using wall-mounted visual reference cues during both teaching and tracking eliminates the need for globally accurate mapping.

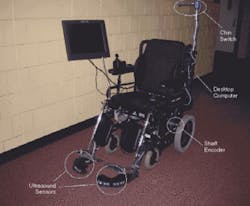

The rider activates the taught trajectories by selecting the desired destination from an audio menu, using only a binary switch suited to his or her abilities, such as a chin switch. The switch is connected to the parallel printer port of an onboard computer, which then pieces together taught segments to connect the chair's current location to the selected destination. "The rider can also use the same switch to halt the chair, resume travel, or reverse course at any point midmaneuver," says Del Castillo.
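The article does not describe how the onboard computer chains taught segments together. One plausible approach, sketched here purely as an assumption, treats each taught segment as a directed edge between two taught chair poses and runs a breadth-first search from the current pose to the chosen destination. The function name, node names, and segment names are hypothetical.

```python
from collections import deque


def chain_segments(segments, start, goal):
    """Return the ids of taught segments leading from start to goal.

    segments: iterable of (segment_id, start_node, end_node) tuples, where
    nodes stand for taught chair poses (hypothetical representation).
    Uses breadth-first search, so the result has the fewest segments.
    Returns None if the goal cannot be reached with the taught segments.
    """
    outgoing = {}
    for seg_id, a, b in segments:
        outgoing.setdefault(a, []).append((seg_id, b))

    previous = {start: None}  # node -> (segment_id, predecessor node)
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            break
        for seg_id, nxt in outgoing.get(node, []):
            if nxt not in previous:
                previous[nxt] = (seg_id, node)
                queue.append(nxt)

    if goal not in previous:
        return None
    # Walk back from the goal to recover the segment sequence.
    path = []
    node = goal
    while previous[node] is not None:
        seg_id, node = previous[node]
        path.append(seg_id)
    path.reverse()
    return path


# Illustrative taught segments in a home (hypothetical names).
taught = [
    ("bed_to_hall", "bed", "hall"),
    ("hall_to_kitchen", "hall", "kitchen"),
    ("hall_to_bath", "hall", "bath"),
    ("kitchen_to_hall", "kitchen", "hall"),
]
print(chain_segments(taught, "bed", "kitchen"))
```

Breadth-first search minimizes the number of segments; weighting each edge by segment length and switching to Dijkstra's algorithm would instead favor the shortest travel distance.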

To measure wheel rotation, the Sabre power wheelchair is fitted with two incremental rotary encoders connected to a quadrature encoder input board with four inputs. The board is installed in a Dell (Austin, TX, USA; www.dell.com) OptiPlex GX240 desktop running Windows XP Pro. To digitize reference images, two CV-M50 cameras from Costar Video Systems (Carrollton, TX, USA; www.costarvideo.com) are mounted below the seat of the vehicle and connected to a DT3162 frame-grabber board from Data Translation (Marlboro, MA, USA; www.datx.com). This board can capture 640 × 480-pixel monochrome images from the video input and make them available to the host PC.
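Encoder counts from the two drive wheels are what the chair falls back on between visual cue sightings. The sketch below shows standard differential-drive dead reckoning, assuming the chair steers by driving its two wheels at different speeds; the encoder resolution, wheel radius, and track width are hypothetical values, not figures from the article.

```python
import math

# Hypothetical chair geometry and encoder resolution (not given in the article).
COUNTS_PER_REV = 2048   # encoder counts per wheel revolution (assumed)
WHEEL_RADIUS_M = 0.17   # drive-wheel radius in metres (assumed)
TRACK_WIDTH_M = 0.56    # distance between the drive wheels in metres (assumed)


def dead_reckon(pose, d_left_counts, d_right_counts):
    """Advance a (x, y, theta) pose using incremental counts from both wheels."""
    x, y, theta = pose
    metres_per_count = 2.0 * math.pi * WHEEL_RADIUS_M / COUNTS_PER_REV
    d_left = d_left_counts * metres_per_count
    d_right = d_right_counts * metres_per_count
    d_center = 0.5 * (d_left + d_right)           # distance moved by the axle midpoint
    d_theta = (d_right - d_left) / TRACK_WIDTH_M  # change in heading, radians
    # Midpoint integration: move along the average heading over this step.
    heading = theta + 0.5 * d_theta
    return (x + d_center * math.cos(heading),
            y + d_center * math.sin(heading),
            theta + d_theta)


# One full revolution of both wheels moves the chair straight ahead.
print(dead_reckon((0.0, 0.0, 0.0), 2048, 2048))
```

Small errors in wheel radius or track width accumulate with every step, which is why an estimate built only this way drifts.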

The cameras detect a series of landmarks, or cues, fixed to the wall at known locations, from which the system infers the vehicle's position and orientation. Two kinds of cues are used: a white cue, consisting of a black ring on a white background, and a black cue, consisting of a white ring on a black background. When a cue is detected, an observation equation incorporates the visual information to correct the estimate of the vehicle's position.
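The article does not say how the two cue types are told apart once a ring has been found. A simple hypothetical test, sketched below, compares the mean intensity of the ring annulus with that of the surrounding pixels: a white cue has a ring darker than its background, a black cue the reverse. Ring detection itself, and all names and radii here, are assumptions.

```python
import math


def classify_cue(patch, cx, cy, r_inner, r_outer):
    """Label a located ring as a 'white' or 'black' cue.

    patch: 2-D list of grayscale values (0 = black, 255 = white), row-major.
    (cx, cy): ring centre in pixel coordinates; r_inner and r_outer bound the
    ring. Pixels inside or outside the annulus count as background.
    """
    ring, background = [], []
    for row_idx, row in enumerate(patch):
        for col_idx, value in enumerate(row):
            r = math.hypot(col_idx - cx, row_idx - cy)
            if r_inner <= r <= r_outer:
                ring.append(value)
            else:
                background.append(value)
    ring_mean = sum(ring) / len(ring)
    background_mean = sum(background) / len(background)
    # Dark ring on light background -> white cue; light ring on dark -> black cue.
    return "white" if ring_mean < background_mean else "black"


def make_ring(size, cx, cy, r_inner, r_outer, ring_value, background_value):
    """Build a synthetic square patch containing one ring (for illustration)."""
    return [[ring_value if r_inner <= math.hypot(c - cx, r - cy) <= r_outer
             else background_value
             for c in range(size)]
            for r in range(size)]


print(classify_cue(make_ring(21, 10, 10, 4, 7, 0, 255), 10, 10, 4, 7))
```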

Given an estimate of the vehicle's position on the floor, the system can form an observation from the location of the cues in the image. This observation is then used to correct the estimated position of the vehicle. "Without observations, the system is simply dead-reckoning, and its estimate of the position, along with its ability to track a path, deteriorates quickly," says Del Castillo.
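The article does not give the form of the observation equation or the estimator. As an illustration only, the sketch below uses an extended Kalman filter, a common choice for this kind of correction, with a single scalar observation: the bearing of a cue at a known wall position relative to the chair's heading, which could in principle be derived from the cue's image column. The function name, the bearing-only model, and the noise values are all assumptions.

```python
import math


def ekf_bearing_update(pose, P, cue_xy, measured_bearing, bearing_var):
    """One extended-Kalman-filter correction from a single cue bearing.

    pose: (x, y, theta) estimate; P: 3x3 covariance as nested lists.
    cue_xy: known (x, y) of the wall cue.
    measured_bearing: cue angle relative to the chair heading, radians.
    bearing_var: variance of the bearing measurement, radians squared.
    """
    x, y, theta = pose
    dx, dy = cue_xy[0] - x, cue_xy[1] - y
    q = dx * dx + dy * dy
    predicted = math.atan2(dy, dx) - theta
    # Wrap the innovation into (-pi, pi] so angles near +/-pi compare correctly.
    diff = measured_bearing - predicted
    innov = math.atan2(math.sin(diff), math.cos(diff))
    # Jacobian of the predicted bearing with respect to (x, y, theta).
    H = [dy / q, -dx / q, -1.0]
    PHt = [sum(P[i][j] * H[j] for j in range(3)) for i in range(3)]
    S = sum(H[i] * PHt[i] for i in range(3)) + bearing_var  # innovation variance
    K = [PHt[i] / S for i in range(3)]                      # Kalman gain
    new_pose = tuple(pose[i] + K[i] * innov for i in range(3))
    # Covariance update: P <- (I - K H) P
    HP = [sum(H[k] * P[k][j] for k in range(3)) for j in range(3)]
    new_P = [[P[i][j] - K[i] * HP[j] for j in range(3)] for i in range(3)]
    return new_pose, new_P


# Dead reckoning says the heading is 0, but the chair is actually turned 0.1 rad.
P0 = [[0.5, 0.0, 0.0], [0.0, 0.5, 0.0], [0.0, 0.0, 0.1]]
measured = math.atan2(2.0, 5.0) - 0.1
pose, P = ekf_bearing_update((0.0, 0.0, 0.0), P0, (5.0, 2.0), measured, 0.01)
print(pose)
```

A single bearing cannot pin down all three pose components, so the correction spreads across position and heading according to their uncertainties; repeated sightings of several cues would tighten the estimate further.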

For obstacle detection, eight 40-kHz ultrasound sensors are connected to a module that measures, for each sensor, the time elapsed between sound emission and the detected echo; the module in turn connects to the serial port of the PC. "This range sensing was only used for obstacle detection and not as the main means of navigation," points out Del Castillo. In its current implementation, the system uses these ultrasound sensors to determine whether an object that was not present during the teaching stage has been introduced into the environment. If such a disturbance is detected, the wheelchair is programmed to halt.
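An echo time becomes a range through the usual time-of-flight relation, distance = speed of sound × elapsed time / 2, since the pulse travels out and back. The sketch below applies that relation and, as an assumption about how the comparison with the teaching stage might work, halts if any sensor reports something noticeably closer than the range recorded at the same point during teaching. The stored taught ranges, the margin, and the function names are hypothetical.

```python
SPEED_OF_SOUND_M_S = 343.0  # in dry air at about 20 degrees C


def echo_to_range(elapsed_s):
    """Convert a round-trip echo time in seconds to a one-way distance in metres."""
    return SPEED_OF_SOUND_M_S * elapsed_s / 2.0


def should_halt(taught_ranges_m, measured_times_s, margin_m=0.15):
    """Return True if any sensor sees something closer than during teaching.

    taught_ranges_m: per-sensor ranges assumed to have been recorded at this
    point on the path during teaching (hypothetical storage scheme).
    measured_times_s: current per-sensor echo times, in the same sensor order.
    margin_m: tolerance for sensor noise (assumed value).
    """
    for taught, elapsed in zip(taught_ranges_m, measured_times_s):
        if echo_to_range(elapsed) < taught - margin_m:
            return True
    return False


# Eight sensors; during teaching every sensor saw a surface 2 m away.
taught = [2.0] * 8
now = [2 * 2.0 / SPEED_OF_SOUND_M_S] * 8
now[3] = 2 * 0.5 / SPEED_OF_SOUND_M_S  # sensor 3 now sees something at 0.5 m
print(should_halt(taught, now))
```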

Algorithms for the system were developed in C++ using Microsoft Developer Studio 6.0, the Standard Template Library, and the Microsoft Foundation Classes. Voice recognition and interaction are handled by DragonDictate from ScanSoft (Peabody, MA, USA; www.dragonsys.co), which is interfaced with Visual C++ through the DragonXTools speech-recognition development tools.