[Updated 5/9]: Software reportedly at fault in Uber’s fatal self-driving accident

Were the autonomous vehicle’s cameras, sensors, or software at fault?

On March 19, 49-year-old pedestrian Elaine Herzberg was struck by an Uber self-driving car in Tempe, AZ, USA, and later died of her injuries. As expected, the accident has set off a whirlwind of reactions and opinions.

First, the details of the accident, which is believed to be the first self-driving car accident to result in a pedestrian fatality. NBC News reported that the vehicle, a gray 2017 Volvo XC90 SUV, had an operator in the driver’s seat and was traveling at about 40 miles per hour in autonomous mode when it struck Herzberg, who was walking her bicycle across the street, outside the crosswalk, late at night. Tempe police released onboard video footage of the accident.

Chief of Police Sylvia Moir told the San Francisco Chronicle that a preliminary investigation shows Uber is likely not at fault for the crash.

"It's very clear it would have been difficult to avoid this collision in any kind of mode [autonomous or human-driven] based on how she came from the shadows right into the roadway," Moir told the paper, adding that the incident occurred roughly 100 yards from a crosswalk.

"It is dangerous to cross roadways in the evening hour when well-illuminated managed crosswalks are available," she added.

In addition to pausing self-driving car operations in Phoenix, Pittsburgh, San Francisco, and Toronto, Uber issued a statement: "Our hearts go out to the victim's family. We are fully cooperating with local authorities in their investigation of this incident."

Reactions

This is where the speculation, reactions, and strong opinions come into play. Missy Cummings, an engineering professor and director of the Humans and Autonomy Laboratory at Duke University, told CNN that the released video shows the autonomous technology falling short of how it should be designed to perform.

"This video screams to me there are serious problems with their system, and there are serious problems with their safety driver not being able to pay attention, which is to be expected," said Cummings, who has testified on Capitol Hill about autonomous systems. "[The pedestrian] wasn't jumping out of the bushes. She had been making clear progress across multiple lanes of traffic, which should have been in [Uber's] system purview to pick up."

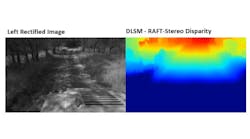

Bryan Reimer, a research scientist in the MIT AgeLab and associate director of the New England University Transportation Center at MIT, also told CNN that the video may represent an "edge case," a rare situation the autonomous system was never trained to handle. He also noted that the accident could have resulted from a failure of a vehicle sensor or of its algorithms, but it is unknown at this time whether that is what happened.

Meanwhile, John Krafcik, CEO of Waymo, told Forbes at the National Automobile Dealers Association conference in Las Vegas on March 24 that the accident could likely have been prevented had it involved one of Waymo's cars.

"What happened in Arizona obviously was a tragedy. It was terrible. You couldn't watch that video and not be impacted by it," he told Forbes. "We're very confident that our car could have handled that situation. We know that for a lot of different reasons. It's what we have designed this system to do in situations just like that," he said, before sharing that view with hundreds of dealers at the conference.

Others, such as Matthew Johnson-Roberson, an engineering professor at the University of Michigan who works with Ford Motor Co. on autonomous vehicle research, are more confident that the problem lies with the autonomous vehicle software.

"The real challenge is you need to distinguish the difference between people and cars and bushes and paper bags and anything else that could be out in the road environment," he told Bloomberg. "The detection algorithms may have failed to detect the person or distinguish her from a bush."

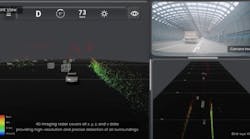

Raj Rajkumar, a professor of electrical and computer engineering at Carnegie Mellon University who works on autonomous vehicles, offered a similar view in the Bloomberg article, noting that LiDAR actually functions better in the dark, because the glare of sunshine can sometimes create interference. For that reason, he said, the LiDAR would certainly have detected an obstacle, and any shortcoming would likely be ascribed to the classification software "because it was an interesting combination of bicycle, bags and a pedestrian standing stationary on the median." Had the software recognized a pedestrian standing close to the road, he added, "it would have at least slammed on the brakes."

As for what this means going forward, IEEE Spectrum raises an excellent point: through this tragic accident, the autonomous vehicle industry can potentially learn from its failures and improve, reducing or removing the likelihood that something like this happens again. But for now, among those who were already skeptical of driverless cars on public roads, especially people outside the industry, the level of trepidation has officially risen.