Researchers from Nanyang Technological University, Singapore (NTU Singapore) have developed a robot that uses 3D vision along with robotic arms and grippers to autonomously assemble a chair from IKEA.

To assemble a STEFAN chair kit from IKEA, Assistant Professor Pham Quang Cuong and his team used two six-axis Denso VS-060 robot arms, two ATI Gamma six-axis force sensors, and two Robotiq 2-Finger 85 parallel grippers. The team designed the robot to mimic how a human assembles furniture: the "arms" can move in six axes and carry parallel grippers to pick up objects. Force sensors mounted on the wrists measure how strongly the "fingers" grip and how hard they push objects into each other.

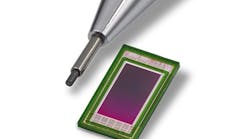

For the robot's "eyes," the team used an Ensenso N35-804-16-BL 3D camera from IDS Imaging Development Systems. The camera features two 1.3 MPixel monochrome global shutter CMOS image sensors, blue (465 nm) illumination, a GigE interface, and Power over Ethernet. This projected-texture stereo vision camera can reach 10 fps in 3D mode and 30 fps in binning mode. It also features a "FlexView projector," which uses a piezoelectric actuator to double the effective resolution of the 3D point cloud, yielding more precise contours and more robust 3D data.
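Binning combines neighboring sensor pixels into one, trading spatial resolution for fewer pixels to read out and match, which is why binning modes typically allow higher frame rates. The minimal Python sketch below illustrates 2x2 binning on a small grayscale image stored as nested lists; the `bin_2x2` function and the choice of summing (rather than averaging) the four pixels are illustrative assumptions, not a description of how the Ensenso camera or its software implements binning.

```python
from typing import List

# A grayscale image as a list of equal-length rows of pixel values.
Image = List[List[int]]


def bin_2x2(image: Image) -> Image:
    """Combine each non-overlapping 2x2 block of pixels into one pixel.

    The four pixel values are summed. An odd trailing row or column is
    dropped, so the output has half the rows and half the columns.
    """
    rows = len(image) - len(image) % 2
    cols = (len(image[0]) - len(image[0]) % 2) if image else 0
    return [
        [
            image[r][c] + image[r][c + 1] + image[r + 1][c] + image[r + 1][c + 1]
            for c in range(0, cols, 2)
        ]
        for r in range(0, rows, 2)
    ]


if __name__ == "__main__":
    frame = [
        [1, 2, 3, 4],
        [5, 6, 7, 8],
        [9, 10, 11, 12],
        [13, 14, 15, 16],
    ]
    print(bin_2x2(frame))  # [[14, 22], [46, 54]]
```

A 4x4 frame becomes 2x2, so a stereo matcher working on the binned images has a quarter as many pixels to process.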

The team wrote algorithms using three open-source libraries to help the robot put the chair together: OpenRAVE for collision-free motion planning, the Point Cloud Library (PCL) for 3D computer vision, and the Robot Operating System (ROS) for integration. The robot began the assembly process by taking 3D images of the parts laid out on the floor to generate a map of their estimated positions. Using the algorithms, it then planned a two-handed motion that is "fast and collision-free," with a motion pathway that had to be integrated with visual and tactile perception, grasping, and execution, according to NTU Singapore.
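To illustrate what "collision-free" planning means, the toy Python sketch below searches for a path on a 2D grid in which `#` cells are obstacles, using breadth-first search. This is a deliberate simplification and not the team's method: OpenRAVE plans motions for complete robot arms in their configuration space, while this hypothetical `plan_path` function only moves a single point around a hypothetical occupancy grid.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]


def plan_path(grid: List[str], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Breadth-first search for a shortest 4-connected path on a 2D grid.

    Cells marked '#' are obstacles. Returns the cells from start to goal
    (inclusive), or None if no collision-free path exists.
    """
    rows, cols = len(grid), len(grid[0])
    came_from: Dict[Cell, Optional[Cell]] = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the parent links to rebuild the path.
            path: List[Cell] = []
            node: Optional[Cell] = cell
            while node is not None:
                path.append(node)
                node = came_from[node]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            in_bounds = 0 <= nr < rows and 0 <= nc < cols
            if in_bounds and grid[nr][nc] != "#" and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


if __name__ == "__main__":
    # Hypothetical workspace: '.' is free space, '#' is an obstacle.
    workspace = [
        "....",
        ".##.",
        "....",
    ]
    print(plan_path(workspace, (0, 0), (2, 3)))
```

Breadth-first search returns a shortest path in grid steps, which keeps the example easy to check; planners for six-axis arms usually rely on other techniques, because a grid over a six-dimensional joint space grows too large to search exhaustively.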

To ensure that the robot arms can grip the pieces tightly and perform tasks such as inserting wooden plugs, the force they exert has to be regulated. The wrist-mounted force sensors helped determine this force, allowing the robot to precisely and consistently detect holes by sliding a wooden plug over the surfaces of the workpieces, and to perform tight insertions, according to the team.
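The hole-detection idea can be sketched as a simple threshold test on the wrist sensor's normal-force reading: while the plug slides over a flat surface, the sensor reads roughly the commanded contact force, and when the plug tip slips into a hole, that reading falls. The Python sketch below assumes exactly that simplified model; the `find_hole` function, the 50% drop threshold, and the sample readings are hypothetical and are not taken from the NTU team's work.

```python
from typing import Optional, Sequence


def find_hole(
    positions: Sequence[float],
    normal_forces: Sequence[float],
    contact_force: float,
    drop_ratio: float = 0.5,
) -> Optional[float]:
    """Return the first slide position where the normal force drops sharply.

    Simplified model (an assumption): on a flat surface the sensor reads
    about `contact_force`; over a hole the surface stops pushing back, so
    a reading below `drop_ratio * contact_force` is treated as a hole.
    Returns None if no such drop occurs along the slide.
    """
    threshold = drop_ratio * contact_force
    for x, f in zip(positions, normal_forces):
        if f < threshold:
            return x
    return None


if __name__ == "__main__":
    # Hypothetical slide positions (mm) and normal-force readings (N).
    xs = [i * 0.5 for i in range(10)]
    fz = [10.1, 9.8, 10.2, 9.9, 10.0, 3.1, 2.8, 9.7, 10.1, 10.0]
    print(find_hole(xs, fz, contact_force=10.0))  # 2.5
```

Comparing against a fraction of the commanded force, rather than a fixed value, keeps the test meaningful if the pressing force is changed.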

It took the robot 11 minutes and 21 seconds to independently plan the motion pathways and 3 seconds to locate the parts. After this, it took 8 minutes and 55 seconds to assemble the chair.

"For a robot, putting together an IKEA chair with such precision is more complex than it looks. The job of assembly, which may come naturally to humans, has to be broken down into different steps, such as identifying where the different chair parts are, the force required to grip the parts, and making sure the robotic arms move without colliding into each other," said Pham. "Through considerable engineering effort, we developed algorithms that will enable the robot to take the necessary steps to assemble the chair on its own."

He continued, "We are looking to integrate more artificial intelligence into this approach to make the robot more autonomous, so it can learn the different steps of assembling a chair through human demonstration or by reading the instruction manual, or even from an image of the assembled product."

Now that the team has achieved its goal of demonstrating the robot’s ability to assemble the IKEA chair, they are working with companies to apply this form of robotic manipulation to a range of industries, according to a press release. This research took three years and was supported by grants from the Ministry of Education, NTU’s innovation and enterprise arm NTUitive, and the Singapore-MIT Alliance for Research & Technology.

View the NTU Singapore press release.

Share your vision-related news by contacting James Carroll, Senior Web Editor, Vision Systems Design
