MIT researchers developing algorithm to improve robot vision

MIT researchers are developing a new robot-vision algorithm, based on a statistical tool called the Bingham distribution, that could improve robots' ability to identify the objects around them by 15%.

The Bingham distribution is a probability distribution over directional data, and according to MIT it offers the greatest advantage in contexts where information is patchy or unreliable. MIT graduate student Jared Glover has been using Bingham distributions to analyze the orientation of ping pong balls in flight, as part of a project to teach robots to play ping pong. When visual information is poor, MIT says, the new algorithm improves on existing ones.
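For readers who want the math: in its standard form, a Bingham distribution over unit vectors v has a density proportional to exp(vᵀ M Z Mᵀ v), where the columns of the orthogonal matrix M are principal directions and the diagonal of Z holds non-positive concentration parameters (0 marks the most likely direction). Because the exponent is quadratic in v, the vectors v and -v are always equally likely, which suits rotations written as unit quaternions, since q and -q describe the same rotation. The minimal NumPy sketch below evaluates only the unnormalized log-density, because the normalizing constant is the hard part to compute; the function name, the (w, x, y, z) quaternion ordering, and the example parameters are illustrative assumptions, not Glover's software.

```python
import numpy as np

def bingham_log_density_unnormalized(v, M, Z):
    """Unnormalized log-density v^T M diag(Z) M^T v of a Bingham distribution.

    v : (d,) vector, projected onto the unit sphere below
        (for rotations, d = 4 and v is a unit quaternion (w, x, y, z))
    M : (d, d) orthogonal matrix whose columns are the principal directions
    Z : (d,) non-positive concentration parameters; 0 marks the mode
    """
    v = np.asarray(v, dtype=float)
    v = v / np.linalg.norm(v)        # directional data live on the unit sphere
    proj = M.T @ v                   # coordinates along each principal direction
    return float(np.sum(Z * proj ** 2))

# Hypothetical example: a distribution concentrated around the identity rotation.
M = np.eye(4)
Z = np.array([0.0, -50.0, -50.0, -50.0])
q = np.array([0.9, 0.1, 0.3, 0.2])

# Antipodal symmetry: q and -q receive the same density.
print(bingham_log_density_unnormalized(q, M, Z),
      bingham_log_density_unnormalized(-q, M, Z))
```

Larger magnitudes in Z make the distribution more sharply peaked around the corresponding mode.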

Alignment is a problem in many areas of robotics, and ambiguity is the central obstacle to good alignment in highly cluttered scenes. The Bingham distribution lets the algorithm extract more information from each ambiguous local feature, says Glover, who has developed a suite of software tools that greatly speed up calculations involving Bingham distributions.
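One way to picture an ambiguous local feature, offered here as an illustrative assumption rather than a description of Glover's method: suppose a feature pins down an object's z-axis but says nothing about how far the object is spun around it. A Bingham distribution can encode that by giving zero concentration to two principal directions, the identity quaternion and the quaternion direction for the z-axis, so every rotation about z is equally likely while other rotations are penalized. The sketch below uses NumPy, a (w, x, y, z) quaternion ordering, and a hypothetical concentration of 100.

```python
import numpy as np

def quat_about_axis(axis, angle):
    """Unit quaternion (w, x, y, z) for a rotation of `angle` radians about `axis`."""
    a = np.asarray(axis, dtype=float)
    a = a / np.linalg.norm(a)
    return np.concatenate([[np.cos(angle / 2)], np.sin(angle / 2) * a])

# Hypothetical feature that fixes the z-axis but not the spin around it.
# Columns of M, in order: w, z, x, y. The first two span every rotation
# about z, so giving both zero concentration makes those rotations a tie.
M = np.eye(4)[:, [0, 3, 1, 2]]
Z = np.array([0.0, 0.0, -100.0, -100.0])

def log_density(q):
    """Unnormalized Bingham log-density q^T M diag(Z) M^T q."""
    proj = M.T @ q
    return float(np.sum(Z * proj ** 2))

for deg in (0, 45, 90, 180):
    spin = quat_about_axis([0, 0, 1], np.radians(deg))
    tilt = quat_about_axis([1, 0, 0], np.radians(deg))
    print(f"{deg:>3} deg: spin about z {log_density(spin):8.2f}, "
          f"tilt about x {log_density(tilt):8.2f}")
```

Every spin about z scores 0, the maximum, while tilting about x by 90 degrees scores about -50 and by 180 degrees about -100, so this feature on its own cannot choose among spins.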

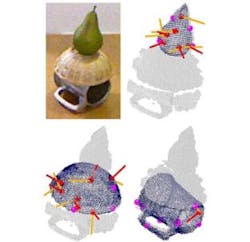

To determine an object's alignment, a geometric model of the object is superimposed over visual data captured by a camera. MIT explains that rotating the model to line up one pair of corresponding points (one on the model, one in the visual data) might move other pairs of points farther away from each other. Using a Microsoft Kinect camera for image capture, Glover showed that the rotation probabilities for any given pair of points can be described as a Bingham distribution. This lets the new algorithm explore possible rotations in a principled way and quickly converge on the one that provides the best fit between points. In other words, the algorithm identifies an object's orientation faster, and from fewer data points, than previous algorithms.
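Bingham parameters can also be packed into one symmetric matrix C = M Z Mᵀ, and multiplying two Bingham densities simply adds their C matrices. That gives a compact way to see how individually ambiguous pieces of evidence can combine into one confident answer: the most likely rotation (the mode) is the eigenvector of the summed C with the largest eigenvalue. The NumPy sketch below fuses two hypothetical "spin" features; it illustrates this general property of Bingham distributions and is not the alignment algorithm from the paper, and `spin_feature`, `fuse_and_find_mode`, and `kappa` are invented names.

```python
import numpy as np

def spin_feature(axis, kappa=100.0):
    """Hypothetical Bingham parameter matrix C = M diag(Z) M^T for a feature
    that fixes orientation only up to a spin about `axis`.

    Rotations about a unit axis a have quaternions (w, x, y, z) in the plane
    spanned by (1, 0, 0, 0) and (0, a); C is 0 on that plane, -kappa off it.
    """
    a = np.asarray(axis, dtype=float)
    a = a / np.linalg.norm(a)
    u1 = np.array([1.0, 0.0, 0.0, 0.0])
    u2 = np.concatenate([[0.0], a])
    P = np.outer(u1, u1) + np.outer(u2, u2)   # projector onto that plane
    return -kappa * (np.eye(4) - P)

def fuse_and_find_mode(features):
    """Multiply Bingham factors by adding their parameter matrices, then
    return the mode: the unit eigenvector of C with the largest eigenvalue."""
    C = sum(features)
    _, eigvecs = np.linalg.eigh(C)            # eigenvalues in ascending order
    q = eigvecs[:, -1]
    return q if q[0] >= 0 else -q             # q and -q are the same rotation

# Two differently oriented, individually ambiguous features.
q = fuse_and_find_mode([spin_feature([0, 0, 1]), spin_feature([1, 0, 0])])
print(q)
```

Either feature alone has two tied top eigenvalues, meaning a whole circle of equally likely rotations; their sum has a single largest eigenvalue, so `q` comes out as the identity quaternion (1, 0, 0, 0), the only rotation consistent with both.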

In experiments using visual data from particularly cluttered scenes, Glover's algorithm identified 73% of the objects in a given scene, compared with 64% for the best existing algorithm. Glover attributes the gain to the algorithm's ability to better determine object orientations. With further research and additional sources of information, he believes its performance can be improved even more.

Jared Glover, a graduate student in MIT's Department of Electrical Engineering and Computer Science, and Sanja Popovic, an MIT alumna now at Google, have written an academic paper on the algorithm, which they will present at the International Conference on Intelligent Robots and Systems.

Share your vision-related news by contacting James Carroll, Senior Web Editor, Vision Systems Design

About the Author

James Carroll

Former VSD Editor James Carroll joined the team in 2013. Carroll covered machine vision and imaging from numerous angles, including application stories, industry news, market updates, and new products. In addition to writing and editing articles, Carroll managed the Innovators Awards program and webcasts.