Packaging Automation: Vision system helps sort, orient and collate mixed product

Consumer packaged goods (CPG) manufacturers must profitably meet, on an order-by-order basis, the increasingly varied packaging needs of retail customers and consumers. People want custom orders and mass customization, but that's not how manufacturers make or package goods. Rather, profit margins depend on production efficiencies gained from larger batches and longer production runs. So, after products are made in a range of sizes and formats and stored in bulk totes, each holding a single type of product, the totes are often broken up into individual packages. These are then sorted, re-oriented, and collated into point-of-purchase displays, variety packs, and custom mixed-product orders.

Until recently, the only alternative to manual sorting was a highly engineered package-feeding conveyor system. Such equipment has a large footprint because it relies on continuous-motion conveyors interspersed with individually controlled, servo-driven sections for singulation.

Sensors above each singulation section view passing product and provide signals used to independently slow down or speed up specific rollers on the conveyor, with the aim of separating products adequately for further processing by pick-and-place robots. While these systems can evenly space and line up product on a conveyor, actual throughput tends to be inconsistent and somewhat random, and manual intervention may be required for products that are incorrectly oriented or flipped upside down.
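The spacing principle behind those servo-driven sections can be illustrated with a small calculation. The sketch below is an idealized model, not taken from any conveyor vendor: it assumes no slip and that a package adopts the downstream speed as soon as its leading edge crosses the transfer point. Under those assumptions, touching packages cross the transfer point every `L / v1` seconds, so a downstream speed `v2` stretches the pitch to `L * v2 / v1`. The function name and parameters are hypothetical.

```python
def downstream_speed(upstream_speed: float, package_length: float, target_gap: float) -> float:
    """Speed (same units as upstream_speed) a downstream singulation section
    needs in order to open `target_gap` between packages that arrive touching.

    Idealized model (assumption): no slip, and each package moves at the
    downstream speed once its leading edge crosses the transfer point.
    """
    if package_length <= 0:
        raise ValueError("package_length must be positive")
    if target_gap < 0:
        raise ValueError("target_gap cannot be negative")
    # Touching packages cross the transfer point every package_length / upstream_speed
    # seconds; at the downstream speed that interval becomes a pitch of
    # package_length * downstream / upstream, and the gap is that pitch minus the length.
    return upstream_speed * (package_length + target_gap) / package_length


# Example: 0.25 m bags arriving nose-to-tail at 0.5 m/s, with a 0.25 m gap wanted.
speed = downstream_speed(0.5, 0.25, 0.25)  # -> 1.0 (m/s)
```

Real singulation sections measure actual gaps with their sensors and correct continuously, which is one reason throughput from such systems varies with how the product happens to arrive.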

Today, the CPG industry has a faster, more compact and more efficient option, thanks to secondary packaging solutions provider Douglas Machine Inc. (Alexandria, MN, USA; www.douglas-machine.com) and Datalogic (Bologna, Italy; www.datalogic.com), one of this year's Bronze-level honorees in the Vision Systems Design Innovators awards. Engineers at both companies recently collaborated to develop a vision-guided robotic pick-and-sort system that also re-orients and collates mixed product packaged in flexible bags. To achieve an upper throughput limit of about 100 picks per minute (ppm), the vision system relies on an MX80 vision processor running the IMPACT software suite with the Pattern Identification (ID) Tool, which recently earned Bronze-level recognition.

Quickly sorting a haphazard pile of mixed product from bulk storage into an orderly stream that automated equipment can readily process into custom orders requires a vision system with fast, robust object recognition.

"In this application, the machine vision system has to be able to identify each product even if the package is partially hidden in a cluttered field of view," says Philip Falcon, program manager at Douglas Machine Inc. "It also has to be able to identify both sides of the product, and tolerate 360° pattern rotations, perspective distortions, different scales and lighting variations because it's very difficult to consistently present flexible packages such as this to the vision system."

According to Adnan Haq, principal engineer at Douglas Machine, the project posed several design challenges. First, touching and overlapping packages tend to be disturbed with each pick, so a new image must be acquired each time the robot picks up a bag.

Also, because the robot's end effector moves in and out of the camera's field of view, the image acquisition window is tight and must be carefully synchronized with the robot's position. Combined with a 0.6-second cycle time requirement, that leaves the vision system less than 100 ms from camera trigger to result output to provide the x-y location of the next pick to the robot controller. Finally, the glossy, flexible packaging complicates image formation: curves, warps, and wrinkles that randomly occur on the glossy package surface cause light reflections, and crumpled or buckled bags can distort the patterns that the vision system uses to identify, locate, and sort product.
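The timing figures fit together with simple arithmetic: 100 picks per minute is 60 s / 100 = 0.6 s per pick, and a vision budget under 100 ms uses roughly one sixth of that. The sketch below makes the split explicit. It assumes, for simplicity, that vision processing and the other steps (robot motion, the acquisition window) run back to back; the article does not say how Douglas Machine overlaps them. The function and key names are hypothetical.

```python
def cycle_budget(picks_per_minute: float, vision_latency_s: float) -> dict:
    """Split one pick cycle into vision time and everything else.

    Simplifying assumption: vision processing and the remaining steps run
    back to back, so their times add up to the cycle time.
    """
    if picks_per_minute <= 0:
        raise ValueError("picks_per_minute must be positive")
    cycle_s = 60.0 / picks_per_minute
    if vision_latency_s >= cycle_s:
        raise ValueError("vision latency alone exceeds the cycle time")
    return {
        "cycle_s": cycle_s,                          # time available per pick
        "vision_share": vision_latency_s / cycle_s,  # fraction consumed by vision
        "other_s": cycle_s - vision_latency_s,       # left for motion, acquisition window, etc.
    }


# 100 ppm with a 100 ms vision budget: cycle_s ~0.6, vision_share ~0.17, other_s ~0.5
budget = cycle_budget(100, 0.100)
```

If vision processing can overlap robot motion (for example, analyzing the next image while the robot places the current bag), the effective budget is larger than this sequential model suggests.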

"All of the robot manufacturers were eager to get involved in this project with their own vision systems until we described the application challenges, and then, no one was certain that they could do it," explains Josh Klein, senior mechanical engineer at Douglas Machine. "But Datalogic stepped up and offered real value in terms of the speed and the robustness of the pattern sort tool, which can quickly identify the flexible packages even if they are morphed, bunched up, flipped and rotated."

During operation, bulk storage bins containing different types of product continuously enter the system upstream of the vision-guided robotic sorting system.

Before each bin is automatically dumped at the infeed, its barcode is scanned to provide barcode data, pick height, and product dimensions to the main machine controller.

The machine controller passes the resulting recipe change down to the vision system and robot controller. Alternatively, recipe changes could be initiated by an operator on an HMI or through some other system input.
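The recipe flow described above (scan a bin, resolve its settings, push them to the vision system and robot controller, or accept a manual override) can be sketched as a lookup plus a fan-out. This is an illustration under assumptions, not Douglas Machine's controller logic: the `Recipe` fields, the barcode-keyed table, and the callback-style subscribers are all hypothetical, and in practice the barcode may encode the data directly rather than serve as a lookup key.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass(frozen=True)
class Recipe:
    # Hypothetical fields, mirroring the data the article says the scan provides.
    product_id: str
    pick_height_mm: float
    length_mm: float
    width_mm: float


def dispatch_recipe(barcode: str,
                    recipes: Dict[str, Recipe],
                    subscribers: List[Callable[[Recipe], None]],
                    operator_override: Optional[Recipe] = None) -> Recipe:
    """Resolve the recipe for a scanned bin and push it to every downstream
    consumer (for example, the vision system and the robot controller)."""
    if operator_override is not None:
        recipe = operator_override  # manual change, e.g. entered on an HMI
    elif barcode in recipes:
        recipe = recipes[barcode]
    else:
        raise KeyError(f"no recipe registered for barcode {barcode!r}")
    for notify in subscribers:
        notify(recipe)
    return recipe


# Usage: two lists stand in for the vision system and the robot controller.
vision_inbox: List[Recipe] = []
robot_inbox: List[Recipe] = []
table = {"0123456789": Recipe("bag-A", 55.0, 180.0, 120.0)}
dispatch_recipe("0123456789", table, [vision_inbox.append, robot_inbox.append])
```

Keeping the controller as the single place that resolves recipes means the vision system and robot always receive the same settings, whichever input triggered the change.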

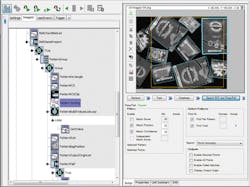

The vision-guided robotic pick-and-sort system uses a Datalogic E151 2-MP monochrome GigE camera mounted above the conveyor between the infeed and a delta-style robot. The camera, robot, and conveyor all reside in a dome designed to block ambient light and to reflect light from two 30-in. white LED bar lights mounted on either side of the conveyor, providing indirect, diffuse, consistent illumination for the vision system. The camera must be calibrated so that the vision system can provide an accurate pick location and package height to the robot controller. Conveyor speed is also varied according to package size to maintain a specific product density flowing through the pick station.
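The article does not describe how the camera is calibrated. As a minimal illustration of the idea, the sketch below fits a planar affine map from pixel coordinates to robot coordinates using three reference points, solved exactly with Cramer's rule. This is a deliberately simple stand-in: it ignores lens distortion, perspective, and package height, all of which a production calibration would typically model, and it would normally use more points with a least-squares fit. All names are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Affine = Tuple[List[float], List[float]]  # (a, b, c) for x and (d, e, f) for y


def _det3(m: Sequence[Sequence[float]]) -> float:
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(a: List[List[float]], rhs: List[float]) -> List[float]:
    """Solve a 3x3 linear system with Cramer's rule."""
    d = _det3(a)
    if abs(d) < 1e-12:
        raise ValueError("calibration points are collinear")
    coeffs = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = rhs[r]  # replace one column with the right-hand side
        coeffs.append(_det3(m) / d)
    return coeffs


def fit_affine(pixel_pts: List[Point], world_pts: List[Point]) -> Affine:
    """Fit x = a*u + b*v + c and y = d*u + e*v + f from three point pairs."""
    a = [[u, v, 1.0] for u, v in pixel_pts]
    return (_solve3(a, [x for x, _ in world_pts]),
            _solve3(a, [y for _, y in world_pts]))


def pixel_to_world(cal: Affine, u: float, v: float) -> Point:
    cx, cy = cal
    return (cx[0] * u + cx[1] * v + cx[2], cy[0] * u + cy[1] * v + cy[2])


# Example: 0.5 mm per pixel, with the image origin at robot coordinates (10, 20) mm.
cal = fit_affine([(0, 0), (100, 0), (0, 100)], [(10, 20), (60, 20), (10, 70)])
```

With this calibration, `pixel_to_world(cal, 50, 50)` maps the image point to approximately (35, 45) mm in robot coordinates.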

"The barcodes at the infeed let us know what's coming so we can change a variable recipe input that adjusts the vision system and conveyor speed for different size products, different height products and products with different air-fill levels as they continuously enter the system," Haq explains. "Based on conveyor speed, the new recipe is triggered when that batch arrives at the robot. It's automatically done on the fly."
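Triggering the new recipe "when that batch arrives at the robot" amounts to delaying each change by the batch's travel time from the infeed to the pick station. The sketch below keeps pending changes in a priority queue ordered by expected arrival time. It assumes a constant belt speed while each batch is in transit and a known infeed-to-pick distance; a real tracker would more likely count conveyor encoder pulses so that speed changes are handled correctly. The class and method names are hypothetical.

```python
import heapq
from typing import List, Optional, Tuple


class RecipeScheduler:
    """Delays each recipe change until its batch reaches the pick station.

    Assumption: belt speed stays constant while a batch is in transit.
    """

    def __init__(self, infeed_to_pick_m: float) -> None:
        self.distance_m = infeed_to_pick_m
        self._pending: List[Tuple[float, int, str]] = []  # (arrival time, sequence, recipe)
        self._seq = 0  # tie-breaker so equal arrival times keep dump order
        self.active: Optional[str] = None

    def batch_dumped(self, t_s: float, recipe_id: str, belt_speed_m_s: float) -> float:
        """Register a batch dumped at time t_s; returns its expected arrival time."""
        arrival = t_s + self.distance_m / belt_speed_m_s
        heapq.heappush(self._pending, (arrival, self._seq, recipe_id))
        self._seq += 1
        return arrival

    def update(self, now_s: float) -> Optional[str]:
        """Apply every recipe whose batch has arrived; return the active recipe."""
        while self._pending and self._pending[0][0] <= now_s:
            _, _, self.active = heapq.heappop(self._pending)
        return self.active


# Usage: a 3 m run from infeed to pick station.
sched = RecipeScheduler(3.0)
sched.batch_dumped(0.0, "bag-A", 0.5)   # arrives at t = 6.0 s
sched.batch_dumped(10.0, "bag-B", 1.0)  # arrives at t = 13.0 s
```

Calling `sched.update(now)` once per robot cycle then switches the vision and conveyor settings at the moment each batch reaches the pick station, with no operator involvement.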

Before a product can be sorted, the Pattern ID tool must be trained on the specific set of patterns it will recognize in images captured during operation. Because each package in this application has two sides, training requires two images, one of the front face and one of the back face. Creating a model from each image allows the system to identify a product automatically, regardless of which side faces the camera at runtime. A region of interest (ROI) is defined on the current image, and this region is searched for the trained patterns. The tool is then linked to a pattern database containing two models and an ROI for every product to be sorted.
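The database organization described above (two models and one ROI per product) can be pictured as a small data structure in which a match on either model identifies the same product, and the matched model also reveals which side is facing up. This sketch is only a conceptual model; it does not reflect the internal format of Datalogic's Pattern ID tool, and all class, field, and handle names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

ROI = Tuple[int, int, int, int]  # x, y, width, height in pixels


@dataclass(frozen=True)
class ProductEntry:
    product_id: str
    front_model: str  # handle of the model trained on the front-face image
    back_model: str   # handle of the model trained on the back-face image
    roi: ROI          # region searched for this product's patterns


class PatternDatabase:
    """Two models plus an ROI per product; a match on either model
    identifies the same product, and the model tells which side is up."""

    def __init__(self) -> None:
        self._entries: Dict[str, ProductEntry] = {}
        self._model_index: Dict[str, Tuple[str, str]] = {}

    def add(self, entry: ProductEntry) -> None:
        if entry.front_model == entry.back_model:
            raise ValueError("front and back models must be distinct")
        for model in (entry.front_model, entry.back_model):
            if model in self._model_index:
                raise ValueError(f"model {model!r} is already registered")
        self._entries[entry.product_id] = entry
        self._model_index[entry.front_model] = (entry.product_id, "front")
        self._model_index[entry.back_model] = (entry.product_id, "back")

    def identify(self, matched_model: str) -> Tuple[str, str]:
        """Map a matched model handle to (product_id, visible side)."""
        return self._model_index[matched_model]

    def roi_for(self, product_id: str) -> ROI:
        return self._entries[product_id].roi


db = PatternDatabase()
db.add(ProductEntry("bag-A", "bag-A/front", "bag-A/back", (100, 50, 800, 600)))
```

Knowing which side matched is what lets the downstream robot flip or re-orient a bag before collation.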

"The recognition is robust and very high speed," notes Klein. "Recognition time depends mainly on the image resolution and is independent of the size of the pattern database, which is great if you have a lot of different products to identify." In operation, the ROI of each inspected image is compared against the trained database to find one or more matches with the stored patterns. To optimize processing speed and maximize throughput, engineers developed function blocks and sequenced them within the IMPACT software suite's integrated development environment (IDE).

"The Datalogic IMPACT software suite's IDE had all the tools we needed to optimize our application and customize our machine," says Haq. "We just chose how we wanted to implement them, then applied tools such as ROI, blob, and Pattern ID and put them together in building blocks to run the program and sequence the image analysis operations to maximize throughput."
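The building-block approach Haq describes can be illustrated with a toy pipeline in which each block takes a shared context, adds its result, and passes it on. The sketch below is not the IMPACT API; the block names, the context keys, and the "pick the largest blob" heuristic (on the assumption that the largest visible area is the least-occluded bag) are all hypothetical. The blob step is a standard threshold followed by a 4-connected flood fill. A Pattern ID step would sit between blob analysis and pick selection; it is omitted here because touching bags merge into a single blob under simple thresholding, which is exactly why pattern recognition is needed in this application.

```python
from collections import deque
from typing import Callable, List

Image = List[List[int]]         # grayscale image, row-major
Block = Callable[[dict], dict]  # a "function block": context in, context out


def roi_block(x: int, y: int, w: int, h: int) -> Block:
    def run(ctx: dict) -> dict:
        ctx["roi_origin"] = (x, y)
        ctx["roi"] = [row[x:x + w] for row in ctx["image"][y:y + h]]
        return ctx
    return run


def blob_block(threshold: int, min_area: int) -> Block:
    def run(ctx: dict) -> dict:
        img = ctx["roi"]
        rows = len(img)
        cols = len(img[0]) if rows else 0
        ox, oy = ctx["roi_origin"]
        seen = [[False] * cols for _ in range(rows)]
        blobs = []
        for r in range(rows):
            for c in range(cols):
                if seen[r][c] or img[r][c] < threshold:
                    continue
                # Breadth-first flood fill over 4-connected pixels above threshold.
                queue, pixels = deque([(r, c)]), []
                seen[r][c] = True
                while queue:
                    pr, pc = queue.popleft()
                    pixels.append((pr, pc))
                    for nr, nc in ((pr + 1, pc), (pr - 1, pc), (pr, pc + 1), (pr, pc - 1)):
                        if (0 <= nr < rows and 0 <= nc < cols
                                and not seen[nr][nc] and img[nr][nc] >= threshold):
                            seen[nr][nc] = True
                            queue.append((nr, nc))
                if len(pixels) >= min_area:
                    # Centroid in full-image pixel coordinates (x, y).
                    cx = sum(p[1] for p in pixels) / len(pixels) + ox
                    cy = sum(p[0] for p in pixels) / len(pixels) + oy
                    blobs.append({"area": len(pixels), "centroid": (cx, cy)})
        ctx["blobs"] = blobs
        return ctx
    return run


def pick_block(ctx: dict) -> dict:
    # Hypothetical heuristic: pick the largest blob, assumed to be the least occluded.
    blobs = ctx["blobs"]
    ctx["pick"] = max(blobs, key=lambda b: b["area"])["centroid"] if blobs else None
    return ctx


def run_sequence(blocks: List[Block], image: Image) -> dict:
    ctx = {"image": image}
    for block in blocks:
        ctx = block(ctx)
    return ctx


# Usage: a 6x6 image with one 2x2 bright bag and one bright speck (filtered by min_area).
image = [[0] * 6 for _ in range(6)]
for r in (1, 2):
    for c in (1, 2):
        image[r][c] = 255
image[4][4] = 255
result = run_sequence([roi_block(0, 0, 6, 6), blob_block(128, 2), pick_block], image)
```

Because each block only reads and writes the shared context, blocks can be reordered, swapped, or skipped per recipe, which mirrors the flexibility the Douglas Machine engineers describe.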