October 2018 snapshots: Artificial intelligence in people detection, machine learning thermal dataset, vision-guided robots

Artificial intelligence software suite enables advanced people detection capabilities

Altia Systems (Cupertino, CA, USA; www.panacast.com) has launched Intelligent Vision 2.0, a suite of artificial intelligence software products for its PanaCast 2 panoramic camera. According to the company, the suite is designed to automate workflows by accurately counting people and to generate "big data" for data science applications, using what Altia calls industry-first intelligence based on Intel Architecture.

Intelligent Vision 2.0 is designed for the PanaCast 2, a panoramic camera with three separate imagers. To combine images from all three imagers into panoramic video, the PanaCast uses a patent-pending Dynamic Stitching technology that analyzes the regions where the images overlap. After a geometric correction algorithm corrects sharp angles, the stitching algorithm builds an energy cost function over the entire overlap region and computes stitching paths of least energy, which typically lie in the background, according to Altia Systems.
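Altia has not published how Dynamic Stitching works beyond this description, but the general idea of a least-energy stitching path can be illustrated with the classic dynamic-programming seam search. The sketch below is a hypothetical illustration, not Altia's patent-pending algorithm: it assumes two already geometrically corrected grayscale images of the same overlap region, uses squared pixel difference as the energy (an assumption), and finds a top-to-bottom seam that shifts by at most one column per row.

```python
from typing import List

Image = List[List[float]]  # grayscale overlap region, row-major


def overlap_energy(left: Image, right: Image) -> Image:
    """Assumed energy: squared difference where the two imagers overlap.
    Low values mark pixels where both images agree, e.g. static background."""
    return [[(a - b) ** 2 for a, b in zip(rl, rr)] for rl, rr in zip(left, right)]


def min_energy_seam(energy: Image) -> List[int]:
    """Column index per row of the top-to-bottom seam with least total energy."""
    h, w = len(energy), len(energy[0])
    cost = [row[:] for row in energy]
    back = [[0] * w for _ in range(h)]
    for y in range(1, h):
        for x in range(w):
            # Cheapest predecessor among the (up to) three neighbours in the row above.
            k = min(range(max(x - 1, 0), min(x + 2, w)), key=lambda j: cost[y - 1][j])
            back[y][x] = k
            cost[y][x] += cost[y - 1][k]
    # Start from the cheapest end point and walk the back-pointers upward.
    seam = [0] * h
    seam[-1] = min(range(w), key=lambda j: cost[-1][j])
    for y in range(h - 1, 0, -1):
        seam[y - 1] = back[y][seam[y]]
    return seam


def stitch_overlap(left: Image, right: Image) -> Image:
    """Left-imager pixels before the seam, right-imager pixels from the seam onward."""
    seam = min_energy_seam(overlap_energy(left, right))
    return [rl[:s] + rr[s:] for rl, rr, s in zip(left, right, seam)]


# The two images agree only in the last column, so the seam runs down it.
left = [[1, 2, 3, 4]] * 3
right = [[9, 9, 9, 4]] * 3
print(min_energy_seam(overlap_energy(left, right)))
print(stitch_overlap(left, right))
```

Because the seam follows the cheapest path row by row, it avoids cutting through regions where the two views disagree, such as a person close to the camera.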

The computation is performed in real time, frame by frame, to create the panoramic video. Each imager contributes a 1600 x 1200-pixel frame, and joining the frames from all three imagers creates a 4800 x 1200 image. Each imager in the PanaCast 2 is a 3 MPixel CMOS image sensor capable of up to 30 fps in YUV422 and MJPEG video formats.
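To put these figures in perspective, the arithmetic below estimates the raw data rate of an uncompressed stitched stream. It assumes 8-bit YUV422 (2 bytes per pixel) and that the stitched 4800 x 1200 frame is also produced at 30 fps; the article does not state the camera's actual output rate, and MJPEG output would be much smaller.

```python
IMAGERS = 3
FRAME_W, FRAME_H = 1600, 1200   # per-imager frame size (from the text)
FPS = 30                        # assumed rate of the stitched stream
BYTES_PER_PIXEL_YUV422 = 2      # 8-bit YUV 4:2:2 averages 16 bits per pixel

pano_w, pano_h = IMAGERS * FRAME_W, FRAME_H
bytes_per_frame = pano_w * pano_h * BYTES_PER_PIXEL_YUV422
bytes_per_second = bytes_per_frame * FPS

print(f"panorama: {pano_w} x {pano_h}")
print(f"uncompressed YUV422: {bytes_per_second / 1e6:.1f} MB/s")
```

This naive concatenation ignores the pixels discarded in the overlap regions, which mirrors the 4800-pixel width quoted in the article.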

Intelligent Vision 2.0, developed in collaboration with Intel (Santa Clara, CA, USA; www.intel.com), was trained on a dataset containing more than 150,000 people and can detect and count more than 100 people up to 40 ft. from the camera. According to Altia Systems, the goal is to automate workflows and help businesses manage resources; for example, a company might lease less real estate after its camera shows that 35% of its current office space is unused.

The Intelligent Vision 2.0 product suite includes built-in people detection, which can detect people up to 12 ft. away and simultaneously count up to 40 people, as well as advanced people detection. Developed in cooperation with Intel, the advanced feature uses a convolutional neural network (CNN) architecture to detect people from all directions, including partially visible heads, and extends the range to 40 ft. in crowded scenarios. The suite also includes Intelligent Zoom 2.0, which uses AI to automatically zoom the video frame in and out so that all meeting participants are included.
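Neither Altia nor Intel describes how CNN detections become a head count, but a common generic step in detector pipelines is to discard low-confidence boxes and merge duplicate boxes on the same person with greedy non-maximum suppression (NMS) before counting. The sketch below shows that generic technique only; the thresholds and box values are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def count_people(boxes: List[Box], scores: List[float],
                 score_thr: float = 0.5, iou_thr: float = 0.45) -> int:
    """Greedy NMS, then count surviving boxes.

    score_thr and iou_thr are illustrative values, not Altia/Intel settings.
    """
    # Drop weak detections; visit the rest from most to least confident.
    order = sorted((i for i, s in enumerate(scores) if s >= score_thr),
                   key=lambda i: scores[i], reverse=True)
    kept: List[int] = []
    for i in order:
        # Keep a box only if it does not heavily overlap an already-kept box.
        if all(iou(boxes[i], boxes[j]) < iou_thr for j in kept):
            kept.append(i)
    return len(kept)


# Two boxes on the same person, one distinct person, one weak detection.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60), (100, 100, 110, 110)]
scores = [0.9, 0.8, 0.7, 0.3]
print(count_people(boxes, scores))  # 2
```

Counting raw detections instead would overcount whenever the detector fires several times on one partially visible head.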

Vision-guided autonomous underwater vehicle discovers holy grail of shipwrecks

Researchers from the Woods Hole Oceanographic Institution (WHOI; Woods Hole, MA, USA; www.whoi.edu) were recently granted permission to release details of their discovery of the San José shipwreck, which was located using a vision-guided autonomous underwater vehicle (AUV).

The San José was a 62-gun, three-mast Spanish galleon that sank during a 1708 battle with British ships in the War of the Spanish Succession. Often referred to as the "holy grail of shipwrecks," the ship sank with treasure believed to be worth as much as $17 billion (http://bit.ly/VSD-SHP). The ship was discovered on November 27, 2015, off the coast of Cartagena, Colombia, but the WHOI team needed authorization from Maritime Archaeology Consultants Switzerland AG (MAC; www.marinearchaeology.ch) and the Colombian government to release details, which it recently received.

Key to the discovery was working with Woods Hole Oceanographic Institution, which, according to MAC's Chief Project Archaeologist Roger Dooley, "has an extensive and recognized expertise in deep water exploration."

After an initial search in June with the REMUS 6000 AUV came up empty, the WHOI team returned in November. It was then, according to WHOI engineer and expedition leader Mike Purcell, that the team got the first indications of the find from side-scan sonar images of the wreck.

"From those images, we could see strong sonar signal returns, so we sent REMUS back down for a closer look to collect camera images," he said.

To confirm the wreck's identity, REMUS descended to just 30 ft. above it and captured photos of one of the ship's key distinguishing features: its cannons. Subsequent missions at lower altitudes, according to WHOI, revealed engraved dolphins on the bronze cannons.

"The wreck was partially sediment-covered, but with the camera images from the lower altitude missions, we were able to see new details in the wreckage and the resolution was good enough to make out the decorative carving on the cannons," said Purcell. "MAC's lead marine archaeologist, Roger Dooley, interpreted the images and confirmed that the San José had finally been found."

Capable of long-duration missions (up to 22 hours) over wide areas, the REMUS 6000 can operate at depths from 82 ft. (25 m) to 6,000 m (3.73 miles) and measures 12.6 ft. (3.84 m) in length. Its survey instruments include a custom digital camera with a strobe light, dual-frequency side-scan sonar, multibeam profiling sonar, sub-bottom profiling sonar, and a conductivity, temperature, and depth (CTD) sensor.

Once data from a survey is analyzed and smaller fields of interest are identified, the REMUS 6000 robot can gather more detailed, up-close images using high-resolution imaging systems located on the bottom of the vehicle.

High-speed vision system helps accelerate human cell analysis

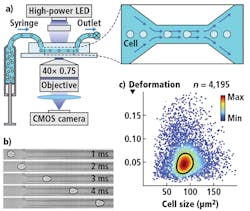

Scientists at the Dresden University of Technology (Dresden, Germany; http://bit.ly/VSD-TU) have developed a system based on a high-speed camera and microscope that accelerates human cell analysis by a factor of 10,000.

To analyze cell properties within minutes, the scientists developed the AcCellerator system, which is based on Real-Time Deformability Cytometry. In the setup, a stream of cells, deformed by the flow, passes through a microfluidic channel structure at a speed of 10 cm/s and through the field of view of a microscope with 400X magnification.

The system is built around an Axio Observer microscope from Zeiss (Oberkochen, Germany; www.zeiss.com) and also uses a syringe pump with syringe modules and a sample holder. The AcCellerator additionally includes a computer, a frame grabber, and a camera that captures images of each individual cell.

Connected directly to the microscope is an EoSens CL Base camera from Mikrotron (Unterschleissheim, Germany; https://mikrotron.de/en.html). The camera features a 1.3 MPixel CMOS image sensor with 14 µm pixels and, at reduced resolution, can capture up to 4,000 fps in this setup. All images are transferred to the computer over a Camera Link interface, and a custom program based on LabVIEW software from National Instruments (Austin, TX, USA; www.ni.com) analyzes each cell's deformation. The camera also controls the 1 µs LED light pulse emitted for each image acquisition.
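The article does not describe the LabVIEW program itself. In the Real-Time Deformability Cytometry literature, deformation is commonly defined as 1 minus circularity, where circularity c = 2√(πA)/P for a contour of area A and perimeter P, so a perfect circle scores 0. The Python sketch below computes that quantity from a cell contour given as polygon vertices; contour extraction from the camera image is assumed to have happened already, and the function names are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def polygon_area(pts: List[Point]) -> float:
    """Area of a closed polygon via the shoelace formula."""
    s = 0.0
    for (x1, y1), (x2, y2) in zip(pts, pts[1:] + pts[:1]):
        s += x1 * y2 - x2 * y1
    return abs(s) / 2.0


def polygon_perimeter(pts: List[Point]) -> float:
    """Sum of edge lengths, including the closing edge."""
    return sum(math.dist(p, q) for p, q in zip(pts, pts[1:] + pts[:1]))


def deformation(contour: List[Point]) -> float:
    """Deformation = 1 - circularity, with circularity = 2*sqrt(pi*A)/P."""
    area = polygon_area(contour)
    circularity = 2.0 * math.sqrt(math.pi * area) / polygon_perimeter(contour)
    return 1.0 - circularity


# A near-circular contour scores close to 0; a square scores about 0.114.
circle = [(10 * math.cos(2 * math.pi * k / 360), 10 * math.sin(2 * math.pi * k / 360))
          for k in range(360)]
square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
print(round(deformation(circle), 4), round(deformation(square), 3))
```

Because the measure is scale-free, a cell stretched more strongly by the channel flow scores higher regardless of its size.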

Analyzing a single image, according to the researchers, takes less than 250 μs.
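The 250 µs figure matches the frame period at 4,000 fps, which suggests per-image analysis can keep pace with acquisition. The back-of-the-envelope arithmetic below uses only numbers from this article (4,000 fps, 10 cm/s, and the 1 µs LED pulse) to show that, and also how far a cell travels between frames and during a single light pulse. It assumes images are analyzed one at a time as they arrive, which the article does not state.

```python
FPS = 4_000                  # frames per second at reduced resolution (from the text)
FLOW_SPEED_M_S = 0.10        # 10 cm/s flow speed (from the text)
LED_PULSE_S = 1e-6           # 1 us LED pulse per acquisition (from the text)
ANALYSIS_BUDGET_S = 250e-6   # stated upper bound on per-image analysis time

frame_period_s = 1.0 / FPS
travel_per_frame_um = FLOW_SPEED_M_S * frame_period_s * 1e6
travel_during_pulse_um = FLOW_SPEED_M_S * LED_PULSE_S * 1e6

print(f"frame period: {frame_period_s * 1e6:.0f} us")
print(f"cell travel between frames: {travel_per_frame_um:.0f} um")
print(f"cell travel during LED pulse: {travel_during_pulse_um:.1f} um")
print("analysis keeps pace with acquisition:", ANALYSIS_BUDGET_S <= frame_period_s)
```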

"This enables us to measure the mechanical properties of several hundred cells per second. In one minute, this permits us to carry out analyses that would take a week in technologies we used before," says Dr. Oliver Otto, CEO of Zellmechanik Dresden (Dresden, Germany; https://www.zellmechanik.com/Home.html). Within just 15 minutes, all blood cell types can be precisely characterized.

FLIR releases machine learning thermal dataset for advanced driver assistance systems

FLIR Systems, Inc. (Wilsonville, OR, USA; www.flir.com) has announced the release of its open-source machine learning thermal dataset for advanced driver assistance systems (ADAS) and self-driving vehicle researchers, developers, and auto manufacturers.

Featuring a compilation of more than 10,000 annotated thermal images of daytime and nighttime scenarios, the dataset is designed to accelerate testing of thermal sensors on self-driving systems, according to FLIR. The dataset includes annotations for cars, other vehicles, people, bicycles, and dogs, and enables developers to begin testing and evolving convolutional neural networks (CNNs) with the FLIR Automotive Development Kit (ADK).
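A typical first step with an annotated dataset like this is checking how many examples each class has, since rare classes (such as dogs) may need extra attention when training a CNN. The sketch below assumes the annotations are stored as COCO-style JSON (a `categories` list plus an `annotations` list referencing category IDs); that layout, the file name, and the sample values are assumptions for illustration, not a description of FLIR's release.

```python
import json
from collections import Counter


def count_by_category(coco: dict) -> Counter:
    """Tally annotations per class name in a COCO-style annotation dict."""
    names = {c["id"]: c["name"] for c in coco["categories"]}
    return Counter(names[a["category_id"]] for a in coco["annotations"])


# Hypothetical two-class sample in the assumed COCO-style layout.
sample = json.loads("""
{
  "categories": [{"id": 1, "name": "person"}, {"id": 3, "name": "car"}],
  "images": [{"id": 0, "file_name": "thermal_0001.jpeg"}],
  "annotations": [
    {"id": 1, "image_id": 0, "category_id": 1, "bbox": [10, 20, 30, 60]},
    {"id": 2, "image_id": 0, "category_id": 3, "bbox": [100, 80, 120, 60]},
    {"id": 3, "image_id": 0, "category_id": 3, "bbox": [300, 90, 90, 50]}
  ]
}
""")
print(count_by_category(sample))
```

For a real file in that format, `json.load(open(path))` would replace the inline sample, where `path` points to the annotation file.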

FLIR's ADK includes the company's Boson thermal camera core in an enclosure, a mounting bracket, and a 2 m USB cable. The Boson is available with either a 320 x 256 or a 640 x 512 uncooled VOx microbolometer with a 12 µm pixel pitch and spectral sensitivity from 8 to 14 µm. The IP67-rated ADK provides a detection range of more than 100 m and a selectable field of view (24°, 34°, or 50°), offering flexibility between range and situational awareness.
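The trade-off between range and awareness comes down to how many pixels land on a distant object. The sketch below estimates that for each Boson resolution and FOV option, assuming the quoted FOV values are horizontal and that angles are spread evenly across the detector (IFOV = FOV / pixel count, ignoring lens distortion). The 0.5 m target width and 100 m distance are hypothetical inputs.

```python
import math


def pixels_across(target_width_m: float, distance_m: float,
                  hfov_deg: float, h_pixels: int) -> float:
    """Approximate number of pixels spanning a target of the given width.

    Assumes a horizontal FOV and uniform angular sampling (no lens distortion).
    """
    ifov_deg = hfov_deg / h_pixels
    subtended_deg = math.degrees(2 * math.atan(target_width_m / (2 * distance_m)))
    return subtended_deg / ifov_deg


# Hypothetical 0.5 m-wide target at 100 m, for each resolution and FOV option.
for h_pixels in (320, 640):
    for hfov in (24, 34, 50):
        px = pixels_across(0.5, 100, hfov, h_pixels)
        print(f"{h_pixels} px, {hfov} deg FOV: {px:.1f} px on target")
```

A narrower FOV or the higher-resolution core puts more pixels on the same object, while a wider FOV covers more of the scene with fewer pixels per object.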

According to FLIR, the new dataset will enable users to quickly evaluate thermal sensors with next-generation algorithms.

“This free, open-source dataset is a subset of what FLIR has to offer, and it provides a critical opportunity for the automotive community to expand the dataset to make ADAS and self-driving cars more capable in various conditions,” said Frank Pennisi, President of the Industrial Business at FLIR. “Furthermore, recent high-profile autonomous-driving related accidents show a clear need for affordable, intelligent thermal sensors. With the potential for millions of autonomous-enabled vehicles, FLIR thermal sensor costs will decrease significantly, which will encourage wide-scale adoption and ultimately enable safer autonomous vehicles.”

Vision-guided drone from Intel aids in restoring the Great Wall of China

As part of a collaboration with the China Foundation for Cultural Heritage Conservation (CFCHC), Intel (Santa Clara, CA, USA; www.intel.com) will deploy vision-guided drones to help restore the Jiankou section of the Great Wall of China.

In its more than 450 years of existence, this section of the wall has been affected by natural erosion and human destruction. Portions of the wall that are popular with tourists have been renovated over time, but the 12-mile Jiankou section is one of the most dangerous to access and has not been preserved for hundreds of years.

An Intel Falcon 8+ drone will be used to inspect and survey this area and, according to Intel, will capture tens of thousands of high-resolution images of areas too difficult or dangerous for humans to access. The drone supports several sensor payload options. Intel did not specify which payload it will use, but one option is the company's Inspection Payload.

This payload includes two cameras: a Panasonic (Osaka, Japan; www.panasonic.com) Lumix RGB camera and a Tau 2 infrared camera from FLIR (Wilsonville, OR, USA; www.flir.com). The Panasonic camera features a 12.1 MPixel CMOS sensor and 30x zoom. The FLIR Tau 2 640 features a 640 x 512 uncooled VOx microbolometer detector with a 17 µm pixel size and a spectral band of 7.5 to 13.5 µm.
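How much detail a thermal image can show on the wall depends on the pixel size, the lens, and the drone's distance from the masonry. Under a simple pinhole-camera model, one pixel covers pitch × distance / focal length on a surface facing the camera. The sketch below applies that to the Tau 2's 17 µm pixels; the 19 mm focal length and the stand-off distances are hypothetical, since the article names neither.

```python
def pixel_footprint_m(pixel_pitch_m: float, distance_m: float,
                      focal_length_m: float) -> float:
    """Pinhole-camera footprint of one pixel on a surface facing the camera."""
    return pixel_pitch_m * distance_m / focal_length_m


TAU2_PITCH_M = 17e-6      # 17 um pixel size of the Tau 2 640 (from the text)
ASSUMED_FOCAL_M = 19e-3   # hypothetical lens; the article does not name one

for distance_m in (5, 10, 20):  # hypothetical stand-off distances
    mm = pixel_footprint_m(TAU2_PITCH_M, distance_m, ASSUMED_FOCAL_M) * 1000
    print(f"{distance_m} m stand-off: ~{mm:.1f} mm per thermal pixel")
```

The footprint grows linearly with distance, so flying closer (or using a longer lens) resolves finer cracks at the cost of more images to cover the same stretch of wall.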

Captured images are processed into a 3D model, which gives preservationists a digital replica of the wall. Surveys of the Great Wall have traditionally been a long, manual process. Intel's technology enables the same inspections to be completed in three days, producing more accurate data that helps conservationists develop an informed and effective repair schedule.

Using the images, the research teams will apply artificial intelligence technologies from Intel to help determine the types of repairs needed and to estimate the time, labor, and material costs of the repairs.