Deep learning promises automotive inspection improvements

Combining traditional machine vision technology with deep learning algorithms helps fill gaps in manufacturing inspection

Nuria Garrido López

Quality control and camera-based machine vision inspection have a long tradition at the Chassis & Safety Division and Vehicle Dynamics (VED) business unit of Continental (Hanover, Germany: www.continental-automotive.com). For example, electronic brake systems, the most important element of active safety in a car, rely heavily on 100% in-line inspection as an essential part of manufacturing the complex electro-mechanical systems that are supplied to OEMs in an increasing number of product variations.

Miniaturization, higher levels of integration, and functional enrichment make the production process ever more demanding. The need to reduce vehicle mass to achieve better fuel efficiency while increasing system performance results in dense integration of electronics, hydraulics and mechanics in electronic brake systems. For example, the MK 100 unit (Figure 1) requires visual inspection of tiny valve port orifices, O-rings, and the inside of the valve, which challenge the lenses, lighting and feature extraction of machine vision systems (Figure 2).

Figure 1: Inspection of the MK 100 electronic brake system challenges the capabilities of traditional rules-based machine vision systems.

Simple inspection tasks such as presence/absence, correct positioning or assembly of parts, and dimensional correctness are easily quantified. However, not all parameters used to determine whether a product is OK or NOK (not OK) are quantifiable. Some potential NOK criteria vary strongly and defy quantification by numbers, shape, or the like.

Figure 2: Four images of typical industrial optical inspections captured by the camera are shown here.

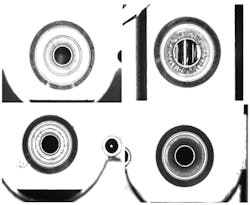

Another challenge is that many components come from different suppliers yet need to be handled on the same production line with the same machine vision set-up. Often, supplier-specific component surface machining leaves different patterns, changing the background and challenging feature extraction (Figure 3).

Figure 3: Four images of OK parts with greatly varying appearance are accepted by the system.

New inspection tasks on the horizon

Still, probably 95% of the inspection tasks on worldwide VED lines can be solved with the business unit’s advanced standardized 2D machine vision systems. Some require 3D systems, but increasingly, others require a completely new answer. Typical rules-based machine vision, where one knows what to look for and the system can extract features from suitable images and compare them to reference parameters, makes part classification as OK or NOK straightforward. But what if the defects are not as easily quantified?

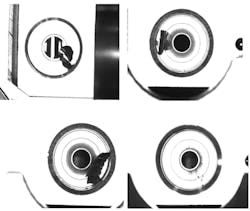

Such new challenges may require revolution instead of evolution. Identifying varying types of contamination on customer parts is one example of a new challenge. Contamination in the form of grease, dust, or chips varies in size, shape, appearance, and location, which makes quantification and inspection extremely challenging (Figure 4).

Figure 4: Four images of NOK parts with different types of contamination or defect are rejected by the system.

Combining the strengths of man and machine

That is why the VED machine vision team is looking into the potential of deep learning and different types of algorithms for machine vision. In a way, the first tests of deep learning-based inspection seek to combine the speed, repeatability, and exactness of traditional machine vision with capabilities typically attributed only to the human mind, in effect giving the system artificial intelligence. Just as an experienced production worker recognizes contamination in whatever form it appears, a well-trained neural network should also be able to identify contamination and may even be able to classify it. The contamination on the thread of a hydraulic component, for example, needs to be classified as critical or not critical depending on the material and size.

Continental’s VED business unit is evaluating, among others, a standard neural system from an expert supplier. This system has a proven architecture and the necessary library of tools for machine vision applications. The neural network requires a supervised learning phase. During this phase, many part images are fed to the system, and the layers of the deep neural network extract the features from these images that distinguish OK from NOK. Much as human judgment is shaped by experience, the neural system’s classification performance can be biased by the selection of OK and NOK images used for training.
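The influence of image selection can be made concrete with a simple audit of the training set before the learning phase starts. The following is a minimal sketch, not part of the system under evaluation: the record layout (`supplier`, `label`), the function name `audit_training_set`, and the sample records are all assumptions made up for illustration.

```python
from collections import Counter

def audit_training_set(records, min_per_group=2):
    """Count training images per (supplier, label) and flag sparse groups.

    `records` is an iterable of dicts with the hypothetical keys
    'supplier' and 'label' ('OK' or 'NOK').
    """
    counts = Counter((r["supplier"], r["label"]) for r in records)
    suppliers = sorted({supplier for supplier, _ in counts})
    # A group is "sparse" if it has fewer than min_per_group images,
    # including groups that are missing entirely (Counter returns 0).
    sparse = [
        (supplier, label)
        for supplier in suppliers
        for label in ("OK", "NOK")
        if counts[(supplier, label)] < min_per_group
    ]
    return counts, sparse

# Illustrative, made-up records (not production data).
records = [
    {"supplier": "A", "label": "OK"},
    {"supplier": "A", "label": "OK"},
    {"supplier": "A", "label": "NOK"},
    {"supplier": "A", "label": "NOK"},
    {"supplier": "B", "label": "OK"},
    {"supplier": "B", "label": "OK"},
    {"supplier": "B", "label": "OK"},
]
counts, sparse = audit_training_set(records)
print(sparse)  # groups with too few images
```

In this made-up set, supplier B contributes no NOK images at all, so a network trained on it could learn to associate supplier B's surface pattern with OK rather than learning the defect itself.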

Outperforming rule-based inspection

Images acquired during actual production help generate data for deep learning-based solutions. The existing experience in generating suitable images and highlighting relevant features such as cracks via lighting and contrast, the core skills of classical machine vision, continues to apply. However, a deep learning-based system will not differentiate between background and defect in the same way as a traditional machine vision inspection system. In such systems, the hidden layers between the input and output layers of a deep neural network extract features by a mechanism that is not readily interpretable.

However, confronted with complex situations that break the boundaries of rule-based algorithms, deep learning systems perform much better than rule-based software. That is why Continental as a corporation is beginning to use artificial intelligence as much for product development as for manufacturing purposes. Automated driving (AD), for example, provides one use case where artificial intelligence will be needed to enable a control unit to handle new and unexpected traffic situations which cannot be described by rules.

Getting prepared for the unexpected

That is what VED is looking for: A new tool that is prepared for the unexpected. Many challenges wait alongside the road to that new tool. The first is the database for the supervised learning phase during which the neural system is fed a massive amount of image data.

Another challenge lies in the timing. If a new part goes into production, images of OK and potential NOK parts are hard to come by. So how will the deep learning-based algorithm go through its learning curve?

One solution is to develop a machine vision system that learns very quickly. Such a system could be ready for mass production after collecting images during a short pilot production phase.

The VED organization’s absolute dedication to quality is reflected in the performance requirements for a deep learning-based vision system. Here, "positive" means that a part is classified as OK. A false negative, in which an OK part is wrongly classified as NOK, is not considered much of a problem: it increases cost but does not impact safety. A false positive, in which a NOK part is classified as OK, is totally unacceptable.
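One common way to express such an asymmetric requirement is through the acceptance threshold applied to the network's output. The sketch below assumes, hypothetically, that the system returns a score between 0 and 1 expressing how confident it is that a part is OK; the function name and the scores are made up for illustration and do not describe the evaluated product.

```python
def zero_escape_threshold(ok_scores, nok_scores):
    """Return (threshold, ok_reject_rate) for a validation set.

    A part is accepted as OK only if its score is strictly greater than
    `threshold`. The threshold is set to the highest score observed on any
    NOK part, so no NOK part in this validation set is accepted. The price
    is the share of OK parts that fall at or below it and get rejected.
    """
    threshold = max(nok_scores)
    rejected_ok = sum(1 for score in ok_scores if score <= threshold)
    return threshold, rejected_ok / len(ok_scores)

# Illustrative, made-up "OK confidence" scores (not production data).
ok_scores = [0.99, 0.97, 0.95, 0.80, 0.98]
nok_scores = [0.10, 0.35, 0.85, 0.05]

threshold, ok_reject_rate = zero_escape_threshold(ok_scores, nok_scores)
print(threshold, ok_reject_rate)
```

With these numbers, one of the five OK parts is sacrificed so that every NOK part is rejected. The guarantee holds only for the validation images used; it says nothing by itself about defects the system has never seen.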

To get the statistical background data to evaluate the performance of a deep learning-based algorithm, test runs in the VED machine vision lab will replicate a previously successful daily manufacturing situation. The test consists of OK and NOK production parts that go through a deep learning-based vision inspection system to check whether any false negatives or false positives occur. Once possible faults in classification can be observed, it will also be possible to learn from them and to amend the supervised learning phase. This serves to develop trust in deep learning-based machine vision systems by thoroughly evaluating the system performance and demonstrating that no NOK parts will slip through.
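A test run of this kind can be summarized by counting both error types and, if no NOK part escaped, by asking how strong that evidence is. The sketch below is an illustration under stated assumptions, not the VED lab's procedure: it uses the article's convention (false positive = NOK part classified as OK), made-up results, and the standard one-sided binomial bound for zero observed events, which presumes the tested NOK parts are independent and representative of production.

```python
def tally(results):
    """Count outcomes from (true_label, predicted_label) pairs."""
    false_positives = sum(1 for t, p in results if t == "NOK" and p == "OK")
    false_negatives = sum(1 for t, p in results if t == "OK" and p == "NOK")
    n_nok = sum(1 for t, _ in results if t == "NOK")
    return false_positives, false_negatives, n_nok

def escape_rate_upper_bound(n_nok, confidence=0.95):
    """Upper bound on the NOK escape rate when 0 of n_nok parts escaped.

    Solves (1 - p)^n = 1 - confidence for p.
    """
    return 1.0 - (1.0 - confidence) ** (1.0 / n_nok)

# Illustrative, made-up test run: 300 NOK parts, 700 OK parts.
results = (
    [("NOK", "NOK")] * 300   # every NOK part rejected
    + [("OK", "OK")] * 695   # OK parts accepted
    + [("OK", "NOK")] * 5    # OK parts wrongly rejected
)

false_positives, false_negatives, n_nok = tally(results)
if false_positives == 0:
    bound = escape_rate_upper_bound(n_nok)
    print(f"0 of {n_nok} NOK parts escaped; 95% upper bound: {bound:.4f}")
else:
    bound = None
    print(f"{false_positives} NOK parts were classified as OK")
```

For 300 NOK parts with no escapes, the bound comes out at roughly 1%, which shows why "no false positives in the test" needs a large and varied NOK sample before it supports a strong claim.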

The challenge of solder joints

One area where VED may benefit from deep learning-based vision is the inspection of solder joints. This is a particularly tricky task because the many possible defects vary greatly in appearance while OK solder joints always look quite similar. Singling out NOKs is not that difficult, but classifying the type of defect is very complex with conventional algorithms. Even getting the lighting right for solder joint inspection is a challenge. However, knowing the exact damage mechanism would be valuable for optimizing the solder process in a continuous feedback loop.

Another use case is the inspection of fiber-reinforced black rubber bellows. To ensure the required durability of this type of component, the correct fiber distribution and orientation need to be verified despite next-to-no contrast. Deep learning is expected to handle lower levels of contrast better than conventional machine vision.

Another challenge along the road to deep learning-based vision inspection is the question of re-training a system: the operator authorized to re-train a system and the set of image data used must be defined. After the re-training there must be a standardized validation process.

Co-existing solutions

One thing is certain: Assembly of electronic brake systems is not getting any easier and new tools for machine vision will be needed as traditional solutions begin to reach their limits in some areas. New inspection tasks are now beginning to show and will gain importance in the future. Deep learning-based machine vision systems will provide a new type of tool that can fill in some of the foreseeable gaps of manufacturing inspection.

Nuria Garrido López, Senior Expert Machine Vision, Vehicle Dynamics business unit, Continental (Hanover, Germany: www.continental-automotive.com)