Deep learning tools inspect food and organic products

Mateusz Barteczko and Michał Czardybon

Traditional rule-based machine vision inspection systems work well for many industrial automation applications because the parts being inspected are meant to be uniform in dimension, so the requirements for a pass result are relatively easy to program.

For applications such as feature detection, checking packaging completeness when object positions are not predetermined, or detecting foreign bodies in images that contain a very large number of objects, however, traditional machine vision may struggle to produce reliable, repeatable results.

The food and organic products industries in particular may be poorly served by traditional machine vision, in part because their products lack uniformity. A bushel of apples, for example, likely does not contain even two perfectly identical pieces of fruit. Deep learning techniques, which handle product variance better, are a more appropriate fit for these industries.

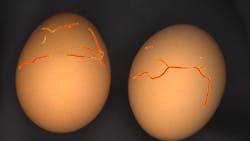

Feature detection tools, for instance, can identify simple deviations. Conveyor belt systems that move chicken eggs to a packing station may accidentally crack some of them. A deep learning model can learn to detect a specific feature, in this case the cracks in an eggshell. Drawing tools are used to mark all visible cracks on multiple training images. With as few as 15 images annotated this way and a tool such as DetectFeatures (bit.ly/VSD-DTFT), part of the Adaptive Vision Studio software library, a model can learn to detect cracked eggs in only 10 minutes of training.
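After training, a feature-detection model marks which pixels belong to the learned feature, and the inspection still needs a pass/fail rule on top of that. The sketch below assumes the model returns a per-pixel crack probability map with values in [0, 1]; the function name, the 0.5 probability threshold, and the 20-pixel minimum crack area are hypothetical choices for illustration, not parameters of DetectFeatures.

```python
import numpy as np

def egg_is_cracked(crack_map: np.ndarray,
                   prob_threshold: float = 0.5,
                   min_crack_pixels: int = 20) -> bool:
    """Pass/fail decision from a per-pixel crack probability map.

    Assumes crack_map holds one value in [0, 1] per image pixel, the kind
    of output a feature-detection model might produce (hypothetical format).
    """
    # Pixels the model considers part of a crack
    crack_pixels = crack_map >= prob_threshold
    # Require a minimum crack area so isolated noisy pixels do not reject an egg
    return int(crack_pixels.sum()) >= min_crack_pixels

# Usage with synthetic maps: an intact egg and one with a 50-pixel crack
intact = np.zeros((100, 100))
cracked = intact.copy()
cracked[40, 10:60] = 0.9
print(egg_is_cracked(intact), egg_is_cracked(cracked))  # False True
```

The minimum-area rule trades sensitivity for robustness: lowering it catches hairline cracks sooner but makes the line more likely to reject eggs over a few misclassified pixels.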

Detecting pieces of onion skin among peeled onions and spotting feathers on a chicken meat packaging line are other food inspection tasks where feature detection tools prove useful. These tools can even detect sunflower hulls in images of hundreds of sunflower seeds, with as few as 20 labeled training images.
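When an image holds hundreds of seeds, the useful result is often how many separate hull fragments were found rather than a single yes/no. One common way to turn a binary feature mask into a count is connected-component labeling. The sketch below assumes the model output has already been thresholded into a boolean mask (a hypothetical input format) and counts 4-connected regions with a breadth-first flood fill; `scipy.ndimage.label` performs the same job if SciPy is available.

```python
from collections import deque

import numpy as np

def count_regions(mask: np.ndarray) -> int:
    """Count 4-connected regions of True pixels in a 2D boolean mask."""
    visited = np.zeros_like(mask, dtype=bool)
    rows, cols = mask.shape
    count = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r, c] and not visited[r, c]:
                count += 1
                # Breadth-first flood fill marks the whole region as visited
                queue = deque([(r, c)])
                visited[r, c] = True
                while queue:
                    y, x = queue.popleft()
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny, nx] and not visited[ny, nx]):
                            visited[ny, nx] = True
                            queue.append((ny, nx))
    return count

# Usage: a synthetic mask containing two separate hull fragments
hull_mask = np.zeros((10, 10), dtype=bool)
hull_mask[1:3, 1:3] = True   # first hull fragment
hull_mask[6:8, 5:9] = True   # second hull fragment
print(count_regions(hull_mask))  # 2
```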

Anomaly detection deep learning systems use tools such as DetectAnomalies (bit.ly/VSD-DTAN) that train only on fault-free examples. After training, a discrepancy between expected and observed pixel values in any part of an inspected image suggests an anomaly. A heatmap highlights these anomalous pixels and shows where the anomaly is located, even though the inspection program was never shown what a defect looks like.
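The core idea (learn "normal" from good samples only, then score each pixel by how far it departs from normal) can be illustrated without a neural network. The sketch below deliberately swaps in a simple statistical stand-in: it fits a per-pixel mean and standard deviation over fault-free images and reports deviations in standard deviations as the heatmap. This is not how DetectAnomalies works internally; the function names and the z-score threshold of 4.0 are hypothetical.

```python
import numpy as np

def fit_normal_model(good_images: np.ndarray):
    """Per-pixel mean and standard deviation over fault-free images, shape (N, H, W)."""
    mean = good_images.mean(axis=0)
    std = good_images.std(axis=0) + 1e-6  # small epsilon avoids division by zero
    return mean, std

def anomaly_heatmap(image: np.ndarray, mean: np.ndarray, std: np.ndarray) -> np.ndarray:
    """Deviation of each pixel from the fault-free model, in standard deviations."""
    return np.abs(image - mean) / std

def is_anomalous(heatmap: np.ndarray, z_threshold: float = 4.0) -> bool:
    """Flag the image if any pixel deviates more than z_threshold standard deviations."""
    return bool((heatmap > z_threshold).any())

# Three fault-free 8x8 "images" with slight brightness variation
good = np.stack([np.full((8, 8), v) for v in (0.48, 0.50, 0.52)])
mean, std = fit_normal_model(good)

normal = np.full((8, 8), 0.51)
defect = normal.copy()
defect[3, 3] = 0.9  # a single bright foreign spot

print(is_anomalous(anomaly_heatmap(normal, mean, std)))  # False
heat = anomaly_heatmap(defect, mean, std)
row, col = np.unravel_index(heat.argmax(), heat.shape)
print(is_anomalous(heat), int(row), int(col))  # True 3 3
```

A per-pixel model like this only works when products are well aligned; the appeal of a deep learning approach for food is precisely that it tolerates the natural variation this simple baseline cannot.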

Anomaly detection may not suit some packaging completeness applications, however. A boxed airline meal, for example, will contain a set number of objects, but not in predefined positions. In this case, image segmentation tools may serve the application better. These tools locate one or more objects within an image, segment the image to isolate those objects, and then classify them.

An airline meal may have to include a cup of yogurt, a granola bar, a block of cheese, and a bag of nuts, for example. A tool such as SegmentInstances (bit.ly/VSD-SGMT) can be trained to recognize these specific objects using training images taken from varied angles. Then, when analyzing an image of a set of products prior to packaging, the image segmentation model checks for all four items: the yogurt, granola bar, cheese, and nuts.
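Once the segmentation model has returned a class label for each object instance it found, the completeness check itself reduces to comparing those labels with the required contents. The sketch below assumes the model output has been reduced to a list of label strings; the class names and the `check_meal` helper are hypothetical. It uses `collections.Counter`, whose subtraction keeps only positive counts, so the differences directly give missing and surplus items.

```python
from collections import Counter

# Hypothetical class names a segmentation model might be trained on
REQUIRED_ITEMS = {"yogurt": 1, "granola_bar": 1, "cheese": 1, "nuts": 1}

def check_meal(detected_labels):
    """Compare detected instance labels against the required meal contents.

    Returns (ok, missing, extra), where missing and extra map label -> count.
    """
    found = Counter(detected_labels)
    required = Counter(REQUIRED_ITEMS)
    missing = required - found   # items present fewer times than required
    extra = found - required     # unexpected or duplicated items
    return not missing and not extra, dict(missing), dict(extra)

print(check_meal(["yogurt", "cheese", "nuts"]))
# (False, {'granola_bar': 1}, {})
print(check_meal(["yogurt", "granola_bar", "cheese", "nuts"]))
# (True, {}, {})
```

Treating extra items as a failure is a design choice; a line that tolerates a duplicate granola bar would check only `missing`.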

Point location tools, like image segmentation tools, also suit counting applications. A company that sells potted flowers may want to use only plants that have a certain number of flower buds. A deep learning tool such as LocatePoints (bit.ly/VSD-LOCP) can train a model to identify the general shape of a flower bud and pinpoint the center position of each bud. Each center position generates a point, so counting the points gives the number of flower buds present. The potting machines then select only plants that meet the defined criteria.
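Counting points sounds trivial, but a practical counter usually discards low-confidence points and merges near-duplicates that land on the same bud. The sketch below assumes each located point carries a confidence score (a hypothetical output format) and applies greedy non-maximum suppression by distance before comparing the count with the acceptance criteria. The `Point` class, the 0.5 score threshold, and the 5-pixel minimum distance are illustrative assumptions, not LocatePoints parameters.

```python
from dataclasses import dataclass

@dataclass
class Point:
    x: float
    y: float
    score: float  # model confidence for this bud center (hypothetical field)

def count_buds(points, min_score=0.5, min_distance=5.0):
    """Count confident points, dropping any closer than min_distance to a stronger one."""
    kept = []
    # Visit the most confident points first so duplicates defer to the best detection
    for p in sorted(points, key=lambda p: p.score, reverse=True):
        if p.score < min_score:
            continue
        if all((p.x - q.x) ** 2 + (p.y - q.y) ** 2 >= min_distance ** 2 for q in kept):
            kept.append(p)
    return len(kept)

def plant_accepted(points, min_buds=5, max_buds=None):
    """Accept a plant whose bud count falls inside the required range."""
    n = count_buds(points)
    return n >= min_buds and (max_buds is None or n <= max_buds)

# Two real buds, one duplicate detection of the first bud, one weak false point
points = [Point(10, 10, 0.9), Point(11, 10, 0.8), Point(40, 40, 0.95), Point(70, 20, 0.3)]
print(count_buds(points))                  # 2
print(plant_accepted(points, min_buds=3))  # False
```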

Quality grading applications for food and organic products may also use object classification tools. To return to the earlier example, no two apples in a bushel are identical, which rules out precise definitions of good and bad results, yet each apple should still exhibit common characteristics, such as a particular color that depends on the variety.

Similarly, different object classes defined by the total amount of yellow or green on a banana can correspond to levels of ripeness. Combined with an automated picking routine, object classification tools can ensure that only fresh fruit is delivered to stores.
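A classifier typically produces one raw score (logit) per class, and the picking routine needs a decision. The sketch below assumes four hypothetical ripeness classes and a hypothetical set of shippable classes; it converts the scores to probabilities with a numerically stable softmax, picks the most likely class, and sends low-confidence fruit to manual review instead of guessing. The class names, the 0.7 confidence threshold, and the routing labels are all illustrative assumptions.

```python
import math

# Hypothetical ripeness classes, ordered from least to most ripe
CLASSES = ["green", "ripening", "ripe", "overripe"]
SHIPPABLE = {"ripening", "ripe"}

def softmax(logits):
    """Convert raw class scores to probabilities that sum to 1."""
    m = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def route_banana(logits, min_confidence=0.7):
    """Return (predicted class, decision) from a classifier's raw outputs."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    label = CLASSES[best]
    if probs[best] < min_confidence:
        return label, "manual_review"
    return label, "ship" if label in SHIPPABLE else "reject"

print(route_banana([0.1, 0.5, 3.0, 0.2]))  # ('ripe', 'ship')
print(route_banana([0.0, 0.0, 0.0, 4.0]))  # ('overripe', 'reject')
print(route_banana([0.0, 0.0, 0.0, 0.0]))  # ('green', 'manual_review')
```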

In some of the use cases discussed above, traditional machine vision could accomplish the task, but only with far more extensive and difficult preparation than a deep learning method requires.

- Mateusz Barteczko, Lead Machine Vision Engineer, and Michał Czardybon, CEO, Adaptive Vision