How AI vision systems can succeed with human input

Daniel Bibireata

Artificial intelligence (AI) technologies help automate diverse tasks in manufacturing and beyond more than ever before. A recent survey by Landing AI and the Association for Advancing Automation (A3) shows that 26% of manufacturing businesses currently use AI for visual inspection, a number that should grow in the near future. Before trusting an AI model to make critical decisions such as defect detection, however, one must subject it to robust testing and validation.

In successful AI application deployment, the proof of concept stage comes first. In this stage, engineers identify a use case and build an AI model on a laptop or workstation. Once the model performs accurately, engineers run the model in a live environment. The model may then scale up to multiple production lines across multiple factories. Many teams may succeed in building a proof of concept, but when the AI system deploys in a live production environment, issues arise. In fact, respondents to the recent survey cited “achieving lab results in production” as one of the top three challenges of AI projects.

Many may wonder why the AI system achieves positive results in the lab, but not so much in production. It turns out that a production environment, being live and dynamic, introduces unexpected cases to the AI model for which it is not prepared. For example, the performance of an AI system developed to detect scratches of a certain color could start degrading if a new manufacturing process causes darker-tinted scratches to appear or a stained camera lens causes the images to suddenly blur.

Deploying an AI-based vision system in production is an iterative process rather than a single event. Instead of risking performance failure by forcing a model to survive on its own when it goes live, successful teams set up the system to work collaboratively with humans in what’s known as the human-in-the-loop strategy. This strategy breaks down into the following three steps, which are designed to build human experts’ trust in AI without interrupting the normal operations of a production line.

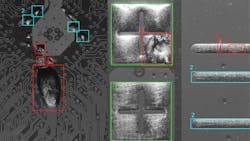

AI shadows the human experts. Run the AI system in a live production environment but let human experts make all decisions independently. In doing so, the human can observe the AI’s performance and make improvements. When the AI’s output disagrees with the human expert’s decision, analyze and label these hard cases, then retrain the system with them to refine the AI’s decision making.

Human experts shadow AI. As the system improves, let AI propose decisions to a human expert, who then makes the final determination. As in the first step, when the human expert rejects the AI’s decision, capture that data, use it to retrain the system, then perform over-the-air updates.

Scale the system. Let the AI start to decide high-confidence cases while letting the human expert decide difficult cases. Scaling can take place with a collaborative workflow between the AI and the human in place. At this stage, each human expert can work with multiple AI systems (e.g., one expert could supervise 10 systems that control 10 different production lines). An AI team should have the tools to track the AI fleet performance and keep the system up to date.
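The three steps above can be sketched as a small routing rule. This is a minimal illustration, not Landing AI's implementation: the names `Stage`, `Prediction`, `InspectionLoop`, and `retrain_queue`, as well as the 0.9 confidence threshold, are assumptions made for the example. In the first two stages every case goes to a human expert; in the scaled stage only cases below the threshold do. In all stages, disagreements between the human and the AI are queued as hard cases for labeling and retraining.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class Stage(Enum):
    AI_SHADOWS_HUMAN = 1  # human decides; the AI prediction is only compared
    HUMAN_SHADOWS_AI = 2  # AI proposes; human makes the final determination
    SCALED = 3            # AI decides high-confidence cases on its own


@dataclass
class Prediction:
    label: str         # e.g. "defect" or "ok"
    confidence: float  # model score in [0, 1]


@dataclass
class Outcome:
    final_label: str
    decided_by: str     # "human" or "ai"
    disagreement: bool  # True when the human and AI labels differ


@dataclass
class InspectionLoop:
    stage: Stage
    threshold: float = 0.9  # hypothetical cut-off; tune per application
    retrain_queue: List[dict] = field(default_factory=list)

    def needs_human(self, pred: Prediction) -> bool:
        # Only the scaled stage lets the AI decide alone, and only when
        # its confidence reaches the threshold.
        if self.stage is Stage.SCALED:
            return pred.confidence < self.threshold
        return True

    def decide(self, image_id: str, pred: Prediction,
               human_label: Optional[str] = None) -> Outcome:
        if not self.needs_human(pred):
            return Outcome(pred.label, "ai", False)
        if human_label is None:
            raise ValueError("a human label is required for this case")
        disagreement = human_label != pred.label
        if disagreement:
            # Hard case: keep it for analysis, labeling, and retraining.
            self.retrain_queue.append(
                {"image_id": image_id, "ai": pred.label, "human": human_label}
            )
        return Outcome(human_label, "human", disagreement)


# Usage: a scaled line where the AI handles the confident case itself
# and the expert overrides the uncertain one.
loop = InspectionLoop(stage=Stage.SCALED, threshold=0.9)
easy = loop.decide("img-001", Prediction("defect", 0.98))
hard = loop.decide("img-002", Prediction("ok", 0.60), human_label="defect")
```

Keeping the stage and threshold as explicit settings means a team could move a line from one step to the next, or tighten the threshold, without changing the surrounding workflow. The length of `retrain_queue` relative to the number of human-reviewed cases also gives one simple signal for tracking performance across a fleet.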

The human-in-the-loop strategy leverages the advantages of both human experts and AI systems. AI systems consistently and effectively execute repetitive tasks that may leave human beings unfocused and fatigued, and consequently prone to error. By contrast, human beings are better at adapting to changing conditions and making judgments on difficult cases that fall outside the norm.

Working together, humans and AI can create a system that not only delivers better results in the short term but creates additional data that improves the AI’s performance over time. Improved performance also means tangible benefits. Of the 110 companies that took the survey, 62% said improved accuracy represents the top benefit of using AI-based machine vision.

When it comes to developing an AI-based visual inspection system, do not wait to implement the human-in-the-loop strategy. The sooner the AI system is exposed to real-world conditions, the faster its performance can improve, along with its chances of long-term success. With the right processes in place, an AI system can perform well, scale quickly, and generate economic benefits.

This article was written by Daniel Bibireata, Principal Engineer at Landing AI (Palo Alto, CA, USA; www.landing.ai) with contributions from Alejandro Betancourt, former Machine Learning Tech Lead at Landing AI.