FPGA-based neural network software gives GPUs competition for raw inference speed

The raw computational power of a processor, a measure of how quickly it can perform complex calculations, may be expressed in tera operations per second (TOPS). When neural networks (NNs) are the context, TOPS can also speak to inference speed, that is, how quickly the NN can correctly guess what a collection of pixels represents.

When the application is an Advanced Driver Assistance System, and the inference predicts whether or not a group of pixels in an image represents a person in a crosswalk, speed and accuracy are everything. Industrial inspection applications may have less dire consequences if accuracy is not up to snuff, but accuracy is obviously still extremely important.

Graphics processing units (GPUs) have become centerpieces in the development of artificial intelligence (AI), both for training deep learning models and also for deploying those models on embedded systems. A GPU is a parallel processor, meaning it can simultaneously perform many complex computations. As each layer of a NN is essentially a series of parallel computations, GPUs are a natural platform for these applications.

There is a price to be paid for deploying GPUs, however, and the first is literal: GPU technology continues to advance at a rapid pace, and the hardware upgrade cycle may be expensive. GPUs also may generate heat and require power supplies that create engineering challenges.

Machine learning software developer Mipsology (San Francisco, CA, USA; www.mipsology.com) thinks that none of those prices have to be paid for quick, accurate NN performance. The company believes that comparatively modest field programmable gate arrays (FPGAs) can run NNs just as well if not better in some cases than a GPU, and the company has benchmark data to argue the point.

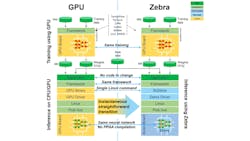

Mipsology’s flagship software, Zebra, built by scientists and engineers with experience developing FPGA-based supercomputers, is designed to accelerate NN inference on FPGAs. The goal is to be for NNs on FPGAs what GPU libraries and drivers are for NNs running on GPUs (Figure 1). Mipsology wants Zebra to be software that is generic by design and can run “anywhere” on an FPGA that can support a NN.

Where the CUDA programming platform and the associated cuDNN library are used to program a GPU to run a trained model, a developer can instead use Zebra to implement the same trained model on an FPGA, without having to change the trained NN (Figure 2).

The key difference between how an FPGA using Zebra and a GPU typically run deep learning models is the use of 8-bit integers versus floating-point numbers. An 8-bit integer has 256 potential values. A 32-bit floating-point number has more than four billion possible bit patterns. This means 32-bit floating point-based calculations can be much more precise and detailed than 8-bit integer-based calculations. Depending on the application, that level of precision may not be required.
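To put rough numbers on that gap, the short Python sketch below counts the available values in each format and compares the rounding error when one sample value is stored each way. The helper names, the sample value, and the choice of a [-1, 1] range for the 8-bit grid are illustrative assumptions, not anything taken from Zebra.

```python
import struct

INT8_LEVELS = 2 ** 8        # distinct values an 8-bit integer can hold
FLOAT32_PATTERNS = 2 ** 32  # distinct 32-bit patterns (a few encode NaN or infinity)

def to_float32(x: float) -> float:
    """Round a Python float (64-bit) to the nearest 32-bit float."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def to_int8_grid(x: float, max_abs: float = 1.0) -> float:
    """Snap x to the nearest evenly spaced 8-bit level covering [-max_abs, max_abs].

    The symmetric range and the 127-step scale are assumptions for illustration.
    """
    scale = max_abs / 127
    q = max(-127, min(127, round(x / scale)))  # the stored 8-bit integer
    return q * scale                           # the real value it stands for

value = 0.123456789  # arbitrary sample value
float32_error = abs(to_float32(value) - value)
int8_error = abs(to_int8_grid(value) - value)

print(f"8-bit integer values:   {INT8_LEVELS:,}")
print(f"32-bit float patterns:  {FLOAT32_PATTERNS:,}")
print(f"float32 rounding error: {float32_error:.2e}")
print(f"8-bit rounding error:   {int8_error:.2e}")
```

The 8-bit error is several orders of magnitude larger for this value, which is the loss of precision the article describes. Whether that loss matters depends on what the downstream decision needs.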

Zebra is programmed around an algorithm that took four years to develop and uses 8-bit values to mimic 32-bit floating point calculations, reducing precision but without changing accuracy.

“Imagine that you want to draw a line between points on a piece of paper, and each point represents a potential answer, blue or red. That’s very easy to do,” says Ludovic Larzul, Founder and CEO of Mipsology. “Now, try to draw that line on a 1 x 1-in. grid. It’s very hard to draw a line when you have to align on a grid, because you have to pause at every intersection of the lines and decide what to do [draw a straight line to the next intersection] until you get to the other point.”

The line drawn freely on the plain piece of paper is a metaphor for a 32-bit floating point calculation. The line that has to follow the grid represents an 8-bit integer-based calculation, which can only land on a limited set of values. Both lines still terminate on a point labeled blue or red. The metaphor is very simplified, Larzul admits, but demonstrates the idea. The NN’s conclusion is the same on both GPU and FPGA. It’s just a matter of how many steps it takes to arrive at the same place and with how much detail the line is drawn.
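The idea that a coarser calculation can still land on the same answer can be sketched in a few lines of Python. This is not Mipsology's algorithm, which the article does not describe; it is a generic symmetric 8-bit quantization scheme applied to a made-up two-class scorer, and every name, weight, and input value below is a hypothetical chosen for illustration.

```python
LABELS = ["red", "blue"]

# Hypothetical weights of a tiny two-class linear scorer and one input sample.
WEIGHTS = [[0.8, -0.3, 0.5],
           [-0.6, 0.9, 0.1]]
PIXELS = [0.7, 0.2, 0.9]

def scale_for(values):
    """Real-valued step size so the largest magnitude maps to the integer 127."""
    return max(abs(v) for v in values) / 127

def quantize(values, scale):
    """Map real values to 8-bit integers in [-127, 127]."""
    return [max(-127, min(127, round(v / scale))) for v in values]

def float_scores(weights, x):
    """Reference result computed entirely in floating point."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

def int8_scores(weights, x):
    """Same dot products, but the multiply-accumulate uses only integers."""
    x_scale = scale_for(x)
    xq = quantize(x, x_scale)
    scores = []
    for row in weights:
        w_scale = scale_for(row)
        wq = quantize(row, w_scale)
        acc = sum(a * b for a, b in zip(wq, xq))  # integer arithmetic only
        scores.append(acc * w_scale * x_scale)    # rescale once at the end
    return scores

float_result = float_scores(WEIGHTS, PIXELS)
int8_result = int8_scores(WEIGHTS, PIXELS)
best_float = LABELS[float_result.index(max(float_result))]
best_int8 = LABELS[int8_result.index(max(int8_result))]

print("float scores:", float_result, "->", best_float)
print("int8 scores: ", int8_result, "->", best_int8)
```

For this sample the two sets of scores differ slightly in their decimals, yet both pick the same label. That is the sense in which precision can drop while the answer stays put; real networks have many layers, so keeping that property across all of them is a considerably harder problem than this toy case suggests.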

Switching from floating points to integers also effectively increases speed, says Larzul. “If you want to do multiplication using floating points, you are using a lot of silicon and packing less operation on the same surface of silicon than if you’re using integers. By switching to integers, you increase the level of throughput using the same silicon.”

Running NNs on FPGAs using Zebra also has its trade-offs. There may be applications for which precise results are critical and therefore demand the raw power of a GPU. Larzul acknowledges this. That said, according to Mipsology, Zebra running on FPGAs has been able to provide benefits for certain applications that GPUs cannot, such as real-time processing of cameras at full speed for some 6-axis robot operations or computing multiple NNs in parallel without reload delay. The company cannot share specific details about these tests, however, as the data is still protected by NDAs.

The demonstration of Zebra’s practical value for machine vision and imaging lies in A/B comparisons of speed and accuracy when performing the same application on both an FPGA and a GPU. These demonstrations will draw another kind of line, between the applications where an FPGA is an adequate replacement for a GPU and those where it is not.

Mipsology does now have another set of empirical data that carries weight. The MLCommons Association is the home of the MLPerf Inference v0.7 benchmarking suite, which measures the accuracy, speed, and efficiency of machine learning software. Since 2018, the organization has regularly released these benchmark results.

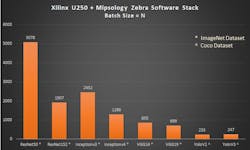

Xilinx (San Jose, CA, USA; www.xilinx.com), the pioneer of FPGA technology, participated in MLPerf testing in 2020 for the first time, running Mipsology’s Zebra on an Alveo U250 accelerator card. The relevant benchmarking test used the ResNet-50 model to execute image classification tasks. According to the MLPerf results, the performance of the U250, measured in image classifications per second, was superior to that of several GPU-based systems.

While FPGAs may not be able to substitute for GPUs in all inference applications, for instance if the accuracy of a 32-bit floating point calculation specifically is required, the MLPerf results demonstrate that FPGA technology and 8-bit integers, with the right software stack, can run inference efficiently and well.

About the Author

Dennis Scimeca

Dennis Scimeca is a veteran technology journalist with expertise in interactive entertainment and virtual reality. At Vision Systems Design, Dennis covered machine vision and image processing with an eye toward leading-edge technologies and practical applications for making a better world. Currently, he is the senior editor for technology at IndustryWeek, a partner publication to Vision Systems Design.