Processing Gigapixels in Real Time

David Yakar

Driven by the latest CMOS sensor technology, machine vision cameras deliver multiple gigapixels per second, the product of higher resolutions at higher frame rates. Processing the resulting 10 to more than 100 Gbps in real time, however, is a challenge. Can novel hardware architectures built on the latest high-performance FPGAs provide the solution?

Image Sensors are Setting the Pace

Thanks to advancements in CMOS technology, the trend toward multi-megapixel sensors with frame rates of hundreds to thousands of frames per second (fps) has accelerated. Companies such as Gpixel (Changchun, China; www.gpixel.com), Luxima Technology (Pasadena, CA, USA; www.luxima.com), Teledyne e2v (Milpitas, CA, USA; imaging.teledyne-e2v.com), AMS, onsemi (Phoenix, AZ, USA; www.onsemi.com), and Sony (Minato City, Tokyo, Japan; www.sony.com) are driving this development. The next generation of image sensors will deliver data rates of 160 Gbps and beyond.

Figure 1 plots the resolution and maximum frame rate of current 8- to 12-bit high-end image sensors against the effective bandwidth of standardized camera interfaces. It shows that existing camera interface technologies cannot meet the bandwidth requirements of the latest sensors. In response, the machine vision industry is pursuing higher-bandwidth interfaces such as the NBASE-T GigE Vision standard and fiber-optic-based CoaXPress-12.

Beyond the bandwidth of individual sensors, multicamera applications combining several such high-end sensors have become ubiquitous, in fields ranging from virtual reality to broadcasting, surveillance, medical imaging, and 3D quality inspection. A 3D sports broadcasting system, for example, may comprise more than 30 cameras, each with 65-MPixel resolution and running at 30 fps.
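The raw data rate of a sensor is simply pixels per frame times frames per second times bits per pixel. The short sketch below applies that arithmetic to the broadcast example; the 8-bit pixel depth is an assumption, since the text does not state it.

```python
def sensor_data_rate_gbps(megapixels: float, fps: float, bits_per_pixel: int) -> float:
    """Raw sensor output in Gbps: pixels/frame x frames/s x bits/pixel."""
    return megapixels * 1e6 * fps * bits_per_pixel / 1e9

# Broadcast example from the text: 30 cameras, 65 MPixel each, 30 fps.
# Assumption: 8 bits per pixel (not stated in the text).
per_camera = sensor_data_rate_gbps(65, 30, 8)
system_total = 30 * per_camera
print(f"per camera: {per_camera:.1f} Gbps, system: {system_total:.0f} Gbps")
```

This prints roughly 15.6 Gbps per camera and 468 Gbps for the whole system; at an assumed 10 bits per pixel the total rises to 585 Gbps, in line with the "several hundred Gbps" such systems must handle.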

Such high-end multicamera applications deliver several hundred Gbps that must be captured, preprocessed, analyzed, and often compressed and stored in real time, with high-precision synchronization and low latency. This requirement exceeds the capabilities of traditional CPU-based architectures.

Instead, heterogeneous processing solutions, which combine CPUs with the parallel processing capabilities of FPGAs and GPUs, offer the power and flexibility required for such high-bandwidth applications.

High Bandwidth Challenges

For sensor data rates beyond 20 Gbps, few standardized camera interface options exist: 25, 50, or 100 GigE Vision, multi-link CoaXPress v2, or PCIe. Moreover, at 20+ Gbps, MIPI links are generally limited to distances from 15 cm to a few meters, depending on the implementation. At such high transmission rates, copper cables also limit transmission distance. For GigE Vision, for example, replacing copper with fiber-optic cables extends the transmission distance from 25 m to virtually unlimited lengths. The CoaXPress standard has also recently introduced a fiber-optic interface to overcome its transmission distance limitation.
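Multi-link CoaXPress scales bandwidth by aggregating coax links. As a rough sizing aid, the sketch below estimates how many CXP-12 links a given data rate needs. It assumes a 12.5 Gbps line rate per link with 8b/10b line coding, i.e. about 10 Gbps of payload per link, and ignores protocol overhead; treat the results as lower bounds.

```python
import math

# Assumptions: CXP-12 line rate of 12.5 Gbps per link and 8b/10b coding,
# giving ~10 Gbps usable payload per link. Packet overhead is ignored.
CXP12_LINE_RATE_GBPS = 12.5
CXP_CODING_EFFICIENCY = 8 / 10

def cxp12_links_needed(data_rate_gbps: float) -> int:
    """Lower bound on the number of CXP-12 links for a given data rate."""
    payload_per_link = CXP12_LINE_RATE_GBPS * CXP_CODING_EFFICIENCY
    return math.ceil(data_rate_gbps / payload_per_link)

for rate in (20, 40, 100):
    print(f"{rate} Gbps -> at least {cxp12_links_needed(rate)} CXP-12 links")
```

Under these assumptions a 100 Gbps sensor would already need ten links, which illustrates why sensors beyond 20 Gbps quickly outgrow single-cable interfaces.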

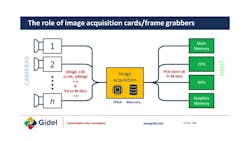

An additional challenge of high-bandwidth imaging lies in transferring the video stream to the host processing unit, whether a GPU, a CPU, or both. Video capture cards typically connect via PCIe Gen3 x8, which has an effective bandwidth of 48 Gbps. Moreover, within the host system, the CPU/GPU and the memory bridge between the graphics card and main memory must operate fast enough to avoid frame loss. Smart NICs can distribute the PCIe load and significantly reduce the workload on the host CPU, but this often comes at the expense of lost frames due to insufficient processing power.
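To see how quickly a single capture card saturates, the sketch below starts from the PCIe Gen3 signaling rate (8 GT/s per lane, 128b/130b encoding) and then uses the 48 Gbps effective figure from the text, which additionally reflects packet overhead. The per-camera load of 15.6 Gbps assumes a 65-MPixel, 30 fps sensor at 8 bits per pixel (65e6 x 30 x 8); the helper name `cameras_per_card` is illustrative only.

```python
# PCIe Gen3: 8 GT/s per lane, 128b/130b line encoding.
GEN3_GT_PER_LANE = 8.0
ENCODING = 128 / 130
LANES = 8
EFFECTIVE_GBPS = 48.0  # effective figure quoted in the text (includes packet overhead)

line_payload_gbps = GEN3_GT_PER_LANE * LANES * ENCODING  # ~63 Gbps before packet overhead

def cameras_per_card(per_camera_gbps: float, link_gbps: float = EFFECTIVE_GBPS) -> int:
    """How many uncompressed camera streams fit through one capture card's PCIe link."""
    return int(link_gbps // per_camera_gbps)

print(f"after line encoding: {line_payload_gbps:.1f} Gbps")
print("65 MP @ 30 fps (8-bit) cameras per x8 card:", cameras_per_card(15.6))
```

Only three such uncompressed streams fit through one Gen3 x8 card, so a 30-camera system would need about ten cards before the host memory bridge is even considered.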

Advanced Image Processing on FPGA-based Frame Grabbers

At data rates of 10 to 100+ Gbps, most applications must perform part of the processing at the image source to avoid bandwidth bottlenecks and meet low-latency requirements. This means shifting complex image processing tasks from the host into the image acquisition pipeline. Such solutions must go far beyond the basic preprocessing capabilities of traditional frame grabbers: they must be able to run complex imaging algorithms, from wavelet transforms to deep learning inference and real-time compression. Compression is mandatory to overcome the PCIe and host bandwidth bottlenecks described above.
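The case for on-board compression comes down to a ratio: raw data rate divided by the available link bandwidth. The sketch below computes the minimum compression ratio for a target link, with an optional utilization factor for headroom (an assumption; real systems rarely run a link at 100%). The 30-camera broadcast example at an assumed 8 bits per pixel produces about 468 Gbps, which is compared with the 48 Gbps PCIe Gen3 x8 figure from the text.

```python
def min_compression_ratio(raw_gbps: float, link_gbps: float,
                          utilization: float = 1.0) -> float:
    """Smallest compression ratio that fits raw_gbps into a link.

    utilization: fraction of the link budget we allow ourselves to use
    (assumed headroom; 1.0 means the full link).
    """
    usable = link_gbps * utilization
    return max(1.0, raw_gbps / usable)

print(min_compression_ratio(468, 48))        # whole 30-camera system, one Gen3 x8 link
print(min_compression_ratio(15.6, 48))       # single camera fits uncompressed
print(min_compression_ratio(468, 48, 0.8))   # with 20% headroom
```

Funneling the whole system through a single card would require roughly 10:1 compression, or about 12:1 with 20% headroom, which is well within the range of real-time image codecs but far beyond what a host CPU could apply at these rates.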

Apart from expensive dedicated ASICs, FPGA-based architectures with high-speed transceivers, fast on-board memory access, and powerful DMA offload engines provide sufficient performance for high-bandwidth real-time image acquisition and processing. FPGAs can capture and process data streams at rates exceeding 100 Gbps without data loss.

FPGA technology offers tremendous flexibility, not only because it is reconfigurable but also because it can inherently process multiple data streams concurrently, which makes it ideal for multicamera applications.
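A common way to reason about FPGA throughput is datapath width times clock frequency: a fully pipelined block that accepts N pixels per clock at f MHz sustains N x f million pixels per second. The sketch below computes the width needed for one camera stream; the 250 MHz fabric clock and the four-camera count are hypothetical values chosen for illustration.

```python
import math

def pixels_per_clock(megapixels: float, fps: float, clock_mhz: float) -> int:
    """Datapath width (pixels per clock) a fully pipelined design needs to keep up."""
    pixel_rate = megapixels * 1e6 * fps          # pixels per second
    return math.ceil(pixel_rate / (clock_mhz * 1e6))

# Hypothetical: 250 MHz fabric clock, one independent pipeline per camera.
per_camera = pixels_per_clock(65, 30, 250)
print(f"{per_camera} pixels/clock per camera; "
      f"4 cameras -> {4 * per_camera} parallel pixel lanes")
```

Because each camera gets its own replicated pipeline, adding cameras scales fabric resources rather than clock speed, which is the concurrency advantage the text attributes to FPGAs.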

Traditionally, implementing FPGA applications has been the domain of FPGA programmers. In recent years, however, new tools have been introduced with the aim of making FPGAs accessible to software and algorithm developers. Moreover, the vision market offers several open FPGA-based frame grabbers that let users embed their own image processing blocks, so developers can focus strictly on the image processing FPGA block without having to develop the entire acquisition path.

Typical FPGA processing tasks include image preprocessing, region of interest (ROI) extraction, and/or compression to reduce the amount of data that must be transferred over the PCIe host interface. Real-time preprocessing enables distributed pipeline processing at the system level, significantly reducing the processing load on the host GPU or CPU.
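ROI extraction suits streaming hardware because it needs no frame buffer: pixels arrive in raster order, and a pair of x/y counters decides whether each one is forwarded or dropped. The sketch below mimics that counter-based approach in software; it is an illustrative model, not any vendor's FPGA block, and the function name `stream_roi` is hypothetical.

```python
from typing import Iterable, Iterator

def stream_roi(pixels: Iterable[int], width: int,
               x0: int, y0: int, roi_w: int, roi_h: int) -> Iterator[int]:
    """Forward only pixels inside the ROI as they arrive in raster order.

    Keeps x/y counters instead of buffering the frame, so memory use is
    constant, similar to a streaming hardware implementation.
    """
    x = y = 0
    for p in pixels:
        if x0 <= x < x0 + roi_w and y0 <= y < y0 + roi_h:
            yield p
        x += 1
        if x == width:  # end of line: wrap to the start of the next row
            x = 0
            y += 1

# 6x4 test frame whose pixel values equal their raster index
frame = range(24)
roi = list(stream_roi(frame, width=6, x0=1, y0=1, roi_w=3, roi_h=2))
print(roi)  # [7, 8, 9, 13, 14, 15]
```

Here the 3x2 ROI forwards 6 of 24 pixels, a 75% reduction in the data that would otherwise cross the PCIe interface.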

An FPGA-based architecture can be very beneficial in, for example, embedded computer vision systems at the edge, where processing and compression must be performed close to the image source without frame loss and at low latency. In such applications, the FPGA is ideally combined with an embedded host processor and GPU for heterogeneous coprocessing.