Image processing and neural networks classify complex defects

By Andrew Wilson, Editor at Large

In many industrial, medical, and scientific image-processing applications, feature- and pattern-recognition techniques such as normalized correlation are used to match specific features in an image with known templates. Despite the capabilities of these techniques, some applications require the simultaneous analysis of multiple, complex, and irregular features within images. For example, in semiconductor-wafer inspection, discovered defects are often complex and irregular and demand more "human-like" inspection techniques. By incorporating neural-network techniques, developers can train such imaging systems with hundreds or thousands of images until the system eventually "learns" to recognize irregularities.

Unlike conventional image-processing systems, neural networks are based on models of the human brain and are composed of a large number of simple processing elements that operate in parallel. In the human brain, more than a billion elements or cells, called neurons, are individually connected to tens of thousands of other neurons. Although each cell operates like a simple processor, the massive interaction among them makes vision, memory, and thought processes possible (see "Understanding the biological basis of neurons," p. 64).

Seed analysis

In many image-processing applications, the attributes of shapes within images must be extracted and classified. In biomedical microscopy, for example, different staining techniques can identify a variety of different cell types. By using image processing, shape attributes can be initially extracted for these cells and then neural networks can be used to classify them. In an automated process, such cells can be classified without using a specific staining technique.

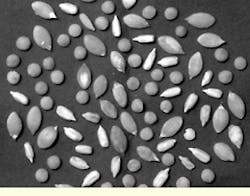

To test this automation process, John Pilkington, technical manager for image-analysis products at Scientific Computers (Crawley, England), developed a combined image-processing/neural-network system using off-the-shelf hardware and software. Instead of using different cell types, Pilkington chose to identify seeds in a mixture of pumpkin seeds, pine kernels, and lentils. To classify these seeds, images of unmixed seed types were first captured using a monochrome CCD camera. They were then digitized using an MV1000 frame grabber from MuTech (Billerica, MA). In the final system, classification was performed on images with mixed seed types.

After images were captured into system memory, the seeds were segmented from the background image, and shape and intensity information was then extracted. To accomplish this, Pilkington used the Aphelion Image-Processing and Image-Understanding software from Amerinex Applied Imaging (Northampton, MA).

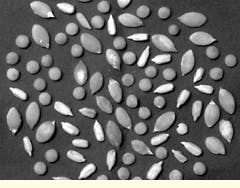

"Using a constrained watershed technique, accurate seed-shape information was derived from Prewitt edges in the image," says Pilkington. The constrained watershed required markers for each seed in the image and for the background region. "To compute these markers, Aphelion was used to perform a threshold operation, followed by a border kill of seeds intersecting the image edge. An erosion operation then highlighted the seed markers," he adds.
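The marker steps Pilkington describes (threshold, border kill, erosion) can be illustrated with a minimal pure-Python sketch. It is not Aphelion code; the function names, the tiny test image, the threshold level of 5, the 4-connectivity, and the 3 x 3 erosion element are all assumptions made for illustration, as is the choice of bright seeds on a dark background.

```python
from collections import deque

def threshold(image, level):
    """Binarize: 1 where a pixel is brighter than `level`, else 0."""
    return [[1 if p > level else 0 for p in row] for row in image]

def border_kill(binary):
    """Clear every 4-connected object that touches the image edge."""
    h, w = len(binary), len(binary[0])
    out = [row[:] for row in binary]
    queue = deque()
    for r in range(h):
        for c in range(w):
            on_edge = r in (0, h - 1) or c in (0, w - 1)
            if on_edge and out[r][c]:
                out[r][c] = 0
                queue.append((r, c))
    while queue:  # flood inward from the edge pixels just cleared
        r, c = queue.popleft()
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < h and 0 <= nc < w and out[nr][nc]:
                out[nr][nc] = 0
                queue.append((nr, nc))
    return out

def erode(binary):
    """3 x 3 erosion: a pixel survives only if all nine neighbours are set."""
    h, w = len(binary), len(binary[0])
    out = [[0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            if all(binary[r + dr][c + dc]
                   for dr in (-1, 0, 1) for dc in (-1, 0, 1)):
                out[r][c] = 1
    return out

# Hypothetical 5 x 8 image: one whole seed and one seed cut by the edge.
IMAGE = [
    [0, 0, 0, 0, 0, 0, 0, 9],
    [0, 9, 9, 9, 0, 0, 9, 9],
    [0, 9, 9, 9, 0, 0, 0, 0],
    [0, 9, 9, 9, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
]
markers = erode(border_kill(threshold(IMAGE, 5)))
```

In this toy image the seed touching the edge is discarded, and the whole seed shrinks to a single marker pixel at its center.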

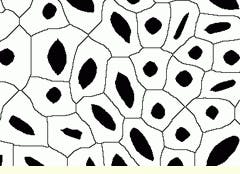

To obtain a background marker, the binary image from the threshold operation was inverted, and the distance from every pixel in the background to the nearest pixel inside a seed was computed. These maximum distances form a meshwork of ridges between the seeds that define a region of influence for each seed. By isolating this meshwork using an auxiliary watershed operation followed by a threshold, a binary image of the background marker was computed. The seed marker and the background marker binary images were then added together and a frame was added around the image. The final binary image acted as the constraint for the constrained watershed operation (see Fig. 1 on p. 63).
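The idea of a background marker lying along the ridges between seeds can be sketched as follows. Note the substitution: instead of the auxiliary watershed and threshold used in the article, this sketch assigns every background pixel to its nearest seed with a breadth-first search (city-block distance) and marks the pixels where two regions of influence meet. The function names and the labeled test image are hypothetical.

```python
from collections import deque

NEIGHBOURS = ((1, 0), (-1, 0), (0, 1), (0, -1))

def influence_zones(labels):
    """Return (distance, zone) maps grown outward from labeled seed pixels."""
    h, w = len(labels), len(labels[0])
    dist = [[None] * w for _ in range(h)]
    zone = [row[:] for row in labels]
    queue = deque()
    for r in range(h):
        for c in range(w):
            if labels[r][c]:
                dist[r][c] = 0
                queue.append((r, c))
    while queue:  # multi-source BFS gives city-block distance to nearest seed
        r, c = queue.popleft()
        for dr, dc in NEIGHBOURS:
            nr, nc = r + dr, c + dc
            if 0 <= nr < h and 0 <= nc < w and dist[nr][nc] is None:
                dist[nr][nc] = dist[r][c] + 1
                zone[nr][nc] = zone[r][c]
                queue.append((nr, nc))
    return dist, zone

def ridge_marker(labels):
    """Mark background pixels that border a different region of influence."""
    _, zone = influence_zones(labels)
    h, w = len(zone), len(zone[0])
    marker = [[0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            if labels[r][c]:
                continue  # seed pixels are never part of the background marker
            for dr, dc in NEIGHBOURS:
                nr, nc = r + dr, c + dc
                if 0 <= nr < h and 0 <= nc < w and zone[nr][nc] != zone[r][c]:
                    marker[r][c] = 1
    return marker

# Hypothetical labeled image: seed 1 on the left edge, seed 2 on the right.
SEEDS = [
    [1, 0, 0, 0, 0, 0, 2],
    [1, 0, 0, 0, 0, 0, 2],
    [1, 0, 0, 0, 0, 0, 2],
]
distance, zones = influence_zones(SEEDS)
ridge = ridge_marker(SEEDS)
```

Here the ridge falls midway between the two seeds, where the distance to the nearest seed is greatest.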

Data analysis

After the shape information was computed, AspenTech NeuralSim software from AspenTech (Cambridge, MA) was applied to classify the seeds. As a data-analysis tool, NeuralSim can build a neural network and perform data analysis, transformation, and variable selection. In addition, this package can check that the architecture of the neural network is appropriate. In developing the classifier, NeuralSim discovered that classification could be performed with relatively few errors.

"This level of performance was achieved with just three inputs: line fit error, line length, and perimeter data," says Pilkington. "And because all of these are shape attributes, no gray-level attributes were used." At first, when applied to the image, the neural-network classifier made only three misclassifications from more than 80 seeds, mistaking three pumpkin seeds for pine kernels. "By including a gray-level variable, the classifier was rebuilt. When re-applied to the image, two of the three small pumpkin seeds that were previously misclassified were correctly identified," adds Pilkington.

Recognition techniques

Many digital image-processing, analysis, and compression systems, as well as fingerprint- and face-recognition systems, require sophisticated algorithms that mimic, in some fashion, the operation of the human brain. To extract high-level information from images using neural networks, systems are generally trained using sets of user-supplied data to adjust the internal configuration of the processors until they provide the desired response.

In most imaging applications, the neural network computes relevant characteristics such as the similarity of a sequence to a given template. To perform functions such as pattern recognition, neural networks adapt their responses by automatically adjusting the weights associated with each parallel-processing element during a "learning" phase.

To speed such functions, many companies such as datafactory GmbH (Leipzig, Germany), IBM (Essonnes, France), and IMS (Stuttgart, Germany) have developed VLSI ICs and board-level products to provide the large number of multiply-accumulates (MACs) needed to perform neural-network analysis (see Vision Systems Design, Oct. 1998, p. 50).

Now, Neuricam (Trento, Italy) has introduced the NC3001, a highly parallel, full-custom VLSI computing engine with simple fixed-point processing units and on-chip weight memory optimized for the computation of neural networks based on multilayer perceptrons. To perform image computations, the NC3001 architecture uses a single-instruction, multiple-data structure that allows multiples of a basic processing element to be used with a high degree of parallelism.

To implement the multilayer perceptron, the NC3001 circuit contains an array of parallel-processing elements connected together by a broadcast bus that feeds features to the neurons and reads the results of the neurons (see Fig. 2). Each neuron contains a MAC unit and an output result register to allow straightforward implementation of multilayer networks. This fully parallel multiplier architecture provides one operation per clock cycle, whereas the time required for a full MAC depends on the number of input features. By using localized internal memory for the weights, input/output throughput has been increased at the expense of increased silicon real estate.
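The relationship between clock cycles and input features can be illustrated with a small software model. This is only a sketch of the broadcast-bus idea, not the NC3001's actual instruction set; the function name, the integer weights, and the cycle counter are assumptions.

```python
def layer_mac(features, weights):
    """Model a broadcast bus feeding one feature per clock to every neuron.

    features : list of integer inputs
    weights  : one row of integer weights per neuron (local weight memory)
    Returns the accumulator of each neuron and the number of clocks used.
    """
    accumulators = [0] * len(weights)
    cycles = 0
    for i, x in enumerate(features):           # one broadcast per clock
        for n, row in enumerate(weights):      # all neurons update in parallel
            accumulators[n] += row[i] * x      # one MAC per neuron per clock
        cycles += 1
    return accumulators, cycles

# Two neurons, three input features (illustrative values only).
results, clocks = layer_mac([3, -2, 5], [[1, 2, 3], [4, 0, -1]])
```

Because every neuron accumulates simultaneously, the clock count in this model equals the number of input features regardless of how many neurons share the bus.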

During the learning phase, the infrequent changes in the weights are delegated to the host processor. Address generator blocks for the weight memory and the result registers allow the integrated circuit to perform fuzzy inferences, finite impulse response filters, and infinite impulse response filters. To scale the results of the processing elements, a 32- to 16-bit barrel shifter is used.
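Scaling a 32-bit accumulator down to a 16-bit result might look like the following sketch. The arithmetic right shift follows from the description of a barrel shifter; the saturation to the signed 16-bit range is an assumption, since the article does not say how overflow is handled.

```python
INT16_MIN, INT16_MAX = -32768, 32767

def scale_to_16bit(acc32, shift):
    """Shift a signed accumulator right by `shift` bits, then clamp to 16 bits."""
    shifted = acc32 >> shift   # Python's >> is an arithmetic shift on ints
    return max(INT16_MIN, min(INT16_MAX, shifted))  # saturation is assumed

print(scale_to_16bit(1_000_000, 8))   # 3906
print(scale_to_16bit(1_000_000, 4))   # 32767 (clamped)
```

Choosing the shift amount trades resolution against headroom: a larger shift avoids clamping but discards more low-order bits.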

Capable of performing 1 billion multiply-accumulates per second, the IC is supplied in single- and double-processor configurations on PCI, VME, and ISA boards with a direct interface for digital cameras. According to Neuricam, both Compact PCI and PC/104 versions are under development for the embedded systems market.

FIGURE 1. Off-the-shelf image-processing hardware and software can be used to classify objects such as seeds or cells. After the original image (top) is binarized, the data are used to perform a constrained watershed operation on the original image (middle). After line-fit-error, line-length, and perimeter data are computed, they are fed to a neural network that classifies the seed types (bottom). (Images courtesy John Pilkington, Scientific Computers, Crawley, England)

FIGURE 2. At Neuricam, a multilayer perceptron structure (a) has been implemented as a series of massively parallel processing elements, each of which contains its own weight memory (b). In operation, the device can perform 1 billion multiply-accumulates per second.

Understanding the biological basis of neurons

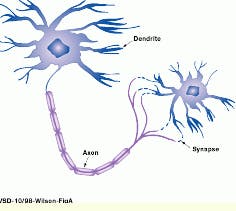

After numerous studies, the biological nature of action potentials in neurons is still not well understood. Indeed, the complex nature of the biochemical reactions in brain cells can only be approximated in simple form. And, as such, neural-network designs based on such models are at best approximations. Simply described, single neurons consist of a cell body or soma, a number of dendrites connected to other neurons via synapses, and a single nerve fiber called the axon that branches from the soma (see Fig. A).

Each neuron's axon is connected via synapses to the dendrites of other neurons and transmits electrical signals when the cellular potential in the soma exceeds a certain threshold value. Whether the neuron transmits these electrical signals or not depends on the synapse. In a living neuron, there are two types of synapses: excitatory and inhibitory. While activation of excitatory synapses increases the voltage in the neuron, activation of inhibitory synapses decreases it. At the soma, this activation energy is compared with the threshold value and if it exceeds the threshold, an electrical signal is sent along the axon to connected neurons. Because the connections between neurons are adaptive, their connection structure changes dynamically, giving such interconnected networks their learning capability.

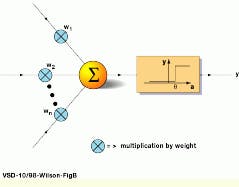

By describing neuron behavior in this way, computer researchers can model pattern-recognition functions using hardware and software models of neural networks. As early as 1943 at the University of Chicago (IL), Warren McCulloch and Walter Pitts, in their coauthored paper "A Logical Calculus of the Ideas Immanent in Nervous Activity," proposed a synthesis of neurophysiology and logic by describing an artificial neuron known as the threshold logic unit (TLU). In their model, incoming information appears at the neuron's inputs or synapses, where each signal is multiplied by a weight that represents the strength of the synapse (see Fig. B).

To model the effects of the cell body, weighted signals are then summed to produce an overall unit activation. If this activation exceeds a certain threshold modeled by a non-linear gain function, the neuron produces an output response.
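A threshold logic unit of this kind can be written in a few lines. The sketch below uses a hard step as the gain function; the function name and the particular weights and thresholds chosen to produce AND and OR behavior are illustrative assumptions.

```python
def tlu(inputs, weights, threshold):
    """Fire (return 1) when the weighted sum of inputs exceeds the threshold."""
    activation = sum(w * x for w, x in zip(weights, inputs))
    return 1 if activation > threshold else 0

# With equal weights, only the threshold separates AND from OR.
def logic_and(a, b):
    return tlu((a, b), (1, 1), 1.5)

def logic_or(a, b):
    return tlu((a, b), (1, 1), 0.5)
```

For two binary inputs the weighted sum can only be 0, 1, or 2, so a threshold of 1.5 fires only when both inputs are active, while 0.5 fires when either one is.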

In 1949, the late psychologist Donald "D. O." Hebb in his book The Organization of Behavior formulated a hypothesis describing a learning scheme for modification of the synaptic weights between neurons. This hypothesis, now known as Hebb's rule, states that the strength or weight of the synapse increases when pre- and postsynaptic elements are active together. In this manner the neuron is able to learn. When there is presynaptic activity without postsynaptic activation, the synaptic weights might decrease in strength in a process of "forgetting."
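One simple way to express Hebb's rule in code is shown below. The binary activities, the fixed learning rate, and the fixed decay applied for "forgetting" are assumptions chosen to keep the sketch small; Hebb's hypothesis itself does not prescribe these values.

```python
def hebb_update(weights, pre, post, rate=0.1, decay=0.05):
    """Return updated synaptic weights after one presentation.

    pre  : presynaptic activities, one per synapse (0 or 1)
    post : postsynaptic activity of the neuron (0 or 1)
    """
    updated = []
    for w, x in zip(weights, pre):
        if x and post:
            w += rate       # both sides active: strengthen the synapse
        elif x and not post:
            w -= decay      # presynaptic activity alone: "forgetting"
        updated.append(w)   # inactive synapses are left unchanged
    return updated

strengthened = hebb_update([0.5, 0.5], pre=[1, 0], post=1)
weakened = hebb_update([0.5, 0.5], pre=[1, 0], post=0)
```

Only the first synapse changes in either case, because the second presynaptic element is silent.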

Differentiating nets

In 1958, Frank Rosenblatt in his paper, "The perceptron: a probabilistic model for information storage and organization in the brain" (Psychological Review 65, p. 386), described the first neural network that was capable of learning through a simple reinforcement rule. In operation, the network learns by changing the weight applied to the input signal by an amount proportional to the difference between the desired and actual outputs. Although the neural network had only two neuron layers and accepted binary input and output values, Rosenblatt showed that the network could solve basic logic operations such as AND or OR.
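The learning rule described here, in which each weight changes in proportion to the difference between the desired and actual outputs, can be sketched as follows. The integer learning rate, the bias term, the epoch limit, and the function names are assumptions for illustration rather than details taken from Rosenblatt's paper.

```python
def predict(weights, bias, x):
    """Binary output of a single perceptron unit."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0

def train_perceptron(samples, rate=1, epochs=20):
    """Adjust weights by rate * (desired - actual) * input until error-free."""
    weights, bias = [0, 0], 0
    for _ in range(epochs):
        errors = 0
        for x, target in samples:
            delta = target - predict(weights, bias, x)
            if delta:
                weights = [w + rate * delta * xi for w, xi in zip(weights, x)]
                bias += rate * delta
                errors += 1
        if errors == 0:     # every sample classified correctly
            break
    return weights, bias

INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
and_w, and_b = train_perceptron(list(zip(INPUTS, [0, 0, 0, 1])))
or_w, or_b = train_perceptron(list(zip(INPUTS, [0, 1, 1, 1])))
```

Both AND and OR are linearly separable, so this rule settles on weights that reproduce each truth table after a handful of passes through the four input patterns.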

In 1969, Marvin Minsky and Seymour Papert of the Massachusetts Institute of Technology (Cambridge, MA) extended the concept of Rosenblatt's Perceptron in a neural network that consisted of an input layer, where data were fed into the network, one or more hidden layers, representing the internal neurons in the brain, and an output layer that produced the result of the computation (see Fig. C). The ability of the neural network to produce a desired result depended on the number of neurons in the network, how the neurons were connected, and the values of the input weights.
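A forward pass through such a layered network can be sketched with hand-chosen weights. The example computes exclusive-OR, a function that needs a hidden layer; the weights, biases, and step activation are assumptions picked for illustration, not values from any cited work.

```python
def step(activation):
    """Hard-threshold gain function."""
    return 1 if activation > 0 else 0

def layer(inputs, weights, biases):
    """Compute the outputs of one layer of threshold neurons."""
    return [step(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def xor_network(x1, x2):
    """Input layer -> two hidden neurons -> one output neuron."""
    # Hidden neuron 0 acts as OR, hidden neuron 1 acts as AND.
    hidden = layer([x1, x2], [[1, 1], [1, 1]], [-0.5, -1.5])
    # The output fires when OR is active but AND is not.
    return layer(hidden, [[1, -1]], [-0.5])[0]
```

Changing only the connection weights or the number of hidden neurons changes the function the network computes, which is the dependence the paragraph above describes.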

Since the introduction of such feed-forward networks as the multilayer Perceptron model, where a layer of neurons receives input from only previous layers, several researchers have developed other models in which the neurons are connected in different ways. These include recurrent, laterally connected, and hybrid neural nets, each of which can be used to better model specific applications such as logical operation, pattern classification, and speech recognition.

A fully connected model was introduced by physicist John Hopfield (see Fig. D and "Neural networks and physical systems with emergent collective computational abilities," Proc. National Academy of Sciences of the USA, 79, 2554, 1982). In this model, the inputs to a neuron come both from the external inputs and from feedback from the network's previous outputs. Such thermodynamically based models are useful in storage and pattern-recognition applications.
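The storage and recall behavior of a fully connected network with feedback can be illustrated with a minimal sketch. It assumes bipolar (+1/-1) states, Hebbian outer-product weights with no self-connections, and asynchronous updates in a fixed order; the function names and the stored pattern are hypothetical.

```python
def train_hopfield(patterns):
    """Build symmetric weights from bipolar patterns (zero diagonal)."""
    n = len(patterns[0])
    weights = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    weights[i][j] += p[i] * p[j]
    return weights

def recall(weights, state, sweeps=5):
    """Feed outputs back as inputs until the state stops changing."""
    s = list(state)
    for _ in range(sweeps):
        changed = False
        for i in range(len(s)):
            activation = sum(weights[i][j] * s[j] for j in range(len(s)))
            new = 1 if activation >= 0 else -1
            if new != s[i]:
                s[i], changed = new, True
        if not changed:
            break
    return s

STORED = [1, -1, 1, -1, 1, -1]
W = train_hopfield([STORED])
noisy = [1, -1, -1, -1, 1, -1]      # third element flipped
restored = recall(W, noisy)
```

Starting from the corrupted pattern, the feedback loop pulls the state back to the stored one, which is the sense in which such networks act as a pattern memory.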

Laterally connected neural networks, such as the Kohonen feature map, were introduced by Prof. Teuvo Kohonen of the Helsinki University of Technology (Finland) in 1982. Because this neural network consists of feed-forward inputs and a lateral layer or feature map where neurons organize themselves according to specific input values, it is considered both feed-forward and feedback.
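A single training step of a feature map might be sketched as below. This is a simplified one-dimensional map with a fixed learning rate and a fixed neighborhood radius, both of which are assumptions; practical implementations usually shrink both over time. The function names and starting weights are hypothetical.

```python
def best_matching_unit(weights, x):
    """Index of the map neuron whose weights lie closest to the input."""
    return min(range(len(weights)),
               key=lambda i: sum((w - v) ** 2 for w, v in zip(weights[i], x)))

def som_step(weights, x, rate=0.5, radius=1):
    """Move the winning neuron and its lateral neighbours toward the input."""
    winner = best_matching_unit(weights, x)
    for i, w in enumerate(weights):
        if abs(i - winner) <= radius:   # lateral neighbourhood on the map
            weights[i] = [wk + rate * (xk - wk) for wk, xk in zip(w, x)]
    return winner

# Four map neurons in a row, each with a two-element weight list.
feature_map = [[0.0, 0.0], [0.5, 0.5], [1.0, 1.0], [1.5, 1.5]]
winner = som_step(feature_map, [0.9, 0.9])
```

Neurons outside the neighborhood are left untouched, so repeated steps with varied inputs cause adjacent neurons to specialize in similar input values.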

A. W.

FIGURE A. Neural networks are based on biological models of neurons. Such neurons consist of a cell body, dendrites, and an axon that branches from the soma. To allow the transmission of electrical signals between neurons, each neuron's axon is connected via synapses to the dendrites of other neurons. When the cellular potential in the cell body exceeds a certain threshold value, an electrical signal is sent along the axon to connected neurons.

FIGURE B. In 1943, Warren McCulloch and Walter Pitts at the University of Chicago (IL) described an artificial neuron known as the threshold logic unit (TLU). In their model, incoming information appears at the neuron's inputs or synapses, where each signal is multiplied by a weight that represents the strength of the synapse. To model the effects of the cell body, weighted signals are then summed to produce an overall unit activation. If this activation exceeds a certain threshold, the neuron produces an output response.

FIGURE C. At the Massachusetts Institute of Technology, Marvin Minsky and Seymour Papert extended the concept of the Perceptron in a neural network that consists of an input layer, where data are fed into the network, one or more hidden layers, representing the internal neurons in the brain, and an output layer that produces the result of the computation.

FIGURE D. In thermodynamically based recurrent neural networks, such as the fully connected John Hopfield model, the inputs to a neuron are derived both from the inputs themselves and from feedback from the network's previous outputs.