Compression standard uses wavelets

The new international JPEG 2000 standard for still-image reduction coding opts for a discrete wavelet transform methodology.

By Andrew Wilson, Editor

Last July, the ISO Joint Photographic Experts Group (JPEG) convened in Arles, France, to resume its work on JPEG 2000, the emerging standard for image compression. Publication of the final draft of the international standard was scheduled for last month, and final release for publication as an international standard is anticipated in December 2000. Work on Part 3 of the standard, known as motion JPEG 2000, reached a working-draft standard and is planned to become an international standard in November 2001.

Created in the late 1980s, JPEG image compression originally defined both lossless and lossy coding techniques. In lossless or L-JPEG mode, image compression is based on a predictive scheme in which each pixel is predicted from its neighbors and the resulting prediction error is entropy coded with Huffman coding. In lossy mode, images are divided into 8 x 8 blocks and transformed by a discrete cosine transform (DCT). Transformed blocks are quantized with a uniform scalar quantizer, zigzag scanned, and entropy coded with Huffman coding. The quantization step size for each of the 64 DCT coefficients is held in a quantization table, which remains the same for all blocks. The DC coefficients of all blocks are coded separately using a predictive scheme.
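The lossy path described above can be sketched in a few lines of Python. This is a minimal illustration, not a conforming JPEG encoder: the function names are hypothetical, the flat quantization table is an assumption made for readability (real tables use a different step size for each coefficient), and the level shift, DC prediction, and Huffman stage are omitted.

```python
import math

N = 8  # JPEG block size

def dct_2d(block):
    """Naive 2-D DCT-II of an 8 x 8 block (list of 8 rows of 8 numbers)."""
    def scale(k):
        return math.sqrt(1.0 / N) if k == 0 else math.sqrt(2.0 / N)
    out = [[0.0] * N for _ in range(N)]
    for u in range(N):
        for v in range(N):
            total = 0.0
            for x in range(N):
                for y in range(N):
                    total += (block[x][y]
                              * math.cos((2 * x + 1) * u * math.pi / (2 * N))
                              * math.cos((2 * y + 1) * v * math.pi / (2 * N)))
            out[u][v] = scale(u) * scale(v) * total
    return out

def quantize(coeffs, table):
    """Uniform scalar quantization: divide by the step size and round."""
    return [[int(round(coeffs[u][v] / table[u][v])) for v in range(N)]
            for u in range(N)]

def zigzag_order():
    """Return the 64 (row, col) positions in zigzag scan order."""
    order = []
    for s in range(2 * N - 1):
        diag = [(i, s - i) for i in range(N) if 0 <= s - i < N]
        if s % 2 == 0:
            diag.reverse()  # even diagonals run from bottom-left to top-right
        order.extend(diag)
    return order

def encode_block(block, table):
    """DCT, quantize, and zigzag-scan one block; entropy coding is omitted."""
    q = quantize(dct_2d(block), table)
    return [q[r][c] for r, c in zigzag_order()]

if __name__ == "__main__":
    flat_block = [[100] * N for _ in range(N)]   # hypothetical uniform gray block
    flat_table = [[16] * N for _ in range(N)]    # hypothetical flat step size
    print(encode_block(flat_block, flat_table)[:4])
```

For a uniform block, all of the energy lands in the DC coefficient and the 63 AC coefficients quantize to zero, which is why the zigzag scan tends to produce long runs of zeros that the entropy coder can exploit.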

Other modes defined in JPEG provide variants of the previous two basic modes, such as progressive bitstreams and arithmetic entropy coding. One mode—progressive JPEG—sends quantized data in sequence by a mixture of spectral selections and successive approximations.
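The two ideas behind progressive JPEG can be illustrated with a simplified sketch. Spectral selection sends bands of the zigzag-ordered coefficients in separate scans; successive approximation sends the most significant bits first and refines them later. The helper names, the band boundaries, and the separate handling of signs are assumptions made for clarity and do not follow the exact scan syntax of the standard.

```python
def spectral_selection(coeffs, bands):
    """Split zigzag-ordered coefficients into scans, one per (start, end) band."""
    return [coeffs[start:end + 1] for start, end in bands]

def split_bit_planes(coeffs, low_bits):
    """Successive approximation: a coarse pass plus one refinement pass per bit."""
    signs = [-1 if c < 0 else 1 for c in coeffs]
    coarse = [abs(c) >> low_bits for c in coeffs]
    refinements = [[(abs(c) >> k) & 1 for c in coeffs]
                   for k in range(low_bits - 1, -1, -1)]
    return signs, coarse, refinements

def merge_bit_planes(signs, coarse, refinements):
    """Rebuild coefficients from the coarse pass and any refinements received."""
    mags = list(coarse)
    for plane in refinements:
        mags = [(m << 1) | b for m, b in zip(mags, plane)]
    return [s * m for s, m in zip(signs, mags)]

if __name__ == "__main__":
    coeffs = [50, -13, 7, 0, -3, 1, 0, 0]          # hypothetical quantized values
    print(spectral_selection(coeffs, [(0, 0), (1, 3), (4, 7)]))
    signs, coarse, planes = split_bit_planes(coeffs, low_bits=2)
    print(merge_bit_planes(signs, coarse, planes[:1]))  # after one refinement
    print(merge_bit_planes(signs, coarse, planes))      # after all refinements
```

A decoder that has received only some of the refinement passes holds the coefficients at reduced precision, which is what produces the gradual sharpening seen when a progressive image loads.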

"The original JPEG standard featured options many of which were mutually exclusive, and some of which required costly licensing," says Richard Clark, managing director of Elysium Ltd. (Crowborough, East Sussex, England). "But as requirements constantly changed, the standard developed as a shopping list of compression components rather than as an integrated whole," he adds. The JPEG group countered these problems by extending the standard, providing for so-called pyramidal coding, improving compression options via variable quantization, and offering a new lossless standard, JPEG-LS. "Despite this," notes Clark, "the marketplace reacted with stoical indifference, and there was little option than to take a radical step forward."

Because fingerprints contain tiny details that are used in legal and law-enforcement cases, the removal of these details and the resulting blocking artifacts of the JPEG algorithm are unacceptable. Consequently, the FBI and NIST standardized on a wavelet scalar quantization (WSQ) gray-scale fingerprint image-compression algorithm. This lossy compression method is well suited to preserving the details of gray-scale images while maintaining compression ratios of 12:1 to 15:1.

Slated as the next ISO/ITU-T standard for still-image coding, the baseline JPEG 2000 algorithm performs a dyadic wavelet decomposition using a floating-point or an integer wavelet filterbank depending on whether lossy or lossless compression is desired (see figure above). The output, organized into blocks, then creates a bitstream containing a number of independent layers that can be given different priorities during transmission to achieve differing image build-up features. Because the output coefficients are quantized, the process can be controlled to provide differing compression rates and, therefore, differing output file sizes. Finally, the delivered bitstream is coded. To decode JPEG 2000 images, the stages are simply reversed.
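To make the integer (lossless) path concrete, the sketch below applies a dyadic decomposition to a one-dimensional signal using 5/3 lifting steps with mirrored borders. The article does not name the filter, so the choice of the 5/3 lifting filter is an assumption, as are the function names; a real codec works on two-dimensional tiles and handles arbitrary lengths, which this sketch does not.

```python
def forward_53(x):
    """One level of reversible 5/3 lifting on an even-length list of ints."""
    even, odd = x[0::2], x[1::2]
    half = len(even)
    detail = []
    for i in range(half):
        right = even[i + 1] if i + 1 < half else even[i]  # mirror at the border
        detail.append(odd[i] - ((even[i] + right) >> 1))  # predict step
    approx = []
    for i in range(half):
        left = detail[i - 1] if i > 0 else detail[0]      # mirror at the border
        approx.append(even[i] + ((left + detail[i] + 2) >> 2))  # update step
    return approx, detail

def inverse_53(approx, detail):
    """Undo forward_53 exactly by running the lifting steps in reverse."""
    half = len(approx)
    even = []
    for i in range(half):
        left = detail[i - 1] if i > 0 else detail[0]
        even.append(approx[i] - ((left + detail[i] + 2) >> 2))
    x = []
    for i in range(half):
        right = even[i + 1] if i + 1 < half else even[i]
        x.append(even[i])
        x.append(detail[i] + ((even[i] + right) >> 1))
    return x

def decompose(x, levels):
    """Dyadic decomposition: keep splitting the low-pass band."""
    if len(x) % (2 ** levels) != 0:
        raise ValueError("length must be divisible by 2**levels in this sketch")
    approx, details = list(x), []
    for _ in range(levels):
        approx, d = forward_53(approx)
        details.append(d)
    return approx, details

def reconstruct(approx, details):
    """Rebuild the signal from the coarsest band and all detail bands."""
    x = list(approx)
    for d in reversed(details):
        x = inverse_53(x, d)
    return x

if __name__ == "__main__":
    signal = [10, 12, 14, 200, 202, 13, 11, 9]  # hypothetical sample row
    approx, details = decompose(signal, 2)
    print(approx, details)
    print(reconstruct(approx, details) == signal)
```

Because every lifting step adds or subtracts an integer that the decoder can recompute, the transform is exactly invertible, which is what makes lossless coding possible. The lossy path instead uses floating-point filters followed by quantization.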

In operation, the JPEG 2000 transform performs a wavelet transform and creates a bitstream of several independent layers that can be given different priorities during transmission to achieve differing image build-up features. Because output coefficients are quantized, the process can be controlled to provide differing compression rates and, therefore, differing output file sizes. Last, the delivered bitstream is entropy coded.

Estimates of the increase in complexity introduced by JPEG 2000 vary. Tests based on software implementations indicate a factor of about four to six, while other work suggests a factor of two to four for hardware implementations; this is better by an order of magnitude.

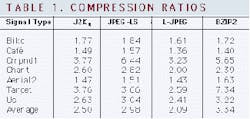

IMAGE TESTING

To test the effectiveness of the JPEG 2000 standard, professor Touradj Ebrahimi of the Signal Processing Laboratory at the Swiss Federal Institute of Technology (EPFL; Lausanne, Switzerland) and his doctoral students Diego Santa Cruz and Raphael Grosbois evaluated the standard against three other image-compression standards. These standards included Version 1.0 of the Lossless JPEG (L-JPEG) Codec from Cornell University (Ithaca, NY), Version 2.2 of the SPMG JPEG-LS implementation from the University of British Columbia (Vancouver, BC, Canada), and the freely available UNIX compressor BZIP2 (see sources.redhat.com/bzip2/). All the results were generated by a 550-MHz Pentium III PC with 512 kbytes of cache and 512 Mbytes of RAM running under Linux 2.2.12.

At the Sept. 2000 European Signal Processing Conference in Tampere, Finland, EPFL researchers demonstrated that for lossless compression, JPEG-LS provides the best compression ratio for almost all images, and JPEG 2000 achieves competitive results (see Table 1). One exception is the "cmpnd1" image, in which JPEG-LS achieves a much larger compression.

"Because this image contains a lot of black text on a white background," says Ebrahimi, "its statistics are best captured by JPEG-LS. With L-JPEG, compression performance is not very good and shows a lower adaptability to different image types." A similar but more extensive test with the same conclusions has been carried out by the EPFL team in coopertion with Joel Askelof, Mathias Larsson, and Charilaos Christopoulos of Ericsson Research (Stockholm, Sweden) and was reported at the recent SPIE 45th Annual Meeting in San Jose, CA (July/Aug. 2000).

Surprisingly, the freely available BZIP2 achieves impressive results, given that it does not take into account that the data represent images. This is especially true for synthetic images, such as "target," which contain mostly patches of constant gray levels as well as gradients. On average, BZIP2 appears to perform best, but this is due to the very large compression ratio it achieves on the "target" image, says Ebrahimi. Generally, JPEG-LS provides the best compression ratios.
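The effect is easy to reproduce in spirit with the bzip2 support in the Python standard library. The image below is a hypothetical stand-in made of constant gray patches and a gradient; it is not the "target" test image, so the ratio it yields should not be compared with the figures in Table 1.

```python
import bz2

WIDTH = HEIGHT = 256  # hypothetical 8-bit gray-scale image size

def synthetic_image():
    """Build raw pixel bytes: constant patches on top, a gradient below."""
    rows = []
    for y in range(HEIGHT):
        if y < HEIGHT // 2:
            # four constant-gray patches, each 64 pixels wide
            row = bytes(64 * (x // 64) for x in range(WIDTH))
        else:
            # horizontal gradient from 0 to 255
            row = bytes(range(WIDTH))
        rows.append(row)
    return b"".join(rows)

def compression_ratio(raw):
    """Ratio of original size to bzip2-compressed size."""
    return len(raw) / len(bz2.compress(raw))

if __name__ == "__main__":
    raw = synthetic_image()
    print(f"{len(raw)} bytes, ratio {compression_ratio(raw):.1f}:1")
```

A general-purpose compressor sees only a byte stream, yet the long repeated runs in such synthetic content are exactly the kind of redundancy it handles well.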

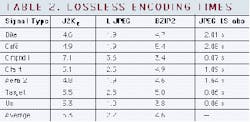

Table 2 shows the lossless encoding time relative to JPEG-LS for compression. "This [information] shows that JPEG-LS, in addition to providing the best compression ratios, is the fastest algorithm, and therefore presumably the least complex," Ebrahimi says. While JPEG 2000 is considerably more complex, L-JPEG performance lies somewhere between the two standards but does not provide any compression efficiency advantage.

For lossy compression, the JPEG 2000 algorithm provides the best results among existing standards while offering several useful features in multimedia and mobile applications, such as error resiliency, flexible scalability, and region of interest coding, among others.

AVAILABLE PACKAGES

To ensure proper verification testing and promote JPEG 2000 as a still image-compression standard, EPFL (Lausanne, Switzerland), Ericsson (Stockholm, Sweden), and Canon Research Center France (CRF; Cesson-Sevigne, France) have developed a Java implementation of JPEG 2000 known as JJ2000 that provides cross-platform interoperability. According to the partners, the JJ2000 software promotes JPEG 2000 by providing the first publicly available implementation of the JPEG 2000 standard. The selection of Java as the development language for JJ2000 was based on the language's platform independence and portability.

This cross-platform support is expected to speed testing and accelerate adoption of JPEG 2000 as a still image-compression standard. The Java implementation also eases JPEG 2000 integration in browsers and its use in Internet applications, which are key to a successful standard. In addition, faster, easier, and more efficient integration with various graphic user interfaces in Java can be achieved for demonstrations and prototyping.

"This software implements completely the JPEG 2000 standard and has gone through extensive testing to ensure its compliance with the standard. It is now publicly available on the Web at jj2000. epfl.ch and can be downloaded freely," says Ebrahimi, the initiator of the idea of JJ2000 and its technical manager.

Rather than choose Java, Michael D. Adams, a doctoral candidate with the Signal Processing and Multimedia Group of the University of British Columbia (Vancouver, BC, Canada), chose to write a software-based implementation of the JPEG 2000 standard in C-language called JasPer. "C-language was chosen due to the availability of C-based development environments for most of today's computing platforms," says Adams.

In the JasPer implementation, there are two executable programs, an encoder and a decoder. According to Adams, JasPer software can handle image data in a number of formats including PGM, PPM, and Sun Rasterfile. Although the software is freely available for academic, demonstration, and research purposes from www.ece.ubc.ca/~mdadams/jasper/#software, it cannot be sold for profit without first obtaining a commercial usage license from Image Power (Vancouver, BC, Canada).

Although the software is intended to support all of the functionality described in Part 1 of the JPEG 2000 standard, more work is still required to achieve this goal. "In the current version of the software, there are still a few missing and incomplete functions," admits Adams.

Company Information

Canon Research Centre France

35517 Cesson-Sevigne, France

Web: www.crf.canon.fr

CMAP

91128 Palaiseau, France

Web: www.cmap.polytechnique.fr/~mallat/

Cornell University

Ithaca, NY 14853

Web: www.cornell.edu/

Elysium Ltd.

Crowborough, East Sussex

TN6 1lB, England

Web: www.elysium.ltd.uk

Image Power

Vancouver, BC, Canada V6C 3C9

Web: www.imagepower.com

JJ2000 JAVA Implementation

Web: jj2000.epfl.ch or jpeg2000.epfl.ch

JPEG and JBIG Committees

Web: www.jpeg.org/

National Institute of Standards and Technology (NIST)

Gaithersburg, MD 20899

Web: www.nist.gov

Princeton University

Princeton, NJ 08544

Web: www.princeton.edu/~icd/

Ricoh Silicon Valley

Menlo Park, CA 94025

Web: www.crc.ricoh.com/CREW/CREW.html

Swiss Federal Institute of Technology (EPFL)

CH-1015 Lausanne, Switzerland

Web: www.epfl.ch

Telefonaktiebolaget LM Ericsson

SE-126 25 Stockholm, Sweden

Web: www.ericsson.com

University of British Columbia

Vancouver, BC, Canada V6T 1Z4

Web: www.ece.ubc.ca/