Snapshots

Software extracts image content

A team of engineers, computer scientists, and physicists at Lawrence Livermore National Laboratory (LLNL; Livermore, CA, USA; www.llnl.gov) has developed an extraction system, the Image Content Engine (ICE), that allows analysts to search volumes of data in a timely manner by guiding them to areas that likely contain the objects for which they are searching. The ICE can accommodate images acquired with different types of sensors and at varying resolutions. The software can account for the fact that images are taken at different times, during different seasons, and under changing weather conditions. “Finding specific objects, such as particular types of buildings, in overhead images that cover hundreds of square kilometers, is a difficult task,” says David Paglieroni, technical lead and coprincipal investigator of ICE.

The ICE architecture runs on a range of operating systems, such as Windows or Linux, and on hardware ranging from laptops to powerful computer clusters, with isolated or networked processors. The ICE software contains a library of algorithms, each of which focuses on a specific task. The algorithms can be chained together in pipelines configured through a graphical user interface. Each pipeline is designed to perform a specific set of tasks for extracting specific image content.
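The idea of chaining single-purpose algorithms into a pipeline can be sketched in a few lines of Python. This is only an illustration of the general pattern, not the ICE API: the stage names (`threshold`, `invert`), the `run_pipeline` helper, and the list-of-lists image representation are all assumptions made for the example.

```python
from typing import Callable, List, Sequence

Image = List[List[int]]            # grayscale image as rows of pixel values
Stage = Callable[[Image], Image]   # hypothetical signature for one algorithm


def threshold(level: int) -> Stage:
    """Build a stage that maps each pixel to 1 if it is >= level, else 0."""
    def stage(image: Image) -> Image:
        return [[1 if p >= level else 0 for p in row] for row in image]
    return stage


def invert(image: Image) -> Image:
    """Flip a binary image so that 0 becomes 1 and 1 becomes 0."""
    return [[1 - p for p in row] for row in image]


def run_pipeline(stages: Sequence[Stage], image: Image) -> Image:
    """Feed the output of each stage into the next, in order."""
    for stage in stages:
        image = stage(image)
    return image


# A two-stage pipeline: binarize, then invert.
pipeline = [threshold(128), invert]
result = run_pipeline(pipeline, [[0, 200], [130, 50]])
print(result)  # [[1, 0], [0, 1]]
```

Because every stage shares one signature, reordering or swapping stages only means editing the list, which is roughly what a graphical pipeline editor would do behind the scenes.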

The ICE also provides tools for extracting regions, extended curves, and polygons from images. The region-extraction tool breaks images into small, adjacent square tiles containing one or more pixels. For each tile, the algorithm searches for spectral or textural characteristics and then groups tiles with similar features into regions. This tool is useful for separating distinct areas such as clusters of buildings from an image background.
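The tile-then-group approach described above can be illustrated with a small Python sketch. It is a simplified stand-in, not the ICE algorithm: it assumes mean intensity as the only tile feature, image dimensions divisible by the tile size, and a hypothetical `tolerance` parameter for deciding when two neighboring tiles are similar.

```python
from typing import List

Image = List[List[int]]


def tile_means(image: Image, tile: int) -> List[List[float]]:
    """Mean intensity of each tile x tile square (dimensions assumed divisible by tile)."""
    rows, cols = len(image) // tile, len(image[0]) // tile
    means = []
    for r in range(rows):
        row = []
        for c in range(cols):
            pixels = [image[r * tile + i][c * tile + j]
                      for i in range(tile) for j in range(tile)]
            row.append(sum(pixels) / len(pixels))
        means.append(row)
    return means


def group_tiles(means: List[List[float]], tolerance: float) -> List[List[int]]:
    """Flood-fill 4-connected tiles whose means differ by at most tolerance."""
    rows, cols = len(means), len(means[0])
    labels = [[-1] * cols for _ in range(rows)]
    next_label = 0
    for r in range(rows):
        for c in range(cols):
            if labels[r][c] != -1:
                continue  # tile already belongs to a region
            labels[r][c] = next_label
            stack = [(r, c)]
            while stack:
                y, x = stack.pop()
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and labels[ny][nx] == -1
                            and abs(means[ny][nx] - means[y][x]) <= tolerance):
                        labels[ny][nx] = next_label
                        stack.append((ny, nx))
            next_label += 1
    return labels


# A bright 2x2 patch (top right) on a dark background.
image = [
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [10, 10, 10, 10],
    [10, 10, 10, 10],
]
labels = group_tiles(tile_means(image, 2), tolerance=20)
print(labels)  # [[0, 1], [0, 0]]
```

In this toy case the bright patch ends up in its own region (label 1), separated from the background (label 0). A real tool would likely use richer spectral or textural features per tile in place of the mean.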

Vision assists dementia sufferers with personal tasks

Older adults living with cognitive disabilities such as Alzheimer’s disease or other forms of dementia have difficulty completing activities of daily living (ADLs). They forget the proper sequence of tasks that need to be completed, or they lose track of the steps that they have already completed. The current solution is to have a human caregiver assist the patient at all times. Dependence on a caregiver is difficult for the patient and can lead to anger and helplessness, particularly for private ADLs such as using the bathroom.

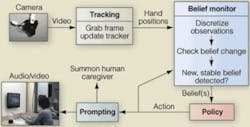

A real-time system has been devised by Jesse Hoey and colleagues at the University of Dundee (Dundee, UK; www.computing.dundee.ac.uk) to assist these people when washing their hands. Assistance is given in the form of verbal and/or visual prompts, or by enlisting a human caregiver’s help. The system uses only video inputs and combines a Bayesian sequential estimation framework for tracking the hands and towel with a decision-theoretic framework for computing policies of action, specifically a partially observable Markov decision process (POMDP). A key element of the system is the ability to estimate and adapt to user states, such as awareness, responsiveness, and overall dementia level.

In operation, video is captured by an overhead Dragonfly II 1394 camera from Point Grey Research (Vancouver, BC, Canada; www.ptgrey.com) and fed to a hand-and-towel tracker. The tracker reports the positions of the hands and towel to a belief monitor, which estimates where the user currently is in the task, that is, which steps they have completed so far, along with their mental state. The belief about the user’s state is then passed to the policy site, which maps belief states into audio-visual prompts or calls for human assistance.
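The belief-monitor and policy stages can be pictured with a small discrete Bayes filter in Python. This is a sketch under stated assumptions rather than the Dundee system: the task steps in `STEPS`, the transition probability `p_advance`, the observation likelihoods, and the threshold rule in `choose_action` are all hypothetical, and the threshold rule merely stands in for a policy that a POMDP solver would compute.

```python
from typing import List

STEPS = ["wet", "soap", "rinse", "dry"]  # hypothetical hand-washing steps


def predict(belief: List[float], p_advance: float = 0.3) -> List[float]:
    """Time update: the user stays on a step or advances to the next one."""
    n = len(belief)
    new = [0.0] * n
    for i, b in enumerate(belief):
        if i + 1 < n:
            new[i] += b * (1 - p_advance)
            new[i + 1] += b * p_advance
        else:
            new[i] += b  # the final step is absorbing
    return new


def correct(belief: List[float], likelihood: List[float]) -> List[float]:
    """Measurement update: weight each step by P(observation | step), then normalize."""
    unnorm = [b * l for b, l in zip(belief, likelihood)]
    total = sum(unnorm)
    if total == 0:
        return belief  # observation impossible under the model; keep the prior
    return [u / total for u in unnorm]


def choose_action(belief: List[float], confident: float = 0.6) -> str:
    """Assumed rule: prompt for the most likely step, or call for help if unsure."""
    best = max(range(len(belief)), key=belief.__getitem__)
    if belief[best] < confident:
        return "call_caregiver"
    return "prompt:" + STEPS[best]


# The user starts at the first step; the tracker then reports hands near the soap.
belief = [1.0, 0.0, 0.0, 0.0]
soap_likelihood = [0.1, 0.8, 0.1, 0.1]  # assumed P(hands near soap | step)
belief = correct(predict(belief), soap_likelihood)
action = choose_action(belief)
print(action)  # prompt:soap
```

Here the belief is only over task steps. The system described above also tracks user attributes such as awareness and responsiveness, which would enlarge the state space but leave the predict-and-correct structure unchanged.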