FOURIER DESCRIPTORS ALLOW web-inspection system TO CLASSIFY PLASTIC SHAPES

By Oliver Sidla

In Europe, countries such as Germany and Austria are adapting industrial machine-vision and image-processing systems to protect and sustain their natural resources and environments. In these countries, businesses and households sort different types of garbage into separate waste containers. Although much of the garbage sorting and recycling is done by machines, some industries, such as the plastics industry, still employ workers to grade and sort incoming waste products for recycling. The material sensors in use can distinguish between various types of plastic base materials, but they work well only on clean and presorted garbage. In practice, however, garbage contains a mixture of items. Typically, businesses and households inadvertently mix empty soft-drink bottles, composite-plastic milk cartons, cleaning-material containers, dirty cloths, and even metal parts.

Recently, a contract was awarded to a leading worldwide builder of waste-processing and recycling plants--Binder+CO AG (Gleisdorf, Austria)--to construct a new plastic-waste-recycling plant that would incorporate the latest machine-vision and image-processing technologies into a web-inspection system. The plant requirements were to process plastic waste more accurately, faster, and with minimum human help, thereby saving costs. The image-processing system requirements were subcontracted to Joanneum Research, Institute for Digital Image Processing Research (Graz, Austria).

The plant plans called for a garbage-processing system that could image and recognize common plastic materials, such as bottles and milk cartons, out of a stream of moving raw plastic garbage. The vision concepts were based on imaging and recognizing the specific contours of known plastic products, ranging from popular rectangular shapes to curved bottles.

The raw garbage stream would be initially prefiltered by metal detectors and sieves, then fed onto a moving conveyor belt. The machine-vision system called for an overhead camera with lighting and electronics support that would scan the moving plastic waste. The computer-based image-processing system would detect, recognize, and classify the plastic waste. In addition, the image-analysis system would energize an ejection-type mechanism that would toss the salvageable plastic products into a collection bin.

System overview

At the start of the waste-plant system design, several image-processing system platforms were developed, evaluated, and eventually discarded because they could not meet all of the divergent vision requirements. After several system studies, LabVIEW graphical instrumentation software, IMAQ Vision 4.0 machine-vision and image-processing software modules, and PC-TIO-10 digital input/output hardware and solid-state relay I/O modules from National Instruments (Austin, TX) were found to meet the system requirements using a standard personal computer (PC). The system design team had previous experience with LabVIEW G-source code, which eased the integration tasks.

During initial system performance-evaluation tests, the IMAQ software worked successfully with off-the-shelf computer hardware and Microsoft Corp. (Redmond, WA) Visual C++ 5.0 software for interrupt handling running under the Windows NT 4.0 operating system. This system design delivered the necessary pixel throughput for the processing rate demanded by the fast speed of the conveyor belt.

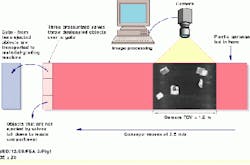

During waste plant operation, plastic-product garbage is fed onto a moving conveyor belt (see Fig. 1). The garbage is moved at a speed of 2.5 m/s by the conveyor belt under an overhead CCD camera (see Fig. 2). Fluorescent lights from Philips Components (Slaterville, RI) are used to brightly illuminate the conveyor belt area under the camera.

Color capability was determined not to be important for this system. The camera design choice was therefore made for a standard commercial black-and-white video camera that operates in interlaced mode with an exposure time of 1 ms. A survey of available cameras was made, and a Sony XC75 camera from Sony Electronics Image Sensing Products Division (Montvale, NJ) was chosen because of its cost/performance ratio. The camera's infrared cutoff filter was removed to permit IR sensitivity.

Prior to image processing, the camera image is immediately reduced to half of its original size (from 768 × 576 pixels to 384 × 288 pixels) to save computer-processing time without sacrificing any object-recognition capability. For image capture, the PCVision frame grabber from Imaging Technology Inc. (Bedford, MA) was selected because it best satisfied the system design requirements. It was also picked because the system design team had prior favorable experience with this frame grabber and decided to go with a known acceptable part.

The height of the camera above the conveyor belt is set at 1.7 m. This height made it necessary to use an 8.5-mm lens from Pentax Corp. (Englewood, CO) to obtain a field of view (FOV) of approximately 1.2 m. Based on this FOV and the predefined speed of the conveyor belt, the image-processing system had to process a minimum of three images per second to accurately display the moving conveyor belt. This frame rate assures that the conveyor belt is being continuously monitored by the camera and that about a 50% overlap is obtained between images.

The relatively small image size allowed the use of a standard Pentium II-based, 233-MHz computer with 64 Mbytes of RAM from Dewetron (Graz, Austria). Depending on the number of plastic objects on the conveyor belt, this computer system can process and evaluate up to six images per second. It can segment the plastic objects from the belt background and evaluate their different shapes. Each object is image-inspected and a processing decision is made on whether to save it as a recyclable object or to ignore it as a nonusable waste object.

If a plastic object is determined to be recyclable, the computer activates one of three pressurized-air valves located at the exit end of the conveyor belt, after a delay of about 2 s that accounts for conveyor-belt travel time. Upon triggering, the actuator-type valves from Airtec (Kronberg, Germany) emit a pulse of pressurized air that ejects the recyclable products; as they pass over the valves, these products are tossed over a short open gap into a collecting bin. Objects determined not to be recyclable remain untouched and drop off the conveyor belt into a collecting bin.

The valve timing and control signals are commanded by the system computer. The PC uses information obtained from a rotary encoder that measures the conveyor belt speed. This information is used in turn to program the PC-TIO-10 input/output boards and relay modules to generate delayed pulses that activate the pressurized-air valves.

The image-processing system must detect and classify recyclable plastic products of different sizes as they move along the conveyor belt according to shape-imaging criteria. In addition, the conveyor belt accumulates dirt, dust, and liquids over time and with heavy usage. Therefore, the image-processing system must also reliably separate the plastic objects from the belt background by means of sophisticated software and hardware.

Consequently, the camera was adjusted so that it was sensitive to both visible and infrared light. This adjustment provides better contrast between the foreground (the objects) and the background (the conveyor belt) because of the light-absorption characteristics of the conveyor belt material and the plastic objects.

The software was designed for robustness against signal noise and to overcome the shape distortions in the plastic-object images caused by dirt or fluids on the conveyor belt. It provides an accurate segmentation procedure in combination with a flexible shape-classification algorithm. In this approach, LabVIEW and IMAQ software made it convenient to modify and test different image-processing alternatives quickly.

The garbage-sorting image-processing application is coded in LabVIEW software using the NI-DAQ data-acquisition driver functions and the IMAQ Vision image-processing library. The user interface is designed as a state machine that calls several submodules depending on the current state, error codes, and the output of a previously called module. The elements of the user interface were created from available LabVIEW controls and indicators, which simplified the programming functions under Windows NT.

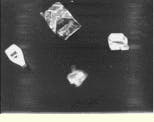

After image preprocessing and segmentation, object blobs are computed using single-pixel levels (see Fig. 3). Then, to classify whether a blob coincides with a predesignated material, a heuristic algorithm is applied. This algorithm sorts abstract binary objects based on the use of the IMAQ Complex Particle tool and the system design team's programming experience. It makes the following decisions:

If the shape is rectangular with smooth borders, it is classified as positive.

Circular objects are classified as positive.

An object is accepted if its shape is similar to one of the reference shapes stored in the database.

An object is rejected if its length-to-width ratio exceeds a given threshold.

An object is rejected if its area exceeds an established threshold.
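The five rules above can be sketched as a small decision function. This is only an illustration: the article gives no numeric thresholds, does not say in which order the rules are evaluated, and does not name the features used to judge "rectangular" or "circular". The feature names, the threshold values, and the choice to test the rejection rules first are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Blob:
    """Hypothetical per-blob measurements (names are not from the article)."""
    area: float            # blob area in pixels
    length: float          # long side of the bounding rectangle
    width: float           # short side of the bounding rectangle
    rectangularity: float  # blob area / bounding-rectangle area, 0..1
    circularity: float     # 4*pi*area / perimeter**2, 1.0 for a disc
    shape_distance: float  # distance to the nearest reference shape


# Assumed thresholds; the real system lets the operator tune such values.
MAX_AREA = 20000.0
MAX_ASPECT = 4.0
MIN_RECTANGULARITY = 0.9
MIN_CIRCULARITY = 0.85
MAX_SHAPE_DISTANCE = 0.05


def classify(blob: Blob) -> bool:
    """Return True if the blob should be ejected as recyclable."""
    # Rejection rules are checked first (an assumed ordering).
    if blob.width <= 0 or blob.area > MAX_AREA:
        return False
    if blob.length / blob.width > MAX_ASPECT:
        return False
    # Any single acceptance rule is sufficient.
    if blob.rectangularity >= MIN_RECTANGULARITY:
        return True
    if blob.circularity >= MIN_CIRCULARITY:
        return True
    return blob.shape_distance < MAX_SHAPE_DISTANCE
```

Keeping the thresholds as named constants mirrors the article's point that the operator can adjust the recognition parameters without touching the decision logic.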

To compare the actual object contour with the reference shapes stored in the database, a shape-description method has been implemented. This method is designed to be completely independent of object position, scaling, and rotation and also to be robust against small shape distortions. These capabilities are needed because the plastic bottles and miscellaneous fluid containers are usually bent and twisted during their temporary storage in local garbage dumps.

The shape-description method uses the LabVIEW analysis and array manipulation functions and relies on elliptic Fourier descriptors (see "Fourier descriptors speed garbage sorting," p. 26). In this method, the contour of each object is resampled to an array of 128 (x, y) coordinate pairs. All of the points are fed into a complex Fourier transform that yields complex spectral components. Removing the phase and normalizing the magnitude of all the spectral components results in a scale- and rotation-invariant description of the object's contour.
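The resampling step can be illustrated with a short routine. The article does not describe how the 128 points are chosen beyond saying they are equally spaced, so the sketch below assumes linear interpolation along the perimeter of a closed polygonal contour; the function name `resample_contour` is hypothetical and the original system used LabVIEW array functions rather than Python.

```python
import math


def resample_contour(points, n=128):
    """Resample a closed contour to n points equally spaced along its perimeter."""
    # Cumulative arc length at each vertex, closing the loop back to the start.
    closed = list(points) + [points[0]]
    cumulative = [0.0]
    for (x0, y0), (x1, y1) in zip(closed, closed[1:]):
        cumulative.append(cumulative[-1] + math.hypot(x1 - x0, y1 - y0))
    perimeter = cumulative[-1]
    if perimeter == 0.0:
        raise ValueError("contour has zero length")

    resampled = []
    seg = 0
    for i in range(n):
        target = perimeter * i / n
        # Advance to the segment that contains the target arc length.
        while cumulative[seg + 1] < target:
            seg += 1
        seg_len = cumulative[seg + 1] - cumulative[seg]
        t = 0.0 if seg_len == 0.0 else (target - cumulative[seg]) / seg_len
        (x0, y0), (x1, y1) = closed[seg], closed[seg + 1]
        resampled.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return resampled
```

A fixed point count that is a power of two gives every object a descriptor of the same length, whatever the size of its blob in the image.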

The normalized spectral components are stored in the reference shape database using 17 floating-point numbers per shape. Newly acquired object contours are classified by a nearest-neighbor algorithm: the sum of squared differences between the spectral components of the reference object and those of the actual object is taken as a measure of the similarity of the two shapes. If the sum is less than a predefined (but user-controllable) threshold, the new object is classified as positive; otherwise, it is rejected.

Object movements

After a plastic object has been recognized as recyclable, it is separated from the other waste products by ejecting it into a container bin. At this stage, the NI-DAQ driver software and the capabilities of the PC-TIO-10 hardware were incorporated. The basic control approach is to blow pressurized air from actuator valves at the selected plastic objects just as the objects reach the end of their travel on the conveyor belt. The impulse of pressurized air propels the objects upward and outward into a trajectory that carries the objects into a nearby collecting bin for further sorting and classification.

Because the conveyor belt moves at 2.5 m/s and the average plastic object size is on the order of 20 cm, a precision valve activation time of 5 ms is required. Initially, this value was not easily accomplished within the Windows NT software environment. The horizontal distance between the camera and the valves is about 5 m. At any time over that distance, approximately 80 objects are being moved by the conveyor belt.
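The figures in this paragraph can be cross-checked with simple arithmetic. The sketch below uses only the numbers quoted in the article (2.5 m/s, 5 m, 5 ms, 20 cm); the function names are illustrative.

```python
BELT_SPEED_M_S = 2.5        # conveyor speed from the article
CAMERA_TO_VALVE_M = 5.0     # camera-to-valve distance from the article
TIMING_PRECISION_S = 0.005  # required valve timing precision from the article
OBJECT_LENGTH_M = 0.20      # typical object size from the article


def travel_delay_s(distance_m, speed_m_s):
    """Time an object needs to travel from the camera to the valves."""
    if speed_m_s <= 0:
        raise ValueError("belt speed must be positive")
    return distance_m / speed_m_s


def position_error_m(timing_error_s, speed_m_s):
    """Distance the belt moves during a given timing error."""
    return timing_error_s * speed_m_s


def passage_time_s(object_length_m, speed_m_s):
    """Time an object of the given length takes to pass a fixed point."""
    return object_length_m / speed_m_s


delay = travel_delay_s(CAMERA_TO_VALVE_M, BELT_SPEED_M_S)        # 2 s
error = position_error_m(TIMING_PRECISION_S, BELT_SPEED_M_S)     # 12.5 mm
passage = passage_time_s(OBJECT_LENGTH_M, BELT_SPEED_M_S)        # 80 ms
```

The 2-s travel time matches the valve delay quoted earlier. A 5-ms timing error corresponds to 12.5 mm of belt travel, a small fraction of the 80 ms during which a 20-cm object is over the valves.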

After close study, the programming of the valves and the activation of the ejection valves were accomplished by interrupt handler circuits. The NI-DAQ software supports the interrupts on the PC-TIO-10 hardware, including a software method to implement interrupt request (IRQ) handlers within a Windows Dynamic Link Library (DLL; see Fig. 4).

During conveyor-belt operation, the LabVIEW image-processing platform creates a list of objects; each entry contains the length of the valve impulse and the activation time of the valve. This list is then passed via a Windows DLL function call to the global list under the protection of a semaphore. Then, either an insertion function (if the list is empty) or the interrupt handler programs a PC-TIO-10 counter to open and close the valve via an I/O module. The falling edge of the valve activation signal triggers an IRQ within the PC-TIO-10 board that, in turn, invokes a callback function to examine the global object list and to program the next event, if available.
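The hand-off between the image-processing side and the interrupt handler can be modeled in a few lines. The sketch below is a simulation, not NI-DAQ or PC-TIO-10 code: `ValveScheduler`, `ValveEvent`, and the `program_counter` callback are hypothetical names, a `threading.Lock` stands in for the semaphore, and a single counter is assumed to serve all valves.

```python
import threading
from dataclasses import dataclass


@dataclass
class ValveEvent:
    valve: int         # index of the valve to fire
    start_s: float     # activation time of the valve
    duration_s: float  # length of the pressurized-air impulse


class ValveScheduler:
    """Simulated global event list shared by the producer and the IRQ callback."""

    def __init__(self, program_counter):
        self._events = []              # global object list, kept sorted by time
        self._lock = threading.Lock()  # stands in for the semaphore
        self._busy = False             # True while a pulse is programmed
        # program_counter must not call back into the scheduler synchronously,
        # because it runs while the lock is held.
        self._program_counter = program_counter

    def insert(self, events):
        """Called by the image-processing side after each processed frame."""
        with self._lock:
            self._events.extend(events)
            self._events.sort(key=lambda e: e.start_s)
            self._program_next_locked()

    def on_falling_edge(self):
        """Called by the simulated interrupt when a valve pulse ends."""
        with self._lock:
            self._busy = False
            self._program_next_locked()

    def _program_next_locked(self):
        # Only one pulse is programmed at a time; the next waits for the IRQ.
        if self._busy or not self._events:
            return
        self._busy = True
        self._program_counter(self._events.pop(0))
```

In this model the insertion path programs the counter only when no pulse is pending; otherwise the falling-edge callback picks up the next entry, which is the division of labor the paragraph describes.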

The plant operator has full control of the image-processing system and can enter new reference object shapes via computer screens and a graphical user interface. In addition to displays of real-time inspections, this interface allows easy and fast screen modifications of the system control parameters.

The user interface is divided into LabVIEW-based front panels, each of which includes a set of similar tasks. These panels include a real-time display (see Fig. 5), nonexpert control, system control and test (see Fig. 6), camera position and brightness calibration, expert control screen to set up the shape-recognition and ejection parameters, and object teach-in.

FIGURE 1. A PC-based vision system can sort plastic waste that is moving at 2.5 m/s on a conveyor belt. Images are acquired at a rate of 3 frames/s. An analog CCIR camera and off-the-shelf fluorescent lighting, plug-in data-acquisition board, frame grabber, and LabVIEW software are used for acquiring and processing the images. After image processing using Fourier descriptor algorithms, the data-acquisition hardware activates pressurized-air valves for ejecting the recyclable plastic trash into a collecting bin.

FIGURE 2. With a field of view of 1.2 m, the overhead-mounted analog camera scans a flow of plastic garbage waste on a moving conveyor belt. To provide homogeneous lighting, fluorescent lamps strongly illuminate both sides of a partially enclosed surveillance area. The enclosure helps to eliminate ambient lighting. The garbage is freely dumped on the conveyor belt from above and just in front of the enclosed area.

FIGURE 3. The plastic-waste-identification image-processing system uses LabVIEW IMAQ Vision library preprocessing, morphology, and segmentation algorithms. Initially, the system acquires and scales the image of the moving objects (a). Then, a gradient function extracts the exterior object contours, followed by a threshold function that highlights the contours (b). Next, a morphology close function fills in the interior of the objects to create blobs (c). Lastly, small particles (noise) and objects that extend beyond the borders of the image are removed.

FIGURE 4. National Instruments NI-DAQ driver software and timing input/output board (PC-TIO-10) are used to control the pressurized-air valves for ejecting the recyclable plastic garbage at the proper time. The length of the timing impulse and the valve's open duration are calculated for each piece of plastic garbage based on the object's size and location. These calculations are used to generate the timing pulses for the counter timers on the PC-TIO-10 board.

FIGURE 5. The real-time display is updated at a rate of three images per second. Panel controls include a statistic display (upper right corner), a status information line, a gauge that measures conveyor-belt speed (lower left corner), and several control buttons (lower right corner). Access to the other front panels and system controls is password-protected.

FIGURE 6. By using the system control and test front panel, an expert user can test the actuator valves and the camera-support fluorescent lighting, as well as modify various system conditions. The current settings for plastic-product shape recognition, which includes data about all the objects in the database, can be saved to disk or loaded from disk. These data enable the system user to set up plastic-product recognition parameters for different tasks.

Fourier descriptors speed garbage sorting

The problems of shape description and recognition are being actively researched for machine-vision and image-processing technologies. In these technologies, the boundary of a binary object can be regarded as a sequence of N complex numbers z(k), which are obtained by traversing its border in a clockwise direction and interpreting each coordinate pair x, y as z(k) = x(k) + i * y(k). Applying a discrete Fourier transform to z(k)--with N preferably a power of two--yields the complex coefficients a(u):

$$a(u) = \frac{1}{N} \sum_{k=0}^{N-1} z(k)\, e^{-i 2\pi u k / N}, \qquad u = 0, 1, \ldots, N-1$$

The a(u) coefficients are called elliptic Fourier descriptors. They are themselves complex numbers, each with a phase and a magnitude component. The set of all a(u) is another representation of the object contour and contains the same information as the set of initial points z(k); see figure.

From lower to higher order, the a(u) coefficients contain more and more detail of the original shape, much like the harmonics of a one-dimensional signal. If fewer than N coefficients of a(u) are used for the inverse transformation, the resulting object contour will be smoothed, depending on the number of coefficients omitted. This property is used in the garbage-sorting application for shape comparison. It is not necessary to match shapes exactly; only classes of similar contours have to be recognized. Using only a limited number of low-frequency Fourier descriptors allows unwanted details to be ignored.
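The smoothing effect of dropping high-order coefficients can be demonstrated with a direct (non-fast) transform. The sketch below assumes the usual 1/N normalization on the forward transform and treats indices u and N-u as the positive and negative versions of the same harmonic; the function names are illustrative.

```python
import cmath


def dft(z):
    """Forward discrete Fourier transform of a complex contour, scaled by 1/N."""
    n = len(z)
    return [
        sum(z[k] * cmath.exp(-2j * cmath.pi * u * k / n) for k in range(n)) / n
        for u in range(n)
    ]


def reconstruct(a, keep):
    """Inverse transform that uses only harmonics up to order `keep`."""
    n = len(a)
    # min(u, n - u) is the harmonic order of index u; higher orders are zeroed.
    kept = [a[u] if min(u, n - u) <= keep else 0j for u in range(n)]
    return [
        sum(kept[u] * cmath.exp(2j * cmath.pi * u * k / n) for u in range(n))
        for k in range(n)
    ]


# Eight boundary points of a square, traversed with increasing polar angle.
square = [complex(1, 1), complex(0, 1), complex(-1, 1), complex(-1, 0),
          complex(-1, -1), complex(0, -1), complex(1, -1), complex(1, 0)]
coefficients = dft(square)
exact = reconstruct(coefficients, keep=4)   # all harmonics: original contour
smooth = reconstruct(coefficients, keep=1)  # first harmonic only: a circle
```

For this eight-point square, keeping only the first harmonic collapses the contour to a circle of radius (1 + √2)/2, about 1.207: the corners are pulled in and the edge midpoints pushed out, which is the kind of detail suppression the shape comparison relies on.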

Descriptor position and size

However, the Fourier descriptors must be processed to make them invariant to object position and size. Depending on the starting point on the object boundary, rotation, and scale, the Fourier descriptors can look different for the same object shape.

In the garbage-sorting image-processing system, plastic object recognition is implemented by storing reference shapes in a database and comparing the shapes with the actual objects in an image. To implement this process, the coefficients a(u) are normalized so that they describe a shape independent of its position and size within an image. Translation independence is obtained by ignoring a(0). Rotation is coded in the phase of the a(u) coefficients; therefore, by using only the magnitude of each a(u), the descriptor becomes invariant to rotation. Research experiments have shown that the phase information contained in each a(u) is noisy and not suited for shape comparison; consequently, the phase information is omitted. To make the object description independent of size, the Fourier descriptors are normalized by dividing their magnitude by that of component a(1).
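The three normalization steps can be combined in one short function. This is a sketch under stated assumptions, not the LabVIEW implementation: it uses a direct transform with 1/N scaling, keeps harmonics 2 through 18 as the article's database does, and assumes the contour is traversed so that a(1) is the dominant harmonic (for a circle traversed the other way, a(1) would be zero).

```python
import cmath


def fourier_descriptors(points, first=2, last=18):
    """Return normalized magnitudes |a(u)| / |a(1)| for u = first..last."""
    n = len(points)
    z = [complex(x, y) for x, y in points]

    def a(u):
        # One coefficient of the discrete Fourier transform of the contour.
        return sum(z[k] * cmath.exp(-2j * cmath.pi * u * k / n)
                   for k in range(n)) / n

    # a(0) is never used, which removes the dependence on position.
    scale = abs(a(1))
    if scale == 0.0:
        raise ValueError("degenerate contour: |a(1)| is zero")
    # Magnitudes discard the phase (rotation and starting point);
    # dividing by |a(1)| removes the dependence on size.
    return [abs(a(u)) / scale for u in range(first, last + 1)]
```

Shifting, rotating, or scaling a contour, or starting the traversal at a different boundary point, multiplies each a(u) with u ≥ 1 by a common scale factor and a pure phase term, so the returned values are unchanged.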

Shape recognition

A reference database of object shapes to be recognized is accumulated by creating the normalized shape descriptors of those objects. A two-dimensional array of coefficients, with one row for each object consisting of a_ref(2) through a_ref(18), is stored in memory and used for later comparison.

To classify an unknown shape, its normalized Fourier descriptors are created from 128 equally spaced object boundary points. The nearest-neighbor distance between the object [a_object(u)s] and each reference [a_ref(u)s] in the database is sufficient to decide whether the shape at hand is similar to the database shape or not:

$$D = \sum_{u=2}^{18} \left[ a_{\mathrm{ref}}(u) - a_{\mathrm{object}}(u) \right]^2$$

The measured distance (D) is the sum of the squared distances in the 17-dimensional coefficient space between a reference object [a_ref] and the current object shape [a_object]. The distance is computed for each object in the database. If it is smaller than a predefined threshold for an entry in the database, then the actual shape is regarded as recognized.
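The comparison against the database reduces to a few lines. In the sketch below the database is assumed to be a dictionary that maps a shape name to its 17 normalized coefficients; the names, the sample values, and the threshold are made up for illustration.

```python
def squared_distance(ref, obj):
    """Sum of squared coefficient differences between two descriptors."""
    if len(ref) != len(obj):
        raise ValueError("descriptors must have the same length")
    return sum((r - o) ** 2 for r, o in zip(ref, obj))


def nearest_reference(descriptor, database, threshold):
    """Return (name, distance) of the closest reference shape.

    The name is None when no reference is closer than the threshold.
    """
    best_name, best_distance = None, float("inf")
    for name, ref in database.items():
        distance = squared_distance(ref, descriptor)
        if distance < best_distance:
            best_name, best_distance = name, distance
    if best_distance < threshold:
        return best_name, best_distance
    return None, best_distance


# Made-up reference descriptors with 17 coefficients each.
database = {"bottle": [0.10] * 17, "carton": [0.30] * 17}
match, d_match = nearest_reference([0.11] * 17, database, threshold=0.01)
miss, d_miss = nearest_reference([0.20] * 17, database, threshold=0.01)
```

Here the first object lies well within the threshold of the "bottle" entry and is recognized, while the second sits halfway between both references and is rejected.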

To transform a binary object (a) into Fourier descriptors, the boundary points of the object are extracted first. The Fourier descriptors (a[0]...a[N-1]) are obtained by interpreting the x/y-plane pixel coordinates in the boundary-point image (b) as complex numbers, where the x coordinate is the real part and the y coordinate is the imaginary part, and then applying a discrete Fourier transform to this one-dimensional array. The boundary can thus be represented as a signal. The more curves that appear in the boundary, the more high-frequency components (Fourier descriptors) appear in the frequency domain (c).