Fuzzy logic extends pattern recognition beyond neural networks

By Charles Hooge

Although many commercial hardware and software vendors provide libraries of image-processing algorithms, few have tackled the difficult task of pattern recognition. Inherent recognition problems force available image-processing systems into complicated trade-offs in hardware, development costs, maintenance of training sets, and accuracy. To overcome these limitations, several companies are turning to more-novel approaches to pattern recognition, such as neural networks and fuzzy logic. By incorporating both of these approaches into its video-recognition system (VRS), BrainTech Inc. (North Vancouver, BC, Canada) has developed a PC-based system for extracting meaning and recognizing objects in a stream of video data.

Imaging systems can mimic learning by using neural networks (see Vision Systems Design, Oct. 1997, p. 28). These systems use training sets made up of inputs and outputs and adaptively vary weighting factors (or weights) to find the optimal match between the outputs of the neural network and the actual outputs from the training set. Often, such systems are composed of many layers of neurons in which most of the layers are hidden.
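The weight-adjustment idea can be sketched with a single linear neuron trained by the delta rule. This is only an illustration under simple assumptions: the function name `train_neuron`, the learning rate, and the toy training set are hypothetical, and practical networks use many neurons and hidden layers.

```python
def train_neuron(samples, rate=0.1, epochs=500):
    """Fit output = weight * x + bias to (input, target) pairs.

    After each sample, the weight and bias are nudged in the direction
    that reduces the error between the neuron's output and the target.
    """
    weight, bias = 0.0, 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - (weight * x + bias)
            weight += rate * error * x
            bias += rate * error
    return weight, bias


# Toy training set drawn from target = 2 * x + 1 (illustrative data only)
training_set = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]
weight, bias = train_neuron(training_set)
```

On this noiseless toy data the learned weight and bias approach 2 and 1, the values that generated the training set.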

The primary advantage of neural networks is that, by learning from a training set, they provide an optimal solution to a problem with little or no system developer (or user) intervention. Better still, specific knowledge of the physical process that leads to a result is not necessary. The system will "discover" the pattern itself while minimizing the error between the output it generates and the output desired.

Developed in the 1960s by Prof. Lotfi Zadeh at the University of California at Berkeley, fuzzy-set mathematics is a superset of conventional (Boolean) logic that has been extended to handle the concept of partial truth--truth values between "completely true" and "completely false." It was introduced as a means to model the uncertainty of natural language.

Fuzzy theory is a way to generalize a specific theory from a crisp (discrete) to a continuous (fuzzy) form. Fuzzy subsets and logic are a generalization of classical set theory and logic. Consequently, all crisp (traditional) subsets are fuzzy subsets of a special type. A fuzzy expert system is one that uses a collection of fuzzy membership functions and rules, instead of Boolean logic, to rationalize data.
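As an illustration of partial truth, the following minimal sketch defines a triangular membership function, a crisp set as its special case, and the standard minimum, maximum, and complement operators. The function names and the gray-level example are assumptions made for illustration and are not taken from the BrainTech system.

```python
def triangular(x, left, peak, right):
    """Degree of membership, between 0 and 1, in a triangular fuzzy set."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


def crisp(x, low, high):
    """A crisp (Boolean) set: membership is only ever 0 or 1."""
    return 1.0 if low <= x <= high else 0.0


def fuzzy_and(a, b):
    return min(a, b)  # intersection of two membership degrees


def fuzzy_or(a, b):
    return max(a, b)  # union of two membership degrees


def fuzzy_not(a):
    return 1.0 - a    # complement of a membership degree
```

With these definitions, a gray level of 96 belongs to a hypothetical "mid-gray" set spanning 0 to 128 (peak at 64) to degree 0.5, whereas a crisp set can only report 0 or 1. When the inputs are restricted to 0 and 1, the three operators reduce to Boolean AND, OR, and NOT.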

Combining methods

To build a pattern-recognition system (called Odysee) based on these techniques, BrainTech used a design method known as the Olsen Reticular Brainstem (ORB). This method is derived from a set of rules originated by Willard W. Olsen in 1988 while he was working as a consultant in the semiconductor industry. Olsen combined his knowledge of neuroanatomy and biochemistry to create a biochemical model of neurons in which each synapse of the neural network defines a template for adapting a fuzzy membership function. As a new input is used to adapt a particular synapse, the range of its fuzzy membership function stretches or contracts to accommodate its most recent value. Moreover, the global settings for response sensitivity provide a means of working feedback into the system, thereby making ORB an adaptive control system.
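The article does not give the ORB adaptation rules, so the following is only a rough sketch of what a membership range that stretches or contracts might look like. The class `AdaptiveMembership`, the `shrink` rate, and the use of `sensitivity` as the width of the fuzzy margin are all hypothetical choices, not BrainTech's method.

```python
class AdaptiveMembership:
    """One 'synapse': a range [low, high] with a fuzzy margin around it."""

    def __init__(self, first_value, sensitivity=1.0):
        self.low = self.high = float(first_value)
        # Hypothetical global setting: how far outside the range a value
        # can fall before its membership drops to zero (must be > 0).
        self.sensitivity = sensitivity

    def adapt(self, value, shrink=0.1):
        if value < self.low:
            self.low = value    # stretch the range downward
        elif value > self.high:
            self.high = value   # stretch the range upward
        else:
            # Value lies inside: contract both bounds slightly toward it.
            self.low += shrink * (value - self.low)
            self.high -= shrink * (self.high - value)

    def membership(self, value):
        if self.low <= value <= self.high:
            return 1.0
        distance = self.low - value if value < self.low else value - self.high
        return max(0.0, 1.0 - distance / self.sensitivity)
```

In this sketch, raising `sensitivity` makes every synapse more tolerant of values outside its learned range, which is one plausible way a global setting could feed back into the response.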

The fundamental nature of fuzzy-set mathematics allows cost-effective computation, which has promoted the popularity of fuzzy-logic systems in consumer electronics over the last decade. Moreover, because the vectored components within the ORB act similarly and independently of each other, the system can take advantage of parallel-processing methods. This type of processing allows the system to adapt and respond in real time for many kinds of applications.

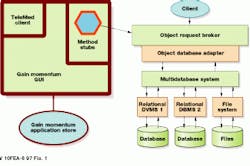

In the Odysee system, pattern recognition is divided into image-processing modules (see Fig. 1). The major modules include data acquisition; preprocessing, which is further divided into filtration, segmentation, and transformation; training; and matching. The data-acquisition module involves sensor-independent data acquisitions and digitization. In the data-acquisition process, multiple inputs (for example, several cameras) and multimodal sensors (for example, fusing data from both visible and infrared cameras) are accommodated.

In the preprocessing module, potential objects are isolated and identified in the image. These potential objects are then transformed to yield a one-dimensional (1-D) feature vector or signature. To perform image segmentation, the system developer needs to ascertain which features will uniquely characterize all the possible classes of objects. These features could include the radial cross section of the object, a statistical measure (for example, a histogram of gray-scale intensity), or a complicated measure such as a Fourier power spectrum or a wavelet transform decomposition.

Signature vectors are an array of numbers that represent the characteristics of the specific class of objects to which they belong. Note that the determination of the optimal transform (actually, sensor-transform pairing) is critical to the performance success of the vision system. Knowledge of the specific physical problem is useful in determining possible sensor-transform pairings.

For instance, in a military application where objects (targets) are often obscured, size-independent measures (such as color or thermal signature) would be most useful. Moreover, in an industrial application where nearly identical objects are moving on an assembly line, faults could be found by using the deviation from a regular pattern of the object's outer contour.

Less than optimal choices seen in other applications are shape measures that are not rotationally invariant despite the objects being found in many different orientations. A more difficult application involves the use of only gray levels where all the objects are virtually identical in intensity (but not in color); in this case, a color determination would be a better approach.

Once determined, these signatures (feature vectors) can easily be concatenated to create a single signature vector that might correspond to one object and incorporate many physical parameters such as power spectrum, color (RGB) histograms, and radial cross-section.
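A minimal sketch of building one such signature, a normalized gray-level histogram, and concatenating it with another signature is shown here. The function names, the bin count, and the sample values are illustrative assumptions only.

```python
def gray_histogram(pixels, bins=4, max_level=255):
    """Normalized histogram of gray levels; the fractions sum to 1."""
    if not pixels:
        raise ValueError("at least one pixel is required")
    counts = [0] * bins
    for p in pixels:
        index = min(p * bins // (max_level + 1), bins - 1)
        counts[index] += 1
    return [c / len(pixels) for c in counts]


def concatenate(*signatures):
    """Join several 1-D signatures into a single signature vector."""
    combined = []
    for signature in signatures:
        combined.extend(signature)
    return combined


# Illustrative data: eight gray levels and a made-up two-element shape measure
histogram = gray_histogram([0, 10, 70, 130, 200, 255, 255, 64])
signature = concatenate(histogram, [1.0, 2.0])
```

Because the component signatures can have very different numeric ranges, a practical system would likely scale each one before concatenation so that no single measure dominates the match.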

In the case of a binary decision (that is, pass or fail), the training of both acceptable and unacceptable results is obtained by transforming the acceptable and unacceptable objects (which are detected in two-dimensional [2-D] scanned image data) into 1-D vector data.

After preprocessing, the vectors are fed into the dedicated classifier, in this case, the BrainTron classifier, to perform ORB pattern-matching. In a more complex classification scheme, the acceptable result can be used with results containing various known errors. In an industrial application, the classification can lead to improved quality control if the defect can be traced to a particular cause or process.

Vectors presented to the processor generate a set of fuzzy results that are then used to create probability distributions for lists of class-vector associations. These probability distributions are then combined statistically to classify objects in the input image.
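The article does not say how the distributions are combined. One common choice, assumed here purely for illustration, is to normalize each set of fuzzy scores into a distribution and multiply the distributions across features, which treats the features as independent. All names and scores in this sketch are hypothetical.

```python
def to_distribution(scores):
    """Normalize fuzzy match scores {class: degree} so that they sum to 1."""
    total = sum(scores.values())
    if total == 0:
        # No evidence for any class: fall back to a uniform distribution.
        return {name: 1.0 / len(scores) for name in scores}
    return {name: s / total for name, s in scores.items()}


def combine(distributions):
    """Multiply per-feature distributions class by class, then renormalize."""
    classes = list(distributions[0])
    product = {name: 1.0 for name in classes}
    for dist in distributions:
        for name in classes:
            product[name] *= dist[name]
    return to_distribution(product)


def classify(distributions):
    """Return the class with the highest combined probability."""
    combined = combine(distributions)
    return max(combined, key=combined.get)


# Made-up fuzzy scores from two different signatures of the same object
shape = to_distribution({"car": 0.9, "truck": 0.3})
color = to_distribution({"car": 0.4, "truck": 0.6})
label = classify([shape, color])
```

Here the shape evidence favors "car" strongly enough to outweigh the color evidence, so the combined result is "car".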

The BrainTron classifier is available in either a hardware or a software version. The software version can be used for initial testing of sensor-transform pairing, whereas the hardware version is typically used in a final-application vision system. Running on a dedicated 200-MHz Pentium Pro-based computer, the software version currently generates 200,000 pair-wise matches per second. On the same computer, the hardware version operates at approximately the same speed but works independently of the main processor (freeing it to work on, say, CPU-intensive image preprocessing).

In practice, for 1000 previously trained feature vectors, each of length 100, the BrainTron classifier can yield a best match in 0.5 seconds. This power is satisfactory for most vision applications. If higher throughputs are needed, the hardware version can be customized in various parallel configurations. It is implemented with field-programmable gate arrays on a half-length PCI-based add-in board with 1-Mbit SRAM. Each board also contains a bus-interface IC and a microprocessor control unit for signal processing and coordination of parallel recognition modules, should the system developer want to implement parallel processing. In such cases, multiple boards could be used and the pattern recognition problem linearly segmented across multiple boards.
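The quoted figures are consistent if one "pair-wise match" is read as a single element-to-element comparison: 1000 vectors of 100 elements each require 100,000 comparisons, which takes 0.5 seconds at 200,000 comparisons per second. The short sketch below makes that arithmetic explicit. The interpretation of a pair-wise match and the ideal linear speedup across boards are assumptions, not published specifications.

```python
MATCHES_PER_SECOND = 200_000  # rate quoted for the software version


def best_match_time(num_vectors, vector_length, boards=1):
    """Estimated seconds to compare one input against every trained vector.

    Assumes one pair-wise match per vector element and that the work
    divides evenly across boards with no coordination overhead.
    """
    comparisons = num_vectors * vector_length
    return comparisons / (MATCHES_PER_SECOND * boards)


single = best_match_time(1000, 100)            # one classifier
parallel = best_match_time(1000, 100, boards=4)  # four boards, ideal scaling
```

Under these assumptions a four-board configuration would bring the same search down to 0.125 seconds; real throughput would be somewhat lower because of bus transfers and coordination.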

Driver software for Windows-based applications supports multiple board configurations as well as software-only emulation and performance evaluation. Application programming interfaces for the BrainTron driver manage the parallel execution of the pattern-recognition process depending on the number of boards configured in the system.

Real-time classification

In addition to the classifier and the 200-MHz processor, the basic BrainTech VRS contains a frame-grabber board, 64 Mbytes of RAM, a 2-Gbit IDE disk drive, a video recorder board running at up to 30 frames/s, a 24-bit color video camera generating 720 × 480 pixels per frame, and custom software for both image preprocessing and object classification, all packaged under a Windows NT platform (see Fig. 2). This system works in real time (at 1 frame/s) to automatically recognize, track, and classify single and multiple moving objects.

To perform real-time classification and object recognition, image frames are first captured and preprocessed for background removal, noise filtering, and boundary detection, among other functions. This stage uses adaptive background-removal techniques to separate moving objects from the background and to define a boundary for each object for later classification. Background removal also corrects for frame-to-frame variations in illumination of the scene.
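The article does not specify the background-removal algorithm. A running-average background model is one widely used adaptive technique and is sketched here as a stand-in; the function names, the update rate, and the threshold are illustrative assumptions.

```python
def update_background(background, frame, rate=0.05):
    """Blend the new frame into the background estimate.

    A small rate lets the model follow slow changes, such as drifting
    illumination, without absorbing fast-moving objects.
    """
    return [
        [(1 - rate) * b + rate * f for b, f in zip(b_row, f_row)]
        for b_row, f_row in zip(background, frame)
    ]


def foreground_mask(background, frame, threshold=30):
    """Mark pixels that differ from the background by more than threshold."""
    return [
        [abs(f - b) > threshold for b, f in zip(b_row, f_row)]
        for b_row, f_row in zip(background, frame)
    ]


# Tiny 2 x 2 gray-level example with one bright moving pixel
background = [[10.0, 10.0], [10.0, 10.0]]
frame = [[12, 200], [10, 9]]
mask = foreground_mask(background, frame)
background = update_background(background, frame)
```

The connected regions of the resulting mask are the candidate moving objects whose boundaries would then be traced for classification.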

After processing each frame, the system computes several distinct 1-D vectors for each moving object found in the scene. For example, a shape is determined as a radius vector, based on the object's centroid, and is run through a low-pass filter to smooth the boundary. Another shape-analysis algorithm measures the variation in the unfiltered boundary. Red-green-blue histograms can be determined and normalized to describe the distribution of color intensity found inside the boundary. Notably, other measures could also be added. For instance, texture analysis could be measured using wavelet-transform techniques.
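The radius-vector signature described above can be sketched as follows. This is a simplified illustration: the centroid is approximated by the mean of the boundary points, the low-pass filter is a plain circular moving average, and all function names are hypothetical.

```python
import math


def centroid(boundary):
    """Mean of the boundary points (an approximation of the object centroid)."""
    n = len(boundary)
    return (sum(x for x, _ in boundary) / n, sum(y for _, y in boundary) / n)


def radius_vector(boundary):
    """Distance from the centroid to each boundary point, in boundary order."""
    cx, cy = centroid(boundary)
    return [math.hypot(x - cx, y - cy) for x, y in boundary]


def low_pass(values, window=3):
    """Circular moving average; the boundary is closed, so indices wrap.

    The window size is assumed to be odd.
    """
    n = len(values)
    half = window // 2
    return [
        sum(values[(i + k) % n] for k in range(-half, half + 1)) / window
        for i in range(n)
    ]


# Boundary of a small square, listed in order around the shape
square = [(0, 0), (2, 0), (2, 2), (0, 2)]
signature = low_pass(radius_vector(square))
```

In practice the boundary would be resampled to a fixed number of points so that every object yields a signature of the same length, and the starting point would need to be normalized if rotational invariance is required.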

After all the various characteristics have been measured for each moving object, feature vectors are presented to the classifier for matching and training. Because the fuzzy results create probability distributions of symbol-vector associations, they can be combined statistically with position tracking data to classify each moving object in the video frame.

Immediate learning

In building vision systems, the Odysee system provides a capability not found in most neural networks--immediate and continuous learning. In a neural network, the training set, that is, both the input and the output vectors, must be defined beforehand. With the Odysee system, however, the system developer (or user) can begin training the BrainTron classifier to recognize classes with the first signature vector. The system developer can then use subsequent vectors to add to the existing class(es), create a new class, or ignore the vector. From that point onward, the signature vector (and, therefore, the object) is recognized immediately.
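The train-as-you-go workflow can be illustrated with a simple nearest-neighbor classifier, used here only as a stand-in because the ORB matching rules are not published in this article. The class name, method names, and example vectors are hypothetical.

```python
class IncrementalClassifier:
    """Stores labeled signature vectors and matches against them at once."""

    def __init__(self):
        self.examples = []  # list of (class_name, vector) pairs

    def train(self, class_name, vector):
        """Add a vector to an existing class or start a new class."""
        self.examples.append((class_name, list(vector)))

    def match(self, vector):
        """Return (class_name, distance) for the nearest stored vector,
        or None if nothing has been trained yet."""
        if not self.examples:
            return None

        def distance(stored):
            return sum((a - b) ** 2 for a, b in zip(stored, vector)) ** 0.5

        name, stored = min(self.examples, key=lambda e: distance(e[1]))
        return name, distance(stored)


classifier = IncrementalClassifier()
classifier.train("bolt", [1.0, 0.0])         # usable after the first vector
first = classifier.match([0.9, 0.1])
classifier.train("nut", [0.0, 1.0])          # a new class, added later
second = classifier.match([0.1, 0.8])
```

There is no separate training phase in this sketch: each call to `train` takes effect on the very next `match`, and ignoring a vector simply means not calling `train` for it.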

Typically, as the number of learned vectors increases, more of the sample space becomes "known." The system then requires fewer and fewer system developer interventions to accurately classify the incoming vectors; that is, the system becomes more "knowledgeable." Furthermore, the system developer can teach the classifier, and the combined expertise could be consolidated in a database. In this manner, system developer knowledge can be subsequently transferred to the classifier.

The system also provides for self-correction by means of a training set editor. If a vector has been misclassified, the system developer can be prompted to reconsider the decision. In addition, the system developer can "scroll back" into the image data from which the vector was derived. This capability permits training in manual classification. The trainee is presented with the original image and the collateral information on classification and confidence (see Fig. 3).

Combining the seemingly disparate technologies of neural networks and fuzzy logic has allowed sophisticated pattern-recognition systems to be built. Now, recognizing and classifying objects in video images is a task that can be accomplished by PC-based systems at affordable cost. Due to the flexibility of the architecture, such systems are expected to find greater use in image-processing and machine-vision applications where pattern recognition is needed.

CHARLES HOOGE is manager, research and development, at BrainTech Inc., North Vancouver, British Columbia, V7P 3N4 Canada; Web: www.bnti.com.

FIGURE 1. The Odysee architecture includes the (sensor-independent) data-acquisition and digitization module, the preprocessing module (which prepares data for the BrainTron classifier), and the adaptive training and matching module.

FIGURE 2. In object tracking applications, images are first preprocessed for background removal and noise filtering. Image feature vectors are then computed and applied to the fuzzy-neural BrainTron processor where such features are used to classify data sets.

FIGURE 3. Accessing the input image and training and classification panels allows system developers to properly classify acquired images. The chart panel displays the signature of the image using the selected transform (in this case, a composite of the radial, color, and cross-section transforms) and the fuzzy signature to which it is being compared.