IMAGE CAPTURE: Camera uses depth maps to focus near and far objects

In machine-vision and image-processing systems, the depth of field (DOF) of a camera/lens system determines the range of object distances over which the image appears acceptably sharp. Because a lens can focus precisely at only one distance, a relatively large DOF is often needed so that objects across a range of distances from the camera/lens system appear sharp.

For a given object distance, lens focal length, and format size, reducing the lens aperture (increasing the f-number) increases the DOF. However, a smaller aperture also admits less light, so scene illumination may need to be increased to compensate.
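The aperture/DOF trade-off can be made concrete with the standard thin-lens hyperfocal-distance approximation. The sketch below is illustrative only: the function names, the 25-mm focal length, the 1-m focus distance, and the 0.02-mm circle of confusion are assumptions chosen for the example, not parameters of the Toronto prototype.

```python
import math

def dof_limits(focus_dist_mm, focal_len_mm, f_number, coc_mm=0.02):
    """Return (near, far) limits of acceptable sharpness in mm.

    Thin-lens hyperfocal approximation. coc_mm is an ASSUMED circle of
    confusion for the sensor format; real systems need their own value.
    """
    f = focal_len_mm
    s = focus_dist_mm
    # Hyperfocal distance: focusing here keeps everything from H/2 to infinity sharp.
    H = f * f / (f_number * coc_mm) + f
    near = s * (H - f) / (H + s - 2 * f)
    far = s * (H - f) / (H - s) if s < H else math.inf
    return near, far

def relative_exposure(f_number, reference_f_number):
    """Light reaching the sensor scales as 1/N^2 for f-number N."""
    return (reference_f_number / f_number) ** 2

# Hypothetical 25-mm lens focused at 1 m, stopped down from f/2 to f/8.
for N in (2.0, 4.0, 8.0):
    near, far = dof_limits(1000.0, 25.0, N)
    print(f"f/{N:g}: sharp from {near:.0f} mm to {far:.0f} mm, "
          f"light = {relative_exposure(N, 2.0):.4f} x that at f/2")
```

With these assumed values, stopping down from f/2 to f/8 widens the sharp zone roughly fourfold while cutting the light reaching the sensor to one-sixteenth, which is the illumination penalty the multicamera approach is meant to avoid.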

To extend the DOF without requiring more illumination, Keigo Iizuka of the Department of Electrical and Computer Engineering at the University of Toronto (Toronto, ON, Canada; www.utoronto.ca) has developed a multicamera system that focuses on near and far objects simultaneously.

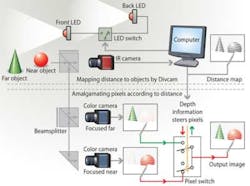

Known as the Omni-focus video camera, the prototype system optically couples two XC-555 color CCD cameras and an XC-EI50 IR camera from Sony Electronics (Park Ridge, NJ, USA; www.sony.com/videocameras) through multiple beamsplitters (see Fig. 1). The two color cameras are focused at different distances, one on the near part of the scene and the other on the far part. To generate a composite image that contains both near and far information, the system must first compute the distance of each object in the scene.

The infrared (IR) camera, dubbed the Divcam, also images the scene and is used to generate a depth map. First, the scene is illuminated by an IR LED placed in front of the system, and the captured image (I1) is stored in an IMAQ PCI-1411 frame grabber from National Instruments (Austin, TX, USA; www.ni.com) in the host PC. Next, the same scene is illuminated by a second IR LED placed behind the IR camera, and the resulting image (I2) is also stored in the frame grabber.

Because the two images differ only in how the scene is illuminated, the ratio R = SQRT(I1/I2), computed for each pixel, can be used to calculate the distance to the object imaged at that pixel. Taking a ratio cancels the differences in reflectivity caused by objects of different colors in the scene. When R is large, the object is close; as objects lie farther from the camera system, R decreases monotonically.
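Why the ratio behaves this way follows from the inverse-square falloff of light. As a minimal sketch, assume both LEDs act as point sources on the optical axis, the rear LED sits a distance d behind the front one, ambient light has already been subtracted, and an object at distance r from the front LED has reflectivity p. Then I1 is proportional to p/r^2 and I2 to p/(r + d)^2, so R = SQRT(I1/I2) = (r + d)/r = 1 + d/r. The reflectivity p cancels, and the distance follows as r = d/(R - 1). The function name `depth_from_ratio` and parameter `led_spacing` are hypothetical; the prototype's actual calibration may differ.

```python
import numpy as np

def depth_from_ratio(i1, i2, led_spacing, eps=1e-12):
    """Estimate per-pixel distance from two IR images (point-source model).

    i1: image lit by the front LED; i2: image lit by the rear LED.
    led_spacing: ASSUMED axial distance d between the two LEDs.
    Returns distances in the units of led_spacing; inf where R <= 1
    (no usable depth, e.g. dark or saturated pixels).
    """
    i1 = np.asarray(i1, dtype=np.float64)
    i2 = np.asarray(i2, dtype=np.float64)
    # R = sqrt(I1/I2); the object's reflectivity cancels in the ratio.
    # Clamp I2 away from zero so dark pixels do not divide by zero.
    ratio = np.sqrt(i1 / np.maximum(i2, eps))
    excess = ratio - 1.0                     # equals d / r under the model
    depth = np.full(ratio.shape, np.inf)
    valid = excess > eps
    depth[valid] = led_spacing / excess[valid]
    return depth
```

Large R gives a small r and R approaching 1 gives a large r, matching the monotonic behavior described above.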

Once the depth map is calculated, it is used to select individual pixels from the multiple video outputs to generate a single composite image. Software developed by David Wilkes, president of Wilkes Associates (Maple, ON, Canada), combines the near and far objects into one in-focus scene. Although the prototype currently uses only two color video cameras, adding more color cameras should improve image quality.
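One simple way to realize this per-pixel selection is to assign each pixel to the camera whose focus distance lies closest to that pixel's estimated depth. The sketch below assumes the color images are already registered through the beamsplitters and share the depth map's resolution. The function name `composite_from_depth` and the nearest-focus rule are illustrative assumptions, not a description of the Wilkes Associates software.

```python
import numpy as np

def composite_from_depth(images, focus_distances, depth):
    """Build an all-in-focus image by per-pixel camera selection.

    images: K registered color images, each H x W x 3.
    focus_distances: K focus distances, in the same units as depth.
    depth: H x W depth map (inf marks pixels with no valid depth).
    """
    stack = np.stack([np.asarray(im) for im in images])        # K x H x W x 3
    focus = np.asarray(focus_distances, dtype=np.float64)
    depth = np.asarray(depth, dtype=np.float64)
    # Pixels without a valid depth fall back to the farthest-focused camera.
    depth = np.where(np.isfinite(depth), depth, focus.max())
    # Mismatch between each pixel's depth and each camera's focus distance.
    err = np.abs(depth[None, :, :] - focus[:, None, None])      # K x H x W
    choice = np.argmin(err, axis=0)                             # H x W camera index
    rows, cols = np.indices(choice.shape)
    # Gather the chosen camera's pixel at every (row, col).
    return stack[choice, rows, cols]                            # H x W x 3
```

Comparing raw distances keeps the example short; because defocus blur depends more closely on the reciprocal of distance, comparing 1/depth against 1/focus distance would likely be a better selection rule with more cameras.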

The two-camera prototype was used to capture images of a toy rubber duck fastened to the pendulum of a moving metronome (see Fig. 2a). In the demonstration, the metronome was placed close to the camera system, with a Daruma doll in the background.

FIGURE 2. a) A toy rubber duck fastened to the pendulum of a moving metronome was placed close to the camera system, with a Daruma doll in the background. While details of the doll are almost unrecognizable in the image from a standard camera (c), the image from the Omni-focus camera (b) clearly shows details of both the duck and the doll.

For comparison, the same scene was captured with a standard color video camera (see Fig. 2c). In that image, details of the Daruma doll are almost unrecognizable, whereas the Omni-focus video camera clearly resolves them without added noise or visible seams between the near and far images (see Fig. 2b).