Biological techniques inspire novel imager design

Andrew Wilson, Editor

A jumping spider, commonly found in the world's tropical regions, captures images with a scanning eye movement: it sweeps its long, narrow retina back and forth while slowly rotating it in its own plane. According to Ania Mitros, a graduate student at the Koch Laboratory of the California Institute of Technology (Pasadena, CA), and Oliver Landolt, now with Agilent Laboratories (Palo Alto, CA), man-made sensors built on the same principle could reduce the complexity, cost, and weight of vision sensors for robot navigation systems.

To test this idea, Mitros and Landolt designed and built a 32 × 32-pixel image sensor with a photoreceptor spacing of 68.5 µm, coupled to a vibrating mechanical platform. As each photoreceptor scans over part of the image, it converts spatial changes in light intensity into signal amplitudes that vary over time. Provided the photoreceptor takes more than one intensity measurement before the image itself changes, it collects more information than it could while stationary.
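The principle can be illustrated in one dimension with the following Python sketch. It is not the authors' code: the scene (a single edge at 30 µm), the 40 µm sweep amplitude, and the 360 samples per cycle are all hypothetical. Two stationary pixels 68.5 µm apart can only say that an edge lies somewhere between them, whereas one pixel swept across the edge sees the intensity step at a particular moment in its sweep and so localizes the edge far more finely.

```python
import math
from typing import List, Optional, Tuple

PITCH_UM = 68.5  # photoreceptor spacing reported for the 32 x 32 chip

def scene(x_um: float) -> float:
    # Hypothetical 1-D scene: a dark-to-bright edge at x = 30 µm.
    return 1.0 if x_um >= 30.0 else 0.2

def scanned_samples(pixel_x_um: float, amplitude_um: float,
                    steps: int) -> List[Tuple[float, float]]:
    # Sweep the pixel's line of sight sinusoidally through one cycle and
    # record (position, intensity) pairs: spatial structure becomes a time series.
    samples = []
    for k in range(steps):
        x = pixel_x_um + amplitude_um * math.sin(2 * math.pi * k / steps)
        samples.append((x, scene(x)))
    return samples

def locate_edge(samples: List[Tuple[float, float]]) -> Optional[float]:
    # Midpoint between the last dark and first bright sample of the first
    # dark-to-bright transition in the sweep; None if no transition is seen.
    for (x0, i0), (x1, i1) in zip(samples, samples[1:]):
        if i1 > i0:
            return (x0 + x1) / 2
    return None

# Two stationary pixels only bracket the edge within one 68.5 µm pitch.
print(scene(0.0), scene(PITCH_UM))                      # 0.2 1.0
# One scanned pixel (hypothetical 40 µm amplitude, 360 samples per cycle)
# localizes the edge to a fraction of a micrometer.
print(round(locate_edge(scanned_samples(0.0, 40.0, 360)), 2))  # 29.96
```

A sinusoidal sweep is used here only because it is the one-dimensional projection of the circular scan described below; any motion that visits intermediate positions would make the same point.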

The sensor scans along a circular path at a frequency of 300 Hz. According to Mitros, this technique yields an effective spatial resolution along the scanning path of approximately 6.5 µm in the image plane, close to the diffraction limit of the optics used. A scanning frequency of 300 Hz is high enough that a local area can be scanned before the image changes, yet not so high that the features being detected are blurred. And because each pixel independently detects spatial intensity gradients as temporal intensity fluctuations, and a pixel's fixed offset does not vary over time, fixed-pattern noise is virtually eliminated.
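The arithmetic and the noise argument can be checked with a short Python sketch. The 68.5 µm, 6.5 µm, and 300 Hz figures come from the article; the intensity trace, the 0.35 offset, and the 0.3 change threshold are hypothetical. The sketch models fixed-pattern noise only as a constant per-pixel offset, which cancels when a pixel compares successive samples of its own signal; a per-pixel gain mismatch would instead scale the detected changes.

```python
from typing import List, Tuple

PITCH_UM = 68.5          # photoreceptor spacing (from the article)
EFFECTIVE_RES_UM = 6.5   # effective resolution along the scan path (from the article)
SCAN_HZ = 300.0          # scanning frequency (from the article)

def change_events(outputs: List[float], threshold: float) -> List[Tuple[int, int]]:
    # Report (sample index, sign) wherever the sample-to-sample change exceeds
    # the threshold. A constant per-pixel offset cancels in the difference.
    events = []
    for k in range(1, len(outputs)):
        delta = outputs[k] - outputs[k - 1]
        if abs(delta) > threshold:
            events.append((k, 1 if delta > 0 else -1))
    return events

# Intensity seen by two pixels scanning the same edge during one cycle.
trace = [0.2, 0.2, 1.0, 1.0, 0.2, 0.2]
# Hypothetical fixed-pattern offsets: pixel B reads 0.35 higher than pixel A.
pixel_a = [i + 0.0 for i in trace]
pixel_b = [i + 0.35 for i in trace]

print(pixel_a[0] != pixel_b[0])                 # True: raw levels disagree
print(change_events(pixel_a, 0.3))              # [(2, 1), (4, -1)]
print(change_events(pixel_b, 0.3))              # [(2, 1), (4, -1)]
print(round(PITCH_UM / EFFECTIVE_RES_UM, 1))    # 10.5: about 10x finer than the pitch
print(round(1000.0 / SCAN_HZ, 2))               # 3.33 ms per scanning cycle
```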

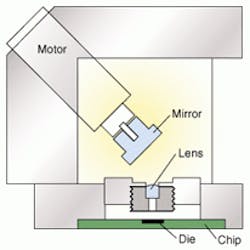

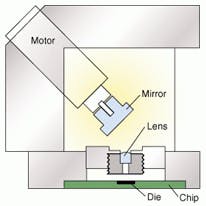

To generate regular scanning movements, a slightly tilted mirror is mounted on a motor at an angle of approximately 45°, and the image it reflects is focused through a lens onto the imager. As the motor spins, the reflected image wobbles in a periodic circular pattern. A motor encoder reports the angle of the mirror at any instant.
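Because every pixel's line of sight is displaced by the same circular wobble, the encoder angle alone tells the off-chip logic which image-plane point each pixel is sampling. The Python sketch below assumes a hypothetical incremental encoder with 1024 counts per revolution, a hypothetical 10 µm wobble radius, and a phase convention in which encoder count 0 corresponds to a displacement along +x; none of these values come from the article.

```python
import math
from typing import Tuple

def encoder_to_angle(count: int, counts_per_rev: int) -> float:
    # Hypothetical incremental encoder: wrap the raw count into one
    # revolution and convert it to a mirror angle in radians.
    return 2 * math.pi * (count % counts_per_rev) / counts_per_rev

def sampled_point(pixel_xy: Tuple[float, float], angle: float,
                  radius_um: float) -> Tuple[float, float]:
    # Image-plane point a pixel samples when the wobble has displaced its
    # line of sight by radius_um in direction `angle` (assumed convention:
    # zero phase at encoder count 0, counter-clockwise positive).
    px, py = pixel_xy
    return (px + radius_um * math.cos(angle), py + radius_um * math.sin(angle))

# Every pixel traces a circle of the same radius, in lockstep with the encoder.
angle = encoder_to_angle(1280, 1024)            # 1280 wraps to 256, a quarter turn
print(round(math.degrees(angle), 6))            # 90.0
print(sampled_point((68.5, 0.0), angle, 10.0))  # (68.5, 10.0)
```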

Whenever a photoreceptor's scanning path crosses a sharp edge that causes an amplitude change exceeding the built-in threshold, at least one spike is generated at that point in the scanning cycle. The presence and location of the edge can therefore be inferred off-chip: spikes recur at the scanning frequency, at an essentially constant phase with respect to the scanning cycle.
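One standard way to test whether spikes recur "at an essentially constant phase" is to map each spike time to a phase within the 300 Hz cycle and measure vector strength, the length of the average unit phasor. This is a swapped-in textbook statistic, not necessarily the off-chip method Mitros and Landolt used; the 0.8 decision threshold, the timing jitter, and the random noise train in this Python sketch are hypothetical.

```python
import cmath
import math
import random
from typing import List

SCAN_HZ = 300.0            # scanning frequency from the article
PERIOD_S = 1.0 / SCAN_HZ

def spike_phases(spike_times_s: List[float], period_s: float = PERIOD_S) -> List[float]:
    # Phase of each spike within the scanning cycle, in radians [0, 2*pi).
    return [2 * math.pi * ((t % period_s) / period_s) for t in spike_times_s]

def vector_strength(phases: List[float]) -> float:
    # Length of the mean unit phasor: 1.0 for perfectly phase-locked spikes,
    # near 0 for spikes spread uniformly over the cycle.
    if not phases:
        return 0.0
    return abs(sum(cmath.exp(1j * p) for p in phases) / len(phases))

def mean_phase(phases: List[float]) -> float:
    # Circular mean phase in [0, 2*pi): where in the cycle the edge is crossed.
    return cmath.phase(sum(cmath.exp(1j * p) for p in phases)) % (2 * math.pi)

def edge_present(spike_times_s: List[float], min_strength: float = 0.8) -> bool:
    # Hypothetical decision rule: report an edge if spikes are strongly phase-locked.
    return vector_strength(spike_phases(spike_times_s)) >= min_strength

# An edge crossed a quarter of the way through each cycle, with small timing jitter.
locked = [n * PERIOD_S + 0.25 * PERIOD_S + ((n % 5) - 2) * 1e-5 for n in range(300)]
# Spikes at random times over one second (noise, no edge).
rng = random.Random(0)
noise = [rng.uniform(0.0, 1.0) for _ in range(300)]

print(edge_present(locked), edge_present(noise))               # True False
print(round(math.degrees(mean_phase(spike_phases(locked)))))   # 90
```

The mean phase, combined with the encoder angle, then indicates where on the pixel's scanning circle the edge lies.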

Because sharp edges are phase-coded with respect to the scanning cycle, signatures of specific spatial patterns can be detected using delay-and-coincidence detectors. Gradient information is rate-coded and can be recovered by low-pass filtering or histogram techniques. Using these techniques, Mitros and her colleagues have designed and simulated an algorithm that detects the presence and orientation of a single edge from a pixel's spike train.
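The article does not describe the algorithm itself, so the Python sketch below only illustrates the named ingredients under stated assumptions. `coincidences` is a generic delay-and-coincidence detector, `boxcar_rate` is a moving-average low-pass estimate of a rate code, and `edge_from_crossings` is one geometric way, not necessarily the authors', to recover a straight edge's orientation. It assumes the pixel spikes at both points where its circular scan crosses the edge; all function names, the 10 µm radius, and the test edge are hypothetical.

```python
import math
from typing import List, Tuple

def coincidences(train_a: List[float], train_b: List[float],
                 delay_s: float, window_s: float) -> int:
    # Delay-and-coincidence detector: count spikes of train_a that, once
    # delayed by delay_s, land within window_s of some spike in train_b.
    return sum(1 for t in train_a
               if any(abs(t + delay_s - u) <= window_s for u in train_b))

def boxcar_rate(spike_times_s: List[float], t_s: float, window_s: float) -> float:
    # Crude low-pass (moving-average) estimate of spike rate in Hz over
    # the interval (t_s - window_s, t_s].
    n = sum(1 for s in spike_times_s if t_s - window_s < s <= t_s)
    return n / window_s

def edge_from_crossings(theta1: float, theta2: float,
                        radius: float) -> Tuple[float, float, float]:
    # A circular scan of radius `radius` crosses one straight edge at phases
    # theta1 and theta2. If the edge's unit normal points at angle phi and the
    # edge lies at signed distance d from the pixel centre, the crossings
    # satisfy radius * cos(theta - phi) = d, i.e. theta = phi -/+ acos(d / radius).
    # So phi is the circular midpoint of the two crossing phases and
    # d = radius * cos(half the angular gap). Swapping theta1 and theta2
    # reverses the normal and the sign of d, which describes the same line.
    half_gap = ((theta2 - theta1) % (2 * math.pi)) / 2
    phi = (theta1 + half_gap) % (2 * math.pi)
    d = radius * math.cos(half_gap)
    orientation = (phi + math.pi / 2) % math.pi   # edge direction, modulo 180°
    return phi, d, orientation

# Hypothetical edge: normal at 60°, 6 µm from a pixel whose scan radius is 10 µm.
phi_true, d_true, r = math.radians(60), 6.0, 10.0
alpha = math.acos(d_true / r)
phi, d, orient = edge_from_crossings(phi_true - alpha, phi_true + alpha, r)
print(round(math.degrees(phi), 6), round(d, 6), round(math.degrees(orient), 6))  # 60.0 6.0 150.0
```

Only the two crossing phases enter the orientation estimate, which is why a single pixel's spike train can in principle suffice for a single straight edge.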

Such sensors may prove useful in future robotic vision systems, where cameras mounted on robots are already subject to mechanical vibration. In such systems, the technique could increase the effective resolution of image sensors while reducing fixed-pattern noise and cost. Indeed, according to Christopher Assad of the NASA Jet Propulsion Laboratory (Pasadena, CA), such sensors could be mounted on a small roving robot such as the Mars Lander, where the robot's own vibrations could supply the energy for the sensor's scanning movements.