IMAGE PROCESSING: Imaging system combines Camera Link and GigE Vision

Performing parallel image processing on high-speed linescan images requires that each image be properly segmented among multiple CPUs, processed, and often reassembled to display the result. In many Camera Link-based systems, high-speed cameras capture image data directly to host memory, where multicore CPUs perform image-processing tasks on specific segments of the data before the segments are reassembled.

One drawback of these systems is that the Camera Link camera and host CPU must be located within a few meters of each other. If multiple CPUs are required to process the images, they too must be located relatively close to the Camera Link system. To overcome this limitation, and at the same time allow image segments to be distributed remotely across multiple multicore CPUs, Stemmer Imaging (Puchheim, Germany; www.stemmer-imaging.de) has demonstrated a high-speed imaging system that combines the benefits of Camera Link and GigE Vision while allowing remote high-speed processing of images.

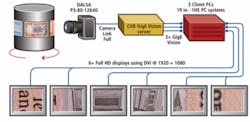

Debuted at VISION 2008 in Stuttgart, Germany, the linescan-based demonstration used a 12k × 1 P3-80-12k40 linescan camera from DALSA (Waterloo, ON, Canada; www.dalsa.com) to image banknotes on a rotating scanner (see Fig. 4). DALSA’s X64 Xcelera-CL PX4 Full PCI Express-based frame grabber transfers the images to the PC at 650-MByte/s Full Camera Link rates, sequentially writing batches of 2160 lines of 12k pixels into the host CPU’s memory buffer.
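As a rough check on these figures, the following Python sketch computes the size of one 2160-line batch and the shortest time in which it can arrive over the Full Camera Link interface. It assumes 12,288 pixels per line ("12k"), 8 bits per pixel, and 1 MByte = 10^6 bytes; none of these values is stated in the article.

```python
# Worked arithmetic for one host-memory batch.
# Assumptions (not from the article): "12k" = 12,288 pixels per line,
# 8 bits (1 byte) per pixel, and 1 MByte = 10**6 bytes.
PIXELS_PER_LINE = 12_288
BYTES_PER_PIXEL = 1
LINES_PER_BATCH = 2160
LINK_RATE_BYTES_PER_S = 650e6  # Full Camera Link rate quoted in the text

batch_bytes = PIXELS_PER_LINE * BYTES_PER_PIXEL * LINES_PER_BATCH
# Minimum fill time: the batch cannot arrive faster than the link allows.
fill_time_ms = batch_bytes / LINK_RATE_BYTES_PER_S * 1e3

print(f"batch size: {batch_bytes:,} bytes")           # 26,542,080 bytes
print(f"fill time at 650 MB/s: {fill_time_ms:.1f} ms")  # 40.8 ms
```

At lower camera line rates a batch simply takes longer to fill; about 40.8 ms is the floor set by the link under these assumptions.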

“As each batch of 2160 12k-pixel lines arrives sequentially in the host memory buffer,” says Jonathan Vickers, technical manager with Stemmer Imaging UK, “the Common Vision Blox (CVB) linescan interface divides the image into blocks of 4k × 20 lines. The CVB acquisition engine then fires an asynchronous event each time a block is acquired. CVB GigE Vision Server then distributes these 20-line blocks to three separate client PCs through three Intel PRO/1000 GigE network interface cards, while the acquisition engine acquires the next block in parallel.”
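The block scheme Vickers describes can be illustrated with a small producer/consumer sketch in Python. It assumes "12k" and "4k" mean 12,288 and 4,096 pixels, and that each client always receives the same 4k-wide column strip. The threads and queue are hypothetical stand-ins for the CVB acquisition engine and GigE Vision Server, not CVB APIs.

```python
import queue
import threading

# Assumed geometry (not from the article): "12k" = 12,288 and "4k" = 4,096 pixels.
LINE_WIDTH = 12_288
BLOCK_WIDTH = 4_096
BLOCK_LINES = 20
BATCH_LINES = 2160
NUM_CLIENTS = LINE_WIDTH // BLOCK_WIDTH  # one 4k column strip per client -> 3


def blocks_in_batch():
    """Yield (client, x0, y0) for every 4k x 20-line block of one batch."""
    for y0 in range(0, BATCH_LINES, BLOCK_LINES):
        for x0 in range(0, LINE_WIDTH, BLOCK_WIDTH):
            yield x0 // BLOCK_WIDTH, x0, y0


def acquire(out_q):
    """Hypothetical stand-in for the acquisition engine: one event per block."""
    for block in blocks_in_batch():
        out_q.put(block)
    out_q.put(None)  # end-of-batch marker


def distribute(in_q, client_inboxes):
    """Hypothetical stand-in for the server: route each block to its client."""
    while (block := in_q.get()) is not None:
        client, x0, y0 = block
        client_inboxes[client].append((x0, y0))


inboxes = [[] for _ in range(NUM_CLIENTS)]
events = queue.Queue(maxsize=8)  # bounded, so acquisition and sending overlap
producer = threading.Thread(target=acquire, args=(events,))
consumer = threading.Thread(target=distribute, args=(events, inboxes))
producer.start()
consumer.start()
producer.join()
consumer.join()

print([len(box) for box in inboxes])  # [108, 108, 108]
```

The bounded queue lets the acquisition thread keep producing blocks while the distribution thread forwards earlier ones, which mirrors the overlap of acquisition and transmission described above.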

“Because each channel can run at 1 Gbit/s, data can be transferred from the camera to the host PC and on to the three client PCs in real time,” says Vickers. At VISION 2008, Stemmer demonstrated this by displaying the output of each client PC on two Full HD LCD monitors, driven by a graphics card from NVIDIA (Santa Clara, CA, USA; www.nvidia.com) installed in each client PC. Stemmer’s CVB FastLineScan Display module allows the resulting six-monitor display to show the full 12k × 1 linescan image in stationary mode.
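Whether three GigE links keep pace depends on the camera’s actual line rate, which the article does not give. This Python sketch estimates the ceiling under stated assumptions: 8-bit pixels, one 4,096-pixel strip per link, and the raw 1 Gbit/s rate with no Ethernet, IP, UDP, or GigE Vision streaming-protocol overhead, so real throughput would be somewhat lower.

```python
# Rough bandwidth budget for the three GigE links.
# Assumptions (not from the article): 8-bit pixels, one 4,096-pixel strip
# per client link, and raw 1 Gbit/s with no protocol overhead.
GIGE_BITS_PER_S = 1e9
NUM_LINKS = 3
STRIP_BYTES_PER_LINE = 4_096  # one 4k strip per client, 1 byte/pixel

per_link_bytes_per_s = GIGE_BITS_PER_S / 8                # 125 MB/s per link
aggregate_bytes_per_s = NUM_LINKS * per_link_bytes_per_s  # 375 MB/s total
# Each link carries one strip line per camera line, so this caps the line rate.
max_line_rate_hz = per_link_bytes_per_s / STRIP_BYTES_PER_LINE

print(f"aggregate GigE payload ceiling: {aggregate_bytes_per_s / 1e6:.0f} MB/s")
print(f"highest sustainable line rate: {max_line_rate_hz:,.0f} lines/s")
```

Under these assumptions the aggregate ceiling of about 375 MB/s is below the 650-MByte/s Full Camera Link rate, so real-time forwarding holds only while the camera’s sustained output stays below roughly 30,500 lines/s.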

While displaying the stationary images, the system acquires a sequence of 16 images to create a 4k × 16k image on each PC; these are then displayed in a synchronized scrolling mode in which all PCs scroll in step. All repaints are synchronized to the vertical blank of the monitors, resulting in a flicker-free, synchronized scroll of a 12k × 16k image across six monitors.

Although the demonstration itself was impressive, perhaps more important was the underlying technology used in its development. Partitioning regions of interest within an image and distributing them to multicore processors will allow developers to increase the throughput of their machine-vision systems.

“Although segmented regions must overlap for functions such as image filtering,” says Vickers, “this can be accomplished within the GigE Vision Server using CVB’s Virtual Pixel Access Table (VPAT) software. Furthermore, because the GigE Vision Server lies at the heart of the system, it can be programmed to send any part of an image, a complete image, or a specific sequence of images to any given client PC for further processing.”
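The overlap arises because a neighborhood filter near a strip boundary needs pixels owned by the adjacent strip. The Python sketch below splits an image into column strips padded with a halo of RADIUS pixels, filters each strip on its own, discards the halo, and checks that the reassembled result matches filtering the whole image. The 3-tap horizontal sum is a hypothetical stand-in filter, and plain list slicing replaces CVB’s VPAT, whose mechanism is not modeled here.

```python
import random

RADIUS = 1  # a 3-tap kernel reaches one pixel to each side


def filter_row(row):
    """Hypothetical stand-in filter: horizontal 3-tap sum, edges replicated."""
    n = len(row)
    return [row[max(i - 1, 0)] + row[i] + row[min(i + 1, n - 1)] for i in range(n)]


def filter_image(img):
    return [filter_row(r) for r in img]


def filter_in_strips(img, num_strips):
    """Filter column strips independently using a RADIUS-wide halo."""
    width = len(img[0])
    step = width // num_strips  # assumes the width divides evenly
    out = [[] for _ in img]
    for s in range(num_strips):
        x0, x1 = s * step, (s + 1) * step
        # Extend the strip by RADIUS columns wherever a neighbor strip exists.
        lo, hi = max(x0 - RADIUS, 0), min(x1 + RADIUS, width)
        filtered = filter_image([row[lo:hi] for row in img])
        # Keep only the columns this strip owns; the halo is discarded.
        for y, frow in enumerate(filtered):
            out[y].extend(frow[x0 - lo : x0 - lo + step])
    return out


random.seed(0)
image = [[random.randrange(256) for _ in range(12)] for _ in range(4)]
assert filter_in_strips(image, 3) == filter_image(image)
print("strip-wise result matches whole-image result")
```

Without the halo, each strip would replicate its own edge pixels at interior boundaries, and the columns next to each cut would differ from the whole-image result.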

“In essence,” says Vickers, “the GigE Vision Server appears to the system developer as a GenICam-based camera, relieving the developer of the task of programming the camera and frame grabber directly.” In this way, the developer can control the partitioning, processing, and display of the system by manipulating GenICam parameters and CVB software. Not only does this simplify distributed parallel processing, but it also allows multiple multicore-based processors to be located up to 100 m from the image-capture system.

Of course, linescan cameras are not the only types of imaging devices that could benefit from this architecture. “Because the architecture is modular,” says Vickers, “it will prove useful in medical and military applications where large data sets need to be rapidly processed using area, linescan, multispectral, and other more specialized image-capture devices.”