In industrial image processing, cameras with traditional, image-based sensors have been the proven tool for inspection, quality control and process monitoring for decades. However, especially in dynamic scenes and fast production processes, conventional image sensors generate enormous amounts of data at high frame rates and sensor resolutions, while individual static images cannot capture valuable information about changes over time. To obtain the desired motion information, high-performance image processing systems must process this flood of data, which requires substantial hardware resources.

Now imagine an industrial camera with a temporal resolution equivalent to around 10,000 images per second that captures even the fastest movements almost seamlessly, yet generates only a fraction of the data of a conventional camera. This sensor technology is called an ‘event-based vision sensor’, or EVS for short.

Neuromorphic Sensing Technology—Inspired by the Human Brain

Once again, cutting-edge technology takes its inspiration from nature. The photoreceptors in human eyes continuously pick up light stimuli and send signals to the brain, which processes them, reacting in particular to changes such as differences in brightness, contrast and movement, while uniform input is often filtered out. This means that we automatically focus on movements instead of constantly re-recognizing every detail of our environment. This allows our brain to process relevant information quickly without being flooded with unnecessary data. To replicate this ability, Prophesee (Paris, France) has collaborated with Sony (Tokyo, Japan) to develop an event-based vision sensor (EVS) with special pixel electronics.

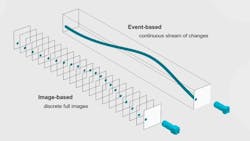

The key aspect of the solution is that no complete 2D images full of unnecessary static data are generated. Instead, brightness changes beyond a defined threshold value trigger events. These events are signalled individually and in real time, without being bound to a fixed time grid (cf. frame rate). The minimum time span between two pixel events is an important characteristic of this sensor and is referred to as its ‘temporal resolution’. It lies in the microsecond range and therefore enables ultra-fast and almost ‘gapless’ sensing of movements in time windows of 100 microseconds (0.1 ms) or less, which corresponds to a frame rate of 10,000 images per second on a classic industrial camera. While image-based cameras always transmit the full amount of data from the entire sensor surface, an event-based sensor often generates only a very small amount of data in the same period, which is a major reason for its impressive speed.
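To make the threshold mechanism concrete, here is a minimal Python sketch of how a single pixel might turn brightness samples into events. It assumes the idealized pixel model commonly used to describe event sensors, in which an event fires whenever the logarithm of the intensity has moved by a fixed contrast threshold since the last event. The function `pixel_events`, the threshold of 0.25 and the sampled input are hypothetical and do not reflect the actual pixel circuit or any vendor's data format.

```python
import math
from typing import List, Tuple

# (timestamp in microseconds, polarity: +1 = brighter, -1 = darker)
Event = Tuple[int, int]


def pixel_events(samples: List[Tuple[int, float]], threshold: float = 0.25) -> List[Event]:
    """Turn (timestamp_us, intensity) samples of ONE pixel into events.

    Idealized model (assumption): an event fires each time the log intensity
    has moved by `threshold` away from the level of the previous event.
    """
    events: List[Event] = []
    reference = math.log(samples[0][1])  # level at which the last event fired
    for t_us, intensity in samples[1:]:
        level = math.log(intensity)
        # A large change crosses the threshold several times: one event per crossing.
        while abs(level - reference) >= threshold:
            polarity = 1 if level > reference else -1
            reference += polarity * threshold
            events.append((t_us, polarity))
    return events


# Brightness doubles at 20 us, then drops to a quarter of that value at 40 us.
print(pixel_events([(0, 100.0), (10, 100.0), (20, 200.0), (40, 50.0)]))
```

For this input the sketch yields two positive events at 20 µs and four negative events at 40 µs, while a pixel with constant brightness yields none: no change, no data.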

Only Changes Instead of Complete Images

Developers of image processing systems no longer have to compromise between high frame rates and large amounts of redundant data to capture fast events. The amount of data generated by the EVS sensor depends on the activity in the field of view (FOV) and automatically adapts as scene conditions change. Each pixel only sends information when the brightness it sees changes, not according to a predetermined frame rate. The result is a stream of events with high temporal resolution instead of a rigid series of images at a fixed frame rate. This movement data also contains valuable clues from which much more information can be derived: information that conventional cameras cannot capture because of their comparatively low, fixed sampling rate, or that is lost in the large amount of redundant data they output.
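The difference in data volume can be estimated with simple arithmetic. The sketch below compares a hypothetical 1280 × 720 frame camera with 8 bits per pixel running at 10,000 frames per second against an event stream. The 8 bytes per event and the event count are illustrative assumptions, since real event encodings and event rates differ by sensor, format and scene.

```python
def frame_bytes(width: int, height: int, fps: int, seconds: int, bytes_per_pixel: int = 1) -> int:
    # Every pixel is read out in every frame, whether or not anything changed.
    return width * height * bytes_per_pixel * fps * seconds


def event_bytes(event_count: int, bytes_per_event: int = 8) -> int:
    # Output grows only with the number of events, i.e. with scene activity.
    return event_count * bytes_per_event


frame_total = frame_bytes(1280, 720, fps=10_000, seconds=1)
event_total = event_bytes(event_count=1_000_000)  # hypothetical: one million events in that second
print(frame_total, event_total, frame_total // event_total)
```

Under these assumptions the frame camera produces about 9.2 GB in one second, while the event stream needs 8 MB, roughly 1/1,152 of that. In a nearly static scene the event volume would drop further, whereas the frame camera's data rate stays the same.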

Because contrast changes occur primarily at object edges rather than on evenly illuminated surfaces, the visualization of an event stream is comparable to a 2D camera image processed by an edge detector. EVS sensors are therefore well suited to recognizing motion patterns and directions very efficiently. Together with the known distance between the pixels that fired, the time between detected events can be used directly to calculate the speed at which an object is moving, all without processing many unnecessary images. This could be advantageous for autonomous vehicles or for robotics applications in which movements require immediate reactions. The high temporal precision at pixel level is also particularly helpful for analyzing fast movements, for example in industry or sports technology. Reducing unnecessary data also means lower memory requirements and therefore more efficient processing.
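As an illustration of deriving speed from event timing, the following sketch fits a straight line to the (column, timestamp) pairs of events produced by a vertical edge moving horizontally across the sensor. The function name `edge_speed_m_per_s` and the calibration value `mm_per_pixel` (how many millimetres of the object plane one pixel covers) are hypothetical inputs; a real application would first have to isolate the events that belong to one edge.

```python
from typing import List, Tuple


def edge_speed_m_per_s(events: List[Tuple[int, int]], mm_per_pixel: float) -> float:
    """Estimate the speed of a moving edge from (column, t_us) event pairs.

    A least-squares line x = a + b*t is fitted; the slope b is in pixels per
    microsecond. `mm_per_pixel` is a hypothetical calibration value.
    """
    n = len(events)
    if n < 2:
        raise ValueError("need at least two events")
    mean_x = sum(x for x, _ in events) / n
    mean_t = sum(t for _, t in events) / n
    cov = sum((t - mean_t) * (x - mean_x) for x, t in events)
    var = sum((t - mean_t) ** 2 for _, t in events)
    if var == 0:
        raise ValueError("need events at two or more distinct timestamps")
    pixels_per_us = cov / var
    # pixels/us * mm/pixel = mm/us, and 1 mm/us = 1000 m/s
    return pixels_per_us * mm_per_pixel * 1000.0


# Edge reaches a new column every 50 us; one pixel covers 0.1 mm of the object.
edge_events = [(x, 50 * x) for x in range(10)]
print(edge_speed_m_per_s(edge_events, mm_per_pixel=0.1))  # approx. 2.0 m/s
```

Fitting over many events rather than dividing one pixel distance by one time difference makes the estimate less sensitive to the timing jitter of individual events.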

New Temporal Dimension

Time stamps of the pixel events with microsecond accuracy open up completely new application possibilities. If the locations and times of several events are correlated over a specified time range in a 3D visualization (x, y and time), a motion path emerges, which in turn leads to a better understanding of how objects move. Speed information can also be extracted from this easily, without complex image processing.
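A motion path can be approximated by slicing the event stream into short time bins and taking the centroid of the events in each bin. The sketch below assumes a single moving object in the region of interest; `motion_path` and its bin width are illustrative choices, not a prescribed method.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def motion_path(events: List[Tuple[int, int, int]], bin_us: int) -> List[Tuple[int, float, float]]:
    """Return (bin_start_us, mean_x, mean_y) for each time bin that contains events.

    `events` holds (x, y, t_us) tuples, assumed to come from a single moving object.
    """
    bins: Dict[int, List[Tuple[int, int]]] = defaultdict(list)
    for x, y, t in events:
        bins[t // bin_us].append((x, y))  # correlate events by time slot
    path = []
    for index in sorted(bins):
        points = bins[index]
        mean_x = sum(p[0] for p in points) / len(points)
        mean_y = sum(p[1] for p in points) / len(points)
        path.append((index * bin_us, mean_x, mean_y))
    return path


print(motion_path([(10, 10, 0), (12, 10, 100), (20, 20, 1000), (22, 20, 1100)], bin_us=1000))
```

Here the path is `[(0, 11.0, 10.0), (1000, 21.0, 20.0)]`: the object moved about 10 pixels in both x and y within one millisecond, so dividing the distance between consecutive points by the bin spacing gives its speed in pixels per microsecond.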

Event data offers further interesting analysis options when creating slow-motion recordings. By sorting the captured pixel events into a temporal grid and generating complete sensor images from them, slow-motion videos with a variable ‘exposure time’ emerge. The playback speed also remains adjustable afterwards: from extreme slow motion (in the limit, one image per event) to real movement speed (approx. one image per 33 ms) to an overall image that summarizes all captured events in a single still image and thus makes the complete movement path visible. This enables detailed temporal motion analysis without complex calculations, with minimal data volume and low resource consumption.
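The temporal grid can be sketched as follows: events are accumulated into frames whose accumulation time plays the role of the exposure time. Each pixel sums event polarities (+1 for brighter, −1 for darker), which is only one of several possible ways to render event frames; `events_to_frames` and the (x, y, t_us, polarity) tuple layout are assumptions for illustration.

```python
from typing import List, Tuple

# (x, y, timestamp in microseconds, polarity +1/-1); layout is an assumption
Event = Tuple[int, int, int, int]
Frame = List[List[int]]


def events_to_frames(events: List[Event], width: int, height: int, accumulation_us: int) -> List[Frame]:
    """Sort events into a temporal grid and render one frame per time slot.

    Each pixel of a frame holds the sum of the polarities of the events that
    fell on it during the slot ("exposure time" = accumulation_us).
    """
    if not events:
        return []
    t0 = min(t for _, _, t, _ in events)
    t_end = max(t for _, _, t, _ in events)
    n_frames = (t_end - t0) // accumulation_us + 1
    frames = [[[0] * width for _ in range(height)] for _ in range(n_frames)]
    for x, y, t, polarity in events:
        frames[(t - t0) // accumulation_us][y][x] += polarity
    return frames


sample = [(0, 0, 0, 1), (1, 0, 500, 1), (2, 0, 1000, -1)]
print(len(events_to_frames(sample, 3, 1, accumulation_us=1000)))    # 2 short-exposure frames
print(len(events_to_frames(sample, 3, 1, accumulation_us=10_000)))  # 1 summary frame
```

Played back at a fixed rate such as 30 fps, short accumulation times produce slow motion, an accumulation time of about 33,333 µs corresponds to real speed, and an accumulation time spanning the whole recording collapses everything into one still image showing the complete movement path.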

New Approach to Image Processing

But to use this new sensor information, developers have to rethink their approach completely and adopt new software methods. The event data can, of course, be divided into classic frames and processed like conventional images. However, this is far from optimal, because it gives up the actual strengths of event data: its high temporal precision for fast movements and the efficient processing of sparse data, which also reduces energy consumption. With appropriate functions, tools and algorithm patterns, users can extract and process movements, times and structures from the event data quickly and efficiently. Such tools, however, are not found in today's established general-purpose vision frameworks.

Prophesee, which developed the sensor technology together with Sony, already provides a corresponding processing method: the necessary functions are available in its software development kit, the Metavision SDK, together with detailed documentation and numerous samples.

Applications in the Quality Sector

The capabilities of neuromorphic sensors can also play an important role in quality assurance and improvement, particularly in applications where error detection demands accuracy, speed and efficiency. The added value of detecting even pixel-sized changes in objects and materials in real time is evident, for example, when monitoring machines and processes. Thanks to the high temporal resolution, which extends into the low microsecond range, even high-frequency movements such as vibrations or acoustic signals can be visualized. Analyzing them can reveal unusual patterns (e.g. caused by wear or malfunctions) at an early stage, before they lead to damage or production downtime.
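A simple way to turn such a signal into a frequency estimate is sketched below. It assumes that an edge oscillating in front of one pixel produces a short burst of positive-polarity events once per cycle. The function name, the burst-separation parameter `min_gap_us` and the synthetic 500 Hz input are hypothetical; real vibration analysis would typically combine many pixels and handle sensor noise.

```python
from typing import List


def vibration_frequency_hz(on_timestamps_us: List[int], min_gap_us: int) -> float:
    """Estimate a vibration frequency from ON-event timestamps at one pixel.

    Events closer together than `min_gap_us` are treated as one burst
    (assumption: one burst per oscillation cycle).
    """
    ts = sorted(on_timestamps_us)
    if not ts:
        raise ValueError("no events")
    burst_starts = [ts[0]]
    for previous, current in zip(ts, ts[1:]):
        if current - previous > min_gap_us:
            burst_starts.append(current)  # a new oscillation cycle begins
    if len(burst_starts) < 2:
        raise ValueError("need at least two bursts to measure a period")
    period_us = (burst_starts[-1] - burst_starts[0]) / (len(burst_starts) - 1)
    return 1_000_000 / period_us


# Synthetic input: a burst of three events every 2,000 us, i.e. a 500 Hz vibration.
signal = [k * 2000 + d for k in range(10) for d in (0, 5, 10)]
print(vibration_frequency_hz(signal, min_gap_us=100))
```

A drift of this estimated frequency over time, or the appearance of additional periodicities, would be the kind of unusual pattern that can hint at wear.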

Because each pixel responds only to relative changes in brightness, neuromorphic sensors are much less affected by differences in lighting level, which gives them a clear advantage over conventional image processing systems under strongly varying lighting conditions (e.g. reflections, shadows). When it comes to fast defect detection, process monitoring or inspections in difficult conditions, quality assurance processes stand to benefit from the capabilities of neuromorphic sensors.
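Why the lighting level matters less can be illustrated with the idealized logarithmic pixel model commonly used to describe event sensors: the number of threshold crossings depends only on the ratio of the intensities before and after a change. The hypothetical sketch below contrasts this with a pixel that would react to absolute intensity differences; both functions and their threshold values are illustrative assumptions, not measured sensor behaviour.

```python
import math


def log_events(before: float, after: float, threshold: float = 0.2) -> int:
    # Idealized event pixel: counts crossings of a fixed step in log intensity,
    # so only the ratio after/before matters.
    return math.floor(abs(math.log(after / before)) / threshold)


def linear_events(before: float, after: float, threshold: float = 50.0) -> int:
    # Hypothetical comparison pixel reacting to absolute intensity differences.
    return math.floor(abs(after - before) / threshold)


# The same edge (brightness doubles) in a dim scene and in a ten times brighter one.
print(log_events(100, 200), log_events(1000, 2000))
print(linear_events(100, 200), linear_events(1000, 2000))
```

In this model the logarithmic pixel reports three events in both scenes, whereas the linear pixel's response changes tenfold with the illumination level.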

The New Must-Have Camera Technology?

Event-based sensors do not capture complete images, only pixel changes over time. However, different types of images can be composed dynamically from their event data, providing applications with significantly more motion information than cameras with conventional image sensors. The technologies therefore do not compete with each other. Event-based sensors are not a replacement for traditional image-based cameras or for AI-based image processing, but a complementary technology that opens up new possibilities in motion detection. In many applications, a single sensor type or type of data is not sufficient, and a combination of technologies is needed to optimize the result. Event-based cameras are therefore interesting and profitable components for fast motion analysis, robotics, autonomous systems and industrial quality assurance, and their full potential is far from exhausted.

About the Author

Heiko Seitz

Heiko Seitz, an engineer, has worked for IDS-Imaging Development Systems (Obersulm, Germany) since 2001. He has applied his technical background as an author since 2016. Before then, he was involved in the evolution of machine vision products at IDS from frame grabbers to today's camera technology. Through his in-depth experience in various areas of camera software, he understands the challenges developers face as well as users' requirements.