Effective Lighting Design Strategies for Reliable Machine Vision Applications

What You Will Learn

- Managing complex defect detection requires balancing multiple lighting techniques and focusing on the most frequently occurring defects to simplify system design.

- Ambient light is unpredictable and uncontrollable. To maintain image quality, consider spectral filters, or choose engineered, application-specific lighting bright enough to overpower ambient light sources.

- Specular surfaces pose unique challenges. Diffuse lighting and polarization techniques can help achieve accurate imaging without glare or reflections.

- Early engagement with lighting specialists and proactive design adjustments are crucial for reliable machine vision system performance.

The design of lighting for machine vision applications has challenged system designers since the birth of machine vision around 1980. It continues to challenge them today and will likely do so for years to come.

There are many challenges in lighting design for machine vision. This article covers five of these. Your experience will likely reveal additional challenges.

Optics Expertise

Most machine vision developers are not experts in optical physics. More likely they are experts in software development, mechanical engineering, controls engineering, or some other discipline useful in the design of machine vision systems. When it comes to lighting, it’s all about optical physics: how light interacts with objects and how to provide light that will give the highest contrast.

When I started in machine vision, my expertise was electronics, not optics. Over my years in machine vision, I have been fortunate to have mentors including Hal Schroeder (retired, EG&G Reticon; Salem, MA, USA), Ken Womak (retired, Eastman Kodak; Rochester, NY, USA), Matt Young (retired, National Institute of Standards and Technology; Gaithersburg, MD, USA), Kevin Harding (Optical Metrology Solutions; Niskayuna, NY, USA), Greg Hollows (Edmund Optics; Barrington, NJ, USA), Nitan Sampat (Edmund Optics; Barrington, NJ, USA), Stuart Singer (Schneider Optics; Hauppauge, NY, USA), Nick Sischka (Edmund Optics; Barrington, NJ, USA), and others.

In addition to the insight and advice from these experts, I also needed to investigate and study many aspects of optics that apply to machine vision. I am still learning today.

What every machine vision developer needs to do is to cultivate mentors who will help them gain an understanding of how to apply optical principles to machine vision. Along with advisors, a healthy curiosity and independent study are needed. A starting point could be the online courses on optics for machine vision at https://www.autovis.com/resources/videos/optics.

The good news is that you don’t need to become an optical physicist, you don’t need to run complex equations, and you don’t need to have specialized software (see Figure 1) to succeed with lighting design for almost every application. Understanding optical principles and recognizing how they are at work in the scene you are imaging is almost always sufficient.

Complex Requirements

One challenge to machine vision systems and lighting is dealing with a complex requirement. Often, this is for the inspection of cosmetic defects (see Figure 2). Normally, the requirement is not to detect just one type of defect, but a list of different defects such as scratches, dents, chips, stains, etc. Creating contrast for each of these defects can require different lighting solutions. Each lighting solution requires a separate lighting technique, camera exposure, and image to process.

The normal tendency is to try to find a compromise solution that will provide some contrast for each of the different defects using just one lighting technique. That means the software must deal with low-contrast and often somewhat noisy images. The accuracy of the vision system in detecting defects is compromised. The larger the number of defects to detect, the greater the compromise.

Take, for example, a vision system that must detect scratches and discoloration on a part’s surface. Scratches are mechanical changes to the surface and are often best detected with dark field illumination. Discoloration does not change the surface mechanically and will likely require bright field illumination to detect. A single camera can be equipped with both bright field and dark field light sources. Acquiring two images in rapid succession, strobing each light source for its own image, can solve the application.

However, what happens as the list of defects increases? The number of lighting solutions to cover the list of defects grows, and the vision system solution becomes cumbersome.
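
The growth is easy to put in numbers. The sketch below is a minimal illustration under stated assumptions. The scratch-to-dark-field and discoloration-to-bright-field assignments follow the example above. The extra defect types ("dent", "chip"), their placeholder techniques ("lighting C", "lighting D"), and the 15 ms per-image time are hypothetical. Each distinct lighting technique costs one more strobe, exposure, and processing pass per part.

```python
# Illustrative sketch: each distinct lighting technique adds one image
# (one strobe, one exposure, one processing pass) per part.

# Scratch -> dark field and discoloration -> bright field follow the text.
# "lighting C" and "lighting D" are hypothetical placeholders, not recommendations.
DEFECT_LIGHTING = {
    "scratch": "dark field",
    "discoloration": "bright field",
    "dent": "lighting C",
    "chip": "lighting D",
}

FRAME_TIME_MS = 15.0  # hypothetical strobe + exposure + readout time per image


def acquisition_plan(defects, mapping=DEFECT_LIGHTING, frame_time_ms=FRAME_TIME_MS):
    """Return the distinct lighting techniques needed and total acquisition time per part."""
    techniques = sorted({mapping[d] for d in defects})
    return techniques, len(techniques) * frame_time_ms


if __name__ == "__main__":
    for defects in (["scratch", "discoloration"],
                    ["scratch", "discoloration", "dent", "chip"]):
        techniques, total_ms = acquisition_plan(defects)
        print(f"{len(defects)} defect types -> {len(techniques)} images, "
              f"{total_ms:.0f} ms per part")
```

Defects that share a technique share an image, so the cost scales with the number of distinct techniques, not the number of defect types.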

The path to simplifying the vision system’s design is to limit the number of defects the vision system is expected to detect. Start with a Pareto chart listing each defect, ranked by its frequency of occurrence. Usually, there is a clear break between more frequent and less frequent defects. Focus the vision system on detecting only the frequent defects and set aside the infrequent ones. Manufacturing and quality control departments usually resist this approach because they believe human inspection can find any and all defects.
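
A minimal sketch of the Pareto step follows. It ranks defects by count and keeps the fewest that together cover a chosen share of all occurrences. The defect counts are hypothetical. The 80% cutoff is an assumed rule of thumb that stands in for the "clear break" you would look for in a real chart.

```python
# Minimal Pareto sketch. Defect counts are hypothetical; the 80% cutoff is an
# assumed stand-in for the "clear break" you look for in a real chart.
DEFECT_COUNTS = {
    "scratch": 412,
    "dent": 238,
    "stain": 151,
    "chip": 62,
    "burr": 21,
    "porosity": 9,
    "crack": 4,
}


def pareto(counts, coverage=0.80):
    """Rank defects by frequency and select the fewest that reach the coverage target."""
    ranked = sorted(counts.items(), key=lambda item: item[1], reverse=True)
    total = sum(counts.values())
    rows, selected, cumulative = [], [], 0
    for name, n in ranked:
        # Include this defect only if those already selected fall short of the target.
        if cumulative / total < coverage:
            selected.append(name)
        cumulative += n
        rows.append((name, n, cumulative / total))
    return rows, selected


if __name__ == "__main__":
    rows, selected = pareto(DEFECT_COUNTS)
    for name, n, cum in rows:
        print(f"{name:<10}{n:>5}{cum:>8.1%}")
    print("Inspect for:", ", ".join(selected))
```

With these made-up counts, scratches, dents, and stains account for about 89% of occurrences. The remaining four defect types would be set aside.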

The Software Non-Solution

It happens every day: people expect a clever application of image processing algorithms to compensate for any shortcoming in optics and illumination. With the advent of deep learning for image processing, this expectation has exploded.

Some people suggest that AI/deep learning will lead to solutions that require less attention to lighting. Clearly, AI/deep learning can cope with changes in an image that would be difficult with traditional rule-based programming.

However, what does and does not show up in an image affects the results of AI/deep learning just as it does for rule-based programming. Researchers in deep learning feel satisfied with 90% accuracy and extremely satisfied with 98% accuracy. Industry, however, typically expects Six Sigma performance (3.4 defects per million opportunities). Measured against that expectation, 99.9% is a better minimum target for vision system accuracy.
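
To see why these percentages matter on a production line, convert them into misjudged parts per day. The sketch below does that arithmetic for a hypothetical throughput of 50,000 parts per day. "Misjudged" lumps together missed defects and false rejects.

```python
# Back-of-the-envelope sketch: parts a vision system misjudges per day at a
# given accuracy. PARTS_PER_DAY is a hypothetical throughput.
PARTS_PER_DAY = 50_000

TARGETS = {
    "90% (research: satisfied)": 0.90,
    "98% (research: extremely satisfied)": 0.98,
    "99.9% (suggested minimum)": 0.999,
    "Six Sigma (3.4 per million)": 1 - 3.4e-6,
}


def misjudged_per_day(accuracy, parts_per_day=PARTS_PER_DAY):
    """Expected number of incorrectly classified parts per day."""
    return parts_per_day * (1.0 - accuracy)


if __name__ == "__main__":
    for label, acc in TARGETS.items():
        print(f"{label:<38}{misjudged_per_day(acc):>10.1f} parts/day")
```

At this throughput, 90% accuracy means about 5,000 misjudged parts a day, while 99.9% means about 50.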

What about self-driving cars? They need to be extremely reliable without engineered lighting. The vision application for self-driving cars has several attributes that are not available in most machine vision systems. First is sensor fusion. Self-driving cars do not rely just on vision; they have data from radar and often lidar sensors to combine with vision data.

Second is time series. Vision systems for self-driving cars bring in and analyze image data as a continuous video stream. Something that appears in one image but not the next is still relevant because of the short time interval.

Third is driving policy. Self-driving cars have a driving policy that compensates for errors in the analyzed vision stream to prevent problems. Machine vision systems installed in a manufacturing environment have none of these attributes and must rely on a single image from one camera or a group of cameras.

From the very earliest days of computing, the phrase “garbage in, garbage out” has stood as a beacon to anyone willing to pay attention. Poor image quality is garbage and will always challenge image processing and lead to less accurate results than good image quality.

The need for well-engineered lighting for machine vision applications will not go away.

Ambient Light

Ambient light is whatever light exists in the vicinity of the vision system excluding light that was engineered specifically for the vision system application.

There are three truths about ambient light:

- It virtually always exists.

- It is not under the control of the vision system developer.

- It will change unpredictably.

What are the sources of ambient light? One is the area lighting for human workers (see Figure 3), which often causes glare and glint if not anticipated and mitigated. Also, area lighting lamps and fixtures do fail, and repairing them may take hours or, in some cases, days. The vision system must continue to operate reliably during the outage. Other variables include a flashing light on a forklift or even a person walking by in a white shirt or smock that reflects ambient light onto the scene.

Exterior light, often from the sun, is especially challenging because of its high intensity and its variability depending on time of day, season, and weather. Windows, skylights, and doors that may be opened or closed are sources of exterior light. The only practical way to deal with direct sunlight is to block it from the scene and from the camera.

Always keep in mind that a vision system may work reliably for an extended period of time. Then, if the vision system is relocated, the ambient light environment will be different, and its performance might degrade. Rarely is the vision engineer called before the move to assess what measures are needed to keep the vision system operating reliably. Yet when performance becomes unacceptable, the vision engineer must respond with a rapid fix.

There are established techniques for mitigating the effect of ambient light. The first of these is to block ambient light with an enclosure or shroud. Some vision engineers dislike this approach since it impedes access to the area where the vision system is installed and makes maintenance more difficult.

A second very common approach is to use lighting with a narrow spectral band and a matching filter over the camera. The filter lets through most of the engineered light energy while suppressing a large percentage of ambient light, which is usually broad spectrum. This approach works extremely well except where color imaging is required.
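
The benefit of a matched filter can be estimated with simple spectral arithmetic. The sketch below rests entirely on hypothetical assumptions:

- A red LED with a Gaussian spectrum centered at 630 nm and 20 nm full width at half maximum.
- Ambient light that is flat from 400 nm to 700 nm.
- An ideal filter that transmits everything in its passband and nothing outside it.

Under these assumptions, it compares the share of each light source that survives the filter.

```python
import math

# Minimal sketch. All values are hypothetical: a Gaussian LED spectrum
# (630 nm center, 20 nm FWHM), ambient light flat from 400 nm to 700 nm, and
# an ideal bandpass filter (100% in band, 0% out of band).
LED_CENTER_NM = 630.0
LED_FWHM_NM = 20.0
AMBIENT_BAND_NM = (400.0, 700.0)


def led_fraction(lo_nm, hi_nm, center=LED_CENTER_NM, fwhm=LED_FWHM_NM):
    """Fraction of the Gaussian LED spectrum inside [lo_nm, hi_nm]."""
    sigma = fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))

    def cdf(x):
        return 0.5 * (1.0 + math.erf((x - center) / (sigma * math.sqrt(2.0))))

    return cdf(hi_nm) - cdf(lo_nm)


def ambient_fraction(lo_nm, hi_nm, band=AMBIENT_BAND_NM):
    """Fraction of a flat broadband spectrum inside [lo_nm, hi_nm]."""
    overlap = max(0.0, min(hi_nm, band[1]) - max(lo_nm, band[0]))
    return overlap / (band[1] - band[0])


def ambient_rejection_gain(lo_nm, hi_nm):
    """Factor by which the filter improves the engineered-to-ambient light ratio."""
    return led_fraction(lo_nm, hi_nm) / ambient_fraction(lo_nm, hi_nm)


if __name__ == "__main__":
    for lo, hi in ((620.0, 640.0), (610.0, 650.0)):
        print(f"{lo:.0f}-{hi:.0f} nm: LED kept {led_fraction(lo, hi):.0%}, "
              f"ambient kept {ambient_fraction(lo, hi):.1%}, "
              f"gain x{ambient_rejection_gain(lo, hi):.1f}")
```

In this idealized case, a 20 nm passband keeps about three quarters of the LED light and only about 7% of the ambient light. A wider passband keeps more LED light but rejects less ambient light. Real filter curves and LED spectra should come from the manufacturers' data.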

A third approach is to overpower ambient light with a very bright light source. With LEDs that can be pulsed and overdriven, this approach is very practical. Consider combining two or all three of these techniques in any vision system design.
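
Why pulsing helps comes down to exposure time. Ambient light accumulates for the whole exposure, while the engineered light counts only while the LED pulse overlaps it. The sketch below works this out in arbitrary relative units. The 5x continuous level, the 4x overdrive (20x ambient), and the timings are hypothetical. Safe overdrive levels and duty cycles must come from the light manufacturer's specifications.

```python
# Minimal sketch, in arbitrary relative units; all numbers are hypothetical.
# Assumes the LED pulse is timed to fall inside the exposure window.


def engineered_to_ambient(led_level, pulse_s, exposure_s, ambient_level=1.0):
    """Ratio of engineered-light energy to ambient-light energy collected in one frame."""
    led_energy = led_level * min(pulse_s, exposure_s)  # LED counts only while on during exposure
    ambient_energy = ambient_level * exposure_s        # ambient counts for the whole exposure
    return led_energy / ambient_energy


if __name__ == "__main__":
    cases = {
        "continuous LED, 10 ms exposure": (5.0, 10e-3, 10e-3),
        "overdriven 100 us pulse, 10 ms exposure": (20.0, 100e-6, 10e-3),
        "overdriven 100 us pulse, 100 us exposure": (20.0, 100e-6, 100e-6),
    }
    for label, (level, pulse, exposure) in cases.items():
        print(f"{label:<42} ratio {engineered_to_ambient(level, pulse, exposure):.1f}")
```

The middle case is the trap. Overdriving the LED without shortening the exposure to match the pulse makes the ratio worse, not better.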

Specular Surfaces

There is nothing harder to image than a mirror or any other surface that, like a mirror, reflects specularly (see Figure 4). Specular surfaces present two challenges:

- When the surface should appear bright: lighting it so that it appears uniformly bright.

- When the surface should appear dark: preventing any glint or glare from either ambient or engineered light from reaching the camera lens.

For the specular surface to appear bright in the image, the most common technique is to use a highly diffuse light source such as a dome (see Figure 5) or flat dome light (see Figure 6). Every point on the specular surface is illuminated from a wide range of angles, and only a small percentage of the rays striking any point reflect into the camera lens’ entrance pupil. But because light arrives from nearly every direction, each point receives some rays at the angle that reflects into the pupil, so the whole surface appears evenly bright.
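
The reflection geometry can be checked with a few lines of vector math. This 2-D sketch rests on hypothetical assumptions:

- The camera looks straight down at a mirror-like surface whose normal is tilted by `tilt_deg`.
- An idealized dome emits from 5° elevation up to the edge of a camera port with a 5° half-angle.
- The port itself emits no light.

For each tilt, the sketch finds the direction light must come from to reflect into the lens, then checks whether the dome supplies light from that direction.

```python
import math

# Minimal 2-D sketch. Assumptions (hypothetical): camera looks straight down;
# surface normal tilted by tilt_deg; the dome emits from MIN_ELEV_DEG up to the
# edge of a camera port of half-angle PORT_HALF_DEG, and the port emits nothing.
MIN_ELEV_DEG = 5.0
PORT_HALF_DEG = 5.0


def source_elevation_deg(tilt_deg):
    """Elevation of the direction light must come from to reflect into the lens."""
    t = math.radians(tilt_deg)
    d = (0.0, -1.0)                   # (horizontal, vertical) viewing ray, camera -> surface
    n = (math.sin(t), math.cos(t))    # tilted surface normal
    dot = d[0] * n[0] + d[1] * n[1]
    # Mirror-reflect the viewing ray: r = d - 2 (d . n) n gives the required source direction.
    r = (d[0] - 2.0 * dot * n[0], d[1] - 2.0 * dot * n[1])
    return math.degrees(math.atan2(r[1], abs(r[0])))


def is_lit(tilt_deg):
    """True if the idealized dome emits light from the required direction."""
    elev = source_elevation_deg(tilt_deg)
    return MIN_ELEV_DEG <= elev <= 90.0 - PORT_HALF_DEG


if __name__ == "__main__":
    for tilt in (0.0, 10.0, 20.0, 30.0, 40.0, 44.0):
        print(f"tilt {tilt:4.0f} deg -> source at {source_elevation_deg(tilt):5.1f} deg, "
              f"{'bright' if is_lit(tilt) else 'dark'}")
```

The required source elevation is 90° minus twice the tilt, so a wide range of surface angles all find light somewhere on the dome. In this idealized model, the points that stay dark are those whose reflection lands on the camera port (a tilt near 0°) or below the dome's lower edge.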

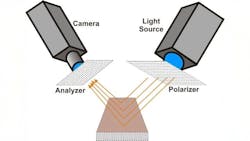

Another approach to imaging specular surfaces and eliminating glare from the engineered lighting is to use a polarizer-analyzer pair (see Figure 7). The polarizer is a linear polarizer placed over the light source; the analyzer is a second linear polarizer placed over the camera’s lens. The scene is illuminated with polarized light. Specular reflection preserves the polarization of the light, while diffuse reflection scatters the light and largely depolarizes it. The analyzer is rotated until its axis is perpendicular to the polarizer’s axis, blocking the specularly reflected light. About half of the depolarized, diffusely reflected light still passes through the analyzer to form an image in the camera.
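
Malus's law makes this concrete. Polarized light passing an analyzer at angle θ to its polarization is scaled by cos²θ. Unpolarized light loses half its intensity through an ideal linear polarizer at any angle. The sketch below assumes ideal polarizers, perfectly preserved polarization in the specular reflection, and fully depolarized diffuse reflection. Intensities are relative to the polarized light striking the part.

```python
import math

# Minimal sketch using Malus's law. Assumes ideal linear polarizers, specular
# reflection that fully preserves polarization, and fully depolarized diffuse
# reflection. Intensities are relative to the polarized light striking the part.


def through_analyzer(angle_deg, specular=1.0, diffuse=1.0):
    """(specular, diffuse) intensity passed by an analyzer rotated angle_deg
    from the polarizer's axis."""
    theta = math.radians(angle_deg)
    spec_out = specular * math.cos(theta) ** 2  # Malus's law for polarized light
    diff_out = diffuse * 0.5                    # unpolarized light: half passes at any angle
    return spec_out, diff_out


if __name__ == "__main__":
    for angle in (0.0, 45.0, 90.0):
        spec, diff = through_analyzer(angle)
        print(f"analyzer at {angle:4.0f} deg: specular {spec:.3f}, diffuse {diff:.3f}")
```

At 90° (crossed polarizers), the specular glare drops to essentially zero while half of the diffuse light remains to form the image.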

Conclusion

The design of lighting for reliable machine vision system performance is critical, and it poses unique challenges. Yet most of these challenges have been faced before and solved successfully. Do not underestimate the lighting design process in your projects. If your project presents challenges that seem difficult, reach out to lighting companies or consultants with experience. They can usually guide you through the challenges to a successful project. Reach out early rather than late: addressing lighting challenges late in a project usually leaves fewer options for the solutions you can implement.

About the Author

Perry West

Founder and President

Perry C. West is founder and president of Automated Vision Systems, Inc., a leading consulting firm in the field of machine vision. His machine vision experience spans more than 30 years and includes system design and development, software development of both general-purpose and application-specific software packages, optical engineering for both lighting and imaging, camera and interface design, education and training, manufacturing management, engineering management, and marketing studies. He earned his BSEE at the University of California at Berkeley and is a past President of the Machine Vision Association of SME. Among his awards are the MVA/SME Chairman’s Award for 1990 and the 2003 Automated Imaging Association’s annual Achievement Award.