The Beneficial Effects of Thermal Cameras on Emergency Braking Systems

This article was originally published in Power & Motion, which is a brand within the Design & Engineering Group at Endeavor Business Media. Vision Systems Design is also part of the Design & Engineering Group.

Today, most newer cars and trucks are equipped with automatic emergency braking (AEB) systems, but the sensor technologies they employ do not work well in all lighting conditions. Thermal cameras are seen as a potential sensor option for AEB that could overcome the constraints of existing sensing technologies because they can detect objects in low- or no-light conditions.

Despite the increased use of advanced driver assistance systems (ADAS) like emergency braking in vehicles, the number of accidents occurring each year remains high, especially those at night involving vulnerable road users (VRU) such as pedestrians, bicyclists and animals.

According to Wade Appelman, chief business officer at Owl Autonomous Imaging (Owl AI), pedestrian deaths have increased dramatically over the past 10 years with 76% occurring at night. Up until now, most safety regulations have focused on drivers. But many governments around the world are beginning to implement regulations aimed at improving pedestrian safety.

In the U.S., the National Highway Traffic Safety Administration (NHTSA) announced a Notice of Proposed Rulemaking in May 2023 which would require passenger cars and light trucks to be equipped with AEB and pedestrian AEB systems. This was followed in June by a similar proposed requirement for heavy vehicles, meaning those with a gross vehicle weight rating (GVWR) greater than 10,000 lbs., such as heavy-duty trucks and buses.

However, early testing has shown the sensors and cameras currently used in the market for AEB systems do not work as well at night, said Appelman in an interview with Power & Motion. Therefore, new technology is needed to better protect VRU and meet mandates like that proposed by NHTSA.

Shortcomings of Current Sensor Technology

AEB systems use various sensors to detect objects and, if the driver does not act to avoid a detected object, automatically apply the brakes, many of which rely on hydraulic or pneumatic technology.

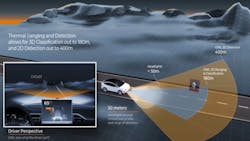

In order for AEB systems to make a braking decision, they have to first detect and classify an object, determining if it is a car, a person, a deer or something else. The system then needs to determine the distance of the object. “Only once you do that can you decide if you want to actuate your brakes,” says Appelman. “In order to make an act decision—automatically brake—you need to know [an object is] there, you need to know what it is, and you need to know how far away it is.”
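
The detect, classify and range sequence Appelman describes can be sketched as a simple gate. This is a minimal illustration, not Owl AI’s or any automaker’s logic: the `Detection` type, the `should_brake` function, the time-to-collision rule and its 2-second threshold are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Detection:
    """Hypothetical sensor output for one object in the vehicle's path."""
    label: Optional[str]      # e.g. "pedestrian"; None if not yet classified
    range_m: Optional[float]  # distance to the object; None if unknown


def should_brake(det: Optional[Detection],
                 closing_speed_mps: float,
                 ttc_threshold_s: float = 2.0) -> bool:
    """Brake only when the object is detected, classified and ranged,
    and the time to collision falls below an assumed threshold."""
    # Step 1: know that something is there.
    if det is None:
        return False
    # Step 2: know what it is. Step 3: know how far away it is.
    if det.label is None or det.range_m is None:
        return False
    # Not closing on the object, so no collision is predicted.
    if closing_speed_mps <= 0:
        return False
    time_to_collision_s = det.range_m / closing_speed_mps
    return time_to_collision_s < ttc_threshold_s


print(should_brake(Detection("pedestrian", 20.0), closing_speed_mps=15.0))
print(should_brake(Detection(None, 20.0), closing_speed_mps=15.0))
```

The first call satisfies all three conditions and is close enough to trigger braking; the second is ranged but unclassified, so the gate holds off.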

Most vehicles today equipped with ADAS use RGB cameras, which see visible light. Appelman says these cameras do a great job of detecting objects, classifying them and in the right configuration can even determine an object’s distance. But like the human eye or cell phone cameras, if there is no illumination—such as from headlights, a flash or sunlight—these cameras cannot see. “[RGB cameras] fail in many of these challenging conditions but are used in a majority of vehicles today,” he says. “That’s why we have an increase in pedestrian fatalities because if it was working perfectly, we wouldn’t hit things.”

Radar sensors are also commonly used for object detection in current safety systems. Appelman says they are good at detecting objects and measuring range but not at classifying them. Their low cost has helped drive their widespread use in the market.

LiDAR sensors are growing in use and do a slightly better job at detection than radar sensors, which is one of the reasons they are seen as a key sensor technology for autonomous vehicles. However, like radar they are unable to classify objects and remain expensive, although prices have started to come down in recent years.

Given some of the shortcomings associated with current sensing technologies, Appelman says other sensor types—specifically thermal cameras—should be considered to help provide better classification of objects and detection in low- or no-light conditions.

The Benefits of Thermal Cameras

As thermal cameras can see in complete darkness and blinding light, they are better able to detect objects in any light condition. “[Thermal cameras] do not need and are not affected by light,” Appelman says. “[They] operate in a totally different electromagnetic spectrum than [other technologies].”

He explains that thermal operates in the infrared band right below microwaves, at the 8,000-14,000 nanometer range, where it can pick up electromagnetic energy. “We can see through fog, rain, snow, dust and smoke by the properties that thermal allows,” says Appelman.
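
As a rough illustration of why that band suits detecting people and animals, Wien’s displacement law gives the wavelength at which an ideal blackbody radiates most strongly. The sketch below is not from Owl AI; the function name and the example temperatures are assumptions chosen for illustration, and real skin, fur and clothing are not ideal blackbodies.

```python
# Wien's displacement law: peak wavelength = b / T for an ideal blackbody.
WIEN_B_NM_K = 2.897771955e6  # Wien's displacement constant in nanometer-kelvins

LWIR_BAND_NM = (8_000, 14_000)  # band quoted in the article


def peak_wavelength_nm(temp_kelvin: float) -> float:
    """Return the blackbody peak emission wavelength in nanometers."""
    if temp_kelvin <= 0:
        raise ValueError("temperature must be positive (kelvin)")
    return WIEN_B_NM_K / temp_kelvin


# Example temperatures are assumptions for illustration only.
for name, temp_k in [("warm body (~310 K)", 310.0),
                     ("cool surface (~280 K)", 280.0)]:
    peak = peak_wavelength_nm(temp_k)
    in_band = LWIR_BAND_NM[0] <= peak <= LWIR_BAND_NM[1]
    print(f"{name}: peak ~{peak:.0f} nm, inside quoted band: {in_band}")
```

Both example temperatures peak between roughly 9,000 and 10,500 nm, inside the 8,000-14,000 nm range quoted above.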

Because they do not require light, thermal cameras can detect objects beyond the illumination beam of a vehicle’s headlights, which reaches about 50 m, he says. Depending on the lens configuration, the camera can determine the range and classification of an object up to almost 180 m away, which Appelman says leaves plenty of time to decide on the appropriate action and stop.
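
A back-of-the-envelope stopping-distance estimate shows why detection range beyond the headlights matters. The sketch assumes a constant deceleration of 7 m/s² (roughly hard braking on dry pavement) and a 0.5-second system latency; both values and the function name are illustrative assumptions, not figures from Owl AI or NHTSA. The 62 mph speed is the upper limit in the NHTSA light-vehicle proposal cited in this article.

```python
MPH_TO_MPS = 0.44704  # exact conversion: 1 mph = 0.44704 m/s


def stopping_distance_m(speed_mph: float,
                        latency_s: float = 0.5,
                        decel_mps2: float = 7.0) -> float:
    """Distance covered during system latency plus constant-deceleration braking."""
    v = speed_mph * MPH_TO_MPS
    latency_distance = v * latency_s            # travelled before brakes engage
    braking_distance = v ** 2 / (2 * decel_mps2)  # from v^2 = 2 * a * d
    return latency_distance + braking_distance


HEADLIGHT_RANGE_M = 50.0  # approximate headlight reach quoted in the article
THERMAL_RANGE_M = 180.0   # approximate thermal range quoted in the article

needed = stopping_distance_m(62.0)
print(f"Stopping distance from 62 mph: about {needed:.0f} m")
print(f"Fits within headlight range: {needed <= HEADLIGHT_RANGE_M}")
print(f"Fits within thermal range: {needed <= THERMAL_RANGE_M}")
```

Under these assumptions the vehicle needs roughly 69 m to stop from 62 mph, more than the approximately 50 m lit by headlights but well inside the almost 180 m range Appelman cites.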

What is different between a thermal camera and a regular camera is the sensor layer, says Appelman. The type utilized by Owl AI is one which resonates when it gets energy from the heat in the environment being detected. “Everything has a relative temperature,” he says. “[Our technology] sees radiated energy at a spectrum that is outside of visible light.”

While this technology has existed for some time, it has typically been within military applications and been very expensive. However, costs are coming down, leading to greater commercial adoption, which in turn helps bring costs down further.

The resolution of the sensor has been a challenge to adoption as well, Appelman says. Owl AI has worked to improve the readout of the sensor technology used in its thermal cameras so they produce a high-definition picture, in the range of 1,200 x 800 pixels, and can do so at mass-production scale, which helps make the technology cost-effective.

Appelman notes the company’s thermal cameras can be used for forward facing vision detection as well as rear- and side-facing, enabling better detection all around a vehicle. They can also be used in tandem with other sensor technologies like radar and RGB cameras to further improve imaging and detection capabilities. For instance, overlaying RGB camera information on that from a thermal camera allows for more color intensity of the detected environment to be shown, making it easier to see things like a stop sign.

Owl AI pairs software with its thermal cameras to enable object segmentation and classification. The camera itself detects the objects, and the software then determines what those objects are. For those applications where it may be more important to focus on people detected in the area as opposed to buildings or other objects, the software can do so.

Pairing Thermal Imaging with Emergency Braking

Sensing technologies like Owl AI’s thermal cameras play a key role in emergency braking systems because they are the devices that detect objects so appropriate action can be taken. The camera is connected to a vehicle’s central processor, which runs algorithms to classify a detected object and determine its range.

Once an object has been identified, a signal is sent through the vehicle’s computer system to actuate the brakes.

How quickly an AEB system can detect, identify and take action will be vital to ensuring the safety of VRU and to meeting proposed regulations from NHTSA and other governmental agencies around the world. As Appelman explains, testing to date has shown that thermal cameras can detect VRU more quickly and accurately, especially at night, and will therefore be beneficial in meeting the proposed mandates.

Currently, the NHTSA proposal for vehicles with a GVWR under 10,000 lbs. (passenger cars and light trucks) calls for AEB and pedestrian AEB systems capable of working day and night at speeds up to 62 mph, while the proposed rule for heavy vehicles would require AEB systems that work at speeds from 6-50 mph.

The mandates are in the comment period but are expected to become finalized regulations by the last half of 2023. When they are passed, vehicle OEMs will have about 3-5 years (depending on the final language) to implement and pass the safety standards, explains Appelman.

Testing procedures for the AEB systems have yet to be fully determined, but the current proposal is specifying ambient illumination of the test site for pedestrian AEBs to be no greater than 0.2 lux, which Appelman said is equivalent to low moonlight conditions. “A car has to be able to operate, stop and detect a pedestrian in that amount of light from very far away,” he says. “It is going to be really hard for a car to see something at night” using technology currently in the market.

As such, use of other technologies like thermal cameras, which can better detect objects at night, will likely be necessary to meet the proposed NHTSA regulation. Owl AI’s own testing has demonstrated that thermal cameras detect and identify pedestrians and other VRU better than RGB cameras at night, so appropriate action can be taken more quickly.

Thermal’s ability to work in adverse weather conditions, such as rain and fog, will benefit AEB as well, helping ensure safe actions can be taken at all times. This capability also aids use in off-highway machinery applications, such as agriculture and underground mining, where heavy dust and debris, as well as low light, can hinder machine operators’ ability to see their surroundings. In these settings, thermal cameras could provide better visibility of the work site and improve the safety of those working around a machine.

The continued progression toward higher levels of autonomy in various vehicles and mobile machines will also benefit from greater availability of thermal imaging technology. Being able to detect objects in various light or weather conditions will help to ensure safe operation of autonomous vehicles, currently a large area of concern—especially for those which operate on public roadways. The ability to use thermal with other sensing types will aid future autonomous developments as well by enabling a wider range of detection, and therefore safety, capabilities.

“I think we will always have multiple sensors on a vehicle for lots of reasons [such as] redundancy,” concludes Appelman. “There are going to be scenarios where different sensors are going to do a better job [than others such as] RGB cameras can see color where thermal only sees grayscale. Radar is highly accurate at ranging.”

The lower cost of RGB and radar will benefit their continued use, but as new technologies like thermal become more widely used their costs will come down as well, helping to provide greater sensing and safety capabilities.

About the Author

Sara Jensen

Technical Editor of Power & Motion

Sara Jensen is technical editor of Power & Motion, directing expanded coverage into the modern fluid power space, as well as mechatronic and smart technologies.

She has over 15 years of publishing experience. Prior to Power & Motion she spent 11 years with a trade publication for engineers of heavy-duty equipment, the last 3 of which were as the editor and brand lead.

Over the course of her time in the B2B industry, Sara has gained an extensive knowledge of various heavy-duty equipment industries (including construction, agriculture, mining and on-road trucks) along with the systems and market trends which impact them.