On-board processors turn solid-state cameras into smart devices

By Andrew Wilson, Editor at Large

Advances in complementary-metal-oxide-semiconductor (CMOS) technology are providing system developers with integrated image-processing cameras based on just a few integrated circuits. These cameras capture, digitize, and process images and provide the host central processing unit (CPU) or programmable logic controller with simple pass or fail decisions. At the same time, some camera suppliers are using existing charge-coupled-device (CCD) cameras and interfacing them with on-board CPUs and digital-signal processors (DSPs), real-time operating systems, and image-processing software.

Often eliminating the need for frame grabbers, host CPUs, and host-based image processing, these "smart" cameras offer a method of adding intelligence to machine-vision systems while reducing the systems-integration effort. Of course, adding intelligence also increases the cost of such cameras. Because the need for a host CPU, image-processing software, and frame grabber can be eliminated, smart cameras should command the attention of systems integrators implementing a machine-vision system.

However, systems integrators expecting to find standardization in current smart cameras will be disappointed. While some cameras integrate on-board processing, others use DSPs, reduced-instruction-set-computing devices, or CPUs to perform on-camera image-processing functions. Moreover, embedded operating systems vary as widely as the RS-232, IEEE 1394 (FireWire), and ProfiBus interfaces now available for some smart cameras. As when choosing an image-processing system, developers should weigh all of these factors, along with the currently available software and camera designs, before deciding on a "smart" machine-vision camera.

Retinal views

With image sensors ranging from linear, area, time-delay-integration (TDI), logarithmic, and foveal devices to those with on-chip processing, it is important to understand which sensor is used in the design of a smart camera; in many cases, the sensor has been developed by the camera vendor. Armed with this information, developers are in a better position to judge the specifications of the camera and to match it to their application. Whereas current camera vendors often rely on off-the-shelf linear, area, and TDI arrays, several novel designs are incorporating foveal sensing mechanisms and on-chip digitizing and processing into their sensors and cameras.

Amherst Systems (Buffalo, NY), for example, has built two types of cameras that are based on biological foveal vision systems (see Vision Systems Design, Dec. 1998, p. 7). In biological systems, the region of the retina that has high acuity--the fovea--is centered at the optical axis and represents a small percentage of the overall field of view (FOV) of the eye. However, when coupled to the retina's lower peripheral acuity, the biological system can acquire more relevant information than sensors with uniform acuity. Comparing images captured using both foveal sensors and uniform-acuity devices demonstrates the advantages of foveal sensors (see Fig. 1).
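The trade-off between acuity and data volume can be illustrated with a small simulation. The sketch below is only a toy model, not Amherst Systems' design: it works on a single scanline, and the function name `foveal_sample` and the rule that a receptive field widens linearly with distance from the fovea are assumptions made for illustration.

```python
def foveal_sample(line, center, growth=0.5):
    """Average a 1-D scanline with receptive fields that widen away from `center`.

    Hypothetical model: field width = 1 + int(growth * distance from center).
    Returns a sorted list of (start, end, mean) tuples, one per receptive field,
    where each field covers line[start:end].
    """
    fields = []

    # Walk right from the fovea; fields grow with distance.
    i = center
    while i < len(line):
        width = 1 + int(growth * (i - center))
        end = min(i + width, len(line))
        fields.append((i, end, sum(line[i:end]) / (end - i)))
        i = end

    # Walk left from the fovea with the same growth rule.
    j = center
    while j > 0:
        width = 1 + int(growth * (center - j))
        start = max(j - width, 0)
        fields.append((start, j, sum(line[start:j]) / (j - start)))
        j = start

    return sorted(fields)


if __name__ == "__main__":
    scanline = list(range(64))           # stand-in for 64 pixel intensities
    fields = foveal_sample(scanline, center=32)
    print(len(scanline), "samples reduced to", len(fields), "receptive fields")
```

Near the chosen center every sample is kept at full resolution, while the periphery is summarized by a few wide fields, so the output is far smaller than the input yet still covers the whole scanline. Moving `center` plays the role of gaze control.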

With wide FOVs and fast frame rates, the cameras being developed at Amherst Systems are incorporating both CCD and active-pixel lattice imagers with variable-size photogates. To properly model the human visual system, researchers are installing both variable acuity and gaze control so that the fovea can be rapidly aligned with relevant features in the image.

To include gaze control in its cameras, Amherst Systems is developing a fixed lattice imager that uses a servo actuator developed in collaboration with Moog (East Aurora, NY) for fast gazing. At the same time, the company has built a prototype camera, based on a reconfigurable active-pixel-array imager, that eliminates any mechanical pointing mechanism. The camera adapts a system topology to match the frame rate and spatial and temporal resolutions as they change during target-tracking applications. While mechanical pointing is still needed for wide-area surveillance applications, electronic gazing overcomes such factors as the settling time of mechanical systems.

Emulating the human-vision process is also the aim of the VE379 camera from Canpolar East (St. John's, Newfoundland, Canada; see Fig. 2). Just as the human eye uses peripheral vision as an attention mechanism to direct its high-resolution foveal segment to areas of interest within the FOV, the VE379 acquires images in a similar manner. In operation, the camera can acquire images with a wide FOV at 640 × 480 resolution and images of interest at up to 40 times this resolution. To do so, the system uses an Intel Pentium CPU coupled to a TMS320C80 DSP from Texas Instruments (Dallas, TX).

To support the camera, Canpolar supplies both system-management software--VE Manager and Perceptools--a callable library of basic image-processing functions, and high-level functions such as pattern recognition and object classification. For industrial-inspection applications, Canpolar also offers custom software that can be used to locate targets and make decisions based on these data.

Using CMOS active-pixel-sensor technology, C-Cam Technologies (Heverlee, Belgium) also provides a retina-shaped sensor and an OEM camera-evaluation package--the Fuga18. Originally developed for a video telephony application, the Fuga18 uses more than 8000 randomly addressable pixels, with 56 circles of pixels in the retina and 20 in the fovea (see Fig. 3).

For device support, C-Cam offers the Fuga18 camera head and an OEM evaluation package that includes the sensor, analog-to-digital converter, and PC interface board. Demonstration software provides developers with control of the sensor and camera, including the speed of acquisition, position, aspect ratio, and automatic brightness control. In addition, C-Cam supplies other sensors and OEM camera systems based on this technology. They include the Fuga19 with a 1920 × 152 rectangular array, the Fuga22 with a 2048 × 2048 area array, and the Fuga24, a 2048 × 1 linear device and camera system.

On-chip processing

While some smart-camera vendors are looking to emulate the human eye, others are putting more-conventional types of image processing on the image sensor or coupling DSPs and CPUs to them. In this approach, image-processing functions can be off-loaded from the host PC, freeing the system for other tasks.

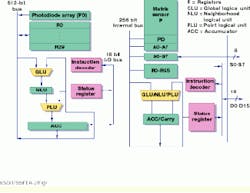

In the design of its linear LAPP1510 and area-array-based MAPP2200 PCI-based vision systems, for example, Integrated Vision Products (Linköping, Sweden) has combined image sensors and a general-purpose image processor on the same device. While the LAPP1510 linear-array picture processor contains a linear array of 512 sensors and processing elements, the MAPP2200 contains an area-array matrix of 256 × 256 sensors and 256 processor units (see Fig. 4). These processing units are designed to perform image sensing, digitization, and data reduction for such applications as wood inspection, paper quality control, and electronics manufacturing.
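With one processor unit per sensor column, a device of this kind can apply the same operation to every pixel of a row at once and pass on only a compact result. The following sketch imitates that idea in software. It does not reproduce the LAPP or MAPP instruction set; the function names and the choice of a threshold followed by edge extraction are assumptions picked to illustrate data reduction.

```python
def process_row_simd(row, threshold):
    """Apply one instruction (a threshold compare) to every column of a row.

    Each list element stands in for one processing element; parallel hardware
    would perform all of these comparisons together rather than in a loop.
    """
    return [1 if pixel > threshold else 0 for pixel in row]


def reduce_frame(frame, threshold):
    """Reduce a frame to one (row, first_col, last_col) triple per occupied row.

    Rows with no pixel above the threshold produce no output at all,
    which is where the data reduction comes from.
    """
    edges = []
    for r, row in enumerate(frame):
        bits = process_row_simd(row, threshold)
        if 1 in bits:
            first = bits.index(1)
            last = len(bits) - 1 - bits[::-1].index(1)
            edges.append((r, first, last))
    return edges


if __name__ == "__main__":
    frame = [
        [0, 0, 0, 0, 0, 0, 0, 0],
        [0, 0, 200, 200, 200, 0, 0, 0],
        [0, 0, 0, 210, 0, 0, 0, 0],
        [0, 0, 0, 0, 0, 0, 0, 0],
    ]
    print(reduce_frame(frame, threshold=128))
```

For the 4 × 8 test frame, 32 pixel values collapse to two short triples describing where the bright object sits, which is the kind of result a host would need for a width or position check.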

Although both intelligent sensors are packaged in aluminum camera housings, they can be interfaced to the PCI bus using a PCI-based adapter board, such as the one supplied by Integrated Vision Products. PC-based application software consists of example programs, written in C, that can be linked together with camera control functions and then compiled.

Rather than choose to develop proprietary image sensors and processors on a single chip, most smart-camera manufacturers are combining off-the-shelf CCD sensors with DSPs to add intelligence to their cameras. Vendors such as Vision Components (Cambridge, MA) and Wintress Engineering (San Diego, CA) are taking advantage of low-cost off-the-shelf components rather than develop costly intelligent sensors.

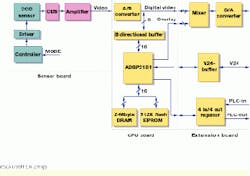

In the design of its VC Series of intelligent cameras, Vision Components used the ADSP 2181 DSP from Analog Devices (Norwood, MA) to off-load histograms, averaging, filtering, look-up-table operations, and dilations from the host CPU (see Fig. 5). At present, the company's VC cameras use the 752 × 582 ICX059AL monochrome and the ICX059AK color sensors from Sony Image Sensing (Park Ridge, NJ) in conjunction with the DSP.
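For readers unfamiliar with the operations named above, the sketch below shows plain reference versions of three of them: a histogram, a look-up-table (LUT) transform, and a binary dilation. It is written in Python for readability and makes no claim about how the camera's DSP code is organized; the function names and the 3 × 3 structuring element are assumptions.

```python
def histogram(image, levels=256):
    """Count how many pixels take each gray level (0 .. levels-1)."""
    counts = [0] * levels
    for row in image:
        for pixel in row:
            counts[pixel] += 1
    return counts


def apply_lut(image, lut):
    """Map every pixel through a look-up table, e.g. for thresholding or gamma."""
    return [[lut[pixel] for pixel in row] for row in image]


def dilate3x3(binary):
    """Binary dilation with a 3 x 3 square structuring element.

    An output pixel is set if any pixel in its 3 x 3 neighborhood
    (clipped at the image border) is set in the input.
    """
    height, width = len(binary), len(binary[0])
    out = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            out[y][x] = int(any(
                binary[j][i]
                for j in range(max(0, y - 1), min(height, y + 2))
                for i in range(max(0, x - 1), min(width, x + 2))
            ))
    return out


if __name__ == "__main__":
    image = [[0, 10, 200], [10, 200, 200]]
    threshold_lut = [0] * 128 + [1] * 128   # gray levels >= 128 become 1
    binary = apply_lut(image, threshold_lut)
    print(histogram(image)[200], binary, dilate3x3(binary))
```

Chaining the three functions, as in the usage lines, gives a typical preprocessing sequence: inspect the gray-level distribution, binarize through a LUT, then grow the foreground to close small gaps.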

Image-processing algorithms can be added to these cameras in two ways. Vision Components supplies the original cross-development system from Analog Devices, which consists of a C compiler, run-time library, debugger, assembler, simulator, and linker, along with sample programs such as the fast Fourier transform (FFT) in ADSP 2181 assembly language. In addition, software support includes a real-time operating system for the camera and an image-processing library that contains operations for image averaging, noise filtering, run-length encoding, and feature extraction. Once developed on a PC host, such programs can be downloaded into the camera through its serial interface.
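Of the library operations listed, run-length encoding is the simplest to show in a few lines. The version below is a generic sketch, not the Vision Components library routine; the function names and the (value, run length) output format are assumptions.

```python
def rle_encode_row(row):
    """Encode one image row as a list of (value, run_length) pairs."""
    runs = []
    for pixel in row:
        if runs and runs[-1][0] == pixel:
            runs[-1][1] += 1          # extend the current run
        else:
            runs.append([pixel, 1])   # start a new run
    return [tuple(run) for run in runs]


def rle_decode_row(runs):
    """Expand (value, run_length) pairs back into the original row."""
    row = []
    for value, length in runs:
        row.extend([value] * length)
    return row


if __name__ == "__main__":
    row = [0, 0, 0, 1, 1, 0]
    encoded = rle_encode_row(row)
    print(encoded)
    assert rle_decode_row(encoded) == row
```

Binary images with large uniform regions compress well under this scheme, which matters when results must leave the camera over a slow serial link.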

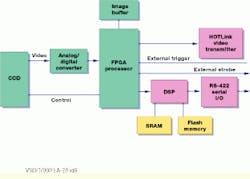

Like Vision Components, Wintress Engineering Corp. (San Diego, CA) uses a DSP in its Opsis 1300 MegaPixel cameras for image preprocessing functions. With an on-board field-programmable gate array (FPGA) and DSP, the camera can share processing performance with a host-based PC over a 330-Mbit/s HOTLink fibre-channel serial video port (see Fig. 6). While the 1300AS is a monochrome camera that couples the 1300 × 1030 ICX085 monochrome CCD sensor from Sony to both the on-board FPGA and DSP, the 1300ASC uses the ICX085AK color interline transfer device to provide a color version, also with 1300 × 1030 resolution.
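The link speed and sensor resolution quoted above set an upper bound on how many uncompressed frames per second could cross the serial port. The calculation below is a back-of-the-envelope sketch: the pixel depth is not given in the article, so 8 and 10 bits per pixel are assumptions, as is the `efficiency` parameter, which models any coding or protocol overhead if the 330-Mbit/s figure is a raw line rate.

```python
def max_frame_rate(width, height, bits_per_pixel, link_mbit_s, efficiency=1.0):
    """Upper bound on uncompressed frames per second over a serial link.

    efficiency: assumed fraction of the link rate available for pixel data
    (1.0 means the quoted rate is treated as pure payload).
    """
    bits_per_frame = width * height * bits_per_pixel
    usable_bits_per_second = link_mbit_s * 1e6 * efficiency
    return usable_bits_per_second / bits_per_frame


if __name__ == "__main__":
    for depth in (8, 10):  # assumed pixel depths, not stated in the article
        rate = max_frame_rate(1300, 1030, depth, 330)
        print(f"{depth} bits/pixel: about {rate:.1f} frames/s at most")
```

At 1300 × 1030 pixels, a frame holds 1,339,000 pixels, so under the 8-bit assumption the bound is roughly 30.8 frames/s, and under the 10-bit assumption roughly 24.6 frames/s. Any preprocessing done by the on-board FPGA and DSP that shrinks the data sent would raise the effective rate.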

By adding intelligence to their camera products, Wintress Engineering and others offer systems integrators an alternative to nonintelligent CCD-based cameras with analog or digital output. And, because these cameras handle acquisition, digitizing, and processing themselves, developers can concentrate more on their applications than on systems-integration issues.

Last year, several major semiconductor vendors entered the market with low-cost CMOS-based active-pixel sensors targeted mainly at video-teleconferencing applications. It is likely that this technology will be incorporated into the next generation of smaller, faster smart cameras with increased on-board image-processing power.

FIGURE 1. Foveal image sensors are based on image sensors with variably sized receptive fields. When capturing typical images (top left), the results of the variably sized receptive fields of the sensors can be seen in the captured image (top right). When compared to an image captured with a uniform sensor (bottom), the amount of relevant information that can be determined is different, although the amount of raw data is the same.

FIGURE 2. Emulating the performance of the human eye, the VE379 saccade camera from Canpolar East uses an on-board CPU and DSP for complex image-interpretation tasks involving variable background, color, and morphology.

FIGURE 3. Initially developed at IMEC, CMOS APS sensor technology has been used to build a retina-shaped sensor for video telephony applications. Now available as an OEM camera-evaluation package from C-Cam Technologies, the sensor achieves a dynamic range of 120 dB through logarithmic light-to-voltage conversion.

FIGURE 4. Adding image-processing functions on-chip is the aim of the linear array-based smart vision sensor (left) and the matrix array sensor (right) from Integrated Vision Products. Both devices are based on a single-instruction multiple-data architecture in which single instructions are performed on a multiple image data stream.

FIGURE 5. On board the VC Series of intelligent cameras from Vision Components, an ADSP 2181 digital signal processor (DSP) is used to offload image-processing functions from the host CPU.

FIGURE 6. In the design of the 1300AS MegaPixel camera, Wintress Engineering coupled a 1300 × 1030 monochrome interline CCD from Sony with an FPGA and DSP for on-camera image processing.
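The 120-dB dynamic range quoted in the Figure 3 caption can be put in more familiar terms with a short conversion. The sketch assumes the usual image-sensor convention that dynamic range in decibels is 20 times the base-10 logarithm of the ratio between the largest and smallest measurable signals; the article does not state which convention the vendor uses.

```python
import math


def db_to_ratio(db):
    """Convert a dynamic range in dB to a linear ratio (20*log10 convention)."""
    return 10 ** (db / 20)


def ratio_to_db(ratio):
    """Convert a linear signal ratio to dB (20*log10 convention)."""
    return 20 * math.log10(ratio)


def decades_covered(db):
    """Number of factor-of-ten steps in light level spanned by the range."""
    return db / 20


if __name__ == "__main__":
    print(f"120 dB is a ratio of about {db_to_ratio(120):,.0f} to 1")
    print(f"spanning {decades_covered(120):.0f} decades of light level")
```

Under that convention, 120 dB corresponds to a ratio of about one million to one, or six decades. A logarithmic response compresses each decade of light into an equal step of output voltage, which is how such a wide range can fit within a practical voltage swing.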