IMAGING SENSORS: Sensor-processor bridge facilitates low-cost camera designs

Designers of embedded imaging systems must develop architectures that cost-effectively interface image sensors with low-cost image processors. However, most image sensors produce image data at very high rates, so designers cannot easily interface them with low-cost microcontrollers (MCUs): the image sensors themselves often provide no buffering, and most MCUs have limited internal memory.

“Since many image-processing algorithms require random access to pixel data,” says Tareq Hasan Khan of the Multimedia Processing and Prototyping Laboratory at the University of Saskatchewan (Saskatoon, SK, Canada), “this lack of memory presents a problem for developers wishing to interface high-speed image sensors with low-cost microcontrollers such as Intel’s [Santa Clara, CA, USA] 8051.”

While high-speed image sensors can be interfaced to more advanced MCUs such as the AT91CAP7E from Atmel (San Jose, CA, USA), the additional features of those MCUs, such as peripheral DMA controllers and USB and FPGA interfaces, may not be required for simple, low-cost imaging applications.

Tareq Hasan Khan and Khan Wahid have developed a digital-video-port (DVP) compatible device designed to bridge any DVP-compatible general-purpose image sensor with low-cost MCUs. The device, known as iBRIDGE, allows commercially available DVP-based CMOS image sensors to be interfaced to MCUs such as the 8051 and the PIC from Microchip Technology (Chandler, AZ, USA). Compatible sensors include the TCM8230MD from Toshiba (Tokyo, Japan), the OVM7690 from OmniVision (Santa Clara, CA, USA), the MT9V011 from Aptina (San Jose, CA, USA), the LM9618 from National Semiconductor (Santa Clara, CA, USA), the KAC-9630 from Kodak (Rochester, NY, USA), and the PO6030K from Pixelplus (Gyeonggi-do, Korea).

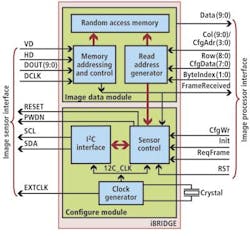

To allow these DVP-based image sensors to interface with slower, low-power MCUs, the iBRIDGE design features an image data module and a system configuration module (see figure). Because most standard-definition (SD) and high-definition CMOS image sensors transfer image data over the DVP interface, the iBRIDGE implements this interface. It uses the imager's vertical sync (VD) and horizontal sync (HD) pins to indicate the end of a frame and the end of a row, respectively, while pixel data is transferred over DOUT.
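To make the sync signalling concrete, here is a minimal behavioral sketch in Python (not the authors' VHDL) of how a receiver could assemble a frame from DVP-style samples. It assumes a hypothetical convention in which each pixel-clock edge yields a `(vd, hd, dout)` tuple, DOUT is valid only while both sync lines are low, a rising edge on HD ends the current row, and a rising edge on VD ends the frame. Real sensors differ in sync polarity, blanking, and bytes per pixel.

```python
from typing import Iterable, List, Tuple

# One sample per pixel-clock edge: (vd, hd, dout). Hypothetical convention.
Sample = Tuple[int, int, int]


def capture_frame(samples: Iterable[Sample]) -> List[List[int]]:
    """Assemble one frame of bytes from DVP-style samples.

    Assumed (illustrative) convention: DOUT carries a valid byte only while
    VD and HD are both low; a rising edge on HD closes the current row and a
    rising edge on VD closes the frame.
    """
    frame: List[List[int]] = []
    row: List[int] = []
    prev_vd, prev_hd = 0, 0
    for vd, hd, dout in samples:
        if hd and not prev_hd and row:   # HD rising edge: row complete
            frame.append(row)
            row = []
        if vd and not prev_vd:           # VD rising edge: frame complete
            if row:                      # flush a row that HD did not close
                frame.append(row)
            return frame
        if not vd and not hd:            # active video: latch the byte
            row.append(dout & 0xFF)
        prev_vd, prev_hd = vd, hd
    if row:                              # stream ended without a VD edge
        frame.append(row)
    return frame
```

For example, three data bytes, an HD pulse, three more bytes, another HD pulse, and then a VD edge yield a two-row frame, `[[1, 2, 3], [4, 5, 6]]`.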

Initialization and configuration of the image sensor are performed over I2C. This two-wire bus, consisting of a serial data line (SDA) and a serial clock line (SCL), is used to set the imager's frame size, color, and sleep and wakeup modes.
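As an illustration of the two-wire protocol, the following sketch shows the bytes and the SCL/SDA levels an I2C master would produce to write one sensor register. It assumes a device with 8-bit register addresses; the slave address `0x3C` and the register/value pair used in the test are hypothetical placeholders, not values taken from any sensor datasheet. A real master also samples the slave's ACK bit, which this waveform model simply leaves released (high).

```python
from typing import List, Tuple


def i2c_write_bytes(addr7: int, reg: int, value: int) -> List[int]:
    """Bytes a master clocks out to write one 8-bit register.

    Assumes 8-bit register addressing; the first byte is the 7-bit slave
    address shifted left with the R/W bit = 0 (write).
    """
    if not 0 <= addr7 < 0x80:
        raise ValueError("7-bit address expected")
    return [(addr7 << 1) | 0, reg & 0xFF, value & 0xFF]


def bitbang(data: List[int]) -> List[Tuple[int, int]]:
    """(SCL, SDA) line levels for START, the bytes MSB-first, and STOP.

    SDA only changes while SCL is low, except for START and STOP. The master
    releases SDA (high) during each ninth (ACK) clock.
    """
    wave = [(1, 1), (1, 0), (0, 0)]                 # START: SDA falls while SCL high
    for byte in data:
        for bit in range(7, -1, -1):
            sda = (byte >> bit) & 1
            wave += [(0, sda), (1, sda), (0, sda)]  # data stable while SCL high
        wave += [(0, 1), (1, 1), (0, 1)]            # ACK slot: SDA released
    wave += [(0, 0), (1, 0), (1, 1)]                # STOP: SDA rises while SCL high
    return wave
```

Writing a hypothetical value `0x40` to register `0x02` of a device at `0x3C` sends the bytes `0x78, 0x02, 0x40`, each followed by an ACK clock.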

To configure the sensor and access image data, the MCU provides both configuration signals and frame access signals. The configuration signals are asynchronous, while the frame access signals are paced by the MCU and are not synchronized with the iBRIDGE's internal clock. A single-port random access memory (RAM) module stores one frame of data from the DVP-compatible imager.
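The following behavioral model sketches what random access to a buffered frame looks like from the MCU's side. The class name, its methods, and the `frame_ready` flag are hypothetical, not the iBRIDGE's actual register interface. Because the RAM is single-ported, the model lets either the sensor side write a frame or the MCU side read it, never both at once. Pixels are assumed to be 2 bytes each and stored in raster order.

```python
class FrameBuffer:
    """Behavioral model of a single-port frame store (hypothetical API)."""

    def __init__(self, width: int, height: int, bytes_per_pixel: int = 2):
        self.width, self.height, self.bpp = width, height, bytes_per_pixel
        self.mem = bytearray(width * height * bytes_per_pixel)
        self.frame_ready = False          # True once a full frame is stored

    def store_frame(self, data: bytes) -> None:
        """Sensor side: write a whole frame in raster order."""
        if len(data) != len(self.mem):
            raise ValueError("frame size mismatch")
        self.frame_ready = False          # port busy with sensor writes
        self.mem[:] = data
        self.frame_ready = True           # hand the port over to the MCU

    def read_pixel(self, row: int, col: int) -> bytes:
        """MCU side: random access to any pixel, at whatever pace the MCU runs."""
        if not self.frame_ready:
            raise RuntimeError("no complete frame buffered yet")
        if not (0 <= row < self.height and 0 <= col < self.width):
            raise IndexError("pixel outside frame")
        addr = (row * self.width + col) * self.bpp   # raster-order byte address
        return bytes(self.mem[addr:addr + self.bpp])
```

Because the address is computed from `(row, col)`, an image-processing routine on the MCU can visit pixels in any order, which is the random access that the sensor's raw output stream cannot offer.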

For evaluation, Khan modeled the iBRIDGE in VHDL and synthesized the design onto the Cyclone II 2C35 FPGA of the DE2 Development and Education board from Altera (San Jose, CA, USA). This FPGA was then interfaced to a Toshiba TCM8230MD DVP-based CMOS imager and an ATmega644 MCU from Atmel. The MCU was connected to a PC over a serial (COM) port, and the captured images were displayed on the PC using software written in Visual Basic.

With the bridge placed between high-performance image sensors running as fast as 254 MHz and low-speed MCUs running at 1 MHz, VGA images can be captured at up to 333 frames/s, and the MCU then reads the buffered frame at its own pace. Thus, by employing the iBRIDGE and a low-cost MCU, any DVP-compatible commercial image sensor can be converted into a high-speed, randomly accessible image sensor.
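Some back-of-envelope arithmetic shows why a frame buffer is needed between the two clock domains. This sketch assumes 2 bytes per pixel (as in common YUV 4:2:2 or RGB565 output), one byte per sensor clock, and a best-case MCU that reads one byte per clock cycle. It ignores blanking intervals and is not how the 333-frames/s figure was derived.

```python
def vga_rates(fps: float = 333, sensor_clk_hz: float = 254e6,
              mcu_clk_hz: float = 1e6, bytes_per_pixel: int = 2) -> dict:
    """Back-of-envelope data rates for VGA capture (illustrative assumptions)."""
    frame_bytes = 640 * 480 * bytes_per_pixel   # one VGA frame
    sensor_rate = frame_bytes * fps             # bytes/s leaving the sensor
    return {
        "frame_bytes": frame_bytes,
        "sensor_bytes_per_s": sensor_rate,
        # > 1 means this rate fits within one byte per sensor clock (no blanking)
        "clock_headroom": sensor_clk_hz / sensor_rate,
        # best case: the MCU reads one byte per clock cycle
        "mcu_readout_s": frame_bytes / mcu_clk_hz,
    }
```

Under these assumptions a VGA frame is 614,400 bytes, and 333 frames/s corresponds to about 205 Mbyte/s, which fits within a 254-MHz byte clock. A 1-MHz MCU, by contrast, would need at least about 0.61 s just to read one frame. That gap is why the frame must be buffered rather than streamed directly into the MCU.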

Bridging the gap between high-speed image sensors and slower microcontrollers, the iBRIDGE allows embedded system designers to use high-performance CMOS imagers in low-cost embedded designs.

With support from the National Science Foundation (NSF; Arlington, VA, USA) and the Gigascale Systems Research Center (GSRC; Princeton, NJ, USA), Silvio Savarese of the University of Michigan (Ann Arbor, MI, USA) and his colleagues have developed a multicore processor specifically designed to increase the speed of feature-extraction algorithms.

Announced at the June 2011 Design Automation Conference (DAC) held in San Diego, CA, the EFFEX processor uses a programmable architecture, making it applicable to a range of feature-extraction algorithms such as the Features from Accelerated Segment Test (FAST), the Histogram of Oriented Gradients (HOG), and the Scale Invariant Feature Transform (SIFT).

“These algorithms represent a tradeoff of quality and performance, ranging from the high-speed, low-quality FAST algorithm to the very high-quality and computationally expensive SIFT algorithm. In addition, these algorithms are representative of the type of operations typically found in feature-extraction algorithms,” says Savarese.

Although these algorithms are complex, many of the operations within them are repetitive, so they can be accelerated by combining application-specific functional units with parallel processing.

For example, one of the major computational tasks in the FAST algorithm is corner location, performed by comparing a single pixel to a number of surrounding pixels, a task that lends itself to implementation in a hardware compare unit. SIFT finds image features by applying a difference-of-Gaussian function in scale space to a series of smoothed and resampled images. This requires multiple convolutions, whose speed can be increased using a hardware multiply-accumulate (MAC) unit. Similarly, the most computationally intensive function within the HOG algorithm is building image feature descriptors from a histogram of the gradients of pixel intensities within a region of the image, a function that can also be accelerated in hardware.
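The FAST corner test is essentially a batch of comparisons between one pixel and its neighbors. Below is a minimal software sketch of the standard segment test: the center pixel is compared with the 16 pixels on a radius-3 circle, and it is declared a corner if at least `n` contiguous circle pixels are all brighter than `p + t` or all darker than `p - t`. The threshold `t = 20` and `n = 9` are illustrative choices, not EFFEX parameters, and the sketch omits FAST's high-speed pre-test and non-maximum suppression.

```python
from typing import Sequence

# Bresenham circle of radius 3 (16 pixels) as (dx, dy), in order around the ring.
CIRCLE = [(0, -3), (1, -3), (2, -2), (3, -1), (3, 0), (3, 1), (2, 2), (1, 3),
          (0, 3), (-1, 3), (-2, 2), (-3, 1), (-3, 0), (-3, -1), (-2, -2), (-1, -3)]


def is_fast_corner(img: Sequence[Sequence[int]], x: int, y: int,
                   t: int = 20, n: int = 9) -> bool:
    """FAST segment test at (x, y); (x, y) must be at least 3 pixels from the border."""
    p = img[y][x]
    # One-to-many comparison: the center pixel against all 16 ring pixels.
    states = []
    for dx, dy in CIRCLE:
        q = img[y + dy][x + dx]
        states.append(1 if q > p + t else (-1 if q < p - t else 0))
    # Look for n contiguous brighter (+1) or darker (-1) pixels, with wrap-around.
    ring = states + states
    for target in (1, -1):
        run = 0
        for s in ring:
            run = run + 1 if s == target else 0
            if run >= n:
                return True
    return False
```

Each of the 16 comparisons is independent of the others, which is why this kind of test maps naturally onto a parallel compare unit rather than onto arithmetic hardware.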

To exploit the parallelism inherent in these algorithms, the EFFEX processor consists of one complex core surrounded by a variable number of simple cores in a mesh network (see figure). While the complex core is used to perform high-level system tasks, the simple cores are dedicated to the repetitive tasks associated with pattern-recognition algorithms.
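The division of labor between the complex core and the simple cores can be illustrated with a simple static schedule. In this hypothetical sketch (the source does not describe EFFEX's actual scheduler), the complex core splits an image into horizontal strips, one per simple core. Neighboring strips overlap by a halo of rows so that 3x3 neighborhood operations remain valid at strip boundaries.

```python
from typing import List, Tuple


def split_rows(height: int, cores: int = 8, halo: int = 1) -> List[Tuple[int, int]]:
    """Row ranges [start, stop) for each simple core, padded by `halo` rows.

    Rows are divided as evenly as possible; the first `height % cores` strips
    get one extra row. Each range is extended by `halo` rows on both sides,
    clipped to the image.
    """
    base, extra = divmod(height, cores)
    ranges, start = [], 0
    for c in range(cores):
        stop = start + base + (1 if c < extra else 0)
        ranges.append((max(0, start - halo), min(height, stop + halo)))
        start = stop
    return ranges
```

For a 480-row image and eight simple cores, the first core would receive rows 0 to 60 and the second rows 59 to 120, with the single overlapping row on each side supplying the neighbors a 3x3 kernel needs.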

Three application-specific functional units are incorporated into the processor to speed the feature-extraction tasks. These include a one-to-many compare unit, a MAC used for convolution, and a gradient unit.

“Since searching for feature point locations in algorithms such as FAST and SIFT involves comparing a single pixel location and its neighbors across an entire image, this processing can be performed by the one-to-many processing unit in hardware and in parallel using an instruction extension of the EFFEX processor,” says Savarese. This simple one-to-many compare unit can also be used for histogram binning by comparing a value to the limits of the histogram bins.
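Histogram binning by comparison works because a value's bin number equals the number of bin limits it meets or exceeds. The sketch below shows this in software, assuming ascending inner bin limits and hard assignment to a single bin. Many HOG implementations instead interpolate votes between neighboring bins. The nine 20-degree orientation bins in the example are an illustrative HOG-style choice, not a detail from the source.

```python
from typing import List, Sequence


def bin_index(value: float, limits: Sequence[float]) -> int:
    """Bin number for `value`, given ascending inner bin limits.

    Every comparison is independent of the others, so hardware can evaluate
    them all at once and add up the results; here they run sequentially.
    """
    return sum(1 for lim in limits if value >= lim)


def histogram(values: Sequence[float], limits: Sequence[float]) -> List[int]:
    """Hard-assignment histogram with len(limits) + 1 bins."""
    counts = [0] * (len(limits) + 1)
    for v in values:
        counts[bin_index(v, limits)] += 1
    return counts


# Example: nine unsigned-orientation bins of 20 degrees each (0-180), HOG style.
ORIENTATION_LIMITS = list(range(20, 180, 20))   # [20, 40, ..., 160]
```

With these limits, an orientation of 45 degrees meets the 20 and 40 limits and so falls in bin 2.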

Convolution functions used in SIFT and HOG are similarly performed in parallel using the convolution multiply-accumulate (CMAC) unit. To speed the gradient computations associated with SIFT and HOG, the processor employs a single-instruction, multiple-data (SIMD) gradient unit that computes the gradient of an image patch using the Prewitt operator, a variant of the Sobel convolution kernel.
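Both the convolution and gradient steps reduce to 3x3 multiply-accumulate loops. The sketch below shows a plain software version: `mac3x3` accumulates kernel-weighted pixels (correlation form, without flipping the kernel), and `prewitt_gradient` applies the two Prewitt kernels to obtain gradient magnitude and unsigned orientation. The Prewitt kernels are the Sobel kernels with the center weight of 2 replaced by 1. This is a generic illustration, not the EFFEX instruction set.

```python
import math
from typing import Sequence, Tuple

PREWITT_X = ((-1, 0, 1), (-1, 0, 1), (-1, 0, 1))
PREWITT_Y = ((-1, -1, -1), (0, 0, 0), (1, 1, 1))


def mac3x3(img: Sequence[Sequence[int]], x: int, y: int,
           kernel: Sequence[Sequence[int]]) -> int:
    """3x3 multiply-accumulate centered at (x, y) (correlation, no kernel flip)."""
    acc = 0
    for ky in range(3):
        for kx in range(3):
            acc += kernel[ky][kx] * img[y + ky - 1][x + kx - 1]
    return acc


def prewitt_gradient(img: Sequence[Sequence[int]], x: int, y: int) -> Tuple[float, float]:
    """Gradient magnitude and orientation (degrees, 0-180) at an interior pixel."""
    gx = mac3x3(img, x, y, PREWITT_X)
    gy = mac3x3(img, x, y, PREWITT_Y)
    mag = math.hypot(gx, gy)
    ang = math.degrees(math.atan2(gy, gx)) % 180.0   # unsigned orientation, as in HOG
    return mag, ang
```

On a left-to-right intensity ramp of 0, 5, 10, the center pixel has a gradient magnitude of 30 and an orientation of 0 degrees. Because orientation is folded into 0 to 180 degrees, the sign flip that a true (flipped-kernel) convolution would introduce does not change the result.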

To benchmark the processor, Savarese and his colleagues simulated the architecture with a single complex core, modeled on a 1-GHz ARM Cortex-A5 with floating point, and eight specialized simple cores.

For the SIFT, HOG, and FAST algorithms, the resulting speedups over a single ARM core running at the same clock rate were 4x, 12x, and 4x, respectively. Savarese is now looking to expand the processor's functionality to include the machine-learning-based algorithms used to analyze extracted features.
