Sensors may offer robotic control

Over the past 30 years, the transition from photographic to electronic imaging has been driven by electronic circuits that capture, process, store, and transmit information. While devices such as CCDs are often used for image capture, they are relatively simple structures that collect photons across an array of pixels and translate them into electrical signals. To build the next generation of image sensors, vendors are taking advantage of CMOS processes to add image-processing circuits to their designs.

As commercial CCD and CMOS vendors add more complexity to their sensors, many researchers are looking to develop devices that model aspects of the biological function and structure of the eye. Using continuous-time circuits instead of frame-based processing, small, low-power neuromorphic sensors can be developed that allow biological models of image-based motor-control systems to be evaluated.

Much of the work currently underway in neuromorphic sensors is being performed at the Institute for Neuroinformatics (Zurich, Switzerland). There, researchers are incorporating the principles of biological sensory systems into analog VLSI devices to better understand visually guided motor control. To do so, Shih-Chii Liu and Alessandro Usseglio-Viretta have developed an analog VLSI motion sensor that models the optomotor response of a fruit fly.

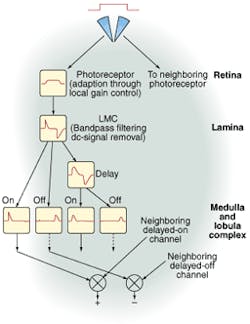

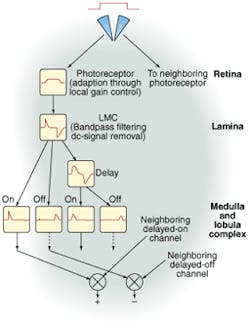

To model the anatomical layout of the fly's visual system, Liu and Usseglio-Viretta developed an elementary motion detector (EMD) that performs three levels of processing. At the first layer, each photoreceptor transforms the incoming photons into an electrical signal. Here, gain control is used to adapt the signal to any background intensity and to produce large gains when transient changes in intensity occur.
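The behavior of this first layer can be illustrated in software. The following is a minimal behavioral sketch only, not the circuit used on the chip: it assumes a logarithmic response with a slowly adapting state, and the function name `photoreceptor` and the parameters `adapt_rate`, `steady_gain`, and `transient_gain` are hypothetical.

```python
import math

def photoreceptor(intensities, adapt_rate=0.02, steady_gain=1.0, transient_gain=10.0):
    """Behavioral model (assumed, not the chip circuit) of an adaptive photoreceptor.

    A slow state tracks the log of the background intensity. The output applies
    a low gain to that state and a high gain to deviations from it.
    """
    state = math.log(intensities[0])  # assume the sensor starts fully adapted
    out = []
    for intensity in intensities:
        log_i = math.log(intensity)
        out.append(steady_gain * state + transient_gain * (log_i - state))
        state += adapt_rate * (log_i - state)  # slow adaptation to the background
    return out

# The same doubling of intensity on a dim and on a bright background.
dim = photoreceptor([1.0] * 50 + [2.0] * 500)
bright = photoreceptor([100.0] * 50 + [200.0] * 500)
dim_jump = dim[50] - dim[49]
bright_jump = bright[50] - bright[49]
dim_settled = dim[-1] - dim[49]
```

In this sketch the jump at the step is the same for both backgrounds because the model responds to the intensity ratio, and the settled change is much smaller than the initial jump.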

The second layer of the EMD is designed to model the response of the laminar monopolar cells (LMCs) in the next layer of the fly's visual system. These LMCs are responsible for local contrast enhancement, both reducing redundant parts of the signal and amplifying what remains. In the EMD design, this is accomplished by bandpass filtering and removal of the dc component of the signal.
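A discrete-time analogy may help show what bandpass filtering with dc removal does to a signal. The sketch below assumes the bandpass can be approximated as the difference of a fast and a slow first-order low-pass filter; the chip itself uses continuous-time analog circuits, and the names `low_pass`, `band_pass`, `alpha_fast`, and `alpha_slow` are hypothetical.

```python
def low_pass(signal, alpha):
    """First-order low-pass filter: y[n] = y[n-1] + alpha * (x[n] - y[n-1])."""
    out = []
    y = signal[0]  # start at the first sample to avoid a start-up transient
    for x in signal:
        y += alpha * (x - y)
        out.append(y)
    return out

def band_pass(signal, alpha_fast=0.5, alpha_slow=0.05):
    """Difference of a fast and a slow low-pass filter (assumed approximation).

    The slow filter tracks the dc level, so subtracting it removes the
    constant part of the signal and keeps only the changes.
    """
    fast = low_pass(signal, alpha_fast)
    slow = low_pass(signal, alpha_slow)
    return [f - s for f, s in zip(fast, slow)]

constant = band_pass([3.0] * 50)               # steady illumination
step = band_pass([1.0] * 20 + [2.0] * 200)     # sudden change in intensity
```

With a constant input the output stays at zero, while a step produces a transient that decays as the slow filter catches up.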

For motion detection, the third layer splits the LMC signal into two pathways; one path is delayed through a low-pass filter. The resulting four transient currents are then correlated (or multiplied) with neighboring EMD pixel currents. The final "+" output is then sensitive to a stimulus movement from left to right while the "-" output is sensitive to a stimulus moving in the opposite direction.
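The delay-and-correlate principle can be sketched with two neighboring pixel signals. This is a simplified textbook-style correlator under stated assumptions, not a model of the four-current circuit described above: the delay is a discrete first-order low-pass filter, and the names `emd_outputs`, `pulse`, and `alpha` are hypothetical.

```python
def low_pass(signal, alpha):
    """First-order low-pass filter used here as the delay element."""
    out = []
    y = 0.0
    for x in signal:
        y += alpha * (x - y)
        out.append(y)
    return out

def emd_outputs(left, right, alpha=0.3):
    """Return (plus, minus) correlation sums for two neighboring pixels.

    plus:  delayed left signal multiplied by the undelayed right signal,
           which is large when the stimulus moves from left to right.
    minus: undelayed left signal multiplied by the delayed right signal,
           which is large when the stimulus moves from right to left.
    """
    left_delayed = low_pass(left, alpha)
    right_delayed = low_pass(right, alpha)
    plus = sum(d * r for d, r in zip(left_delayed, right))
    minus = sum(l * d for l, d in zip(left, right_delayed))
    return plus, minus

def pulse(length, start, width=3):
    """Rectangular pulse standing in for a transient LMC signal."""
    return [1.0 if start <= t < start + width else 0.0 for t in range(length)]

# The stimulus reaches the left pixel first, then the right pixel.
rightward = emd_outputs(pulse(40, 10), pulse(40, 14))
# The stimulus reaches the right pixel first, then the left pixel.
leftward = emd_outputs(pulse(40, 14), pulse(40, 10))
```

In this sketch the "+" sum dominates for the rightward stimulus and the "-" sum dominates for the leftward one, because only the pathway whose delay matches the direction of travel brings the two pulses into overlap.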

To test the sensor in a motion-control environment, two of these sensors were mounted on a Koala multipurpose robotic platform from K-Team S.A. (Préverenges, Switzerland). Using an on-board control system, the robot's course stabilization is activated whenever the retinal image moves in the same direction in both sensors. Modeling the fixation behavior of the fly, in which the fly orients itself toward a single object, was accomplished by triggering the robot when a motion signal was measured in only one of the sensors.
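The selection between the two behaviors can be written as a short decision rule. The sketch below is illustrative only and does not reproduce the robot's actual control software: the function `select_behavior`, the signed motion inputs, the `threshold` value, and the choice to do nothing when the two sensors report opposite directions are all assumptions.

```python
def select_behavior(left_motion, right_motion, threshold=0.1):
    """Pick a behavior from two signed motion signals (hypothetical interface).

    Positive and negative values stand for the two directions of image motion;
    magnitudes at or below the threshold are treated as no motion.
    """
    left_active = abs(left_motion) > threshold
    right_active = abs(right_motion) > threshold

    if left_active and right_active:
        # Same direction in both sensors: the whole image is drifting.
        if (left_motion > 0) == (right_motion > 0):
            return "stabilize"
        # Opposite directions are not covered by the description; assumed no-op.
        return "none"
    if left_active or right_active:
        # Motion in only one sensor: treat it as a single object to fixate.
        return "fixate"
    return "none"

both_same = select_behavior(0.8, 0.6)
one_only = select_behavior(0.0, -0.7)
neither = select_behavior(0.02, -0.01)
```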